Intel launches $299 Arc Pro B50 with 16GB of memory, 'Project Battlematrix' workstations with 24GB Arc Pro B60 GPUs

Arc Pro arrives for inference workstations.

Intel has announced its Arc Pro B-series of graphics cards at Computex 2025 in Taipei, Taiwan, with a heavy focus on AI workstation inference performance boosted by segment-leading amounts of VRAM. The Intel Arc Pro B50, a compact card that's designed for graphics workstations, has 16GB of VRAM and will retail for $299, while the larger Intel Arc Pro B60 for AI inference workstations slots in with a copious 24GB of VRAM. While the B60 is designed for powerful 'Project Battlematrix' AI workstations sold as full systems ranging from $5,000 to $10,000, it will carry a roughly $500 per-unit price tag.

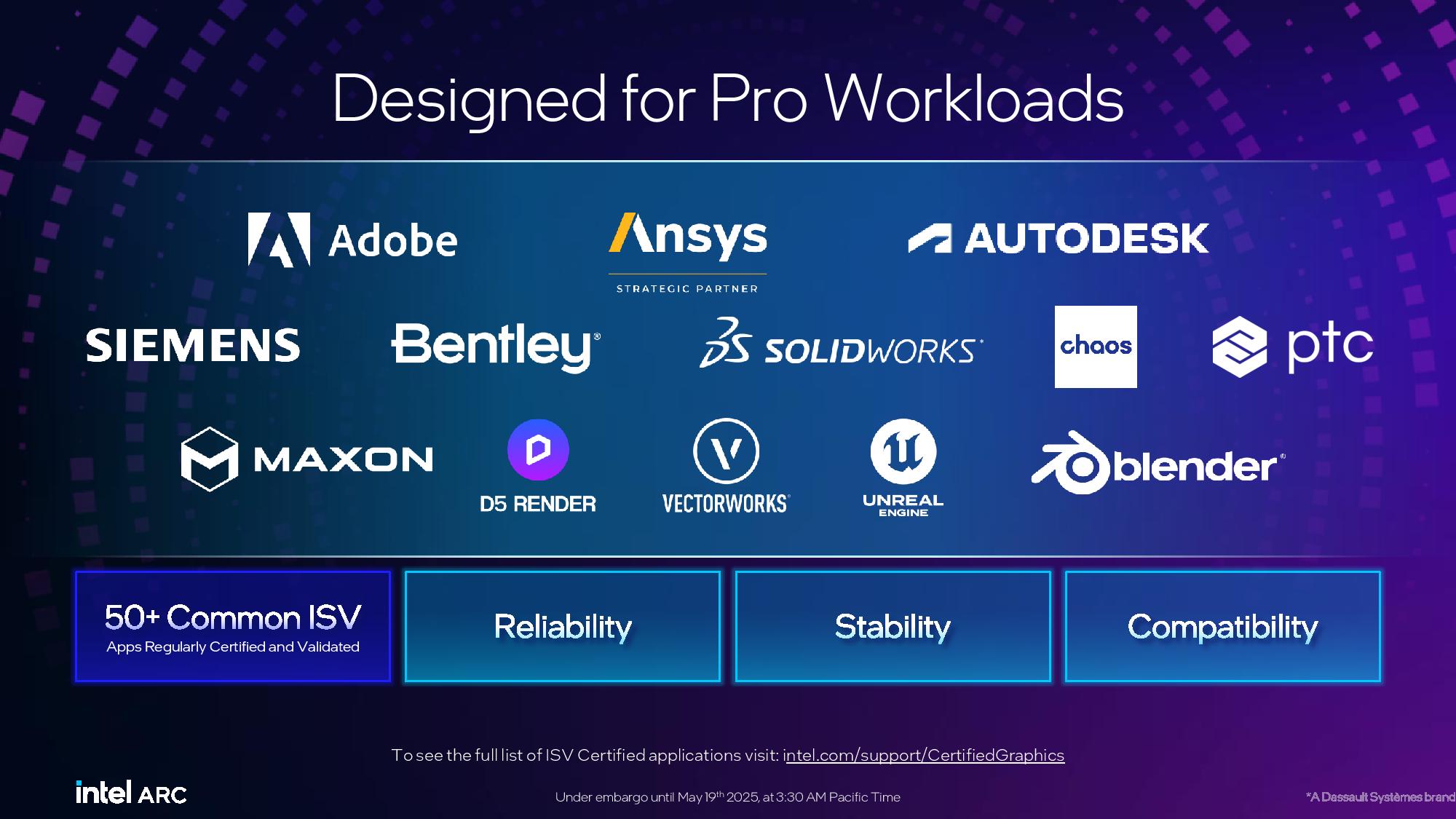

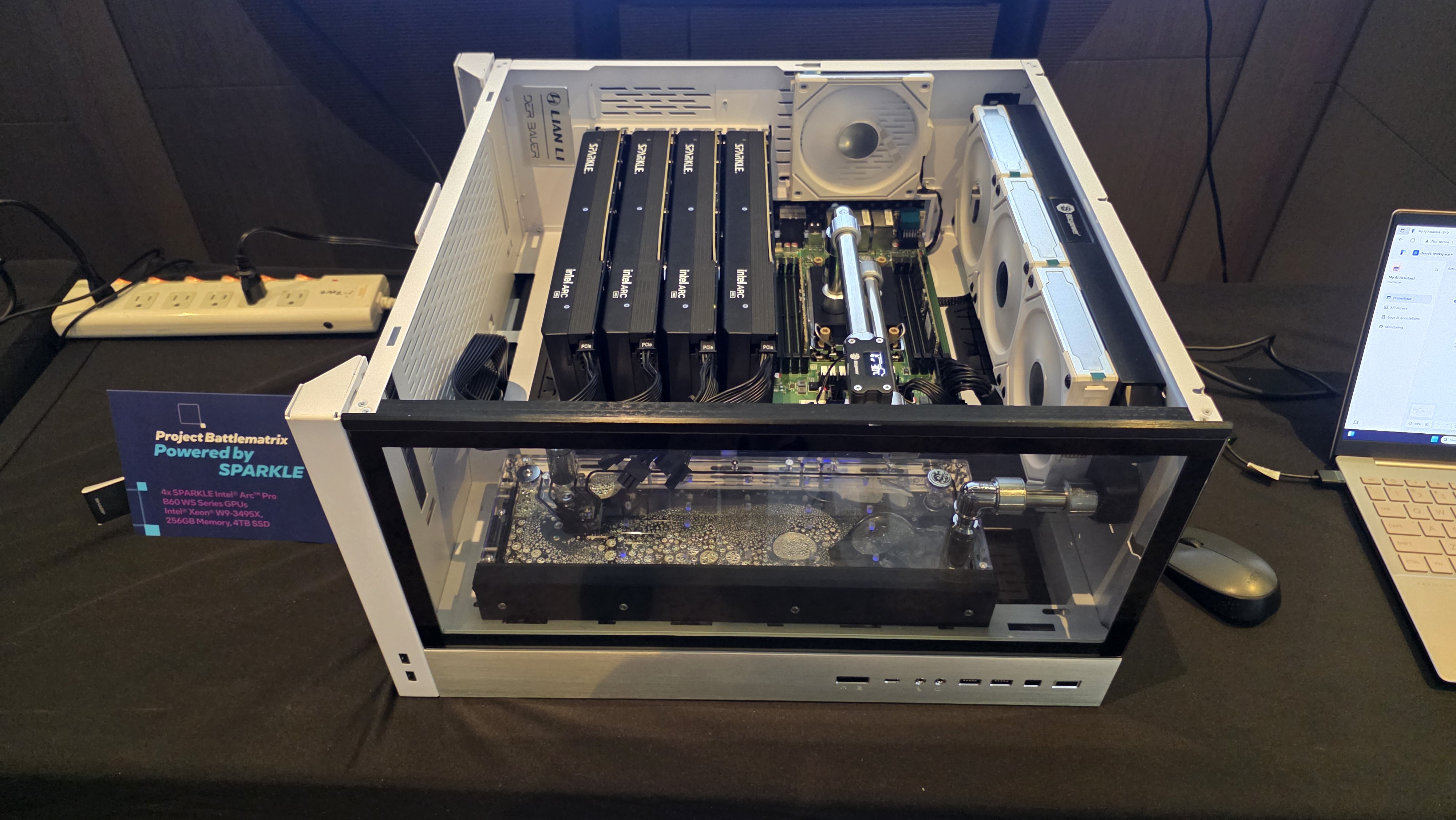

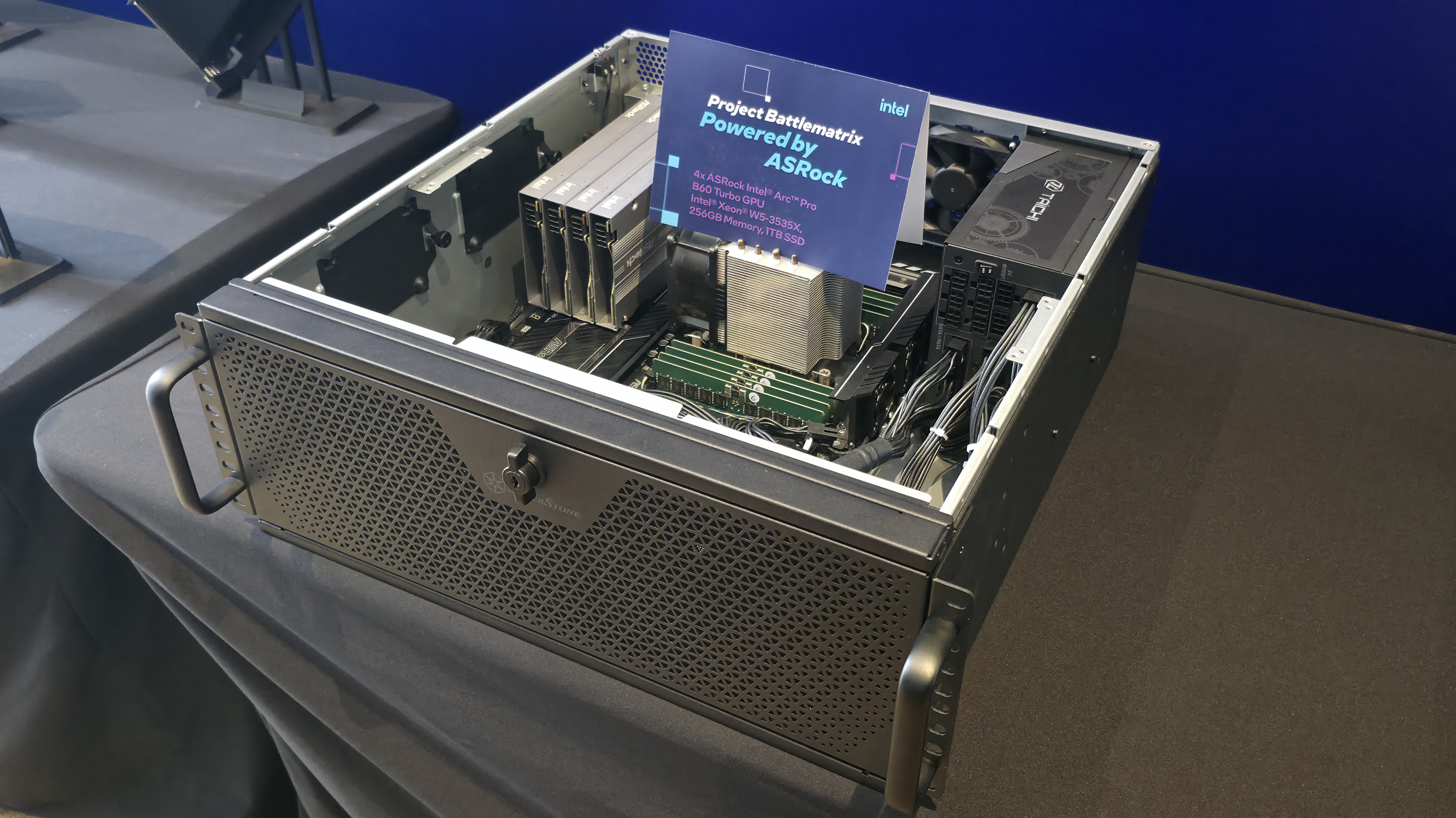

Intel has focused on leveraging the third-party GPU ecosystem to develop its Arc Pro cards, in contrast to its competitors, who tend to release their own-branded cards for the professional segment. That includes partners like Maxsun, which has developed a dual-GPU card based on the B60 GPU. Other partners include ASRock, Sparkle, GUNNR, Senao, Lanner, and Onix.

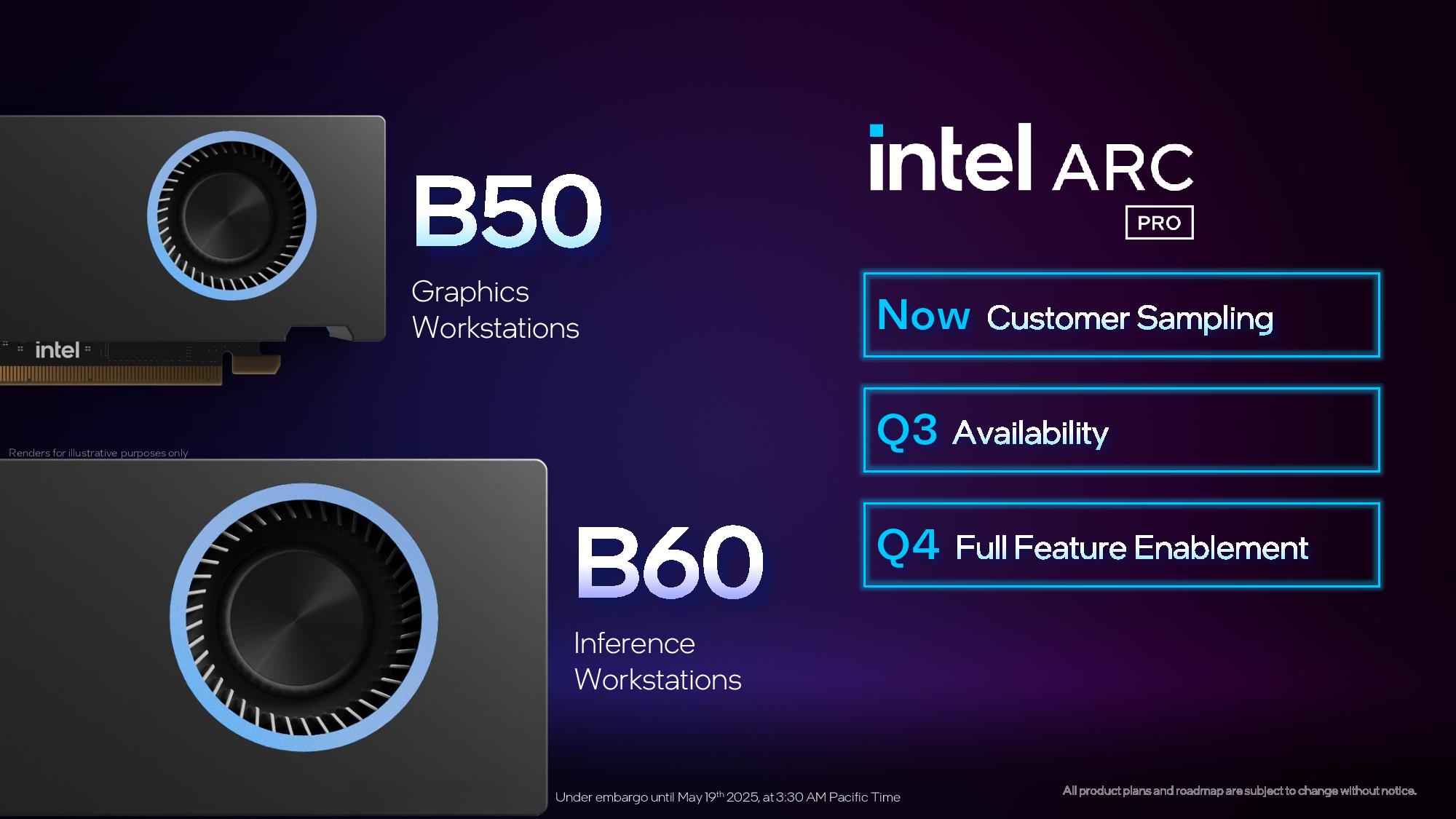

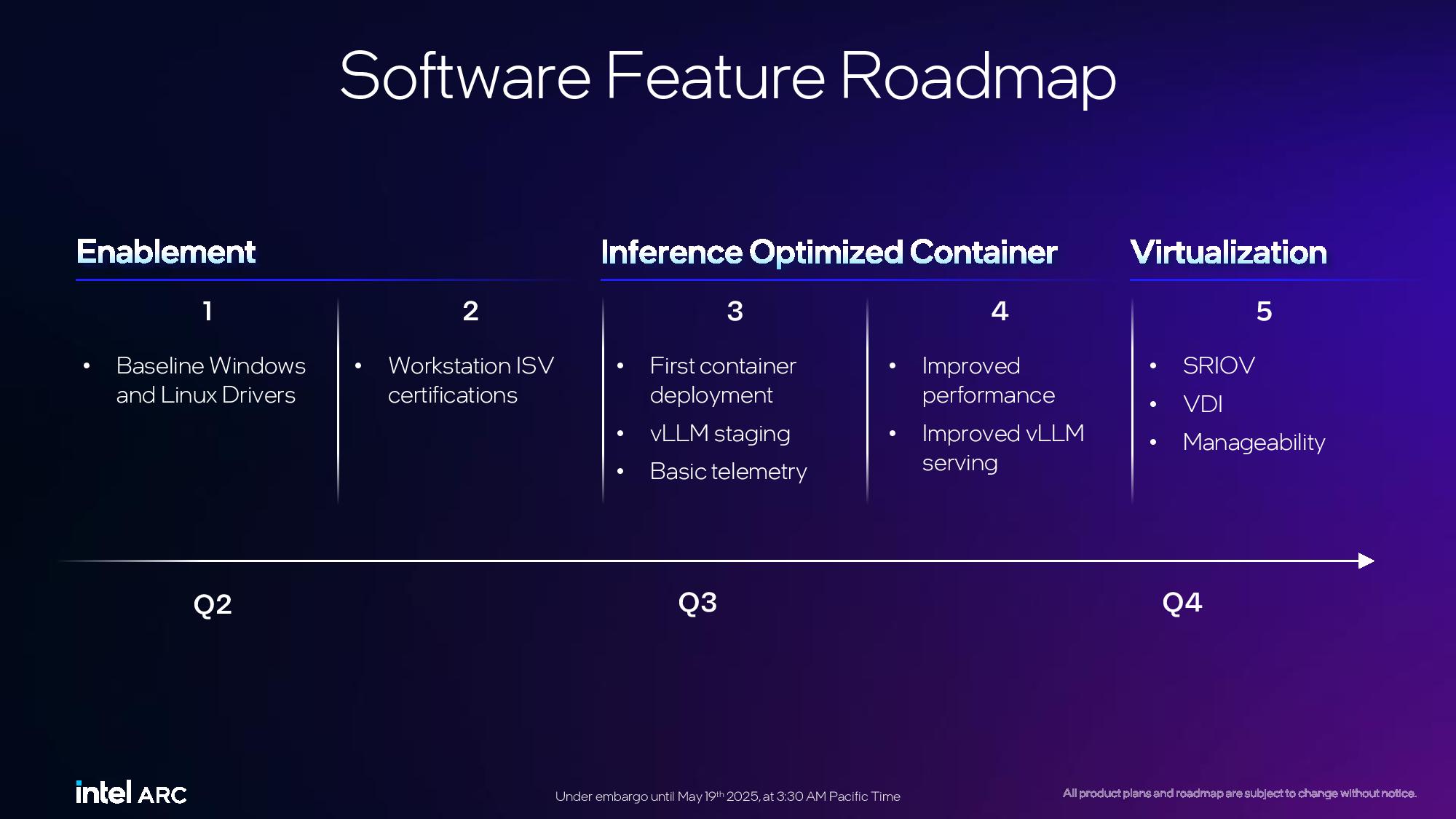

Both the B50 and B60 GPUs are now sampling to Intel partners, as evidenced by a robust showing of partner cards and full systems at the show, and will arrive on the market in the third quarter of 2025. Intel will initially launch the cards with a reduced software feature set, but will add support for features like SRIOV, VDI, and manageability software in the fourth quarter of the year.

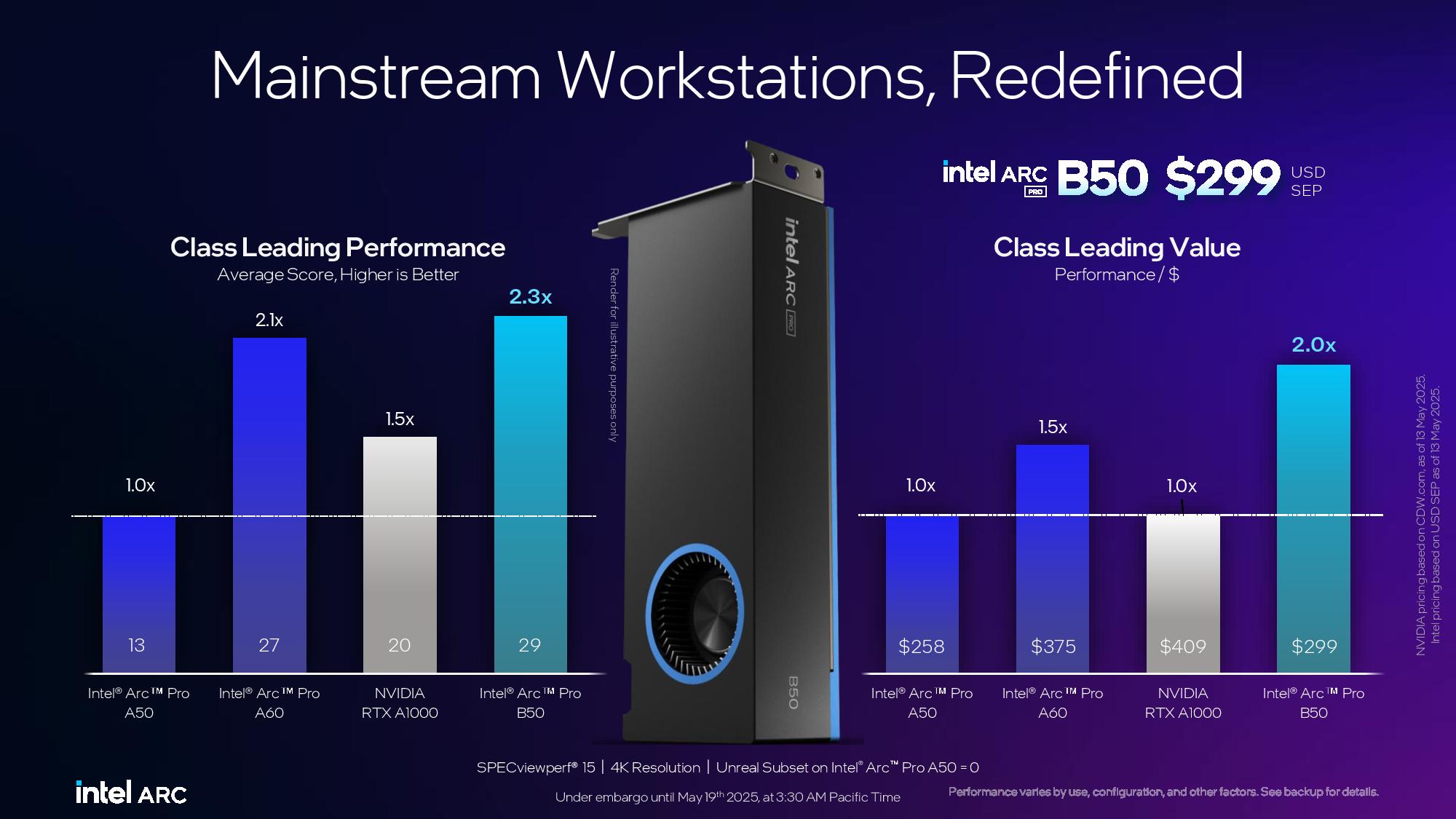

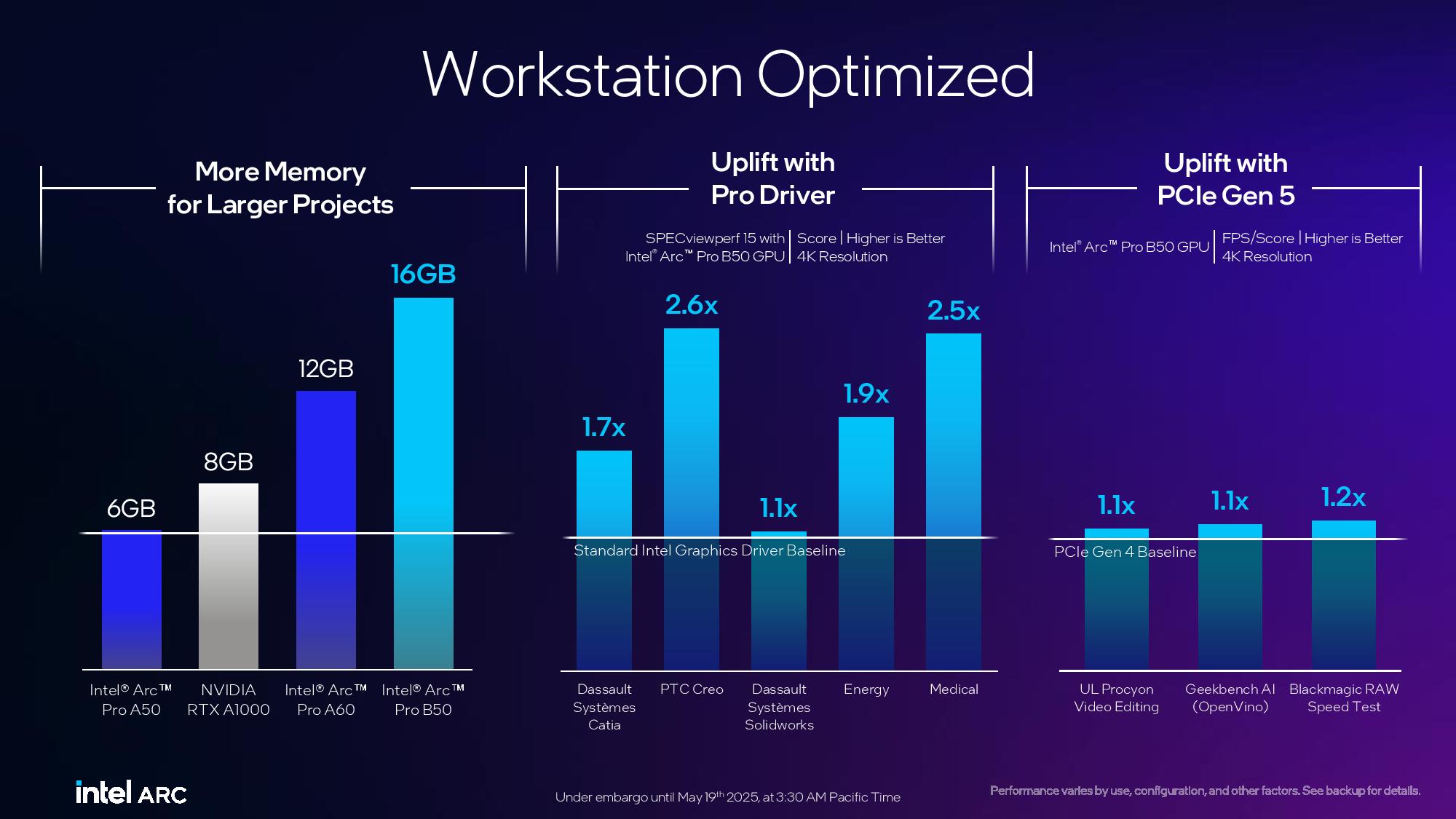

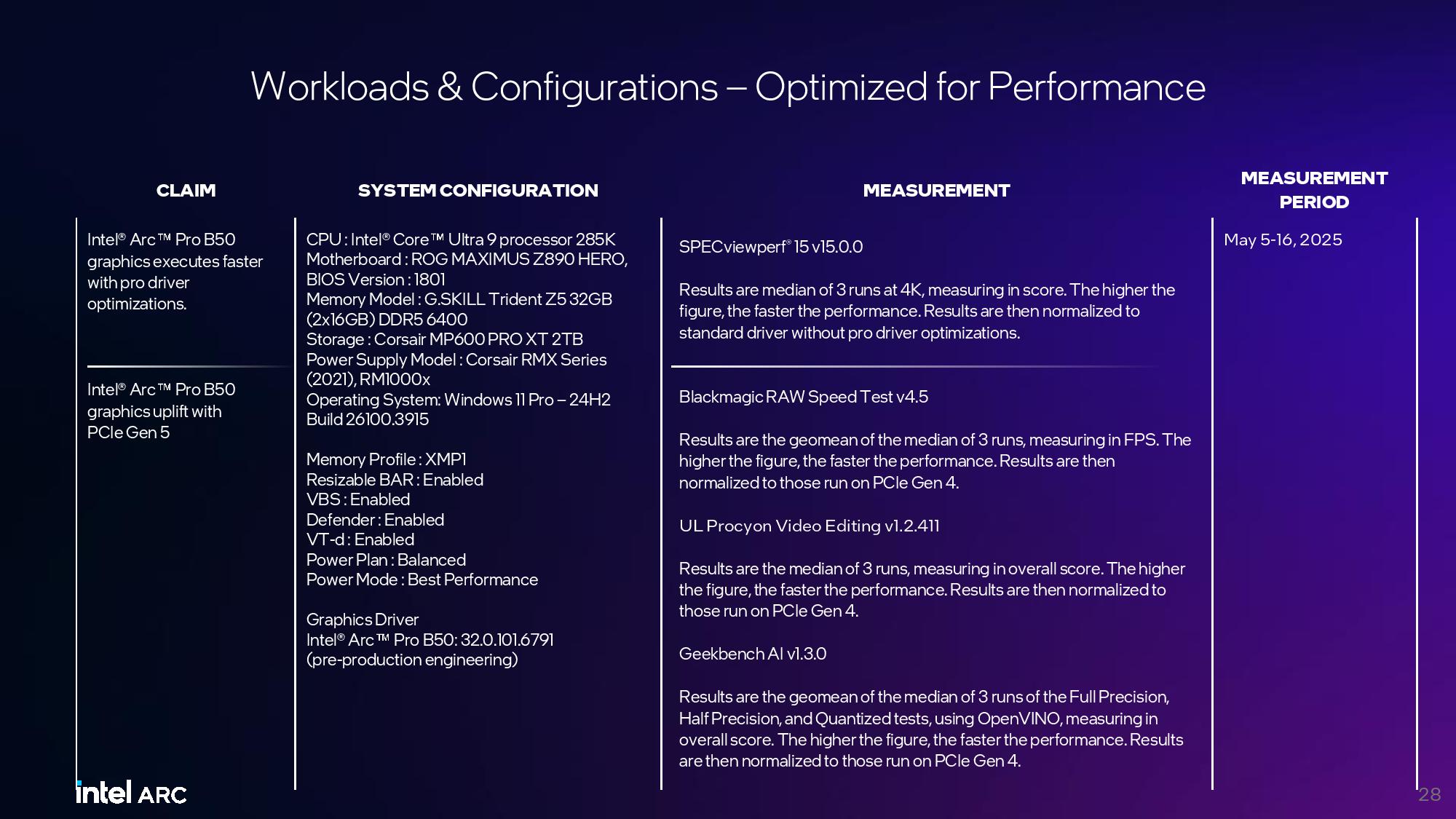

The Intel Arc Pro B50 has a compact dual-slot design for slim and small-form-factor graphics workstations. It has a 70W total board power (TBP) rating and does not have external power connectors. The GPU wields 16 Xe cores and 128 XMX engines that deliver up to 170 peak TOPS, all fed by 16GB of VRAM that delivers 224 GB/s of memory bandwidth. The card also sports a PCIe 5.0 x8 interface, which Intel credits with speeding transfers from system memory, ultimately delivering 10 to 20% more performance in some scenarios.

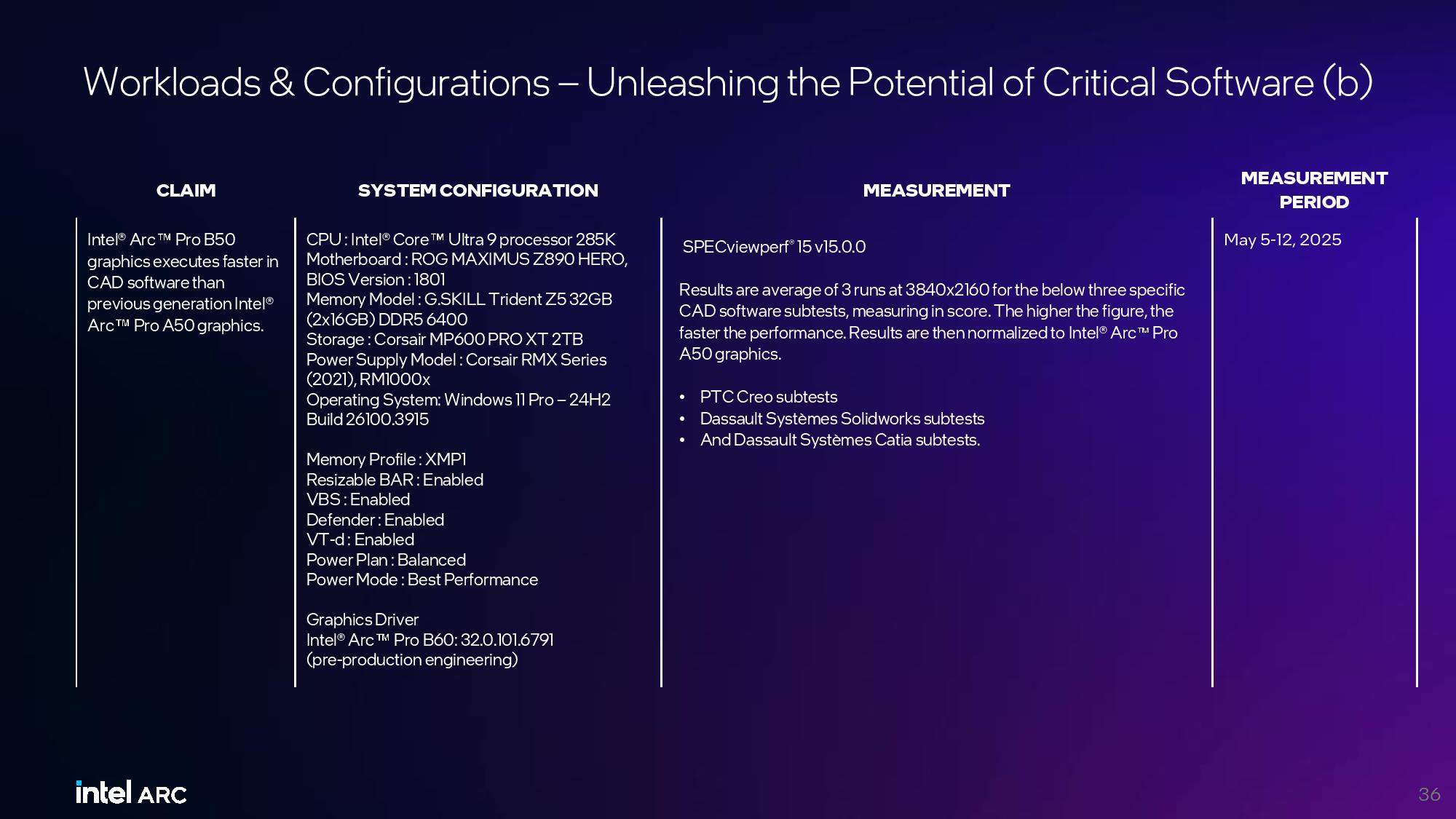

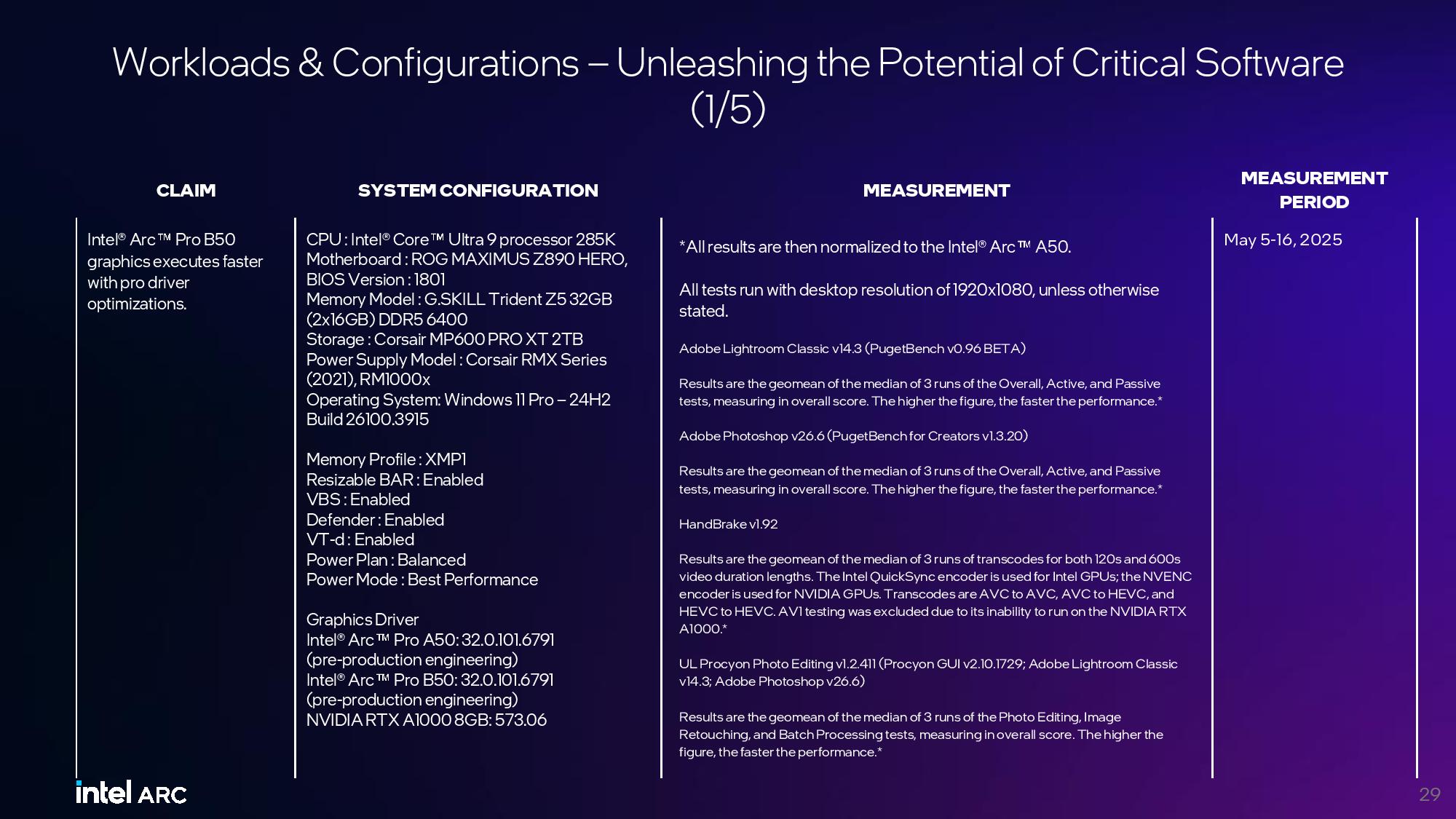

The B50's 16GB of memory exceeds that of its primary competitors in this segment, which typically come armed with 6GB or 8GB of memory. The card also has certified drivers that Intel claims deliver up to 2.6X more performance than the baseline gaming drivers.

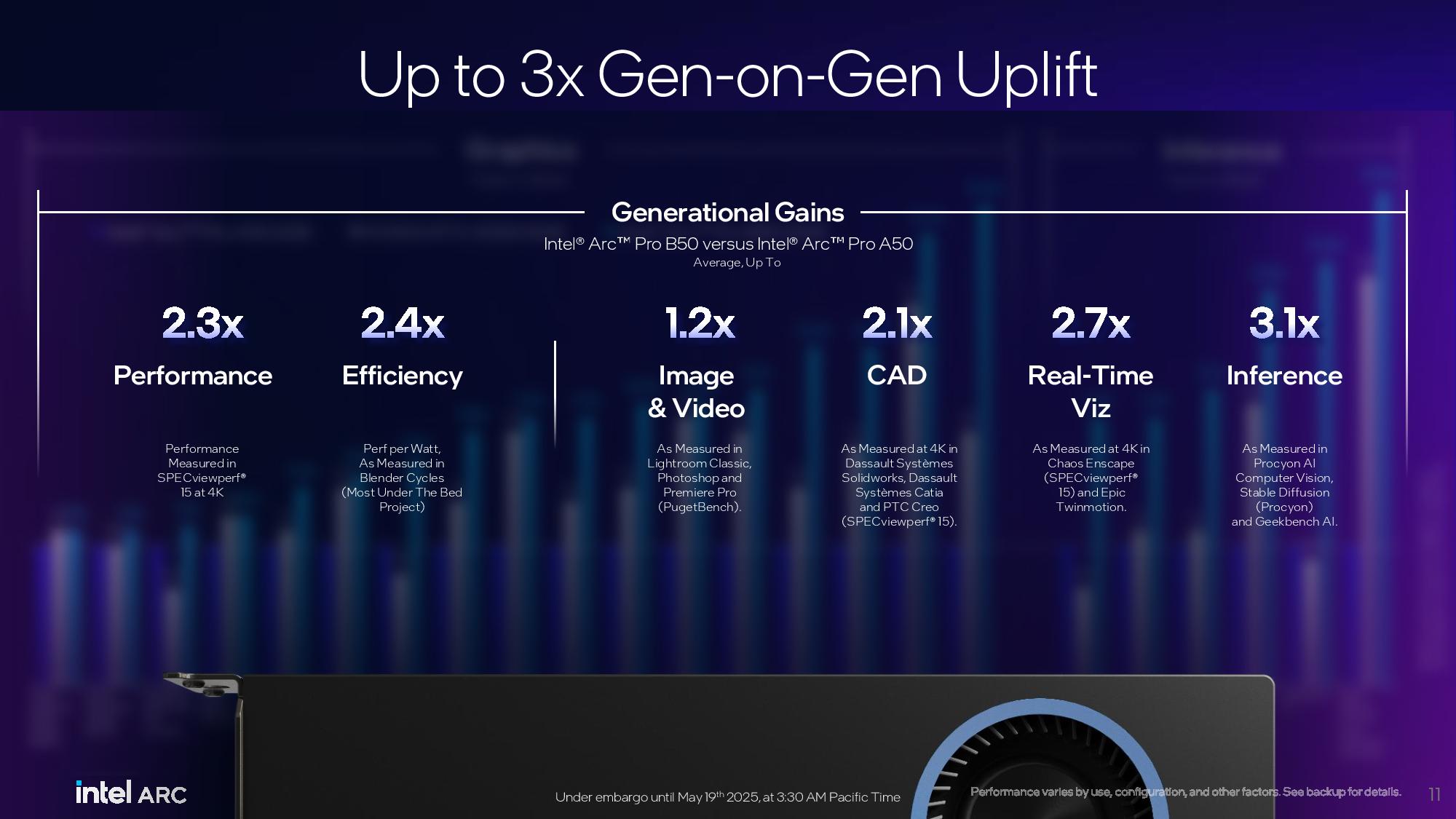

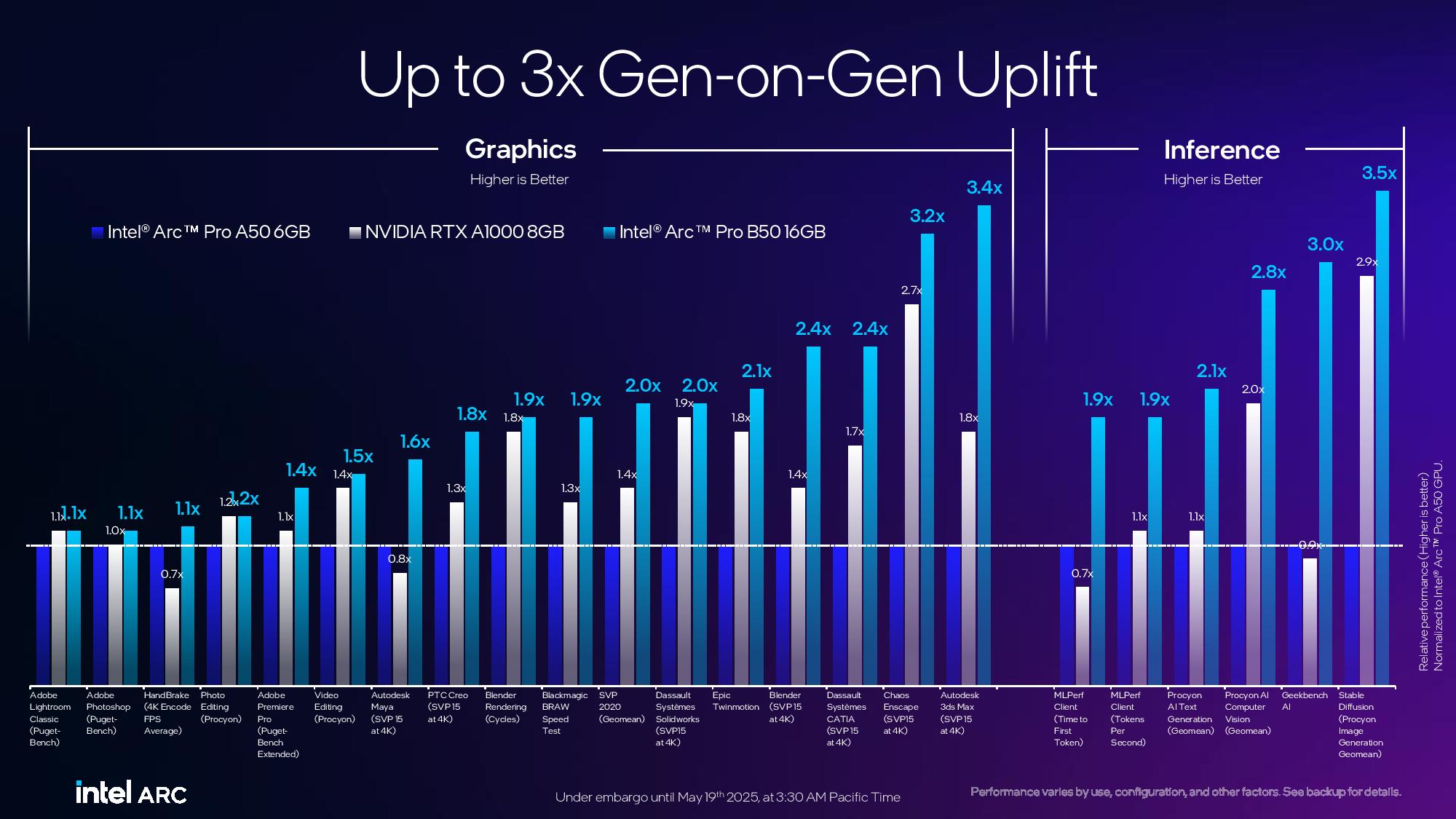

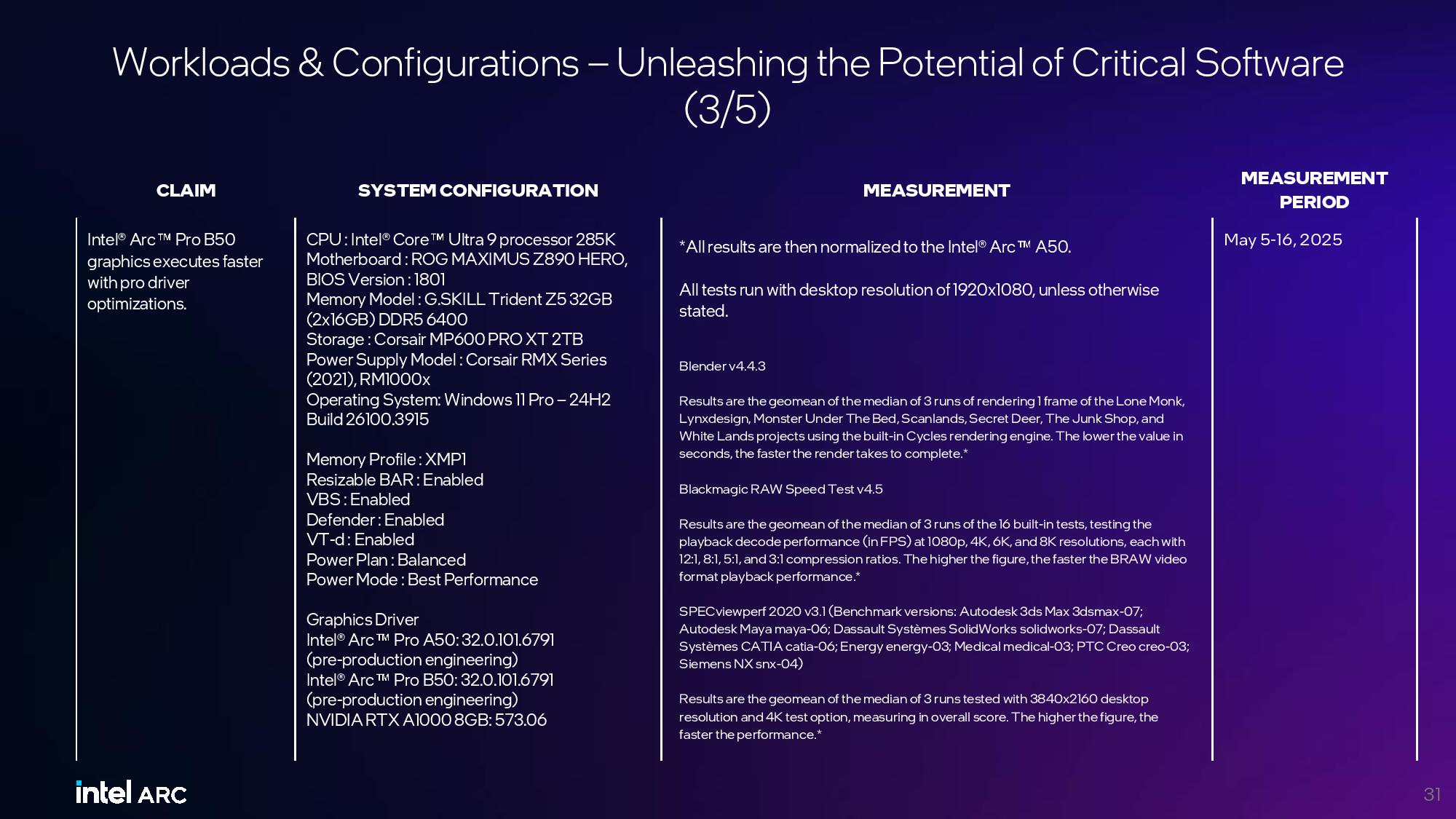

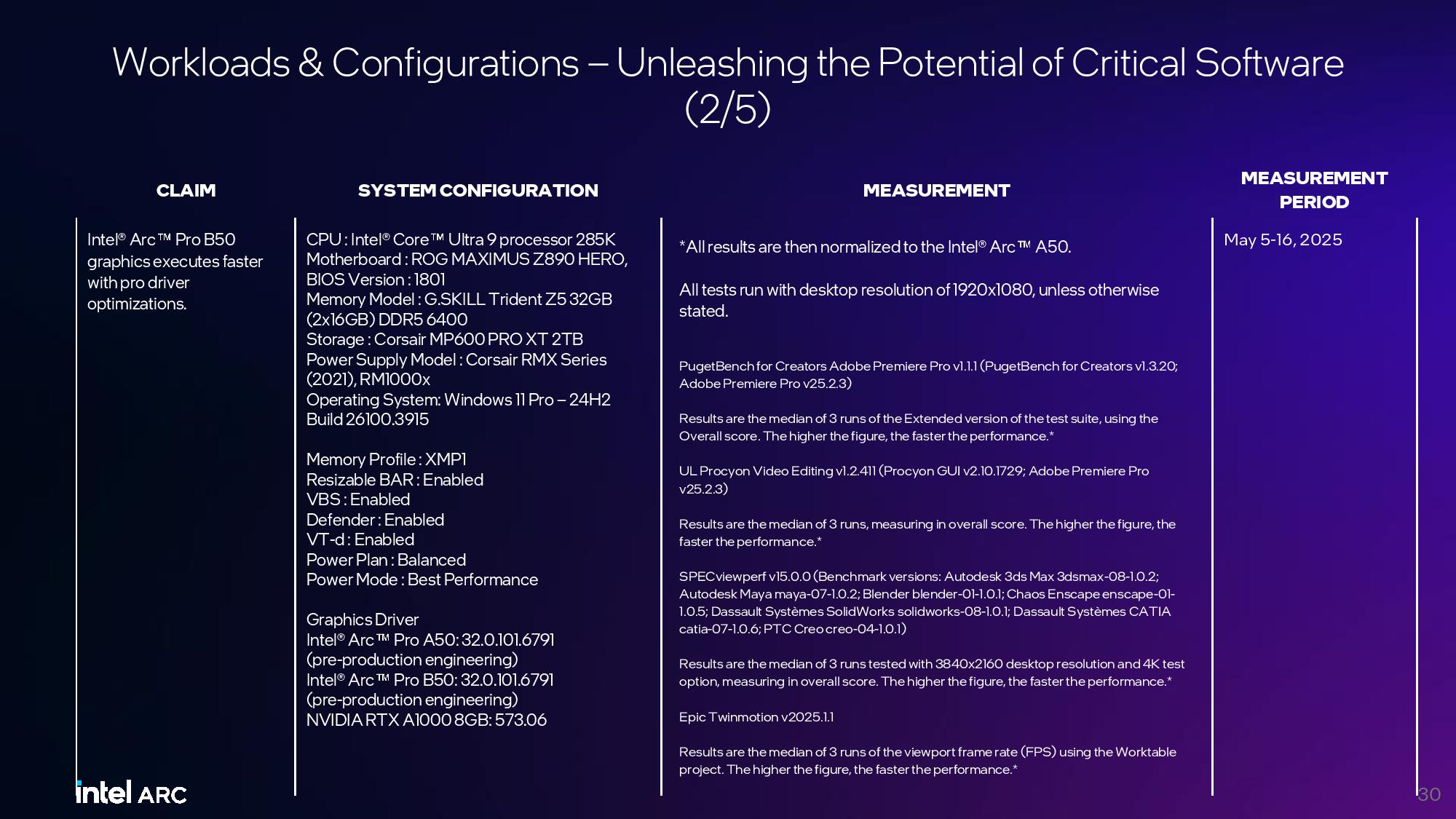

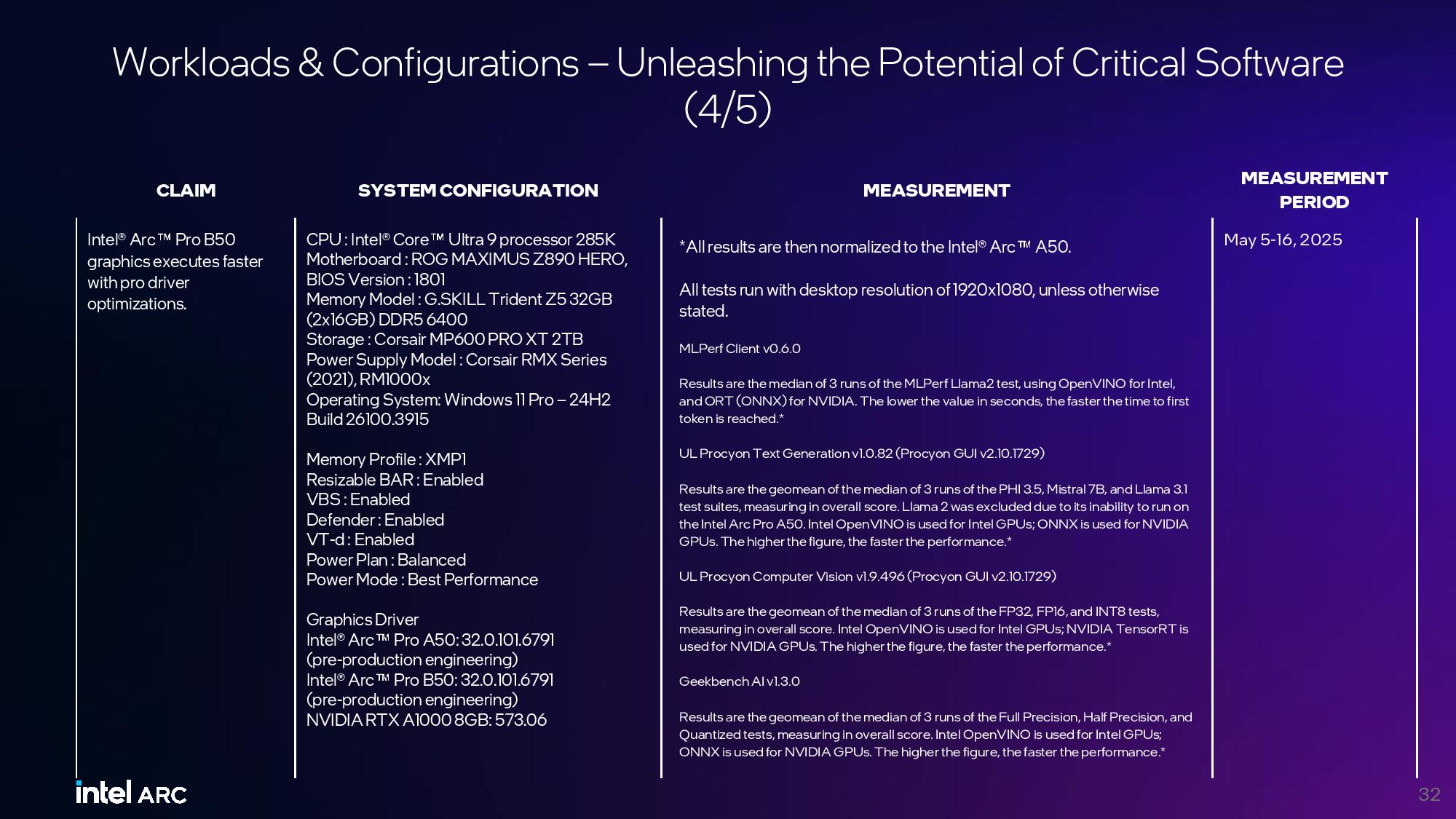

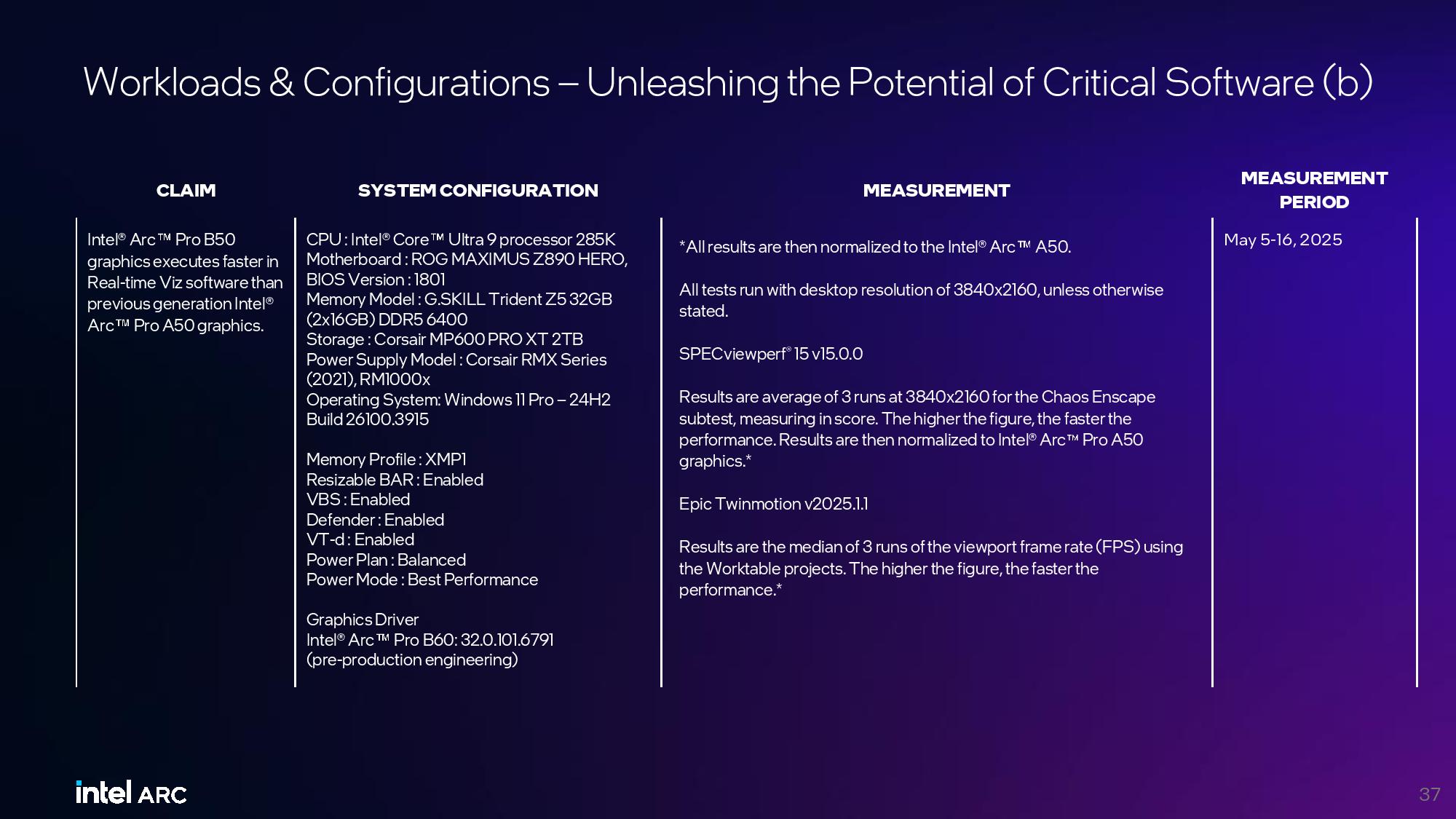

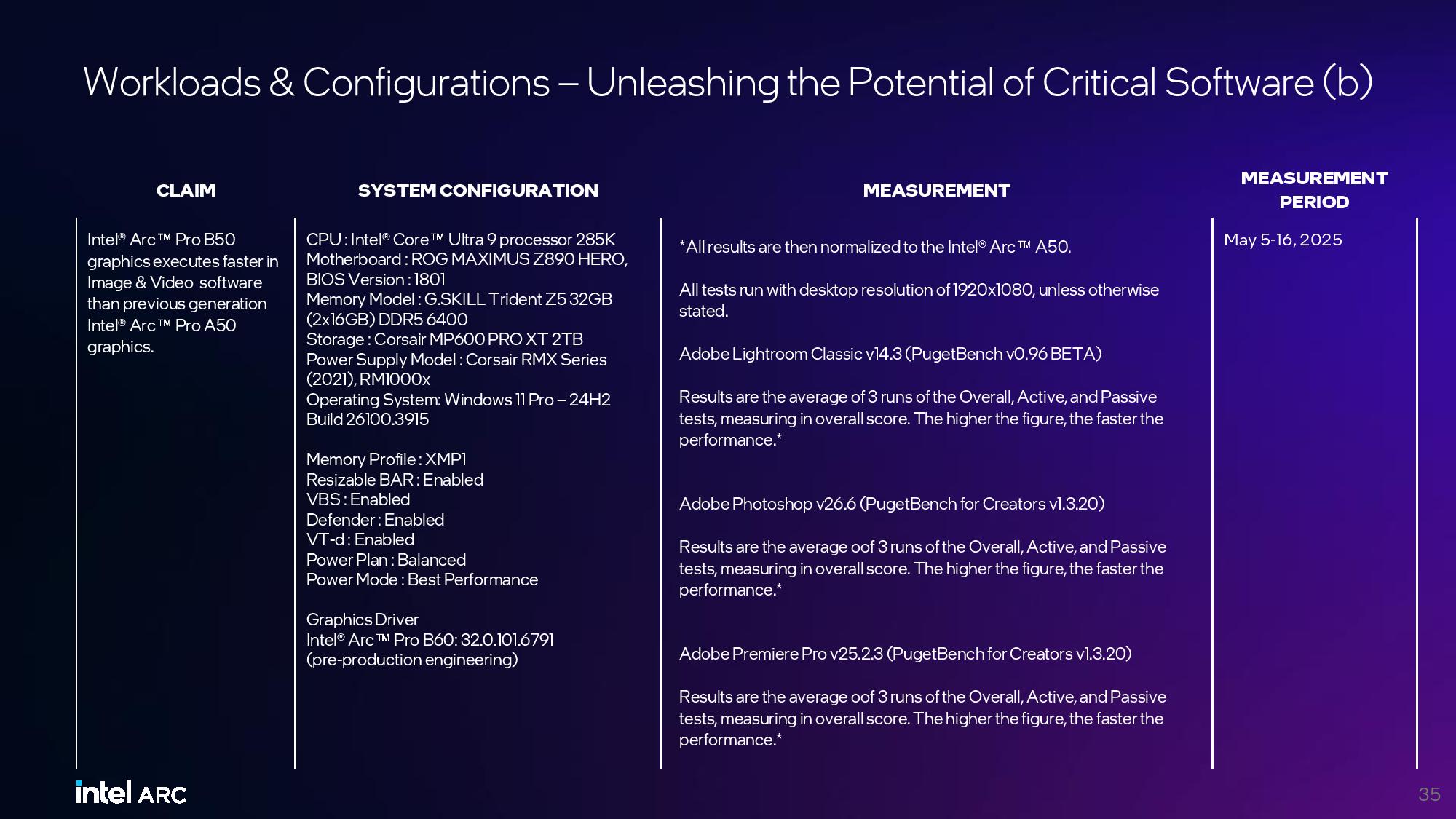

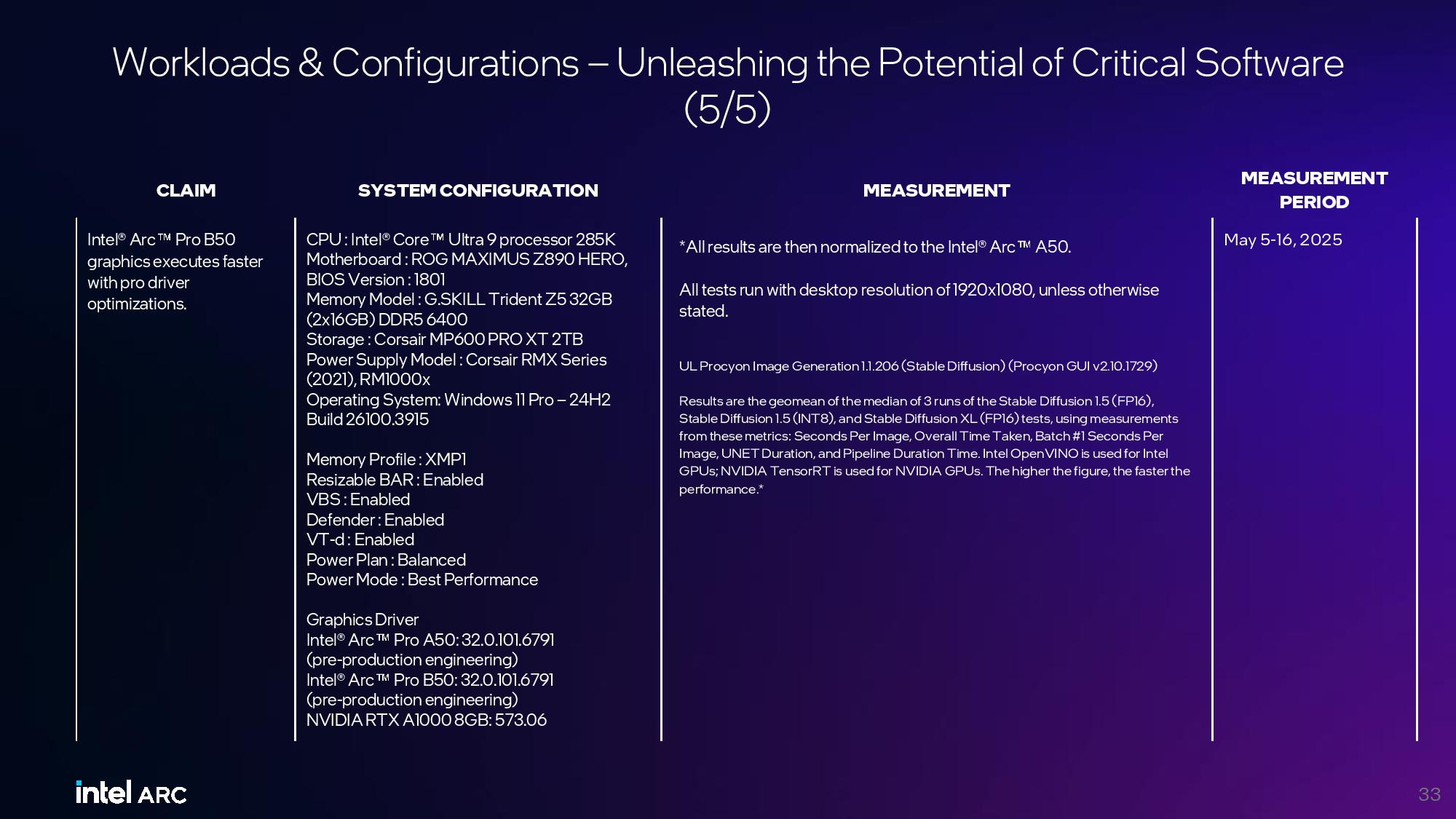

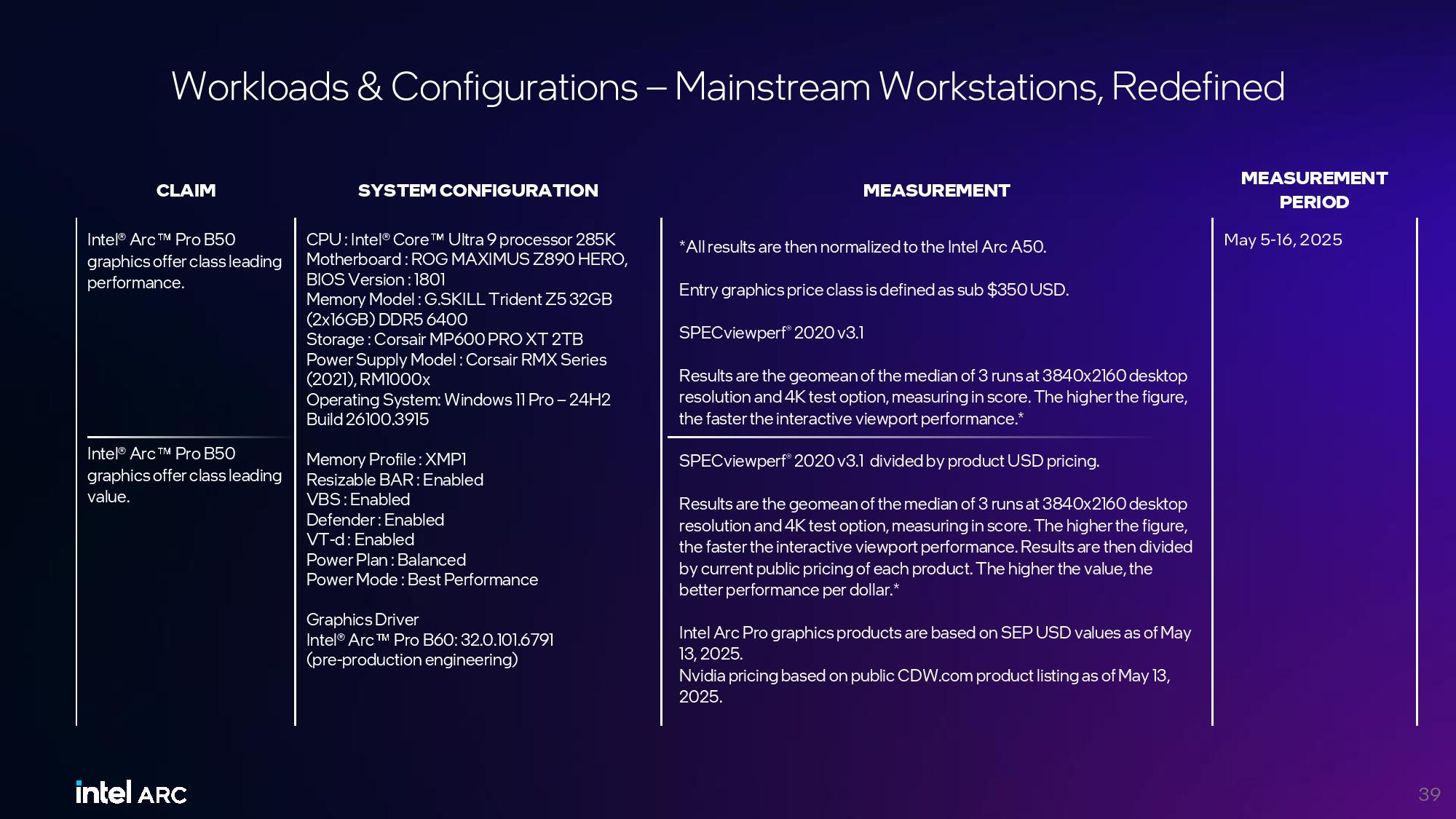

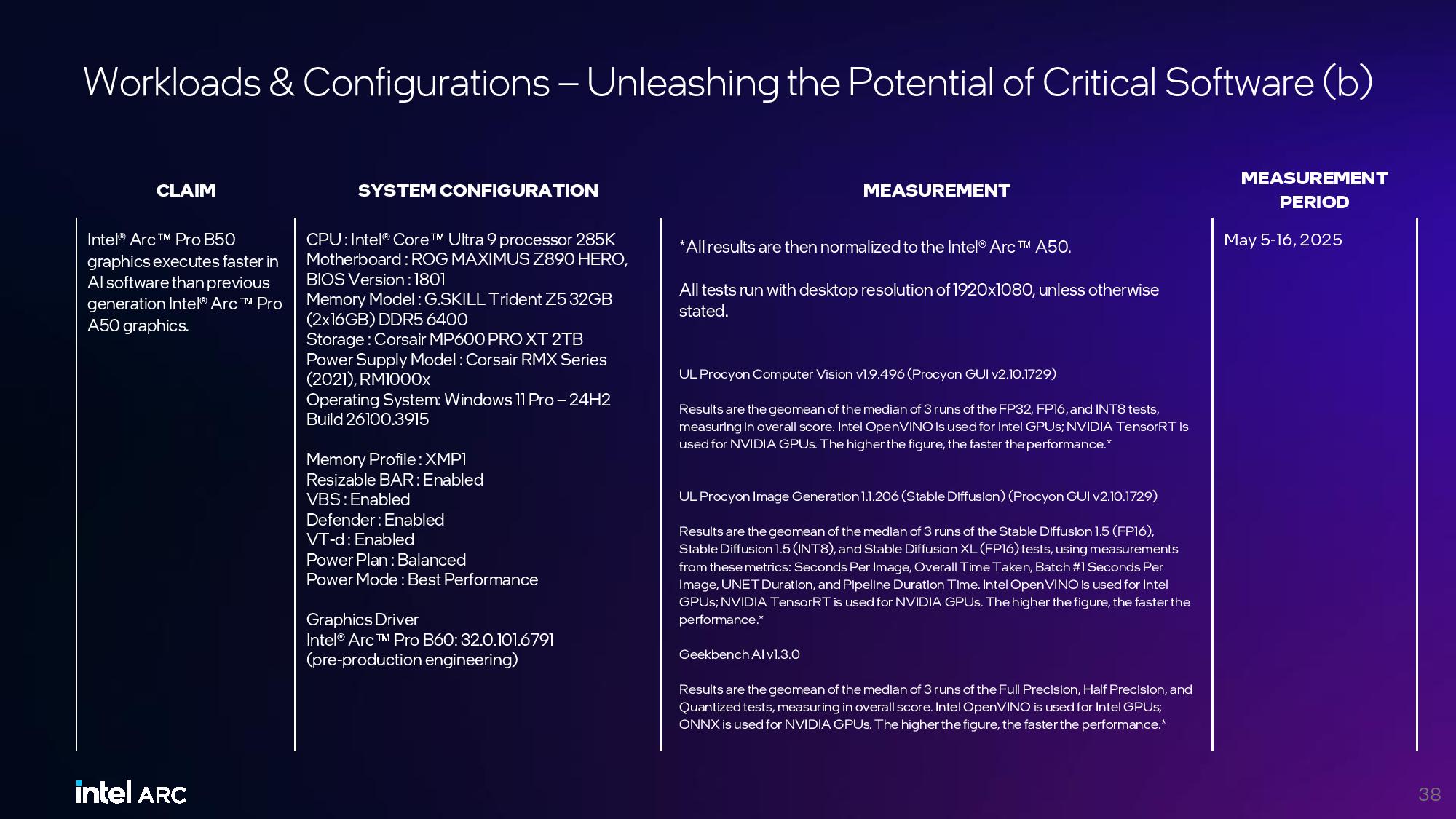

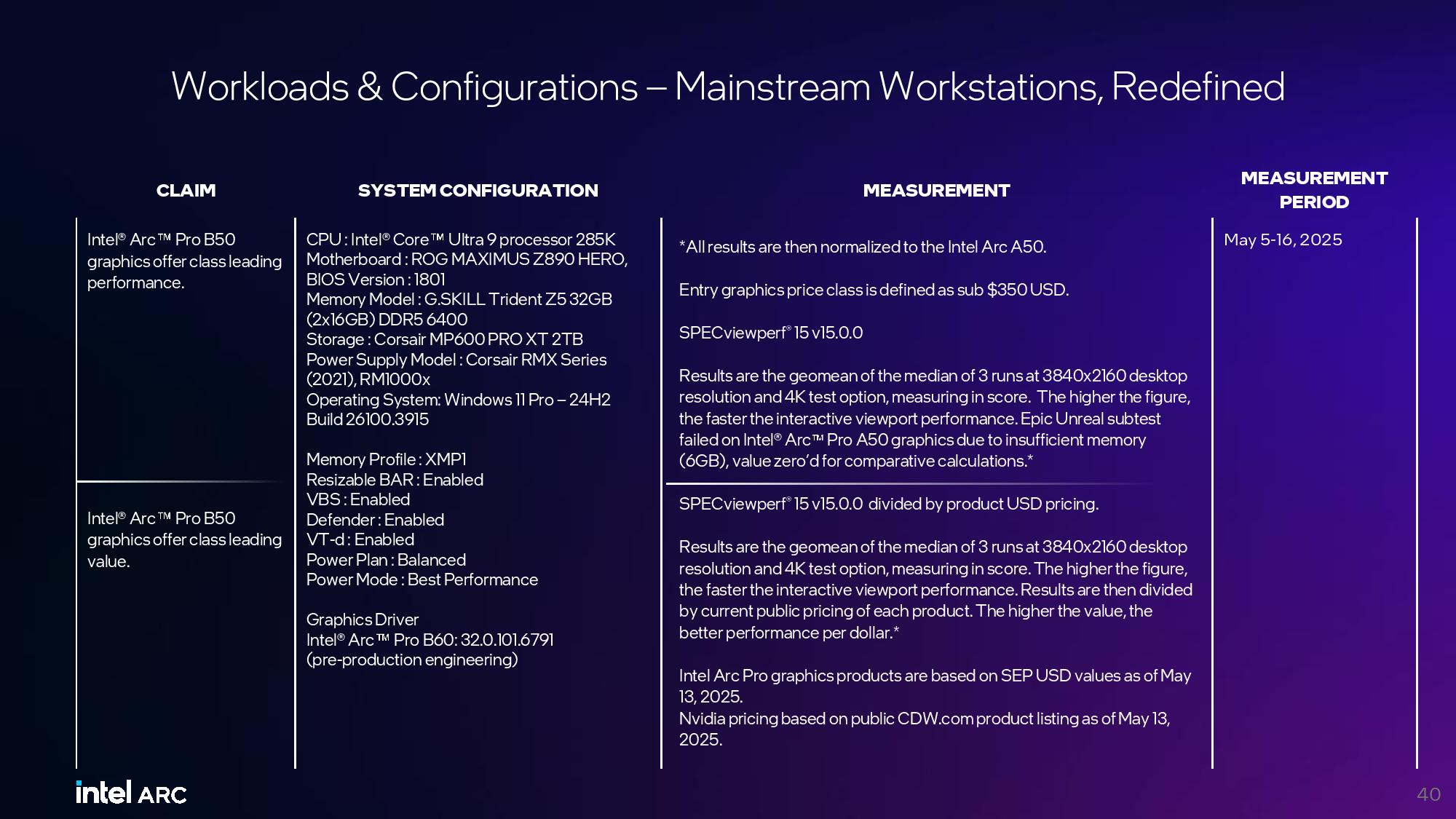

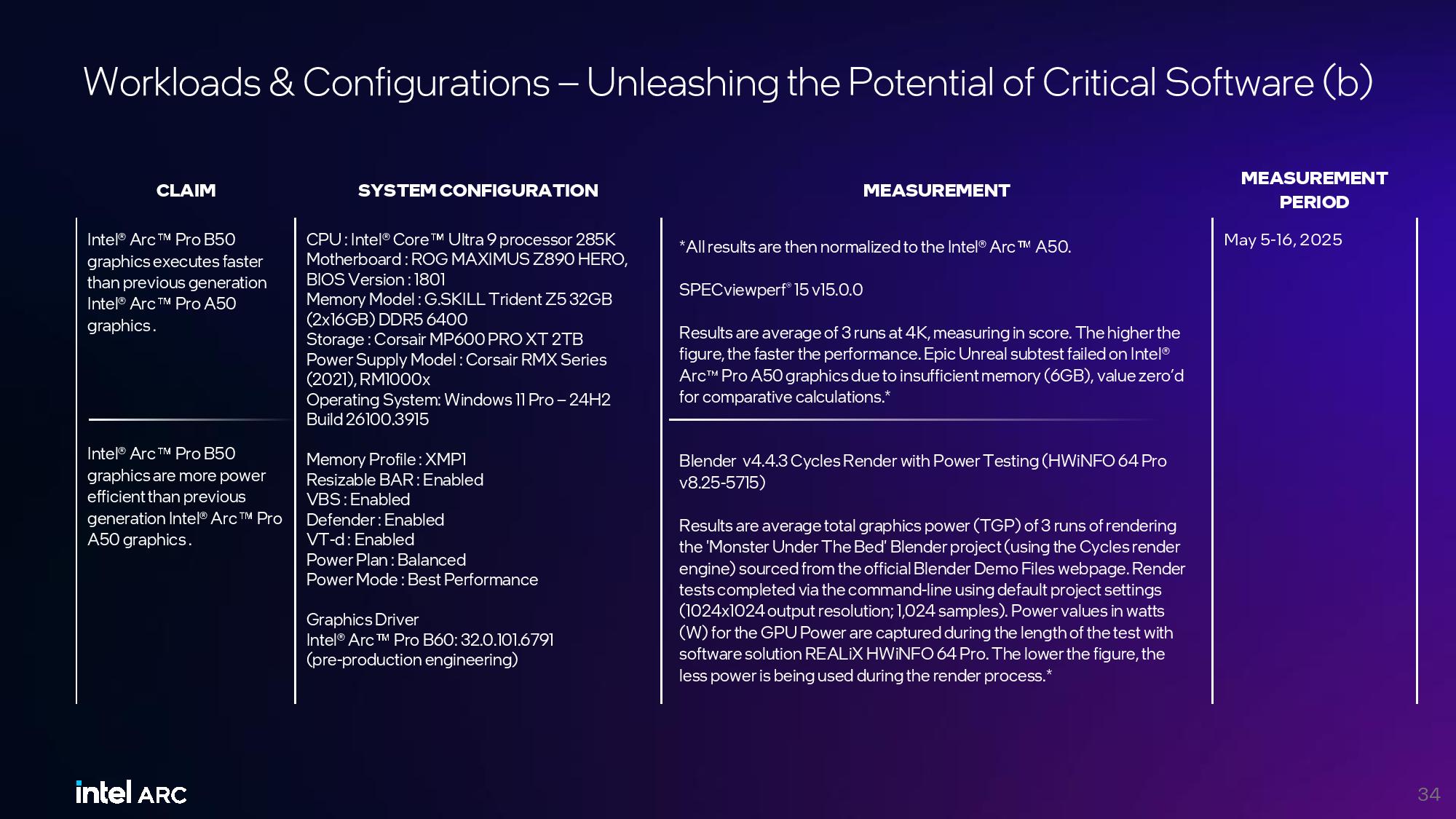

Intel shared a slew of benchmarks against the competing Nvidia RTX A1000 8GB and the previous-gen A50 6GB, but as with all vendor-provided benchmarks, take them with a grain of salt (we included the test notes at the end of the article). In graphics workloads, Intel claims up to a 3.4X advantage over its previous-gen A50, and solid gains across the board against the RTX A1000. It sports similar advantages in a spate of AI inference benchmarks.

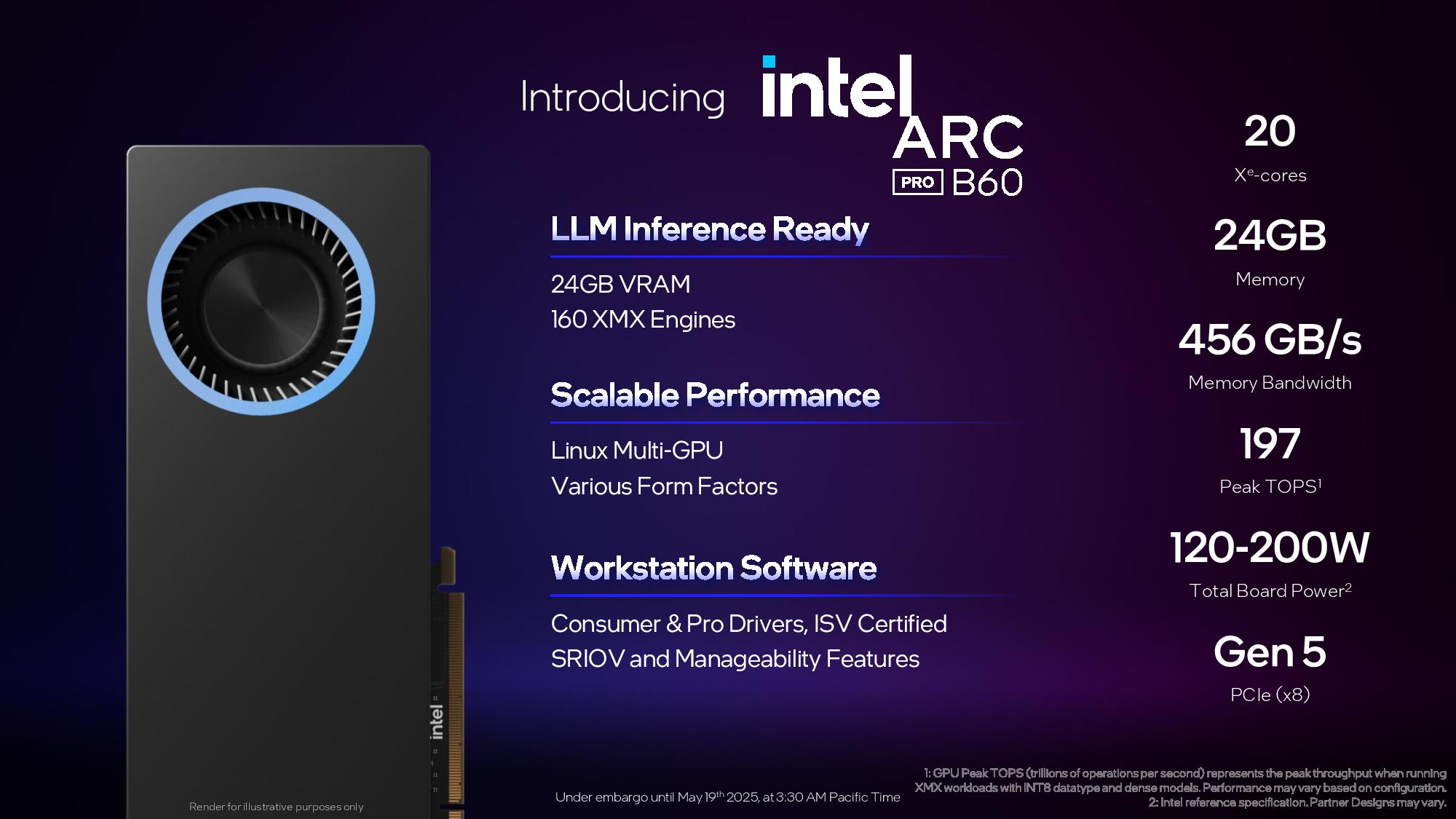

The Intel Arc Pro B60 has 20 Xe cores and 160 XMX engines fed by 24GB of memory that delivers 456 GB/s of bandwidth. The card delivers 197 peak TOPS and fits into a 120 to 200W TBP envelope. This card also comes with a PCIe 5.0 x8 interface.


Intel supports multiple B60 GPUs on a single board, as evidenced by Maxsun's dual-GPU card, with software support in Linux for splitting workloads across both GPUs (each GPU interfaces with the host on its own bifurcated PCIe 5.0 x8 connection).

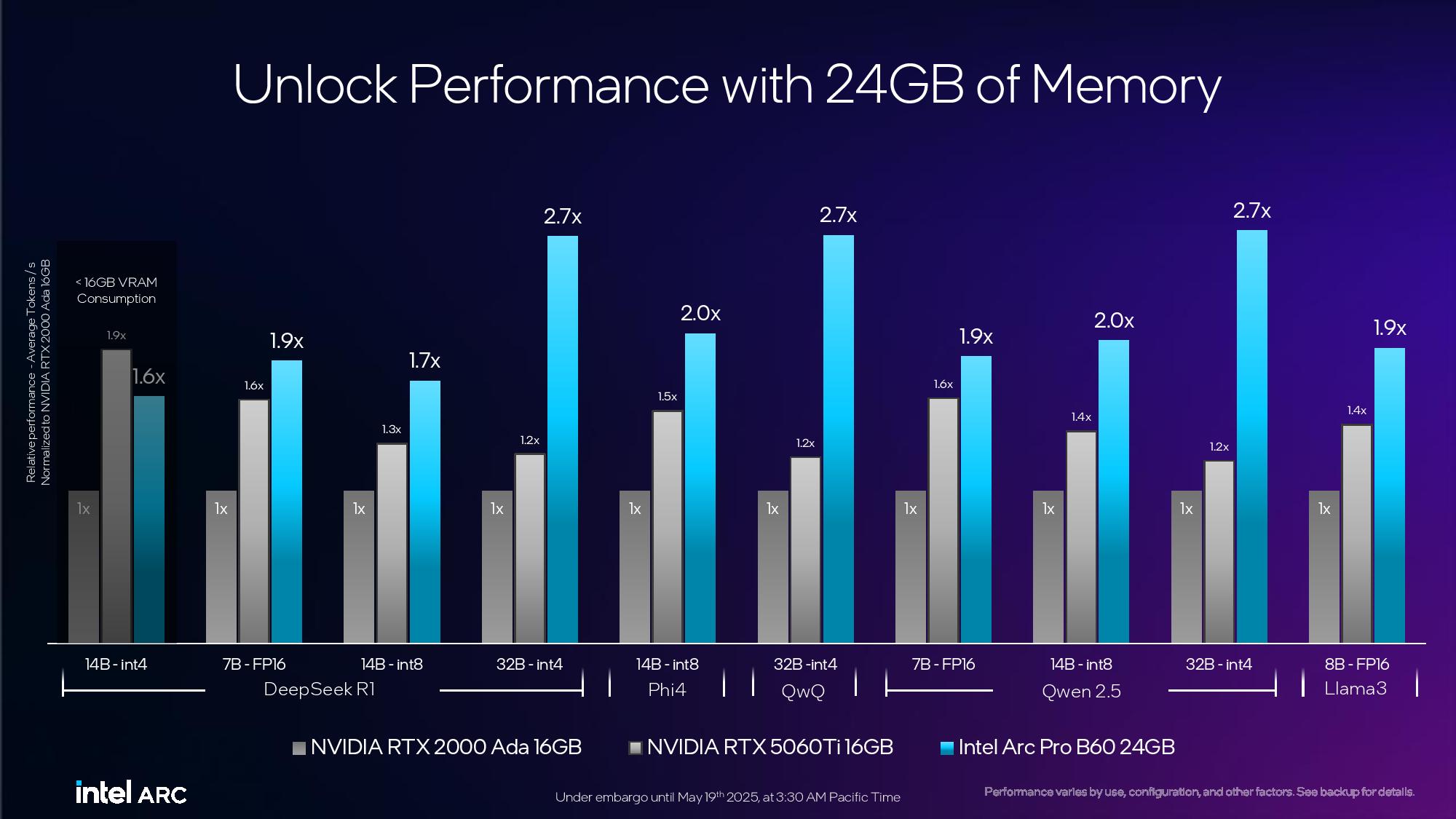

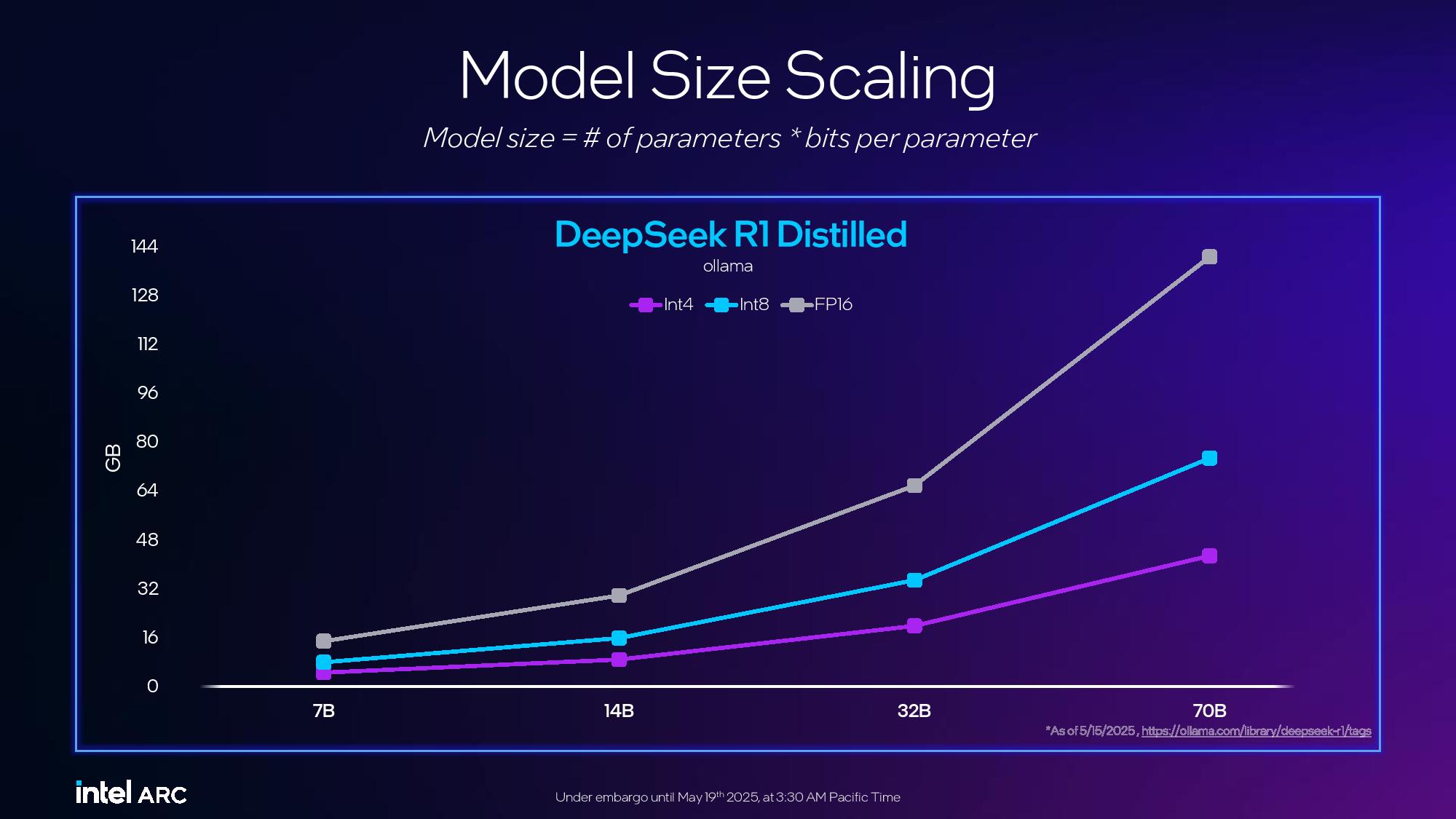

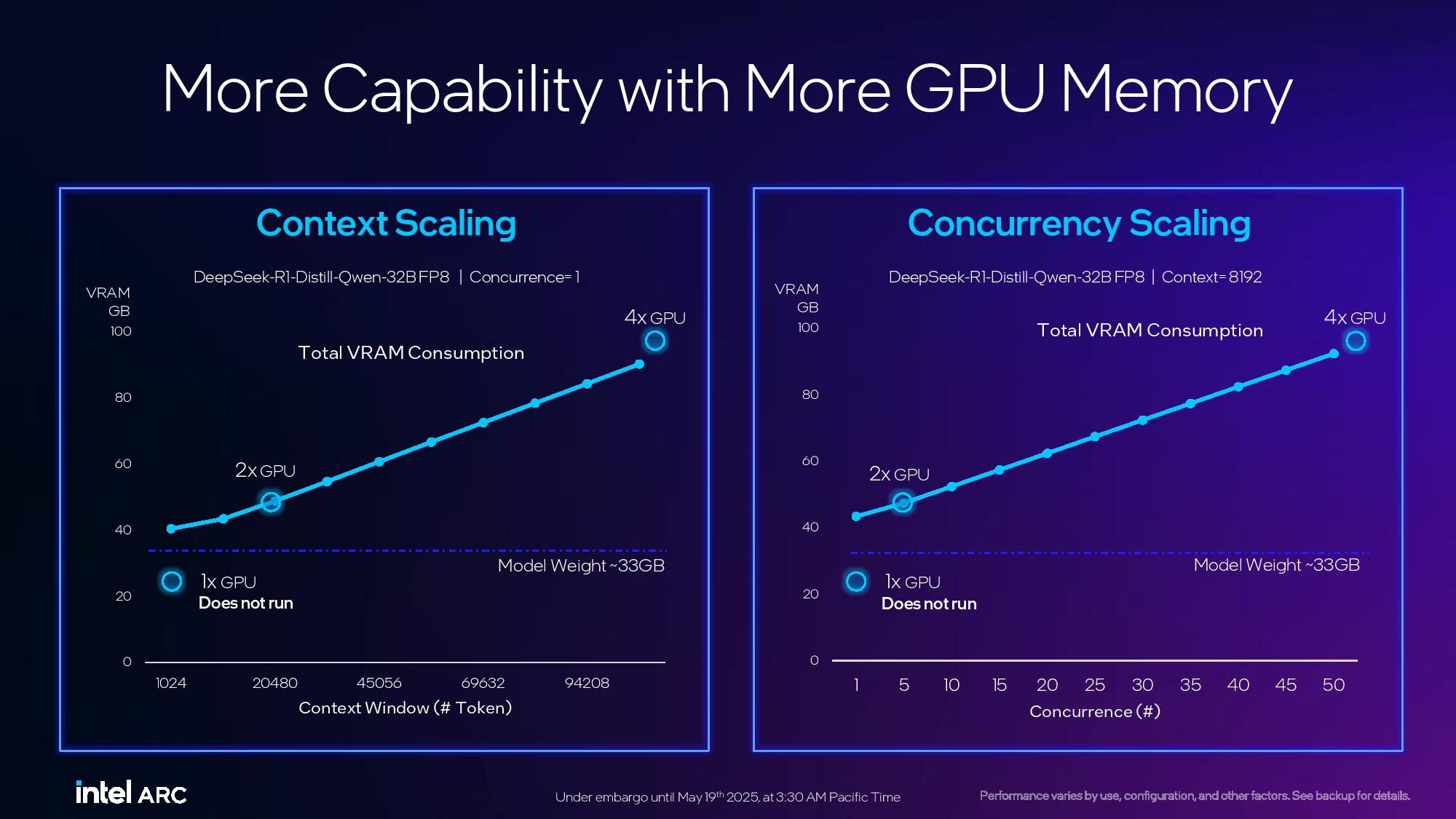

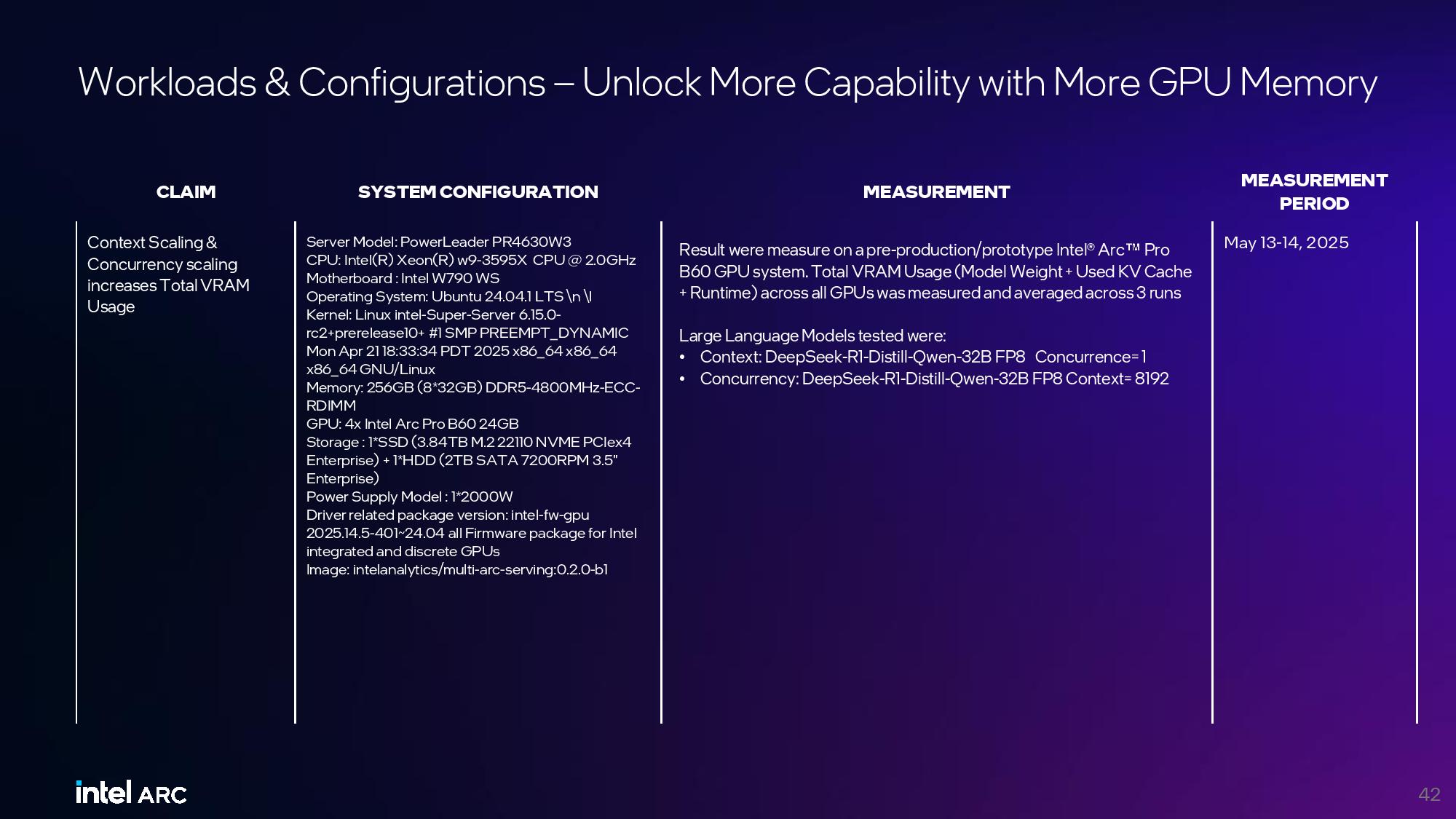

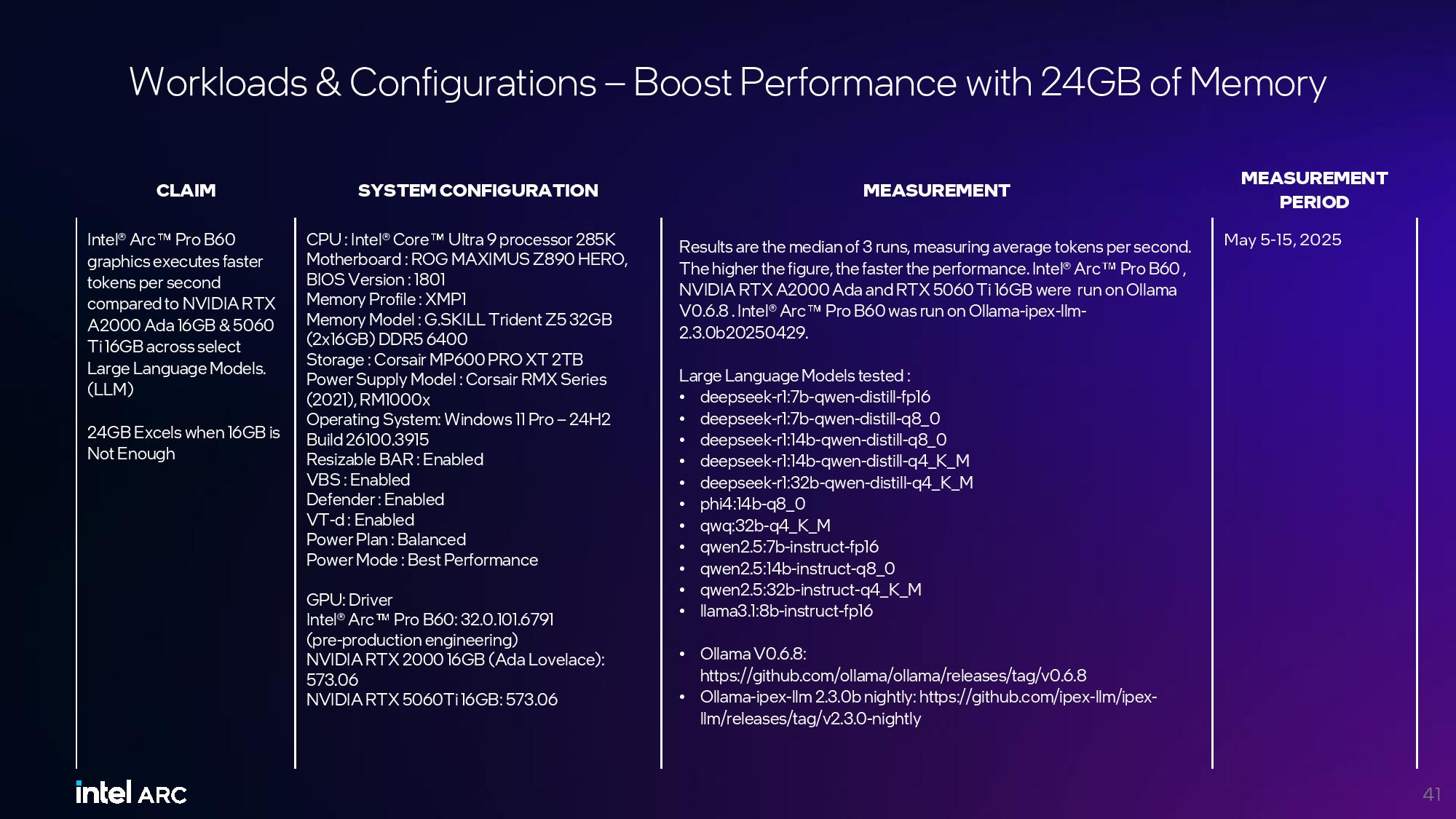

Intel's benchmarks again highlighted the advantages of the B60's 24GB of memory vs the competing RTX 2000 Ada 16GB and RTX 5060 Ti 16GB GPUs, claiming this can impart gains of up to 2.7X over the competition in various AI models. Intel also highlighted the advantages of higher memory capacity in model size, context, and concurrency scaling.

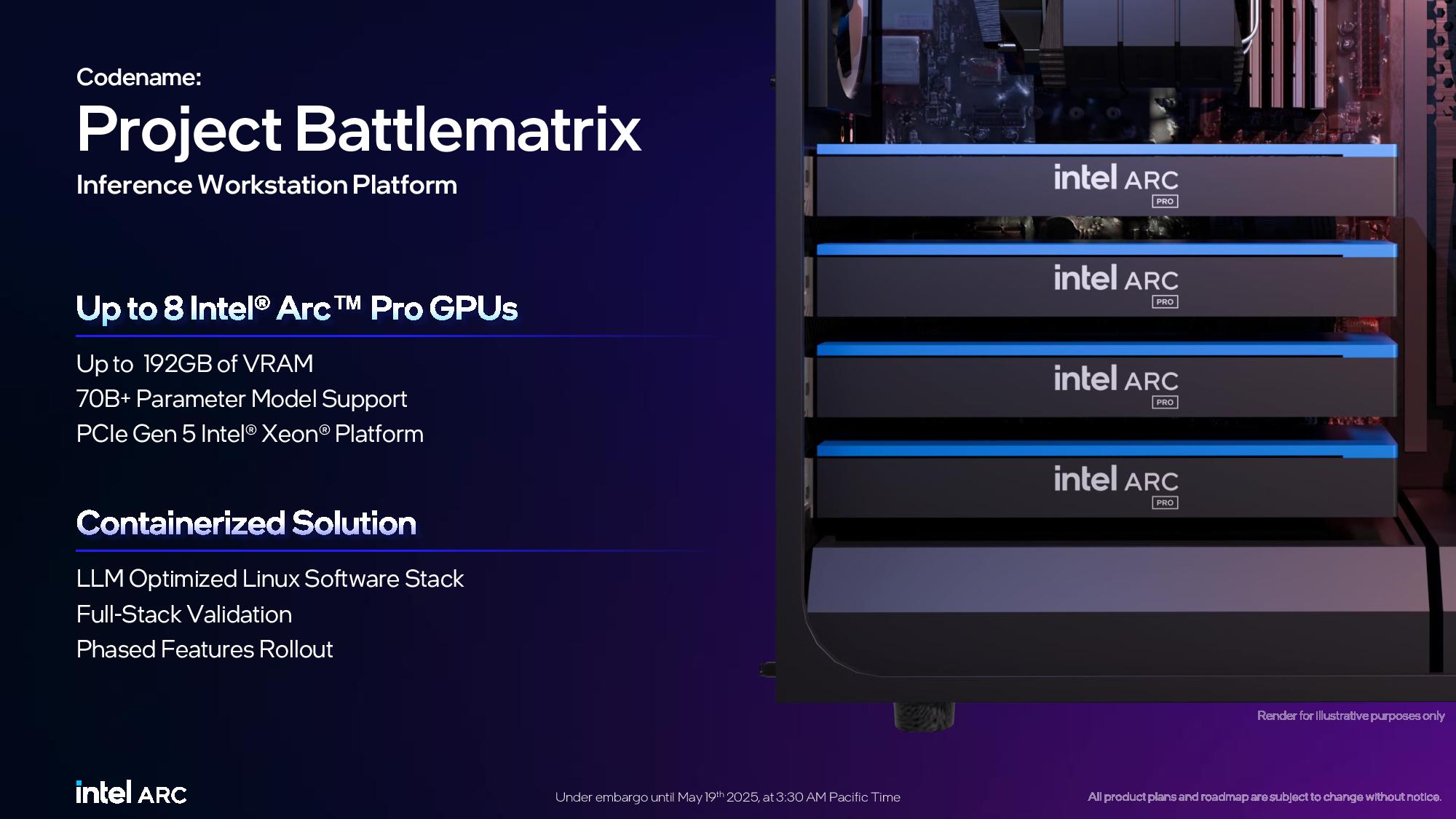

The Intel Arc Pro B60 will primarily come in pre-built inference workstations ranging from $5,000 to $10,000, dubbed Project Battlematrix. The goal is to combine hardware and software to create one cohesive workstation solution. However, the per-unit cost will be in the range of $500 per GPU, depending on the specific model.

Project Battlematrix workstations, powered by Xeon processors, will come with up to eight GPUs, 192GB of total VRAM, and support up to 70B+ parameter models.
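The capacity figures above are simple multiplication, and a rough sketch makes the headroom easier to see. The snippet below is an illustrative estimate only: it counts model weights alone, ignores KV cache, activations, and runtime overhead, and uses decimal gigabytes. The function names and the bytes-per-parameter table are assumptions made for this example, not anything Intel has published.

```python
# Rough check of which model sizes fit in a pooled multi-GPU VRAM budget.
# Assumption: only weights are counted (no KV cache, activations, or overhead),
# and 1 GB = 1e9 bytes.

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def total_vram_gb(num_gpus: int, vram_per_gpu_gb: int) -> int:
    """Total VRAM across all GPUs in the system."""
    return num_gpus * vram_per_gpu_gb


def weights_gb(params_billion: float, precision: str) -> float:
    """Approximate weight footprint: billions of params times bytes per param."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str,
         num_gpus: int = 8, vram_per_gpu_gb: int = 24) -> bool:
    """True if the weights alone fit in the pooled VRAM."""
    return weights_gb(params_billion, precision) <= total_vram_gb(num_gpus, vram_per_gpu_gb)


if __name__ == "__main__":
    print("Pooled VRAM (8 x 24GB):", total_vram_gb(8, 24), "GB")
    for precision in BYTES_PER_PARAM:
        print(f"70B @ {precision}: {weights_gb(70, precision):.0f} GB,",
              "fits" if fits(70, precision) else "does not fit")
```

Under these assumptions, a 70B-parameter model at 16-bit precision needs about 140GB for weights, which fits in the eight-GPU configuration but not in a four-GPU one; real deployments need additional room for context.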

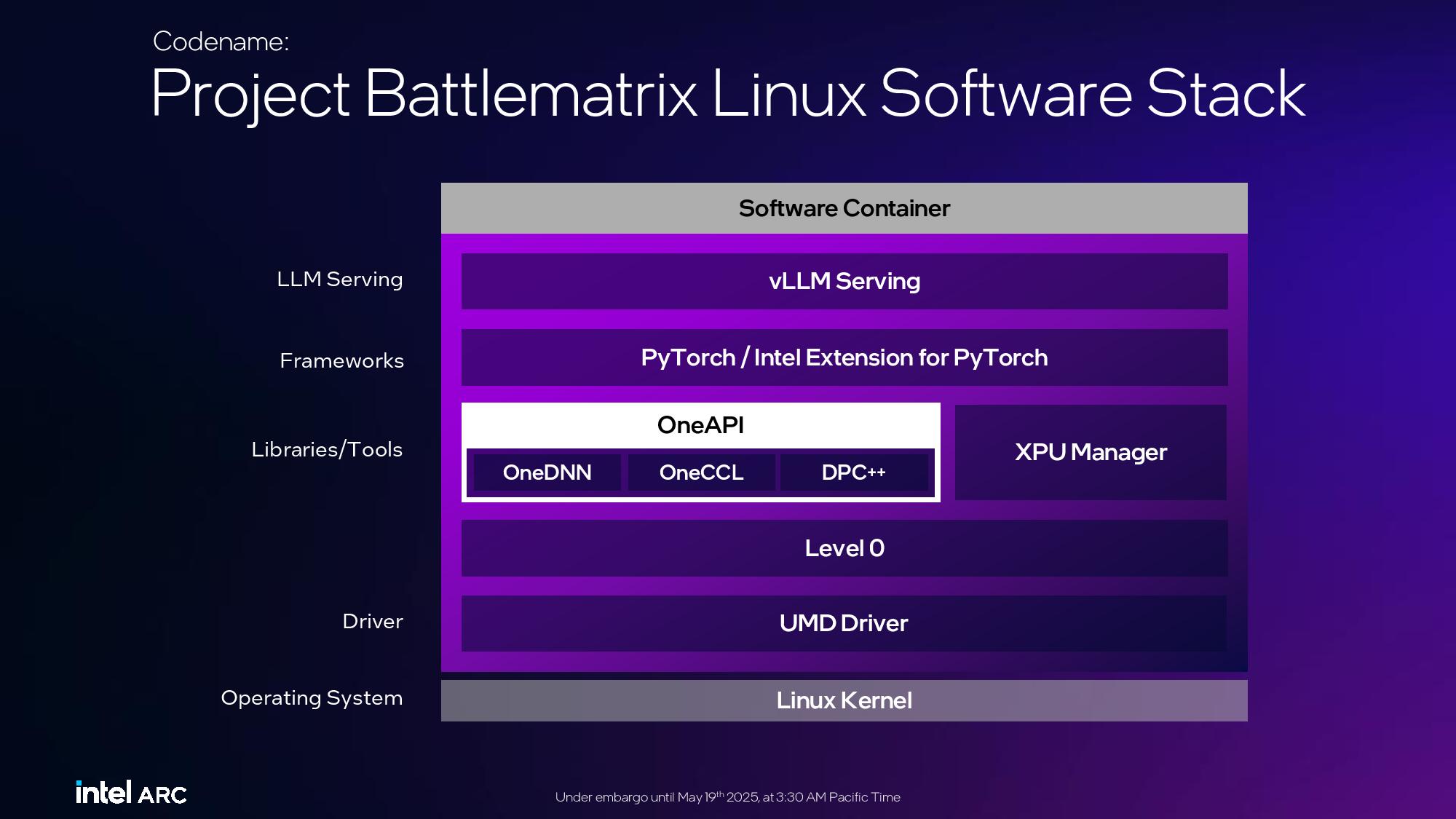

Intel is working to deliver a validated, full-stack containerized Linux solution that includes everything needed to deploy a system, including performance-optimized drivers, libraries, tools, and frameworks, allowing customers to hit the ground running with a simple install process. Intel will roll out the new containers in phases as its initiative matures.

Intel also shared a roadmap of the coming major milestones. The company is currently in the enablement phase, with ISV certification and the first container deployments coming in Q3, eventually progressing to SRIOV, VDI, and manageability software deployment in Q4.

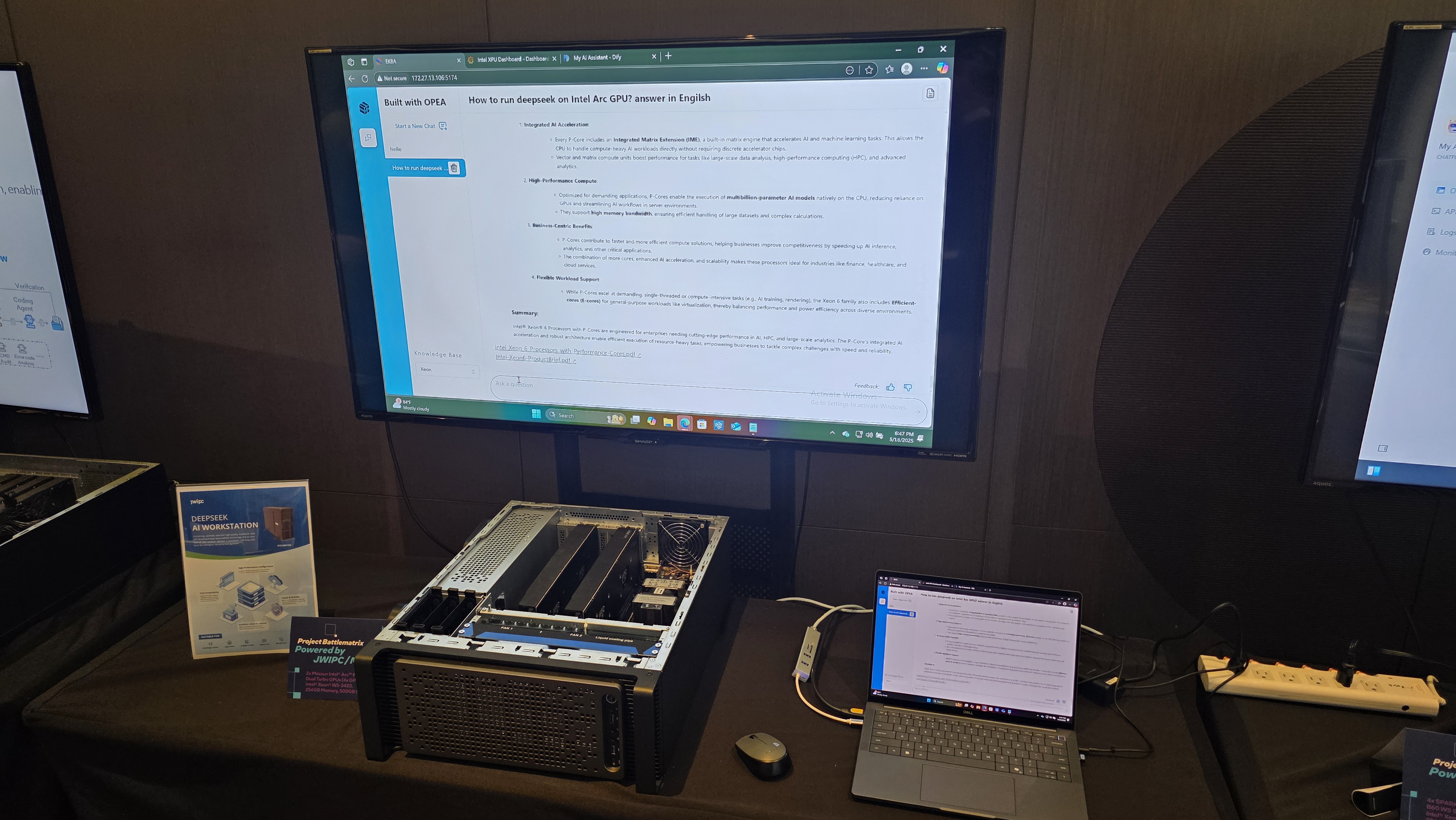

Intel's partners had multiple Project Battlematrix systems up and running live workloads in the showroom, highlighting that development is already well underway.

One demo included a system running the full 671B parameter DeepSeek model entirely on a single eight-GPU system, with 256 experts running on the CPU and the most frequently used experts running on the GPU.

Other demos included running and finding bugs in code, an open enterprise platform for building RAGs quickly, and a RAG orchestration demo, among others.

As noted above, the Intel Arc Pro B50 and Intel Arc Pro B60 will arrive on the market in the third quarter of 2025.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Mindstab Thrull

IF it ends up being a good AI GPU, you could fit four in a typical PC chassis as long as your motherboard has sufficient slots. Four PCIe slots means 96GB VRAM, which is a lot for handling AI on a home server, and would only be about $2000 for the GPUs. Sounds like fun!

... Calling Wendell at Level1Techs? :)

abufrejoval

Mindstab Thrull said:
> IF it ends up being a good AI GPU, you could fit four in a typical PC chassis as long as your motherboard has sufficient slots. Four PCIe slots means 96GB VRAM, which is a lot for handling AI on a home server, and would only be about $2000 for the GPUs. Sounds like fun!
>
> ... Calling Wendell at Level1Techs? :)

Unfortunately GPUs don't scale like CPUs, and even with CPUs scaling was never easy or free (Amdahl's law).

So just taking four entry-level GPUs to replace one high-end variant doesn't work out of the box, even with a model carefully tailor-made for that specific hardware: the bandwidth cliff between those GPUs slows performance to CPU-only levels, which are basically PCIe bandwidth levels, mostly.

There is a reason Nvidia can sell those NVLink switching ASICs for top dollar: they provide the type of bandwidth it takes to somewhat moderate the impact of going beyond a single device.
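For readers unfamiliar with the Amdahl's law reference in the comment above, here is a minimal sketch of the formula, speedup = 1 / ((1 - p) + p / n), where p is the fraction of work that parallelizes and n is the number of devices. The 90% parallel fraction in the example is a made-up illustration, not a measurement of any GPU workload, and the formula does not model the inter-GPU bandwidth limits the comment describes, which would reduce the result further.

```python
# Amdahl's law: the upper bound on speedup when only part of a workload
# can be spread across multiple devices.

def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Return the ideal speedup for `workers` devices.

    parallel_fraction: share of the work (0.0 to 1.0) that can run in parallel.
    workers: number of devices sharing the parallel part (>= 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Hypothetical workload where 90% of the time parallelizes perfectly.
    for n in (1, 2, 4, 8):
        print(f"{n} device(s): {amdahl_speedup(0.9, n):.2f}x")
```

Even in this idealized case, four devices yield roughly a 3.1x speedup rather than 4x, and communication overhead is not yet counted.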

abufrejoval

I predict a future as bright and long as Xeon Phi, only much accelerated.

This tastes much more like a desperate attempt to moderate the fall of stock prices than honest delusions, of which Intel had aplenty.

Not even the best and greatest single shot product really has much of a chance in that market. Unless the vast majority of potential buyers truly believe that you have a convincing multi-generation roadmap which you'll be able to execute, nobody is going to invest much thought, let alone commit budgets and people in that direction, just look at how hard it is already for AMD.

And they have a little more to offer than one entry-level GPU chip that fails to sell even with high-priced competition.

jlake3

baboma said:
> Good to see Intel taking the lead on dedicated AI GPUs for the consumer market. Pricing is pretty amazing. Hope to see more coverage of these once they come to market.

With all due respect, I’m not sure how this is consumer, AI-dedicated, or leading?

B50/B60 are explicitly part of the workstation lineup, and run professional drivers

The standard display pipelines are enabled, and they’re equipped with the normal amount of outputs

2025Q3, with “full enablement” in Q4, is hardly first to the party when it comes to using workstation GPUs for AI

Two B60s on one PCB is a neat twist that was only being done in rack mount servers and not tower workstations, but if one B60 costs $500 I’d expect the dual version to cost 2x plus a premium for complexity and density.

abufrejoval

baboma said:
> > There is a reason Nvidia can sell those NVLink switching ASICs for top dollar,
>
> No one would argue that Intel B-series would be competitive with Nvidia AI products in the pro market. It's a product for a different market, being priced at thousands of dollars as vs tens or hundreds of thousands.
>
> There's groundswell of enthusiast and startup interest in client-side LLMs/AIs. As of now, there's really no product that serves that segment as vendors (Nvidia mostly) are busy catering to the higher-end segment. So it's not a question of high vs low, but more a question of something vs nothing.
>
> I put your concern to a Perplexity query, which follows (excuse the verbiage). I don't pretend to be familiar with the minutiae of the processes mentioned, but it's fairly evident that speed concerns can be optimized to some degree, and what's offered is better than what's available now.
>
> =====
>
> GPUs do not scale as easily as CPUs due to bandwidth and communication bottlenecks--especially when using PCIe instead of high-bandwidth interconnects like NVLink. Simply combining multiple entry-level GPUs rarely matches the performance of a single high-end GPU, because the inter-GPU communication can become a major bottleneck, negating much of the potential speedup.
>
> How vLLM Addresses This Challenge
>
> vLLM implements several strategies that can help mitigate these scaling limitations:
>
> 1. Optimized Parallelism Strategies
>
> 2. Memory and Batching Optimizations
>
> 3. Super-Linear Scaling Effects
>
> 4. Practical Recommendations
>
> - For best scaling, use high-bandwidth GPU interconnects (NVLink, InfiniBand) if available.
>
> - On systems limited to PCIe, prefer pipeline parallelism across nodes and tensor parallelism within nodes to reduce communication overhead.

I have been very much into evaluating the potential of AIs for home use as part of my day job. And for a long time I've been excited about the fact that a home-assistant can afford to be rather less intelligent than an AI that's supposed to replace lawyers, doctors, scientists or just programmers: most servants came from a rather modest background and were only expected to perform a very limited range of activities, so even very small LLMs which could fit into a gaming GPU might be reasonable and able to follow orders over the limited domain of your home.

But the hallucinations remain a constant no matter what model size, and very basic facts of life and the planet are ignored to the point where I wouldn't trust AIs to control my light switches.

The idea that newer and bigger models would heal those basic flaws has been proven wrong for several generations already and across the range of 1-70B, which hints at underlying systematic issues.

Perhaps there could be a change at 500B or 2000B, but I have no use for the "smarts" a model like that would provide. Certainly the value gained wouldn't offset the expense, and that's only if hallucinations could indeed be managed: without a change in the approach, there is no solution in sight. Reasoning and mixture-of-experts models aren't really doing better and walk off a hallucination cliff with invented assumptions.

Note that all of the above approaches described by Perplexity address model design and model training, which no end-user can afford to do: that would be like doing genetic engineering to create perfect kids for doing chores.

The best you can realistically do for your private AI servant is to get open source models and then provide them with all the context they need to serve you, via RAG or whatever.

And those models won't come tailor-made for Intel Battlematrix; at best Intel might publish one or two demo variants as "proof". What would pay for the effort of just keeping a well-known open source model even compatible with this niche and complex hardware base? Let alone something new at the level of a Mistral, Phi, Llama or DeepSeek?

baboma said:
> - Tune batch sizes and memory allocation parameters to maximize utilization without overloading communication channels.
>
> Accept that some inefficiency is unavoidable with entry-level hardware and slow interconnects, but vLLM's optimizations will help you get closer to optimal performance than naive multi-GPU setups.

1% improvement per GPU is already "closer", just not enough value return on the investment: at this point AIs are resorting to tautologies.

baboma said:
> =====
>
> > This tastes much more like a desperate attempt to moderate the fall of stock prices than honest delusions, of which Intel had aplenty.
>
> Wow. Now you are veering into conspiracy theory and juvenile fanboy territory. So much for hopes of a productive talk.

With 45 years as an IT professional, 20 of those in technical architecture, and the last 10 years in technical architecture for AI work in a corporate research lab, I'm not quite juvenile any more, nor am I much of a fan, or boy, or fanboy: don't mistake my harshness here for ignorance.

I've followed Intel's 80432, the first graphics processor they licensed from NEC during 80286 times, the "Cray on a chip" iAPX850, Itanium and Xeon Phi working in HPC publicly funded research institutes during my thesis and later as part of a company that manufactured HPC computers. I've met and known the guys who designed them for 15 years.

I've had the privilege of being able to put technology which I was enthusiastic about to the test and getting paid for that.

Surprises do happen, but e.g. with regards to the potential of AMD's Zen revival, my prediction (of success) actually proved some of those people wrong.

Most importantly, I've run and benchmarked AI models for performance and scalability myself and supported a much larger team of AI researchers and model designers to do that across many AI domains, not just LLMs.

But I've also done significant LLM testing for the last 2 years, again with a focus on scalability, but also the quality impact of distinct numerical formats for weight representation, quantizations and model size.

That experience has made me very much a sceptic, and that's not the result I was hoping for: I really want my AI servants! But I want them to be loyal, valuable, and not to kill me before I tell them to.

So I feel rather comfortable in my prediction, ...or rather quite a lot of discomfort at how far off any plausible path to success Intel is straying here: desperation becomes the more likely explanation than sound engineering.

But let's just revisit this "product" in 1/2/5 years and see who projected its success better, ok?

cyrusfox

abufrejoval said:
> I predict a future as bright and long as Xeon Phi, only much accelerated.
>
> This tastes much more like a desperate attempt to moderate the fall of stock prices than honest delusions, of which Intel had aplenty.
>
> Not even the best and greatest single shot product really has much of a chance in that market. Unless the vast majority of potential buyers truly believe that you have a convincing multi-generation roadmap which you'll be able to execute, nobody is going to invest much thought, let alone commit budgets and people in that direction, just look at how hard it is already for AMD.
>
> And they have a little more to offer than one entry-level GPU chip that fails to sell even with high-priced competition.

Disagree here: there are certain workloads (LLMs) which are memory hungry (higher-precision models), and the Battlemage architecture supplies sufficient throughput to make this a viable product, especially in the price range these are marketed at ($300 for a B50). The B580 is capable of 30 tokens per second. Compare that to ChatGPT, which charges ~$1 per million tokens: a B580 would produce 30*3600*24 = ~2.6 million tokens a day, so the equivalent of ~$2.60. It would roughly pay for itself in ~100 days, with a slower payoff if electricity cost is factored in. This has a lot of parallels to crypto workloads.

These professional cards come with more VRAM by default, and the B50 should be capable of 24 tokens/s, the B60 the same as the B580, and the dual version double that (it scales quite well as long as bandwidth between units is high).

No one else is offering this much memory bandwidth at this price. Intel is serving an unfulfilled market, and these should be as hard to find as a B580 due to the lack of competition. While they won't game as well as the B570 or especially the B580, they will perform exceptionally well for professional workloads as long as you don't need CUDA. As these are 1/4 or less of the price of the competing RTX Pro, these should do quite well.
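The back-of-envelope payback estimate in the comment above can be reproduced with a few lines. This is only a sketch of that arithmetic: the token rates and the ~$1 per million tokens figure are the commenter's numbers, not measured values, and the calculation assumes the card generates tokens around the clock and ignores electricity. The function names are illustrative.

```python
# Reproduces the commenter's payback arithmetic.
# Assumptions: 100% utilization, no electricity or host-system cost,
# and an API price expressed in USD per million tokens.

SECONDS_PER_DAY = 24 * 3600


def tokens_per_day(tokens_per_second: float) -> float:
    """Tokens generated in one day of continuous output."""
    return tokens_per_second * SECONDS_PER_DAY


def daily_value_usd(tokens_per_second: float, usd_per_million_tokens: float) -> float:
    """What one day of tokens would cost at the given API price."""
    return tokens_per_day(tokens_per_second) / 1_000_000 * usd_per_million_tokens


def payback_days(card_price_usd: float, tokens_per_second: float,
                 usd_per_million_tokens: float) -> float:
    """Days of continuous use until the token value equals the card price."""
    return card_price_usd / daily_value_usd(tokens_per_second, usd_per_million_tokens)


if __name__ == "__main__":
    print(f"30 tok/s -> {tokens_per_day(30):,.0f} tokens/day")
    print(f"30 tok/s at $1/M -> ${daily_value_usd(30, 1.0):.2f}/day")
    # B50 at the article's $299 price and the commenter's 24 tok/s estimate.
    print(f"$299 card at 24 tok/s -> {payback_days(299, 24, 1.0):.0f} days")
```

At 30 tokens per second the daily figure comes to 2,592,000 tokens; a $299 card at the commenter's 24 tokens per second would take roughly 144 days under the same assumptions.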

User of Computers

cyrusfox said:
> Disagree here: there are certain workloads (LLMs) which are memory hungry (higher-precision models), and the Battlemage architecture supplies sufficient throughput to make this a viable product, especially in the price range these are marketed at ($300 for a B50). The B580 is capable of 30 tokens per second. Compare that to ChatGPT, which charges ~$1 per million tokens: a B580 would produce 30*3600*24 = ~2.6 million tokens a day, so the equivalent of ~$2.60. It would roughly pay for itself in ~100 days, with a slower payoff if electricity cost is factored in. This has a lot of parallels to crypto workloads.
>
> These professional cards come with more VRAM by default, and the B50 should be capable of 24 tokens/s, the B60 the same as the B580, and the dual version double that (it scales quite well as long as bandwidth between units is high).
>
> No one else is offering this much memory bandwidth at this price. Intel is serving an unfulfilled market, and these should be as hard to find as a B580 due to the lack of competition. While they won't game as well as the B570 or especially the B580, they will perform exceptionally well for professional workloads as long as you don't need CUDA. As these are 1/4 or less of the price of the competing RTX Pro, these should do quite well.

I agree here

User of Computers

abufrejoval said:
> This tastes much more like a desperate attempt to moderate the fall of stock prices than honest delusions, of which Intel had aplenty.

They have the silicon. Why not put in the software work and take a piece of a much bigger pie than the consumer low-end would ever offer?

thestryker

jlake3 said:
> Two B60s on one PCB is a neat twist that was only being done in rack mount servers and not tower workstations, but if one B60 costs $500 I’d expect the dual version to cost 2x plus a premium for complexity and density.

Given that it's a PCIe 5.0 x8 GPU which doesn't need a ton of power, I'm guessing the only things they share are power delivery, display output routing and cooling. That shouldn't add much of anything to the cost of manufacture, so I'd bet any premium would be based on market demand.