Nvidia GeForce Driver Promises Doubled Stable Diffusion Performance

Bringing Microsoft's Olive toolset into GeForce Machine Learning acceleration.

Nvidia today announced a new GeForce Game Ready Driver update that's bound to turn the head of anyone dabbling with local Stable Diffusion installations. The latest GeForce Game Ready Driver Release 532.03, which will be released later today, packs in learnings from Microsoft's Olive toolchain, a hardware-aware model optimization tool that aims to perfectly stitch your diffusion model's processing to your graphics cards' capabilities.

According to Microsoft, Olive has the ability to modify the base model according to the hardware capabilities available — whether in local installations, on the cloud, or on the edge. Olive is a way to abstract away all the different Machine Learning (ML) acceleration tool chains distributed by the different market players. Part of its mission, then, is to reduce market fragmentation around ML acceleration techniques.

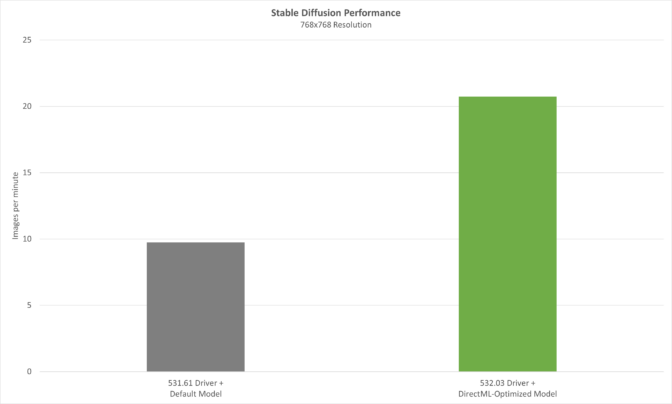

Nvidia says the new Olive integration into GeForce will allow for performance improvements in AI operations that leverage the fixed-function RTX Tensor cores present in Nvidia GPUs. Using an Olive-optimized version of the Stable Diffusion text-to-image generator (paired with the popular Automatic1111 distribution), performance improves by more than 2x.

“AI will be the single largest driver of innovation for Windows customers in the coming years,” said Pavan Davuluri, corporate vice president of Windows silicon and system integration at Microsoft. “By working in concert with Nvidia on hardware and software optimizations, we’re equipping developers with a transformative, high-performance, easy-to-deploy experience.”

There's no obvious reason AMD couldn't implement Microsoft's Olive toolset in its own graphics drivers, although it's currently unclear how much these optimizations actually depend on Tensor core performance (remember that AMD doesn't possess an equivalent hardware solution on board its RX 7000 GPU family). For now, it seems that Nvidia users in particular are poised for even better performance than can be extracted from AMD's GPUs.

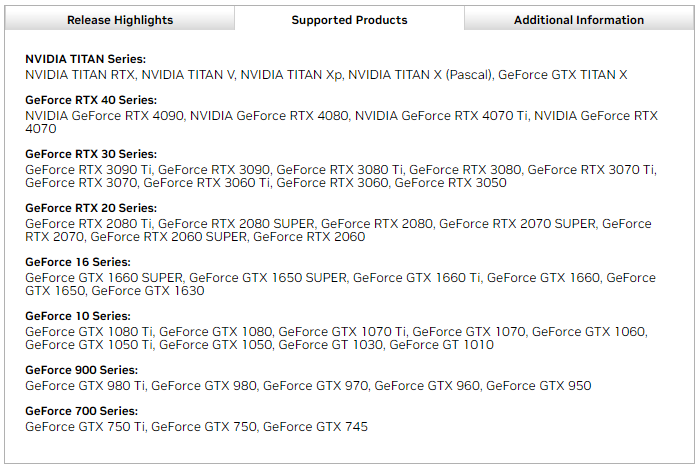

Interestingly, while Nvidia makes it clear that the RTX architecture's Tensor cores are mainly responsible for the cards' performance in ML acceleration, graphics cards listed for support with version 532.03 include cards starting from the GTX 700 family. Do any of these older architecture cards that don't sport any Tensor cores see a similar 2x improvement in ML acceleration? Okay, maybe not a GTX 700, but how about GTX 10-series and 16-series parts? If you have one of those and test this out, let us know in the comments.

Besides Microsoft's Olive integration, the latest GeForce Game Ready 532.03 drivers also bring support for the Nvidia RTX 4060 Ti, are Game Ready for Lord of the Rings: Gollum, and fix some known bugs in Age of Wonders 4 and Bus Simulator 21.


Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.


The 2x performance claim is certainly interesting, given we can more or less get 50% uplift with SD using hardware-specific optimized models.

Though it looks like this optimized version has to be executed through an ONNX pipeline which is not out of the box for SD webui as of now. Also interested to know the relative memory requirements between using the optimized pipeline vs the current SD pipeline, since they haven't mentioned this.

Kind of off topic, and not directly related to this news, but if anyone is interested now, for preliminary testing generative AI models, the Dolly 2.0 large language model is actually now on Hugging Face, which is also an Olive-optimized version.

NVIDIA's NeMo LLM for conversational AI is also coming soon, although there is no ETA.

It'd be interesting to see if these older-architecture cards that don't sport any Tensor cores see a similar 2x performance improvement in ML acceleration or not.

I don't think the older GTX cards can get a 2X performance uplift, unlike the RTX GPUs, since they obviously lack specific dedicated hardware.

JarredWaltonGPU

Looks like I have something else to try and figure out. Gotta talk to Nvidia and see what exactly is needed to get this speedup with Automatic1111's WebUI.

As for the older GTX cards, I'm also curious if there's any benefit from these drivers. Not even 2X, but maybe 10-25% faster? But the last time I tried Automatic1111 on a GTX 1660 Super, it was horribly slow — far slower than it ought to be!

Considering the RX 6600 manages over two images per minute, I'd expect a GTX 1660 Super to at least be able to do maybe one per minute. Last time I tried, I think I got about one 512x512 image every two and a half minutes!

JarredWaltonGPU said: Not even 2X, but maybe 10-25% faster?

That uplift sounds reasonable. Will you retest all the cards now and update the SD benchmark/performance analysis article?

jp7189

If you figure it out, I for one would love a "how to" article so I can follow along at home.

jp7189

I wonder if training speed can be increased as well? I would definitely welcome a 2x boost there.

The training speed will actually depend on many other factors as well. But I will let Jarred confirm this.


Gerosu

I tested it on my GTX 1080.

Installed new drivers, optimized the ONNX model.

But the generation time has not changed. Tested at 512x512, 50 steps, 1 image. It took 24 seconds before and 24 seconds after.

I opened an issue on GitHub and hope someone there can explain why nothing changed, or whether the model simply does not improve on cards without RT cores.
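For anyone comparing their own results against the figures quoted in this thread, the arithmetic can be sketched in a few lines of Python. This is only a rough illustration: the function and variable names are made up for this example, and the inputs are the self-reported, approximate numbers from the comments above (24 seconds for 50 steps on a GTX 1080, roughly one image every 2.5 minutes on a GTX 1660 Super, and "over two images per minute" on an RX 6600, with test settings that may not match).

```python
# Rough sanity check of the timings quoted in the comments.
# All names here are illustrative; inputs are self-reported and approximate.

def steps_per_second(steps: int, seconds: float) -> float:
    """Sampling throughput for one image generation run."""
    return steps / seconds

def speedup(before_seconds: float, after_seconds: float) -> float:
    """Values above 1.0 mean the run got faster; 1.0 means no change."""
    return before_seconds / after_seconds

def images_per_minute(minutes_per_image: float) -> float:
    """Convert time per image into images per minute."""
    return 1.0 / minutes_per_image

# GTX 1080: 50 steps at 512x512 took 24 s before and after the driver update.
gtx1080_rate = steps_per_second(50, 24.0)
gtx1080_speedup = speedup(24.0, 24.0)

# GTX 1660 Super: about one image every 2.5 minutes (approximate).
gtx1660s_rate = images_per_minute(2.5)

# RX 6600: "over two images per minute", so 2.0 is treated as a lower bound.
rx6600_vs_gtx1660s = 2.0 / gtx1660s_rate

print(f"GTX 1080: {gtx1080_rate:.2f} steps/s, speedup {gtx1080_speedup:.2f}x")
print(f"GTX 1660 Super: {gtx1660s_rate:.2f} images/min")
print(f"RX 6600 is at least {rx6600_vs_gtx1660s:.1f}x the GTX 1660 Super rate")
```

A speedup of 1.00x on the GTX 1080 matches the "no change" report, while a 2x claim would need the same run to finish in about 12 seconds.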