Microsoft’s Speech Recognition Tech Achieves Human Parity--Sort Of

Microsoft announced that its speech recognition technology has achieved a word error rate (WER) of only 5.9%, which the company said was similar to what human transcribers are able to achieve.

Historic Achievement In Word Error Rates

The company also said many have sought this milestone since the early 1970s, when DARPA began researching speech recognition technology in the interest of national security.

“We’ve reached human parity,” said Xuedong Huang, the company’s chief speech scientist. “This is an historic achievement.”

Microsoft has steadily improved its speech recognition technology: just last month it hit a WER of 6.3%, which isn’t that far from the 5.9% it achieved this month. However, the 5.9% milestone has much more significance because it’s as low as the error rate for human transcribers, and it’s the first time any company has reached it.

Human-Level WER, But Achieved Differently

Microsoft is indeed right that reaching this low WER is a significant milestone. However, just as CPU benchmarks that return a total score don’t tell you the whole story about a chip’s performance, neither does the “Switchboard” (SWB) benchmark Microsoft used to compare its software against human transcribers.

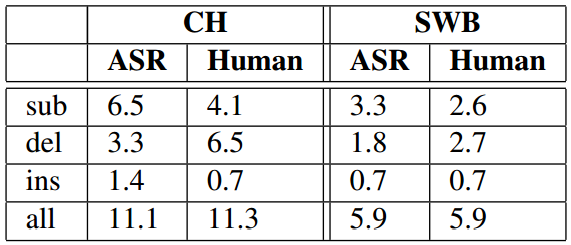

As the table from Microsoft’s paper shows, the overall WER may be exactly the same for humans and the company’s automatic speech recognition (ASR) system, but the picture is quite different when you look deeper. The deletion rate is significantly lower for the ASR system than for humans; for substitutions, the situation reverses.

"Substitution" in this case refers to a spoken word being replaced with a different word in the transcript. "Deletion" refers to a spoken word being left out of the transcript entirely, and "insertion" refers to a word appearing in the transcript that was never spoken.
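To make the three error types concrete, here is a minimal Python sketch of how a word error rate can be computed and broken down. It is not Microsoft's scoring code; it assumes the conventional definition, WER = (substitutions + deletions + insertions) / number of words in the reference transcript, and the function name `wer_breakdown` and the sample sentences are made up for illustration.

```python
def wer_breakdown(reference: str, hypothesis: str) -> dict:
    """Align two transcripts word by word and count each error type.

    Illustrative sketch only: uses a plain Levenshtein alignment over
    whitespace-separated words, with no text normalization.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    n, m = len(ref), len(hyp)
    # cost[i][j] = fewest edits needed to turn ref[:i] into hyp[:j]
    cost = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        cost[i][0] = i  # delete every reference word
    for j in range(1, m + 1):
        cost[0][j] = j  # insert every hypothesis word
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            diag = cost[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            cost[i][j] = min(diag, cost[i - 1][j] + 1, cost[i][j - 1] + 1)

    # Walk back through the table to label each edit.
    subs = dels = ins = 0
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and cost[i][j] == cost[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1]):
            if ref[i - 1] != hyp[j - 1]:
                subs += 1  # spoken word replaced by a different word
            i -= 1
            j -= 1
        elif i > 0 and cost[i][j] == cost[i - 1][j] + 1:
            dels += 1  # spoken word missing from the transcript
            i -= 1
        else:
            ins += 1  # transcript word that was never spoken
            j -= 1

    return {
        "substitutions": subs,
        "deletions": dels,
        "insertions": ins,
        "wer": (subs + dels + ins) / n,
    }


# Hypothetical example: one deletion ("have"), one substitution
# ("parity" -> "parody"), and one insertion ("today").
result = wer_breakdown(
    "we have reached human parity",
    "we reached human parody today",
)
print(result)
```

Two transcripts can arrive at the same `wer` value with very different mixes of the three counts, which is the gap between the ASR system and human transcribers that the table shows.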


In another conversational telephone speech benchmark, CallHome (CH), the ASR system makes significantly more substitutions and insertions than humans, but fewer deletions. The overall WER is again similar (11.1% for the ASR system and 11.3% for human transcribers), although it’s higher than in the Switchboard test for both.

WER Parity, Not True Human Parity

Even if the word error rates were identical in every way, it still wouldn’t mean that machine speech recognition is just as good as a human's. Even if the number of word errors that machines make is on par with humans, machines can still make significantly different kinds of errors. Therefore, sentences transcribed by a machine could be much more confusing to humans than they would be if other humans transcribed them, even if the error rate is the same.
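A small Python sketch can illustrate why equal error rates don't imply equal quality. The sentences below are invented for this example and do not come from Microsoft's paper or the benchmarks; `word_errors` is a hypothetical helper that counts word-level edits with a standard Levenshtein distance.

```python
def word_errors(reference: str, hypothesis: str) -> int:
    """Count the minimum number of word substitutions, deletions,
    and insertions needed to turn the reference into the hypothesis."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))  # edits from an empty reference
    for i, r in enumerate(ref, 1):
        curr = [i]
        for j, h in enumerate(hyp, 1):
            curr.append(min(
                prev[j - 1] + (r != h),  # match or substitution
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
            ))
        prev = curr
    return prev[-1]


# Invented example sentences (assumptions, not benchmark data).
reference = "i do not want to cancel my order"
harmless = "i do not want to cancel the order"  # one substitution, meaning intact
harmful = "i do want to cancel my order"        # one deletion, meaning reversed

n_words = len(reference.split())
wer_harmless = word_errors(reference, harmless) / n_words
wer_harmful = word_errors(reference, harmful) / n_words
print(wer_harmless, wer_harmful)
```

Both transcripts contain exactly one word error out of eight reference words, so they score the same WER, yet only one of them preserves what the speaker meant.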

For instance, Microsoft’s paper also noted that the ASR system confused “backchannel” words such as “uh-huh,” which acknowledges what the other speaker is saying, with hesitations such as “uh,” which marks a pause before the speaker continues. Humans don’t make these mistakes because they know intuitively what these spoken words represent.

Speech Recognition Keeps Getting Better

Human speech recognition isn’t perfect either, as the Switchboard and CallHome benchmarks show. Machine learning-based speech recognition may not yet be quite as good as humans in real-world usage, but the fact that word error rates are now similar means that speech recognition software is getting close to achieving true human parity, or even surpassing humans.

These latest improvements also mean that Microsoft’s services that take advantage of speech recognition, such as Cortana, are going to become more useful and less frustrating to use. Microsoft's latest achievement, along with Google's recently announced near-human level accuracy in machine translation, synthetic speech generation that sounds almost as good as humans, and better-than-human image recognition, all show that we're living in a time when machines are beginning to truly understand humans and the world around us.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

Icepilot "... we're living in a time when machines are beginning to truly understand humans and the world around us."

One word too far, truly.

stuartturner34 “We’ve reached human parity,” said Xuedong Huang, the company’s chief speech scientist. “This is an historic achievement.”

A typo in a quote of a scientist talking about word error ratings. So meta.

alextheblue, replying to an earlier comment ("I wonder how that thing will work when plugged into siri on a noisy subway?"):

That depends on your audio hardware/software more than anything. For example on a PC, it would depend on the type and quality of the microphone / mic array, the sound card, audio drivers, recording software, etc. There's a couple of places where there's opportunities for noise cancellation, depending on the gear and ware used. The result gets handed to this translation software, garbage in garbage out - you have to feed it good audio for it to do its job. The situation isn't all that different for a smartphone. Unfortunately the iPhone probably wouldn't do the best job compared to a smartphone with a HAAC twin membrane quad-mic array.

Kafantaris Microsoft's AI driven voice recognition has left all rivals in the dust. Great work by Dr. Xuedong Huang's speech team.

bit_user Good job digging into the error rates, Lucian.

Replying to an earlier comment ("using super computers or normal pc ?"): This is the question I had. How much compute does it use? It's not a small detail whether this requires a long time on a big GPU, or whether it can run on a smartphone in realtime. If too much compute is required, then this won't be deployed in most real-world use cases for years.

BTW, humans are still way more energy efficient.

bit_user, replying to stuartturner34's comment ("A typo in a quote of a scientist talking about word error ratings. So meta."):

It would be, but where's the error?