Nvidia G-SYNC Fixes Screen Tearing in Kepler-based Games

Nvidia has introduced a Kepler-powered chip that fixes V-Sync problems in monitors and games.

Nvidia's Tom Peterson has updated the company's blog with news of a new technology aimed at fixing the problems related to V-SYNC. With V-SYNC off, many games run at fast frame rates but suffer from annoying visual tearing; with V-SYNC on, many games stutter and lag. This has been a problem since the early '90s, and the GPU company set out to find a way around the stuttering problems related to monitor refresh rates.

"We brought together about two dozen GPU architects and other senior guys to take apart the problem and look at why some games are smooth and others aren't," he writes. "It turns out that our entire industry has been syncing the GPU's frame-rendering rate to the monitor's refresh rate – usually 60 Hz – and it's this syncing that's causing a lot of the problems."

He said that for historical reasons, monitors have fixed refresh rates of 60 Hz. That's because PC monitors originally used TV components, and here in the States, 60 Hz has been the standard TV refresh rate since the 1940s. Back then, the U.S. power grid ran on 60 Hz AC, so a matching 60 Hz refresh rate made TVs easier to build. The PC industry simply inherited the refresh rate.

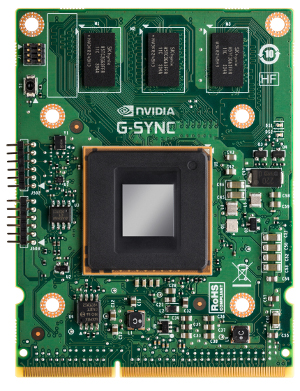

That's where the G-SYNC module comes in. The device will be built into a display and work with the hardware and software in certain Kepler GeForce GTX GPUs. Thanks to the module, the monitor begins a refresh cycle right after each frame is completely rendered on the GPU. And because the GPU's render time varies from frame to frame, the monitor's refresh no longer runs at a fixed rate.
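To make the difference concrete, here is a minimal Python sketch that compares when frames reach the screen under a fixed 60 Hz refresh with V-SYNC on and under a refresh driven by the GPU. It is an idealized model, not Nvidia's implementation: the function names and the sample render times are made up for illustration, the fixed-refresh case assumes simple double buffering, and the variable-refresh case ignores any limits a real panel might place on its refresh range.

```python
import math

REFRESH_HZ = 60.0                       # fixed refresh rate discussed in the article
REFRESH_INTERVAL_MS = 1000.0 / REFRESH_HZ


def vsync_display_times(render_times_ms):
    """Fixed refresh, V-SYNC on: a finished frame waits for the next refresh tick."""
    shown = []
    now = 0.0
    for render_ms in render_times_ms:
        done = now + render_ms
        # Round up to the next refresh boundary (small epsilon guards float error).
        ticks = math.ceil(done / REFRESH_INTERVAL_MS - 1e-9)
        tick_time = ticks * REFRESH_INTERVAL_MS
        shown.append(tick_time)
        # Assumption: double buffering, so the GPU starts the next frame at the swap.
        now = tick_time
    return shown


def variable_refresh_display_times(render_times_ms):
    """GPU-driven refresh: the display refreshes as soon as each frame is complete."""
    shown = []
    now = 0.0
    for render_ms in render_times_ms:
        now += render_ms
        shown.append(now)
    return shown


def frame_intervals(times):
    """Time between consecutive frames appearing on screen."""
    return [b - a for a, b in zip([0.0] + times[:-1], times)]


if __name__ == "__main__":
    renders = [14, 18, 15, 20]  # hypothetical per-frame render times in ms
    print(frame_intervals(vsync_display_times(renders)))
    print(frame_intervals(variable_refresh_display_times(renders)))
```

With these sample render times, the fixed-refresh intervals alternate between roughly 16.7 ms and 33.3 ms even though the render times differ by only a few milliseconds, which is the stutter described above. In the GPU-driven case the on-screen intervals simply equal the render times.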

"This brings big benefits for gamers. First, since the GPU drives the timing of the refresh, the monitor is always in sync with the GPU," Peterson writes. "So, no more tearing. Second, the monitor update is in perfect harmony with the GPU at any FPS. So, no more stutters, because even as scene complexity is changing, the GPU and monitor remain in sync. Also, you get the same great response time that competitive gamers get by turning off V-SYNC."

According to Nvidia's separate press release, many monitor manufacturers have already included G-SYNC technology in their product roadmaps for 2014. Among the first planning to roll out the technology are ASUS, BenQ, Philips and ViewSonic. Compatible Nvidia GPUs include GTX 650 Ti Boost, GTX 660, GTX 660 Ti, GTX 670, GTX 680, GTX 690, GTX 760, GTX 770, GTX 780 and GTX TITAN. The driver requirement is R331.58 or higher.

"With G-SYNC, you can finally have your cake and eat it too -- make every bit of the GPU power at your disposal contribute to a significantly better visual experience without the drawbacks of tear and stutter," said John Carmack, co-founder, id Software.


Kevin Parrish has over a decade of experience as a writer, editor, and product tester. His work focused on computer hardware, networking equipment, smartphones, tablets, gaming consoles, and other internet-connected devices. His work has appeared in Tom's Hardware, Tom's Guide, Maximum PC, Digital Trends, Android Authority, How-To Geek, Lifewire, and others.


dragonsqrrl I love how Nvidia has put so much effort over the past generation into not just the raw performance of a real-time experience, but also the quality of that real-time experience. While this is a solution that ultimately eliminates micro-stutter from real-time rendering entirely, I don't think it's a practical solution to the problem, yet.

Sid Jeong Does this mean we won't have to care about fps anymore?

Will 20 fps on 20hz g-sync'ed monitor look as smooth as 60 fps on regular monitor?

dragonsqrrl 11748710 said: Gonna hold off the monitor upgrade and wait to see this in action.

You can see it in action here:

http://www.guru3d.com/news_story/nvidia_announced_g_sync_eliminates_stutter_and_screen_tearing_with_a_daughter_module.html

Although from what I've heard the difference isn't as apparent in the video as it is in person. It's still pretty impressive though.

Nilo BP Call me crazy, but the only game in which I remember getting uncomfortable with screen tearing was the first Witcher. Everything else? Just turn off VSync and go on my merry way. From humble CS:GO to Tomb Raider with everything jacked up.

dragonsqrrl 11748732 said: Does this mean we won't have to care about fps anymore?

Will 20 fps on 20hz g-sync'ed monitor look as smooth as 60 fps on regular monitor?

Of course at a certain point a game won't look smooth no matter what. At 5 fps rendered graphics are going to look like a slideshow regardless (although those will be some clean, tear-free 5 frames per second), but I think G-Sync does give you much more flexibility with low frame-rates, but again only to a certain point. I'm willing to bet you could probably go as low as ~24 fps and still have a perfectly 'smooth' experience.

drezzz Another question is will this interfere with the lightboost hack or does this make lightboost hack unnecessary?

iam2thecrowe 11748732 said: Does this mean we won't have to care about fps anymore?

Will 20 fps on 20hz g-sync'ed monitor look as smooth as 60 fps on regular monitor?

no, 20fps is still 20fps, it can never look like 60fps. A game's fps is always variable, so with normal vsync, with a 60hz monitor for example, at 20fps, the monitor refreshes 3 times for one frame displayed, which is fine. But at 23fps, the monitor can't display this with vsync on, 23 cannot be divided into 60 evenly, so it drops down to 20fps to keep frames synced with the monitor, so you get a slight delay in the game engine before the frame is released to the monitor to keep things in sync (this is how you get stutter and input lag from your mouse/keyboard). Compare this with gsync: with a variable refresh rate synced to the frame rate, there is no delay, no fps drops, no stuttering, and the game engine/rendering doesn't have to slow down to keep things synced, so having similar fps will feel way smoother with g-sync. Personally, I can't wait to get a monitor that supports this, so long as they aren't ridiculously priced.
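The 23 fps arithmetic in the comment above can be sketched in a few lines of Python. This is a simplified model, assuming strict double-buffered V-SYNC and a constant render time per frame (other buffering schemes behave differently); the function name `vsync_effective_fps` is made up for illustration.

```python
import math


def vsync_effective_fps(render_fps, refresh_hz=60.0):
    """Frame rate actually shown when each frame must occupy a whole number of refreshes.

    Assumes double-buffered V-SYNC with a constant render time (a simplification).
    """
    # Refresh cycles needed to cover one frame's render time, rounded up.
    refreshes_per_frame = math.ceil(refresh_hz / render_fps - 1e-9)
    return refresh_hz / refreshes_per_frame


if __name__ == "__main__":
    for fps in (20, 23, 30, 45, 60):
        print(fps, "->", vsync_effective_fps(fps))
```

Under these assumptions a GPU rendering at 23 fps needs 60 / 23 ≈ 2.6 refreshes per frame, which rounds up to 3, so the screen shows 60 / 3 = 20 fps. Likewise, 45 fps drops to 30 fps.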

iam2thecrowe 11748905 said: Another question is will this interfere with the lightboost hack or does this make lightboost hack unnecessary? Very happy that I bought the Asus over the Benq in any case.

I would say below 50hz/fps with lightboost, things would become very flickery, so it probably isn't a good idea unless fps is high.

boju What's AMD going to do, implant their own chips as well to compete? Do they have an alternative I'm not aware of?

Sounds like what Gsync/Nvidia is trying to accomplish should have been done generically, no?