Nvidia Showcases Incredible Instant NeRF 2D to 3D Photo AI Processing

And it commemorates the 75th anniversary of the first Polaroid instant photo.


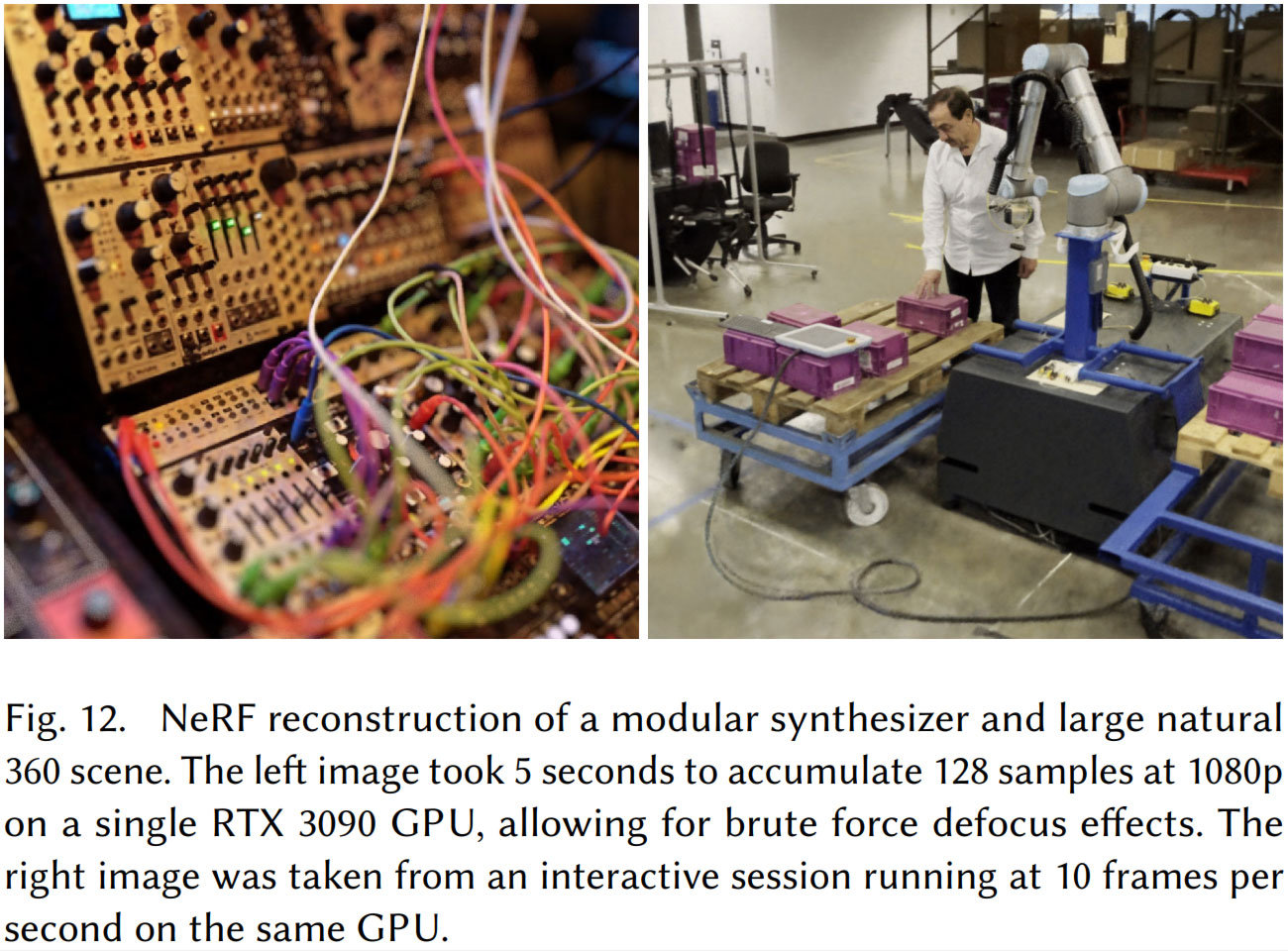

Nvidia researchers have developed an approach to reconstructing a 3D scene from a handful of 2D images "almost instantly." A new blog post describes the feat, which builds on a popular new technology called neural radiance fields (NeRF) and, according to Nvidia, runs up to 1,000x faster than rival implementations. Nvidia's processing speed is largely due to AI acceleration on Tensor Cores, which speeds up both model training and scene rendering. If you are interested but want a TL;DR, take a peek at the short video embedded directly below.

Providing some context to its demo, Nvidia says that previous NeRF techniques could take hours to train for a scene and then minutes to render target scenes. Though the results of previous slower implementations were good, Nvidia researchers leveraging AI technology have put a rocket into the performance, and hence Nvidia has the confidence to describe its tech as "Instant NeRF."

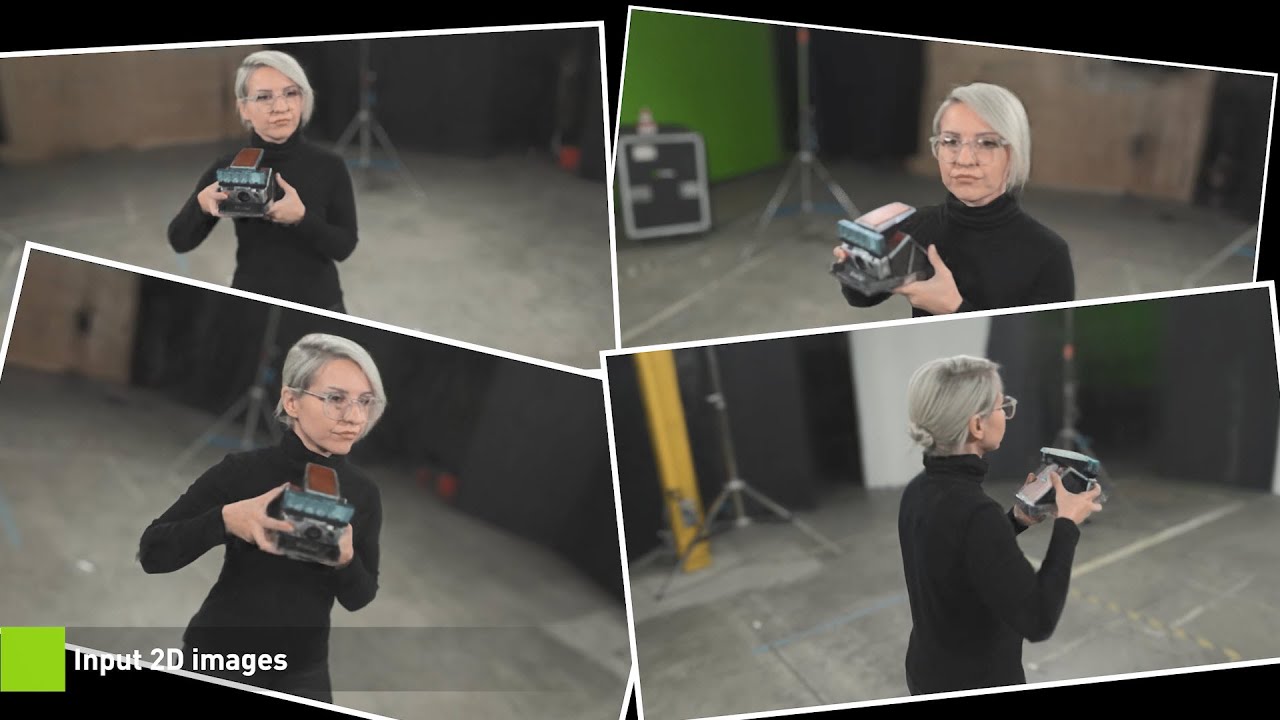

You have probably already guessed, but this NeRF tech uses neural networks to represent and render realistic 3D scenes based on an input collection of 2D images. The above video implies that just four snaps were required to create the 3D representation we see in motion. However, the blog might be more realistic in explaining that "the neural network requires a few dozen images taken from multiple positions around the scene, as well as the camera position of each of those shots." The neural network fills in the blanks of the full 360-degree scene and can predict the color of light radiating in any direction, from any point in 3D space, for added realism. Nvidia says the technique can work around occlusions.
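To make the "color of light radiating in any direction, from any point" idea a little more concrete, here is a minimal Python sketch of how a radiance field is typically turned into a pixel: sample points along a camera ray, ask the field for a color and a density at each one, and blend the results front to back. This is not Nvidia's code. The `toy_radiance_field` function is a hypothetical hand-written stand-in for the trained neural network, and the sample count and scene bounds are arbitrary assumptions chosen for illustration.

```python
import math

def toy_radiance_field(position, direction):
    """Hypothetical stand-in for a trained NeRF network (an assumption,
    not Nvidia's model). Returns (rgb, density) for a 3D point as seen
    from a given viewing direction. The "scene" is a unit sphere at the
    origin whose brightness depends on the viewing direction."""
    x, y, z = position
    inside = x * x + y * y + z * z <= 1.0
    density = 5.0 if inside else 0.0
    # View-dependent shading: brightest when looking along -z.
    shade = 0.5 + 0.5 * max(0.0, -direction[2])
    return (shade, 0.2 * shade, 0.2 * shade), density

def render_ray(origin, direction, near=0.0, far=4.0, n_samples=64):
    """Blend color along one camera ray, front to back.
    Returns (rgb, opacity) for the corresponding pixel."""
    step = (far - near) / n_samples
    transmittance = 1.0  # fraction of light not yet blocked
    pixel = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * step  # midpoint of the i-th segment
        point = tuple(o + t * d for o, d in zip(origin, direction))
        rgb, density = toy_radiance_field(point, direction)
        alpha = 1.0 - math.exp(-density * step)  # opacity of this segment
        weight = transmittance * alpha
        for c in range(3):
            pixel[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
    return tuple(pixel), 1.0 - transmittance

if __name__ == "__main__":
    # One ray that hits the sphere and one that misses it.
    print(render_ray((0.0, 0.0, 2.0), (0.0, 0.0, -1.0)))
    print(render_ray((0.0, 3.0, 2.0), (0.0, 0.0, -1.0)))
```

In a real NeRF the field is learned from the input photos and their camera positions, and a full image needs one such ray per pixel, which is why the speed of each network query matters so much.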

With Instant NeRF, Nvidia can render a full 3D scene as described above in tens of milliseconds. That is impressive, but what use could it be? David Luebke, VP for graphics research at Nvidia, has big hopes for the technology. "If traditional 3D representations like polygonal meshes are akin to vector images, NeRFs are like bitmap images: they densely capture the way light radiates from an object or within a scene," said Luebke. "In that sense, Instant NeRF could be as important to 3D as digital cameras and JPEG compression have been to 2D photography, vastly increasing the speed, ease and reach of 3D capture and sharing."

Other uses foreseen for Instant NeRF tech include providing help for robots and autonomous vehicles to understand the size and shape of real-world objects from incomplete data. Instant NeRF might also be of value where speed is of the essence for virtual environment design or even architecture. It also will surely be of interest to gaming and VR / metaverse developers.

If you want to read about Instant NeRF in more depth, Nvidia Research has published a paper called Instant Neural Graphics Primitives with a Multiresolution Hash Encoding. Last but not least, you can also download, train and run the demo code, which is available from the same GitHub page as the paper.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech, from business and semiconductor design to products approaching the edge of reason.


cryoburner

Jimbojan said: It has little use for most people.

Something like this could be used for easily capturing navigable 3D environments using standard camera equipment. For example, a real-estate company could walk through a home while recording video of each room, then let that get automatically converted into a 3D model of the house. A potential buyer could then navigate through a number of houses from home through their web browser, or even in VR, to get a better feel for each of them than what a collection of photos can provide. The same process could be used for creating a model to base renovations off of, with potential changes to a room tested out in real-time. And people might just want to take 3D photos capturing a scene in its entirety, like a panorama, but with the ability to move around within that space. Or perhaps a game or virtual chat program could allow a person to appear in the virtual environment as they appear in person.

Though as far as their demonstration video went, it wouldn't exactly be practical to have a subject pose perfectly still while you take numerous photos of them from every direction. And for that matter, I suspect the system would get confused if you tried to do that outside a controlled environment, with other people walking around and trees blowing in the wind, and so on. AI algorithms might be able to filter those things out, but it's probably not going to be happening in near-real-time on standard computer hardware, at least not yet.

jp7189

Jimbojan said: It has little use for most people.

Photogrammetry is used for many things... real estate, crime scene reconstruction, factory upgrades... heck, anywhere you have complex equipment far away from the design team. You send a guy with a camera to capture the scene and then the design team can figure out what new equipment fits in what spaces.

But it's finicky to get the images captured properly and is slow to reconstruct. What that means for the workflow is you capture images in the field and hope you got enough of the right areas in the right ways. Then you bring them back to the lab to reconstruct the scene, which takes minutes to hours... only to find out something wasn't right with the capture. Sometimes it's not possible to go back to the scene, or the scene has changed enough that you have to recapture the entire thing again, and sometimes you don't recapture but instead spend days or weeks manually tweaking to get the render right.

If this tech can render instantly in the field on a commodity laptop, then that would be a game changer and very valuable to the right industries.

Geef

It won't take long for ladies to dress up in a dress they saw a movie star in and then take several front pics of themselves and then use the rear pic of the movie star to make their butt look nice. :ROFLMAO:

Chung Leong

Jimbojan said: It has little use for most people.

Most people watch porn, where this tech has obvious application.

Pollopesca

So essentially this is just photogrammetry boosted by AI. It does have use in many 3D development fields. The downside to this method is the topology is usually a garbled mess. So you may get a solid texture out of it, but the geometry will need to be reworked if efficiency is a concern. Then again, looking at how Unreal 5 handles high-density geometry, this may become less of an issue.

Matt_ogu812

Why does this model have a 'I Don't Want To Be Here' look on her face?

3D someone else's face in place of hers is priority one.

cryoburner

Matt_ogu812 said: Why does this model have a 'I Don't Want To Be Here' look on her face? 3D someone else's face in place of hers is priority one.

They probably made her stand perfectly still holding that heavy camera at an awkward angle for hours as they gathered all the photos they needed for this. :P