OEM Exclusive RTX 3050 Confirmed With Cutdown Specs

Thankfully, the cuts to core count and core clocks appear to be minor.

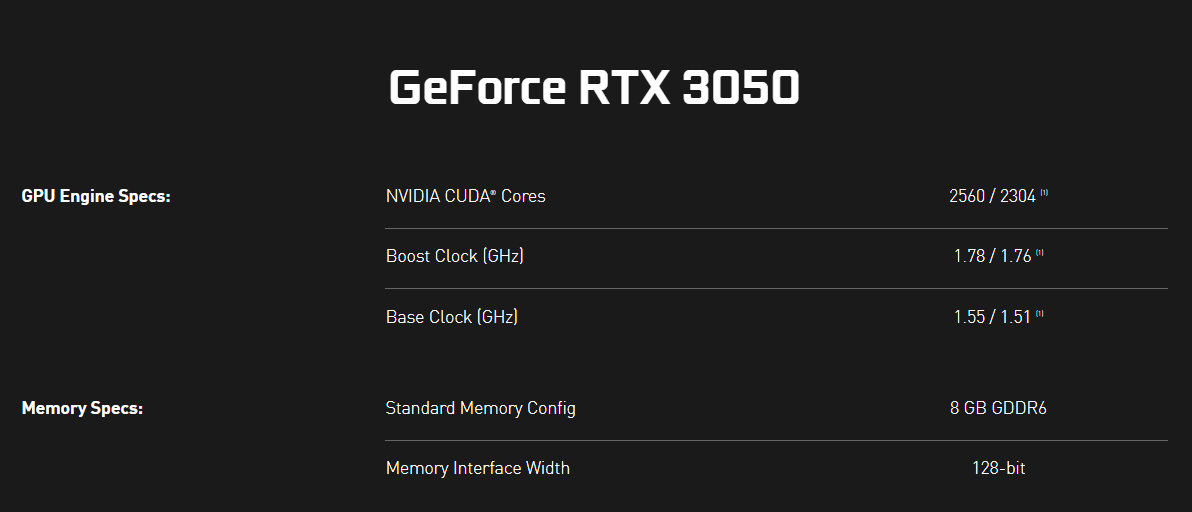

Nvidia has silently confirmed the release of a new RTX 3050 variant today, but it's a slower model aimed at the OEM market only. This variant comes with a slight reduction in core clocks and CUDA cores. But all its other core specifications, including the memory configuration and TDP, remain the same.

Unfortunately, Nvidia has not stated why it created this new model, which is barely any different from the standard one. The neutered version comes with a 10% reduction in CUDA core count, from 2560 to 2304 (20 SMs to 18 SMs), and around a 2% reduction in core clocks.
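The core-count arithmetic is easy to check. Here is a minimal Python sketch. It assumes that each SM contains 128 CUDA cores, which is the layout of Nvidia's consumer Ampere (GA10x) chips. Under that assumption, the two configurations work out to 20 and 18 SMs, and the cut comes to 10%.

```python
CORES_PER_SM = 128  # assumption: consumer Ampere (GA10x) SM width

def sm_count(cuda_cores: int) -> int:
    """Number of SMs implied by a CUDA core count."""
    if cuda_cores % CORES_PER_SM:
        raise ValueError("core count is not a whole number of SMs")
    return cuda_cores // CORES_PER_SM

def pct_reduction(old: float, new: float) -> float:
    """Percentage drop when going from old to new."""
    return (old - new) / old * 100

standard_cores, oem_cores = 2560, 2304
print(sm_count(standard_cores), "->", sm_count(oem_cores), "SMs")  # 20 -> 18 SMs
print(f"{pct_reduction(standard_cores, oem_cores):.1f}% fewer CUDA cores")  # 10.0% fewer CUDA cores
```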

In real-world use, the performance gap between the two cards should be barely noticeable, if at all. The deviation in core count is minor, and the deviation in clock speed is even smaller. Furthermore, Nvidia's GPU Boost 4.0 algorithm may be able to push clocks even higher than on the standard variant, since power consumption remains the same between the two models.

Presumably, Nvidia has disabled some cores to increase yields from slightly defective GA106 dies manufactured at the factory. This is the only logical explanation we have right now, but it makes a lot of sense.

This isn't the first time Nvidia has pulled this maneuver on the GPU market. Over the past few generations, we've seen several GPU models featuring two to four different versions to optimize manufacturing efficiency. The most notable examples of this are the RTX 3080 12GB and GTX 1060 3GB, which featured more or fewer CUDA cores than their vanilla counterparts.

Nvidia has also released OEM-exclusive graphics cards in the past, but it hasn't done so in a very long time. Unlike those earlier OEM models, however, this new 3050 lacks any OEM branding and shares the same name as the vanilla RTX 3050 8GB.

This could be problematic for customers choosing gaming PCs from OEMs and customers looking at graphics cards on the used market. Without a proper distinction between the two 3050 models, customers won't be able to know which GPU they are buying until they purchase the machine and fire up GPU-Z on the system. Hopefully, this potential issue doesn't become a reality.


Pricing and availability are unknown. Because this neutered 3050 is OEM exclusive, it likely has no MSRP at all. However, Nvidia has already published the new GPU's specs on its main website, so the card should already be in the hands of Nvidia's many OEM partners. Expect new machines with this 3050 to appear at any time.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs, and graphics cards.

-

King_V Oh for crap's sake, Nvidia . . when you do this, GIVE IT A DIFFERENT MODEL NUMBER!

Or at least call it a (whatever model) LE or LT or something.

Also looking at you AMD, but at least you haven't pulled this crap since the RX 550 and RX 560 variants, unless I've forgotten something.

InvalidError The original RTX3050 was already a 30+% cut down GA106, I find it unlikely there are specimens so badly defective that they need one extra SM cut out to make the grade.

It would make more sense if this was the cost-optimized RTX3050 based on GA107, likely to be followed by a Ti variant with all 20 GA107 SMs available.

Giroro How hard is it to just add an extra letter or 2 to the name of the product?

Or if this is just about cost-cutting, then why not make it an RTX 3050 4GB? The 3050 can't play 4k games, so it has no need for the VRAM needed to support 4k "ultra" textures.

4096x4096 textures take 4x the VRAM of 2048x2048 textures, even though the difference is unviewable on a 1920x1080 monitor. Even the situations where 2048x2048 has a resolvable difference from 1024x1024 are limited to when a single texture is covering over half the screen, but how often does that actually happen in games when there are usually dozens, if not hundreds, of textured objects on screen at once? New games might be coming out with huge, impractical textures, but what does it matter in FHD gaming when they exceed the resolution of the display? Am I completely off base with how pixels work?

If people are unhappy that they can only play a game at medium then, like, just do the TV thing and rename the Texture settings to resolutions or something like "HD, FHD, QHD, UHD", etc.

I get that the 8GB selling point can help move a product off the shelf compared to a less expensive/better performing RTX 2060 6GB, but since when did OEMs bother advertising how much VRAM is in their low-end gaming systems?
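The 4x figure follows from the fact that texture memory scales with texel count, and doubling the side length quadruples the texel count. Here is a minimal Python sketch. It assumes uncompressed RGBA8 textures at 4 bytes per texel. Real games usually use block-compressed formats, which shrink every size by the same ratio, so the 4x factor still holds. The optional mip chain is approximated as an extra one third of the base level.

```python
BYTES_PER_TEXEL = 4  # assumption: uncompressed RGBA8 (1 byte each for R, G, B, A)

def texture_mib(side: int, with_mips: bool = False) -> float:
    """Approximate VRAM, in MiB, for one square texture of side x side texels."""
    base = side * side * BYTES_PER_TEXEL
    # A full mip chain (1/4 + 1/16 + ...) adds roughly one third on top of the base level.
    total = base * 4 / 3 if with_mips else base
    return total / 2**20

for side in (1024, 2048, 4096):
    print(f"{side}x{side}: {texture_mib(side):.0f} MiB, ~{texture_mib(side, with_mips=True):.0f} MiB with mips")

print(texture_mib(4096) / texture_mib(2048))  # 4.0
```

Under these assumptions, a single 4096x4096 texture takes 64 MiB, and a 2048x2048 texture takes 16 MiB.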

InvalidError

Giroro said: Am I completely off base with how pixels work?

4k gaming and 4k textures are two different and only loosely related things. I've done some 4k gaming on a GTX1050, works great in older games like Portal 2, though not quite there for 4k PS2 emulation. I don't remember trying N64 emulation since getting a 4k TV, might be worth a look.

Regardless, most games will readily blow through 4GB of VRAM with anything but lowest everything when available, so 4GB definitely isn't viable for anything pitched to actual gamers who want to be able to play stuff at some reasonable approximation of how they are meant to look instead of rock-bottom details to squeeze everything within 4GB.

King_V

InvalidError said: 4k gaming and 4k textures are two different and only loosely related things. I've done some 4k gaming on a GTX1050, works great in older games like Portal 2, though not quite there for 4k PS2 emulation. I don't remember trying N64 emulation since getting a 4k TV, might be worth a look.

Regardless, most games will readily blow through 4GB of VRAM with anything but lowest everything when available, so 4GB definitely isn't viable for anything pitched to actual gamers who want to be able to play stuff at some reasonable approximation of how they are meant to look instead of rock-bottom details to squeeze everything within 4GB.

Slight digression, but you might get a kick out of this guy doing 8K gaming with a giant screen... and an RX 6400.

Some of it went hilariously badly, as you might expect, but there were some really pleasant surprises.

POcXNhIew4A

InvalidError

King_V said: Slight digression, but you might get a kick out of this guy doing 8K gaming with a giant screen... and an RX 6400.

Some of it went hilariously badly, as you might expect, but there were some really pleasant surprises.

280fps at 8k on an RX6400... nice :)

And yeah, I really like the extra sharpness in older games on 4k for what little stuff is playable on 4k with a 1050.

King_V

InvalidError said: 280fps at 8k on an RX6400... nice :)

And yeah, I really like the extra sharpness in older games on 4k for what little stuff is playable on 4k with a 1050.

Yeah! That bit with Half-Life 2, and looking at the crowbar, and his comment about how 8K made the old graphics look kind of weirdly real - it was nuts!

This whole thing now makes me want to game on a gigantic TV.... uh, except my TV, while being a 60-incher, is an older one . . 1920x1080. My eyes aren't good enough to complain about that low resolution at TV-viewing distance, though.