Record 1.84 Petabit/s Data Transfer Achieved With Photonic Chip, Fiber Optic Cable

Said to be more than enough bandwidth for today’s internet.

Scientists from the Technical University of Denmark in Copenhagen have achieved 1.84 petabits per second data transfers using a single photonic chip connected via a single optical fiber cable. The feat was accomplished over a distance of 7.9 km (4.9 miles). For some perspective regarding this achievement, at any time of day, the average internet bandwidth being used by the whole world’s population is estimated to be about 1 petabit/s.

With ever-increasing amounts of data moving across the internet for business, pleasure, and software downloads or updates, infrastructure firms are always on the lookout for new ways to increase the available bandwidth. Achieving 1.84 petabits/s over a standard optical cable with a compact single-chip solution will therefore hold much appeal.

Photonic chip technology holds great promise for optical data transfer purposes – as the processor and the transfer medium both work with light waves. The New Scientist explains in simple terms how the Danish scientists, led by Asbjørn Arvad Jørgensen, managed to deliver such bandwidth with the resources at hand.

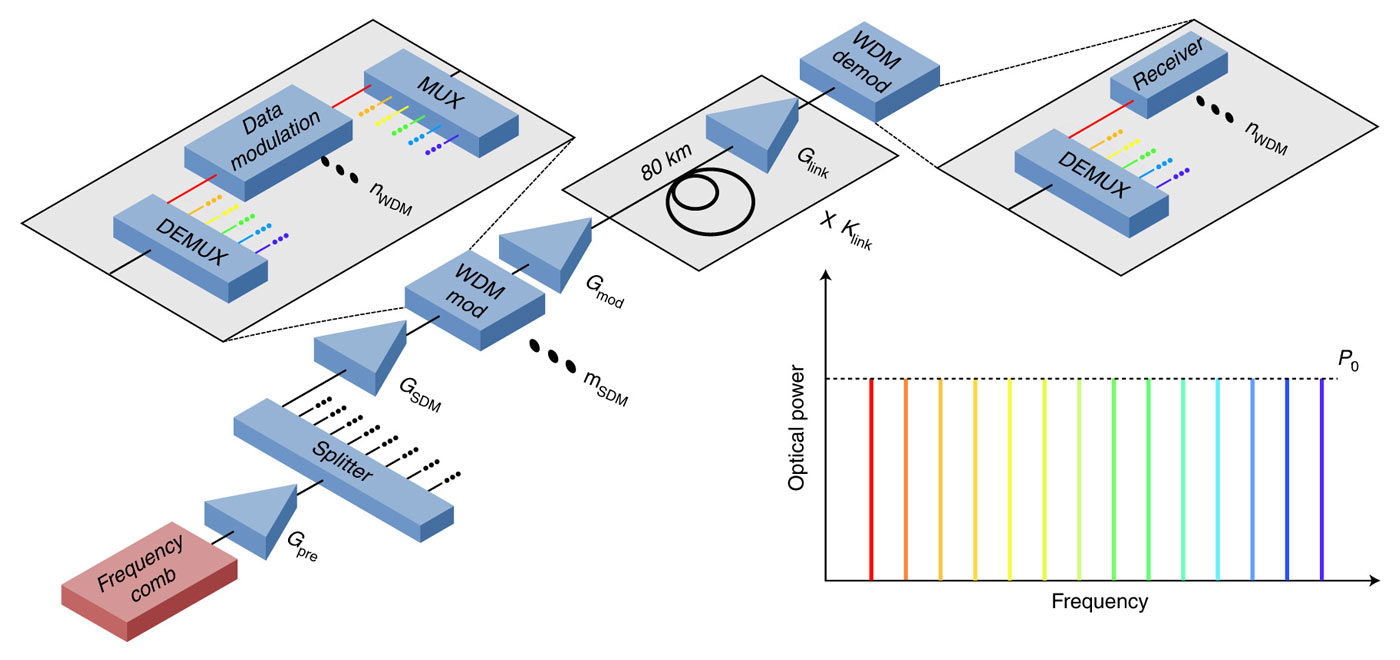

Firstly, the data stream used in the trial was split into 37 lines, with each one sent down a different optical thread in the cable. Each of the 37 data lines was then split into 223 data chunks corresponding to zones of the optical spectrum. This made it possible to use a "frequency comb", with data transmitted in different colors at the same time without interfering with other streams. In other words, a “massively parallel space-and-wavelength multiplexed data transmission” system was created. Of course, this splitting and re-splitting massively increased the potential data throughput supported by a fiber optic cable.
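The arithmetic behind this two-level split is easy to check. The short sketch below uses only the figures quoted in the article (37 threads, 223 spectral zones, 1.84 petabits/s in total); the variable and function names are illustrative and not taken from the paper, and the per-channel figure is a plain average rather than a measured rate.

```python
# Figures quoted in the article; names are illustrative only.
SPATIAL_THREADS = 37          # optical "threads" in the cable
WAVELENGTHS_PER_THREAD = 223  # spectral zones ("colors") per thread
TOTAL_RATE_BPS = 1.84e15      # 1.84 petabits per second in aggregate


def channel_count(threads: int, wavelengths: int) -> int:
    """Number of independent space-and-wavelength channels."""
    return threads * wavelengths


def average_channel_rate(total_bps: float, channels: int) -> float:
    """Mean bit rate per channel, assuming the load is spread evenly."""
    return total_bps / channels


channels = channel_count(SPATIAL_THREADS, WAVELENGTHS_PER_THREAD)
avg_rate = average_channel_rate(TOTAL_RATE_BPS, channels)

print(f"{channels} parallel channels")
print(f"~{avg_rate / 1e9:.0f} Gbit/s per channel on average")
```

Run as written, this reports 8251 channels at roughly 223 Gbit/s each, which is also where the 8,251 multiplier for the bandwidth increase comes from.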

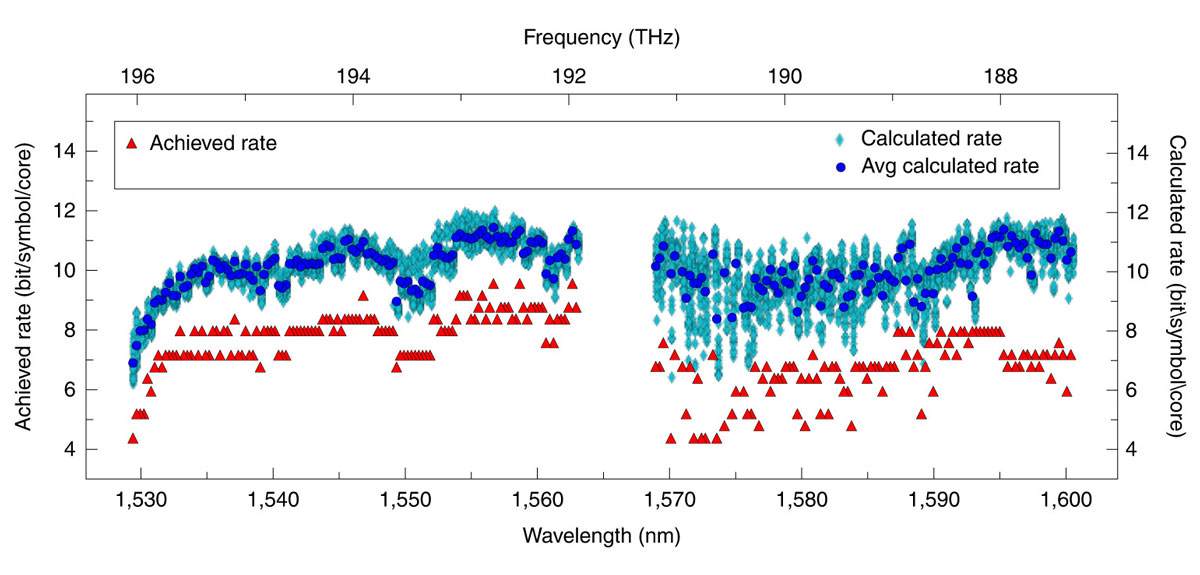

It wasn’t easy to test and verify 1.84 petabits/s bandwidth – as no computer can send, or receive, never mind store, such a humungous amount of data. The research team used dummy data over individual channels to verify what would be the full-on bandwidth capacity. Each channel was tested individually to ensure data received matched what was transmitted.
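The per-channel approach can be illustrated with a small simulation. This is only a sketch of the general idea (send known dummy data down one channel at a time and compare what comes back), not the researchers' actual test setup; `loopback_channel` is a hypothetical stand-in for a real optical channel, and the pattern length is kept deliberately tiny.

```python
import random


def make_test_pattern(n_bits: int, seed: int) -> list[int]:
    """Reproducible pseudo-random dummy data for one channel."""
    rng = random.Random(seed)
    return [rng.getrandbits(1) for _ in range(n_bits)]


def loopback_channel(bits: list[int]) -> list[int]:
    """Hypothetical stand-in for one optical channel: passes bits through unchanged."""
    return list(bits)


def bit_errors(sent: list[int], received: list[int]) -> int:
    """Count positions where the received bit differs from the sent bit."""
    return sum(s != r for s, r in zip(sent, received))


def verify_channels(n_channels: int, n_bits: int, channel=loopback_channel) -> dict[int, int]:
    """Test each channel on its own and return the error count per channel."""
    results = {}
    for ch in range(n_channels):
        sent = make_test_pattern(n_bits, seed=ch)
        received = channel(sent)
        results[ch] = bit_errors(sent, received)
    return results


errors = verify_channels(n_channels=37 * 223, n_bits=256)
print(all(count == 0 for count in errors.values()))
```

Testing one channel at a time keeps the data volume manageable, since no single test ever has to generate or capture the full aggregate stream.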

In action, the photonic chip splits a single laser into many frequencies, and some processing is required to encode data onto the light for each of the 37 optical fiber streams. According to Jørgensen, it should be possible to build a refined, fully capable optical processing device at approximately the size of a matchbox. This is similar in size to the single-color laser transmission devices currently used by the telecoms industry.

It is reassuring that we will be able to keep the same fiber optic cable infrastructure but replace matchbox-sized optical data encoders/decoders with similarly sized devices powered by photonic chips, potentially delivering an effective 8,251x increase in data bandwidth. The researchers say there is enough potential shown in their work to inspire “a shift in the design of future communications systems.”


For further information about the record 1.84 petabits/s data transfers, you can check out the paper "Petabit-per-second data transmission using a chip-scale microcomb ring resonator source."

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech, from business and semiconductor design to products approaching the edge of reason.


Fates_Demise I got bored, at that speed you can download a 100GB game about 2,300 times per second.
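The download arithmetic can be checked directly. A quick sketch, assuming decimal units (1 GB = 10^9 bytes), 8 bits per byte, and no protocol overhead, puts the figure at about 2,300 downloads per second; the names below are illustrative.

```python
LINK_RATE_BITS_PER_S = 1.84e15  # 1.84 petabits/s, from the article
GAME_SIZE_BYTES = 100e9         # 100 GB, assuming decimal gigabytes


def downloads_per_second(rate_bits_per_s: float, size_bytes: float) -> float:
    """How many files of the given size fit through the link each second."""
    bytes_per_second = rate_bits_per_s / 8  # 8 bits per byte
    return bytes_per_second / size_bytes


rate = downloads_per_second(LINK_RATE_BITS_PER_S, GAME_SIZE_BYTES)
print(f"{rate:,.0f} downloads per second")
```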

George³ Meanwhile, the Ethernet Alliance roadmap has been stuck at a miserable 1.6 Tbps for years. Today the latest communication tech in production is 800 Gbps.

A Stoner They tested them individually; will there be no issues with all these light waves interfering with one another?

curtisv George³ said: Meanwhile, the Ethernet Alliance roadmap has been stuck at a miserable 1.6 Tbps for years. Today the latest communication tech in production is 800 Gbps.

Ethernet wants to be able to operate over carrier WDM, as used in metro Ethernet with optical add/drop multiplexers (OADMs). So one core and ideally one wavelength. 10GbE is one 10 Gb/s wavelength and cheap colored optic components. 100GbE, for example, is 4x25 Gb/s, closely spaced to fit in a 100 GHz band. So in something like a Wall Street fiber ring, a brokerage trading office can easily get 10GbE to the exchange from a local carrier or competitive provider. Plenty of other applications, of course.

400 GbE and up is more useful in data centers that have been bumping into scaling limits for a decade or more.

Ethernet is not used at all as a link layer in hauls longer than metro, but it is used to hand off data to the transport layer. There are also encapsulations of Ethernet, for example 100GbE carried in ODU4, but the transport equipment sees this as ODU4 and has no idea it is carrying Ethernet inside. Some transport equipment can take 400GbE and 800GbE as an external interface. I'm not sure, but I think 100GbE is still the least cost per bit.

curtisv A Stoner said: They tested them individually; will there be no issues with all these light waves interfering with one another?

Yeah. Look up wavelength-division multiplexing (WDM). WDM has been widely used since the 1990s. The original paper for this article also uses the term SDM (space-division multiplexing), which covers the multiple cores.

curtisv The article that this article cites doesn't go into what exists, what is new, and what the implications are, most likely because the author doesn't have the background. So this article reflects that. The original article in Nature makes no false claims AFAIK.

Multiple wavelengths of light on a fiber (or on a core in this type of fiber) is wavelength-division multiplexing, in use since the mid-1990s. Optical amplifiers date from a similar timeframe. Multiple cores per fiber is only applicable to very short distances (relative to the size of the earth), and the original paper in Nature points this out. The longest production DWDM (dense WDM) link as of about 2010 runs from the US west coast to Australia without stopping for signal regeneration in Hawaii. That is 6,000+ km of fiber with amplifiers at about 100 km intervals (I think). I don't know the configuration, but I think it is 160 waves at 100 Gb/s each, and it may be higher. Infinera gear on either end. Details are probably still buried on the web site blog. One core, because the power to drive 100 km and keep signal integrity for that many amplifications would melt the fiber if multicore.
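To put those numbers side by side with the demo, here is a rough back-of-the-envelope sketch. It uses only the tentative figures from this comment (160 waves, 100 Gb/s each, 6,000 km, 100 km amplifier spacing) and the article's 1.84 petabits/s over 7.9 km; none of the long-haul figures are verified, and the names are illustrative.

```python
# Long-haul figures as recalled in the comment (unverified, "I think").
WAVES = 160                # wavelengths on the single core
RATE_PER_WAVE_BPS = 100e9  # 100 Gb/s per wavelength, possibly higher
ROUTE_KM = 6_000           # "6,000+ km"
AMP_SPACING_KM = 100       # "about 100 km intervals"

# Demo figures from the article.
DEMO_RATE_BPS = 1.84e15
DEMO_KM = 7.9

link_capacity = WAVES * RATE_PER_WAVE_BPS  # aggregate bit rate of the long-haul link
spans = ROUTE_KM // AMP_SPACING_KM         # approximate number of amplified spans
rate_ratio = DEMO_RATE_BPS / link_capacity
distance_share = DEMO_KM / ROUTE_KM

print(f"long-haul link: {link_capacity / 1e12:.0f} Tb/s over about {spans} spans")
print(f"demo: {rate_ratio:.0f}x the rate over {distance_share:.2%} of the distance")
```

On these assumptions the demo carries on the order of a hundred times more data, but over a tiny fraction of the distance.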

They also didn't invent photonic integrated circuits, nor are photonic integrated circuits new. Infinera was the first to use them commercially, shipping its first product in 2005. The current product does 800 Gb/s per chip pair (transmitter chip and receiver chip), with 1.6 Tb/s coming soon and 4 Tb/s planned. But this is for ultra long haul with a claimed 10,000 km reach. Heat is a huge problem in the transmitter chip as well as for the fiber itself.

What is new, and the paper in Nature is clear about this from the title, abstract, etc., is the source laser. They don't use a single laser per wavelength or a laser array (a small number of lasers), but rather a single polychromatic laser on a chip and a microcomb ring resonator. The paper in Nature points out that this works for very short distances (relative to the earth, and therefore the Internet), and their demo is 7.9 km. This limitation is due to the very low power produced this way. That is not to say it has no use. It might be great for data center use, where multiple nearby buildings are all the distance needed.

It is unfortunate when a mainstream publication botches the details and imagines something revolutionary, and that every bit of technology mentioned is new and unique (because they never heard of it), when in reality the contribution is useful and very impressive but much more limited than the mainstream publication imagines it to be. It is worse when the mainstream publication that has no idea what it is talking about becomes a cited source all over the Internet. None of this flawed reporting is intentional. (And maybe I got some facts wrong too.) It does remind me a bit of when TV news anchors tried to explain what the Internet was in 1994 and 1995, or worse yet tried to give the layman's version of how the Internet works and completely botched it (and now famously). Lower consequences and lower exposure here. Now someone please explain this to Reddit and elsewhere.