New Startup uSens Wants To Give You Hands In VR

VR has progressed faster than we could have predicted, and the current products on the market can do an amazing job projecting us into virtual worlds. Once inside those worlds, though, there’s still an unfortunate limit to what we can do. The dream of true room-scale VR, where you can walk around and interact with a space unencumbered by tethers and controllers, has yet to arrive.

Although the Oculus Rift and HTC Vive can let you walk around in certain circumstances, they require additional hardware around your room. Interaction is possible, but only via controllers that have never felt entirely intuitive to me.

Mobile VR is left out entirely: it cannot account for the user’s position, and it relies on controllers for complex interaction (though Google’s Daydream may help fix this).

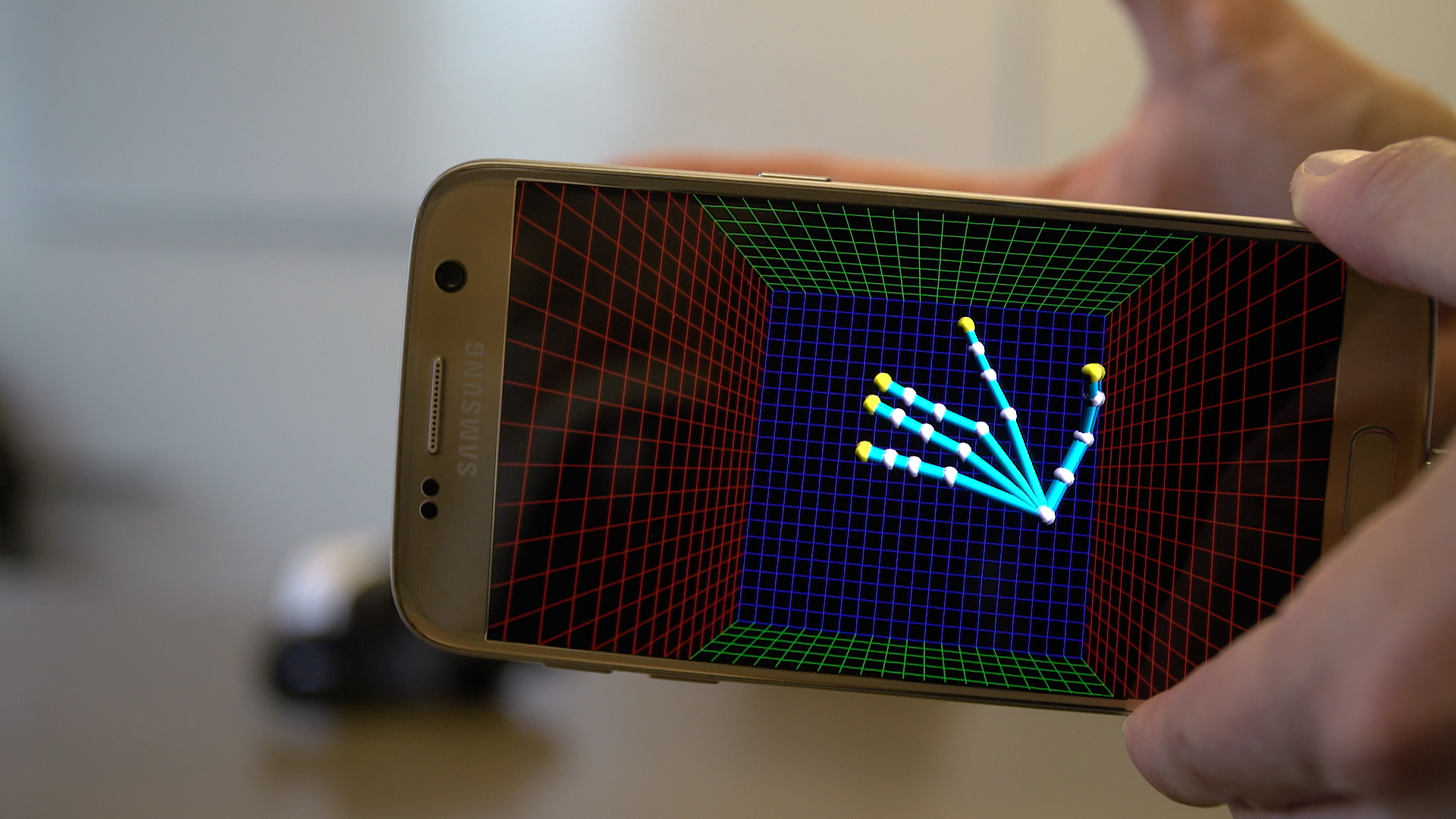

One San Jose-based company, uSens, is trying to solve this issue for mobile and PC-based VR alike. The company is developing infrared (IR) and camera-based sensors that could not only add room-scale tracking to any VR system, but also track and map your hands in space, letting you interact with a VR world with your bare hands.

Handy

USens said its plan is to create seamless interaction in VR around gesture control (say, a wave to the left to rewind), direct manipulation of objects (picking up, throwing, poking), and the use of virtual tools (remember Google’s pancake demo?). This kind of controller-less interaction is uSens’ real goal, and the idea behind its “Fingo” system.

USens has designed three different models of Fingo, all of which currently take the form of a small add-on device strapped to an HMD, but which uSens hopes will eventually be incorporated directly into future headsets built by manufacturing partners.

The first is Fingo IR, a sensor system that includes three small IR blasters and an IR camera. Fingo connects to a phone or other headset via USB and uses the companion device’s processor to determine hand and finger position.


The system is based on machine learning and kinematic models of how human hands work. Essentially, the Fingo measures where IR light is bouncing from, and then it does its best to guess the hand position based on that.

In the demos I experienced, the add-on system, which connected to a Samsung phone via USB, was fairly accurate, though it struggled as I brought my hands closer to the headset. At times, the virtual models of my hands would spasm and twist in ways I don’t think I’m capable of moving. By contrast, a version of the Fingo IR built into a prototype headset seemed remarkably accurate, matching my hand and arm movements with precision.

Another potential use of the Fingo IR is to improve head tracking in more conventional IR systems. One demo involved a gameboard-style mat with silver markers at the corners. Fingo could use these markers for position tracking, including some limited proximity tracking (i.e., the virtual mat got smaller when I moved away from it). USens claimed this sort of tracking was more accurate and required less processing than traditional sensor-based tracking.
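To see why a mat of known size can give a rough proximity cue, here is a minimal sketch based on the standard pinhole-camera relationship, in which apparent size shrinks in proportion to distance. This is not uSens' algorithm, which the company did not detail; the function name, the mat width, and the focal length are all hypothetical values chosen for illustration.

```python
def estimate_distance_m(real_width_m: float, pixel_width: float,
                        focal_length_px: float) -> float:
    """Pinhole-camera estimate of distance to an object of known width.

    distance = focal_length_px * real_width_m / pixel_width
    All parameters are illustrative assumptions, not Fingo specifications.
    """
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")
    return focal_length_px * real_width_m / pixel_width


# Hypothetical setup: markers 0.5 m apart, camera focal length of 600 px.
near = estimate_distance_m(0.5, 300.0, 600.0)  # markers appear 300 px apart
far = estimate_distance_m(0.5, 150.0, 600.0)   # markers appear 150 px apart
print(near, far)
```

In this sketch, halving the apparent spacing between the markers doubles the estimated distance, which matches the behavior in the demo where the virtual mat shrank as I stepped back.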

Another product in the works has been designated "Fingo Color," and as the name implies, this system uses color cameras. The goal of Fingo Color is still to add hand and position tracking, but this time from an AR perspective. The demo I saw used Fingo Color to allow me to draw in mid-air using my fingers. I could still see the room around me through the Color’s camera, but my AR interactions were overlaid on the real world.

Remarkably, the Fingo Color can also allow for room-scale position tracking based solely on the camera’s perception. Although it currently works best in fairly simple environments, the Color can process the camera’s inputs to determine where you are walking, what direction you are facing, and so on.

This brings us to the real product: the PowerFingo. The PowerFingo uses both sets of sensors and couples them with on-board chips for processing the data, instead of offloading the information to the host devices.

Giving The Vive A Hand

In the main demo, the PowerFingo was hacked into an HTC Vive and used to navigate a virtual bedroom. Even without any of the “lighthouse” stations to provide position tracking, I was able to walk around the room and pick up and interact with virtual objects. The system even knew if I was standing or crouching, as well as in what direction I was facing.

I should stress that all of these products were in the fairly early stages, and hiccups and glitches were common, but when they worked it felt remarkable. A VR model of the solar system stubbornly refused to acknowledge about half of the gesture commands I tried, but when it did respond, I was able to summon planets and zoom around outer space with simple gestures. It’s hard to overstate how satisfying that felt.

Of course, uSens’ success will depend on its ability to partner with HMD manufacturers. Although uSens has created SDKs and dev kits, it has yet to secure its Fingo technology for use in a major headset. With Fingo still in the prototype stage, and most manufacturers already having rushed HMDs to market, it may be another hardware generation before we see this technology in our VR headsets.

-

wifiburger: great more stuff to pile on the already defunct VR, meanwhile I'll enjoy my couch & controller

Robert Cook: I was at a summer internship where some college interns made the same thing with the use of a Leap Motion controller and two 1080p webcams. The result was that you could use AR mode, where you also saw your surroundings (albeit slightly grainy) and the VR was floating in the space like a HoloLens. It could also be used in pure VR mode. So they beat them out by nearly 4 months (granted, their final product was not that polished). I guess I am just not impressed because their project did everything this will ever do, and their budget was certainly lower. Also, I dislike VR, so it is hard to win me over in the first place.

cryoburner, quoting wifiburger: "great more stuff to pile on the already defunct VR, meanwhile I'll enjoy my couch & controller"

You must have a really strong neck to be able to strap a couch to your head.