DeepMind's 'WaveNet' Synthetic Speech System Is Now 1,000x More Efficient

Last year, DeepMind, a sister company of Google in the new Alphabet conglomerate, announced its new machine learning-based text-to-speech (TTS) system called “WaveNet.” The system represented a significant improvement in how natural the synthetic speech sounded, but it had one critical flaw: it was too computationally intensive to be used for anything other than research projects.

A year later, the DeepMind team changed this by making the system 1,000x faster, which means it’s now efficient enough to be used in consumer products, such as Google Assistant.

Old Models For Synthetic Speech Systems

Until now, the best-sounding approach to synthetic speech has been concatenative TTS, which relies on a database of high-quality recordings from a single voice actor. The recordings are split into tiny chunks that can then be recombined, or concatenated, to generate the synthetic speech.
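As a rough illustration of the idea, the following minimal sketch stitches pre-recorded chunks together. The database contents, unit names, and the `concatenate_units` helper are all hypothetical, and real systems also have to select and smooth the joins between units, which is omitted here.

```python
from typing import Dict, List

# Hypothetical database: each unit name maps to a short run of audio samples
# that would have been cut from a single speaker's recordings.
UNIT_DATABASE: Dict[str, List[int]] = {
    "he": [0, 3, 5],
    "llo": [4, 2, 1, 0],
}


def concatenate_units(unit_names: List[str], database: Dict[str, List[int]]) -> List[int]:
    """Join the stored samples for each requested unit, in order."""
    waveform: List[int] = []
    for name in unit_names:
        if name not in database:
            # A missing unit cannot be synthesized without new recordings.
            raise KeyError(f"no recording for unit {name!r}")
        waveform.extend(database[name])
    return waveform


hello = concatenate_units(["he", "llo"], UNIT_DATABASE)
```

The `KeyError` branch hints at the limitation described in this section: anything not already in the recordings cannot be produced.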

Stitching together pre-recorded fragments is also why TTS systems have sounded so “robotic” for so many years. A concatenative system can’t easily be altered or improved without recording a whole new database, which is why progress in synthetic speech has been so slow, too.

Another method—which has been even less common, because it sounds more robotic—is the parametric TTS system. With this system, voices are completely machine-generated based on grammar and mouth movement rules that are supposed to make the synthetic voice sound human.

The parametric system never worked quite as well as the concatenative TTS system because, as with other similar attempts, it has been too difficult to capture the complexity of human movements and actions through fixed algorithms and parameters. Deep learning has been so successful because it does away with such human-programmed algorithms and can instead generate its own parameters by “learning” how things are done by humans.

The Neural Network-Based WaveNet Model

The WaveNet system has its roots in machine-generated synthetic speech, but instead of using fixed parameters, it trains neural networks on large datasets of human speech samples so it can learn on its own how to generate “human-like” speech.

During the training phase, the neural network learns the underlying structure of speech, such as which tones follow each other and which waveforms are realistic. It then synthesizes a voice one audio sample at a time, with each new sample taking into account the properties of the previously generated samples. The resulting voice contains natural intonation and even features such as lip smacks.
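The sample-by-sample loop can be sketched as follows. This is only a toy: `toy_next_sample_weights` is a hypothetical stand-in for the trained neural network (it simply favors values close to the recent samples), and the `levels` and `context` parameters are illustrative assumptions rather than WaveNet's actual settings.

```python
import random
from typing import List


def toy_next_sample_weights(history: List[int], levels: int = 256, context: int = 4) -> List[float]:
    """Hypothetical stand-in for the trained network.

    Returns one unnormalized weight per possible sample value, favoring
    values near the average of the last `context` generated samples.
    """
    recent = history[-context:]
    center = sum(recent) / len(recent) if recent else levels / 2
    return [1.0 / (1.0 + abs(value - center)) for value in range(levels)]


def generate(num_samples: int, levels: int = 256, seed: int = 0) -> List[int]:
    """Generate audio one sample at a time, each conditioned on earlier ones."""
    rng = random.Random(seed)
    samples: List[int] = []
    for _ in range(num_samples):
        weights = toy_next_sample_weights(samples, levels)
        # Draw the next sample from the predicted distribution, then feed it
        # back in as context for the following step.
        next_sample = rng.choices(range(levels), weights=weights, k=1)[0]
        samples.append(next_sample)
    return samples


audio = generate(100)
```

Because every step has to wait for the one before it, a loop like this is inherently sequential, which helps explain why generation speed became the bottleneck discussed later in the article.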

This approach not only generates more natural-sounding synthetic speech, but should also be much easier to improve in the future, as it will just be a matter of fine-tuning the neural network training or throwing more data and computational resources at it.

The new model also has the advantage of being easily modified to create any number of unique voices from blended datasets.

A 1,000x Increase In Performance

However good it was, the original WaveNet was too slow to be deployed in real-world applications, even by a company such as Google. Therefore, the DeepMind team had to drastically improve the system’s performance before using it in any consumer products.

The original WaveNet could generate only 0.02 seconds of synthetic speech in 1 second. The new WaveNet is 1,000x faster, and it can now create 20 seconds of even higher-quality sound from scratch in 1 second.
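To put those figures in perspective, here is a quick back-of-the-envelope calculation using only the numbers quoted above. The function and constant names are made up for this sketch.

```python
def seconds_to_generate(audio_seconds: float, audio_per_wall_second: float) -> float:
    """Wall-clock time needed to synthesize `audio_seconds` of speech."""
    return audio_seconds / audio_per_wall_second


ORIGINAL_RATE = 0.02  # seconds of audio produced per second (figure from the article)
SPEEDUP = 1000        # claimed improvement (figure from the article)
NEW_RATE = ORIGINAL_RATE * SPEEDUP  # 20 seconds of audio per second

# Time needed to produce a single second of speech with each version.
old_time = seconds_to_generate(1.0, ORIGINAL_RATE)  # about 50 seconds
new_time = seconds_to_generate(1.0, NEW_RATE)       # about 0.05 seconds

print(f"original: {old_time:.2f} s per second of speech")
print(f"new:      {new_time:.2f} s per second of speech")
```

In other words, the original system was about 50 times slower than real time, while the new one is about 20 times faster than real time.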

The new WaveNet can generate 24kHz audio with 16-bit resolution for each sample (the same bit depth used for CD-quality music), while the older system could only generate 16kHz audio with 8-bit resolution for each sample.
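The difference between the two formats can be worked out directly from those numbers. The sketch below assumes raw, uncompressed single-channel audio; the helper names are illustrative only.

```python
def quantization_levels(bits_per_sample: int) -> int:
    """Number of distinct values each audio sample can take."""
    return 2 ** bits_per_sample


def raw_bit_rate(sample_rate_hz: int, bits_per_sample: int) -> int:
    """Bits per second for uncompressed single-channel audio (an assumption here)."""
    return sample_rate_hz * bits_per_sample


old_levels = quantization_levels(8)    # 256 possible values per sample
new_levels = quantization_levels(16)   # 65,536 possible values per sample

old_rate = raw_bit_rate(16_000, 8)     # 128,000 bits per second
new_rate = raw_bit_rate(24_000, 16)    # 384,000 bits per second

print(f"levels per sample: {old_levels} -> {new_levels}")
print(f"raw bit rate: {old_rate} -> {new_rate} bits/s ({new_rate // old_rate}x)")
```

So the new model produces 1.5 times as many samples per second, each with 256 times as many possible values, for three times the raw data per second of audio.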

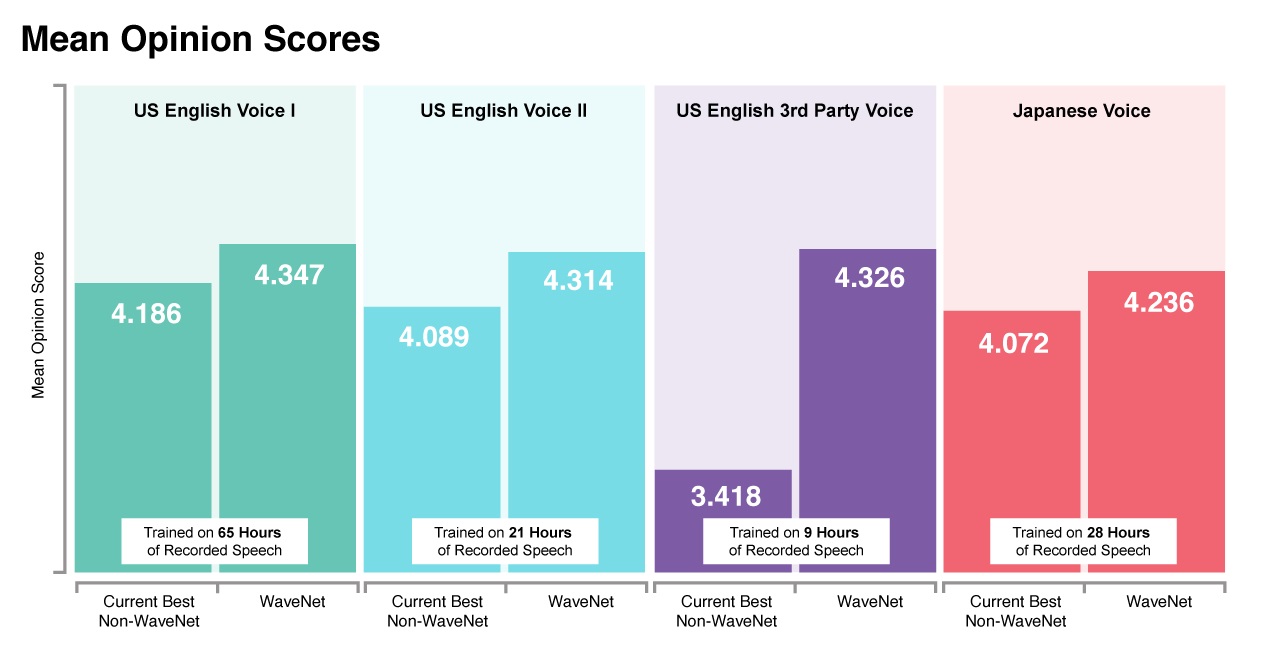

According to human testers, the new WaveNet does generate higher-quality sounds. For the new U.S. English I voice, the mean-opinion-score (MOS) has increased from about 4.2 for the old WaveNet to about 4.35 for the new one. The human voice was rated at 4.67, so it’s getting rather close to the ideal.
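For a sense of scale, the snippet below estimates how much of the remaining gap to human speech the new model closes. The scores are the approximate values quoted above, so the result is only a rough figure, and `gap_closed` is a name invented for this sketch.

```python
def gap_closed(old_score: float, new_score: float, reference_score: float) -> float:
    """Fraction of the old-to-reference gap eliminated by the new score."""
    old_gap = reference_score - old_score
    new_gap = reference_score - new_score
    return (old_gap - new_gap) / old_gap


# Approximate MOS values from the article.
OLD_WAVENET_MOS = 4.2
NEW_WAVENET_MOS = 4.35
HUMAN_MOS = 4.67

fraction = gap_closed(OLD_WAVENET_MOS, NEW_WAVENET_MOS, HUMAN_MOS)
print(f"gap to human speech closed: {fraction:.0%}")
```

By this estimate, the new model closes a bit under a third of the distance that separated the original WaveNet from recorded human speech.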

DeepMind noted that the WaveNet system also gives it the flexibility to build synthetic voices by training them using data from multiple human voices. This can be used to generate high-quality and nuanced synthetic voices even when the voice datasets are small.

The new WaveNet has been launched into production in Google Assistant, and it’s also the first application to run on Google’s new cloud TPU chips. However, Google still seems to need more time to train voices for more languages, because for now only the English and Japanese voices take advantage of the new technology.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.