Phison's new software uses SSDs and DRAM to boost effective memory for AI training, demos a single workstation running a massive 70-billion-parameter model at GTC 2024

Phison claims this is the world's first workstation that can run a 70B Llama 2 model.

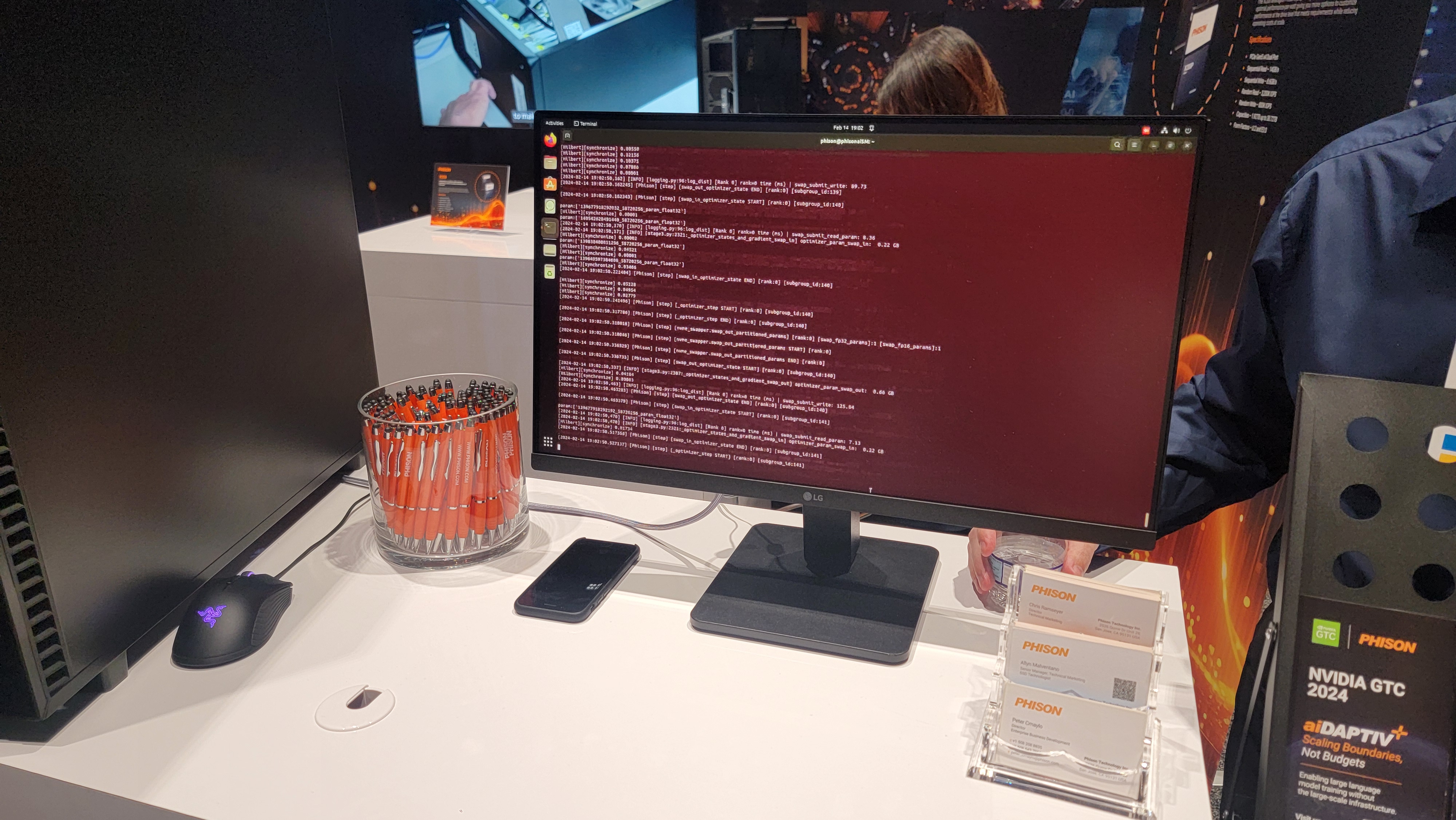

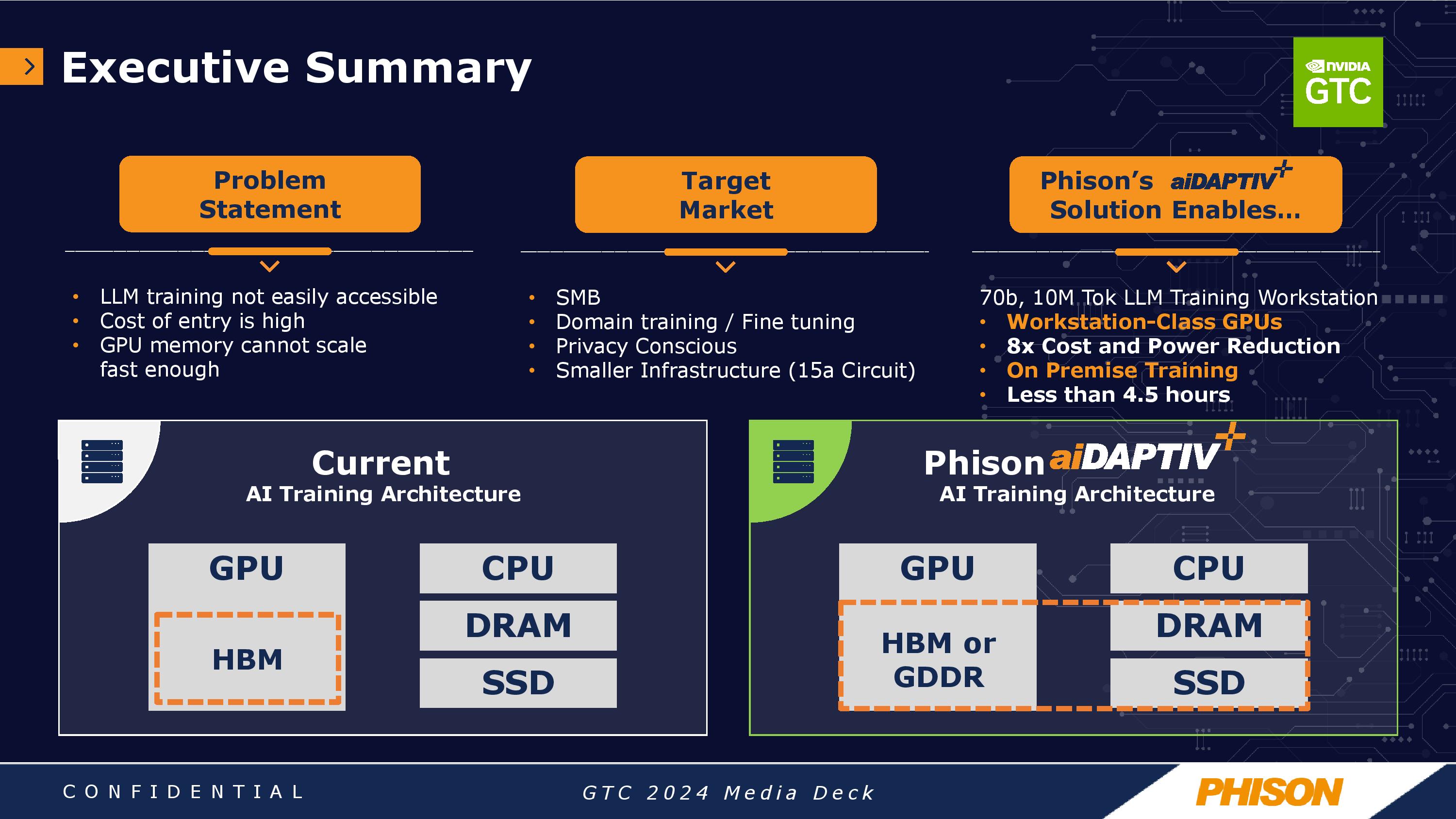

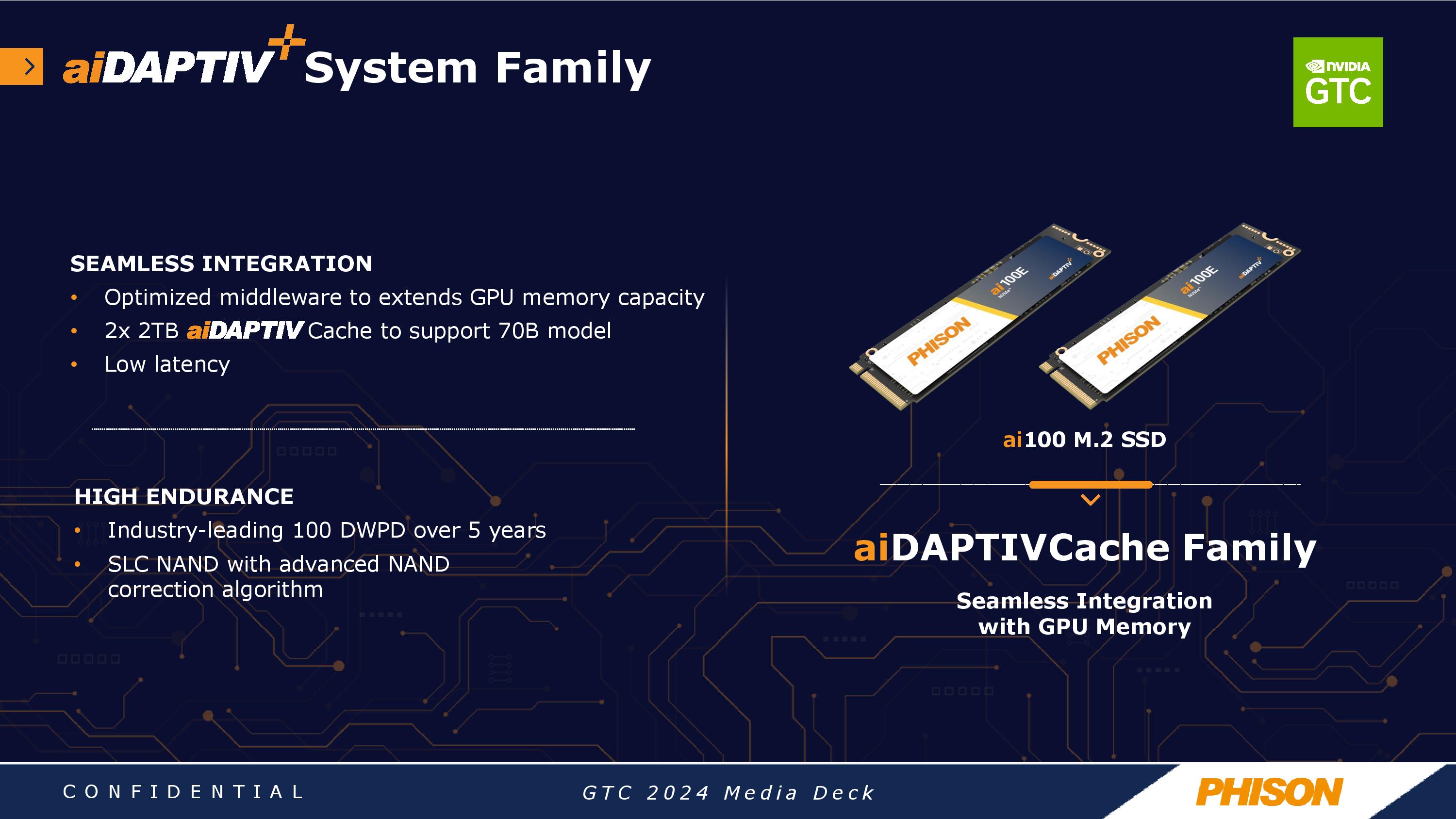

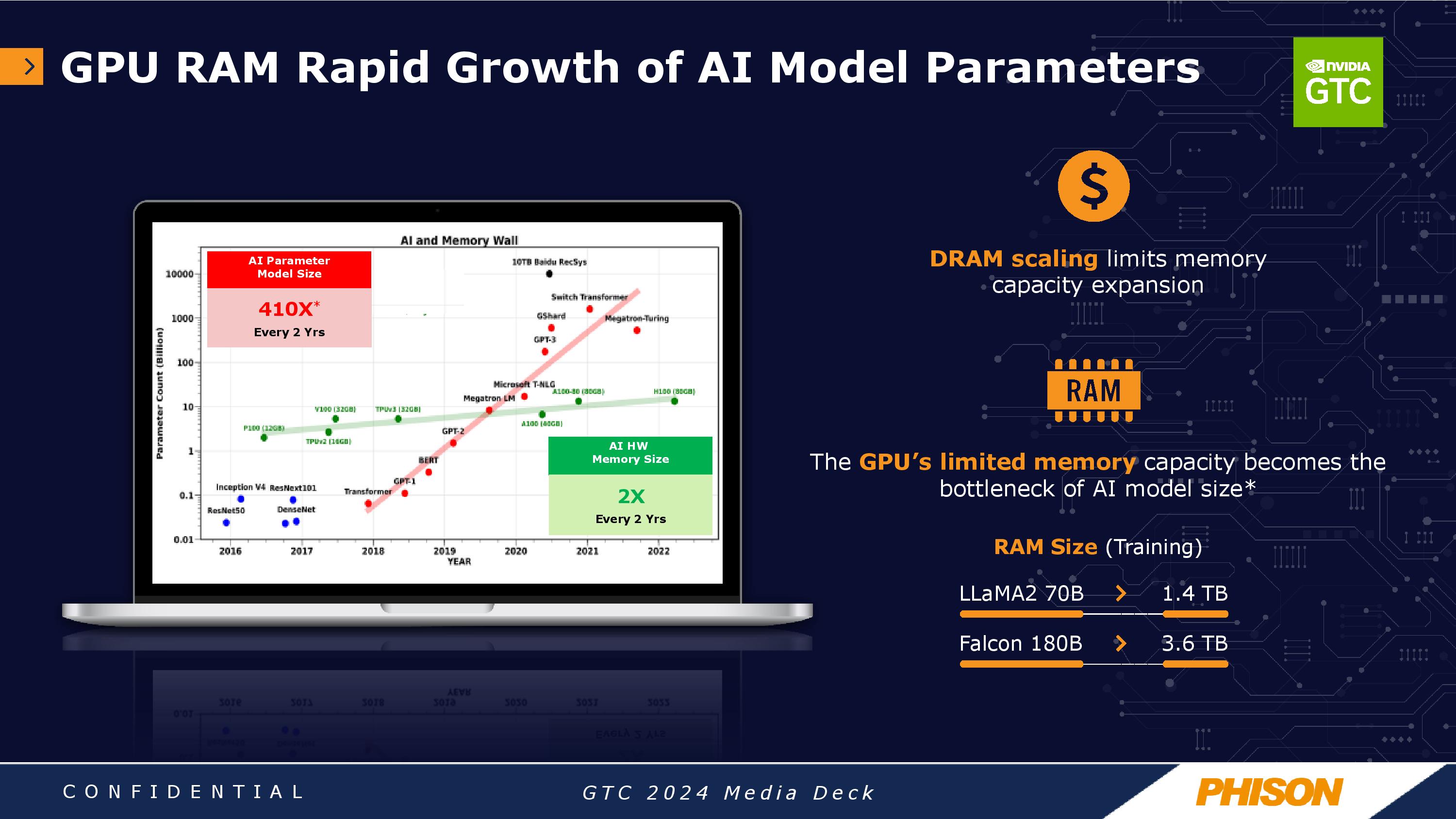

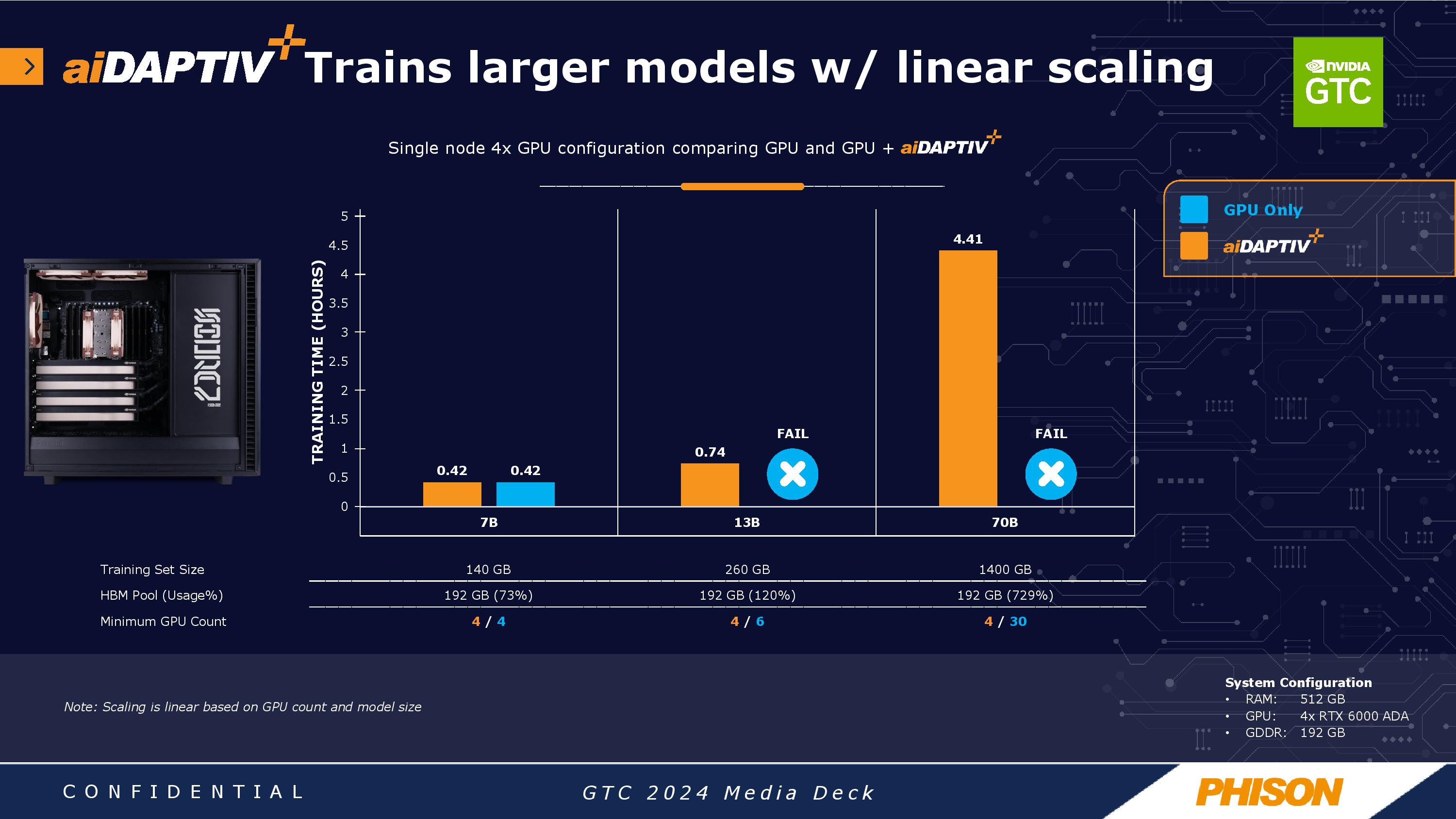

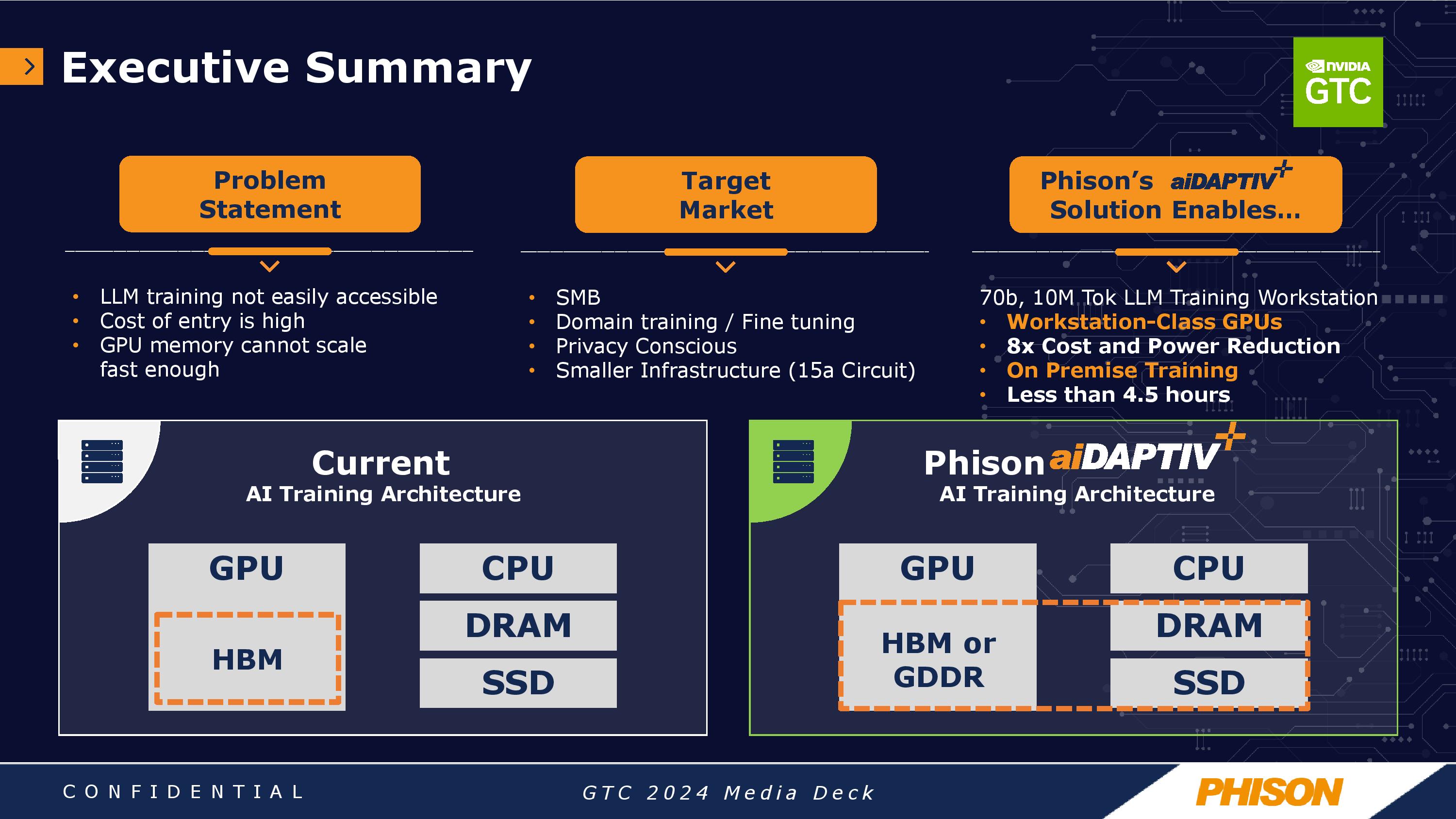

Phison's booth at GTC 2024 held a surprise: the company demoed a single workstation with four GPUs that uses SSDs and DRAM to expand the effective memory space for AI workloads, allowing it to run a workload that typically requires 1.4TB of VRAM spread across 24 H100 GPUs. The company's new aiDaptiv+ platform is designed to lower the barriers to AI LLM training by using system DRAM and SSDs to augment the GPU VRAM available for training. Phison says this will allow users to run intense generative AI training workloads at a fraction of the cost of using standard GPUs alone, albeit trading the lower cost of entry for reduced performance and thus longer training times.

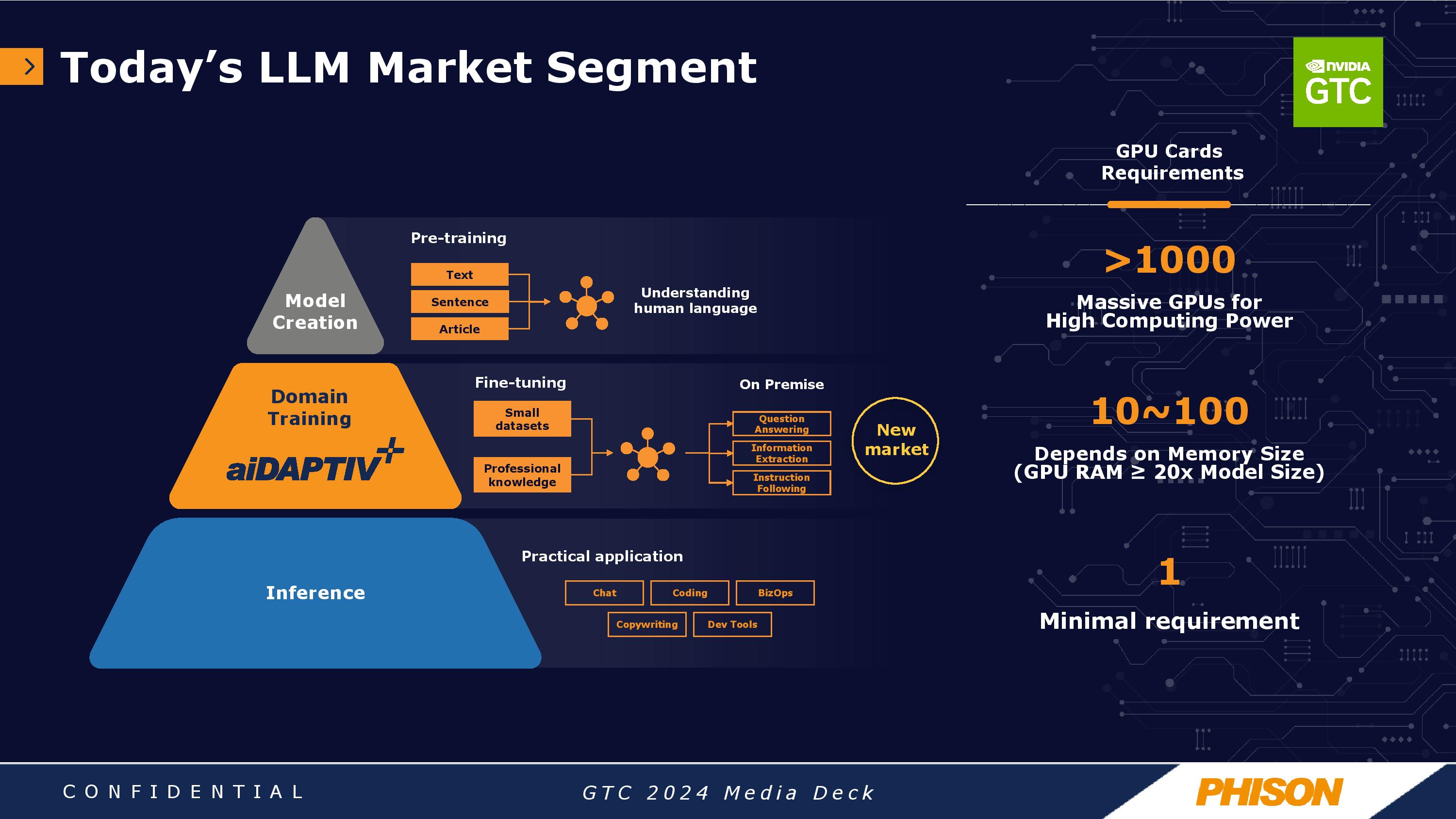

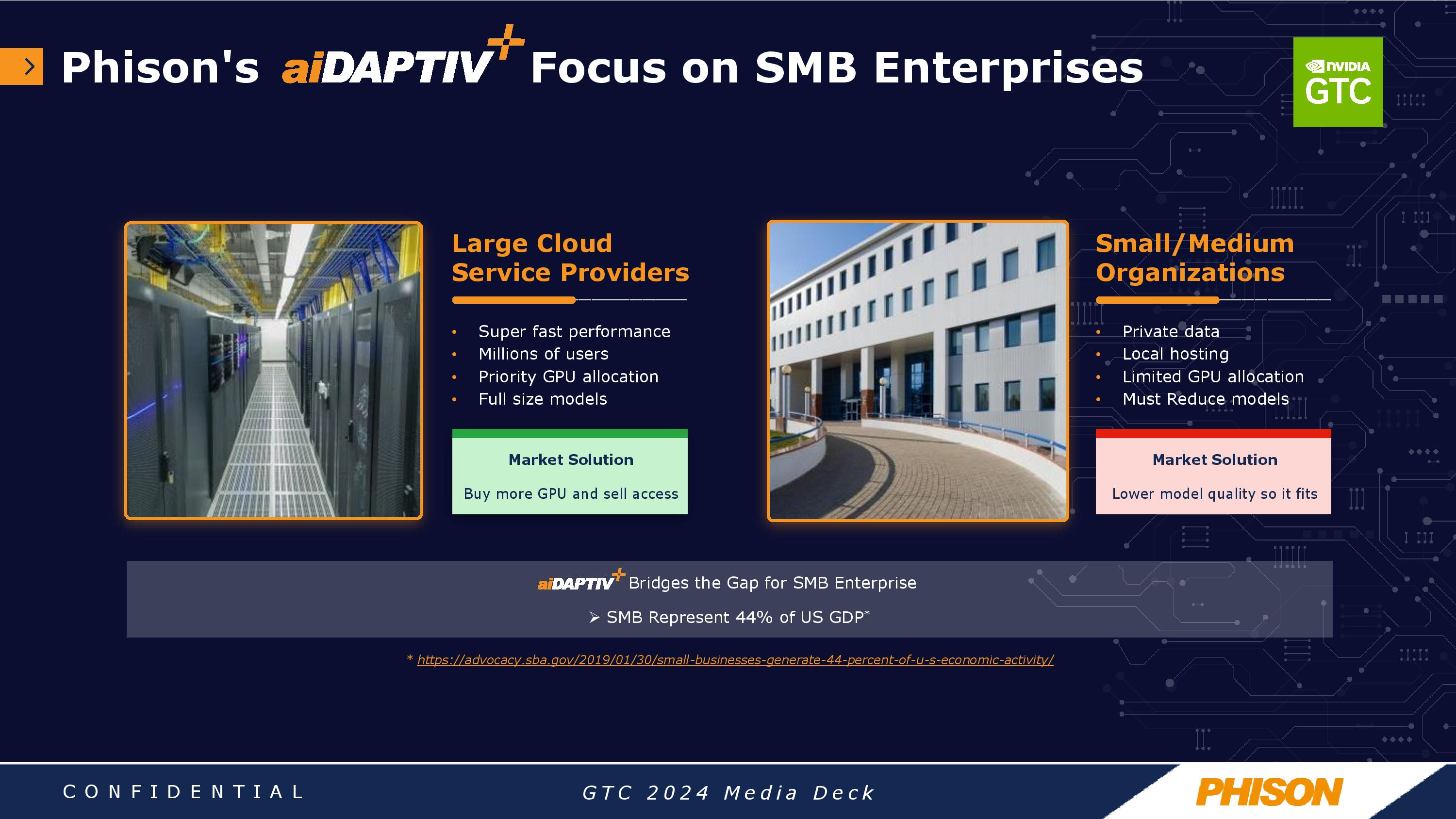

The upside for businesses using this type of deployment is that they can reduce cost, avoid the crushing GPU shortages that continue to plague the industry, and also use open-source models that they train on-prem, thus allowing them to keep sensitive private data in-house. Phison and its partners aim the platform at SMBs and other users who aren't as concerned with overall LLM training times yet could benefit from using off-the-shelf pre-trained models and training them on their own private data sets.

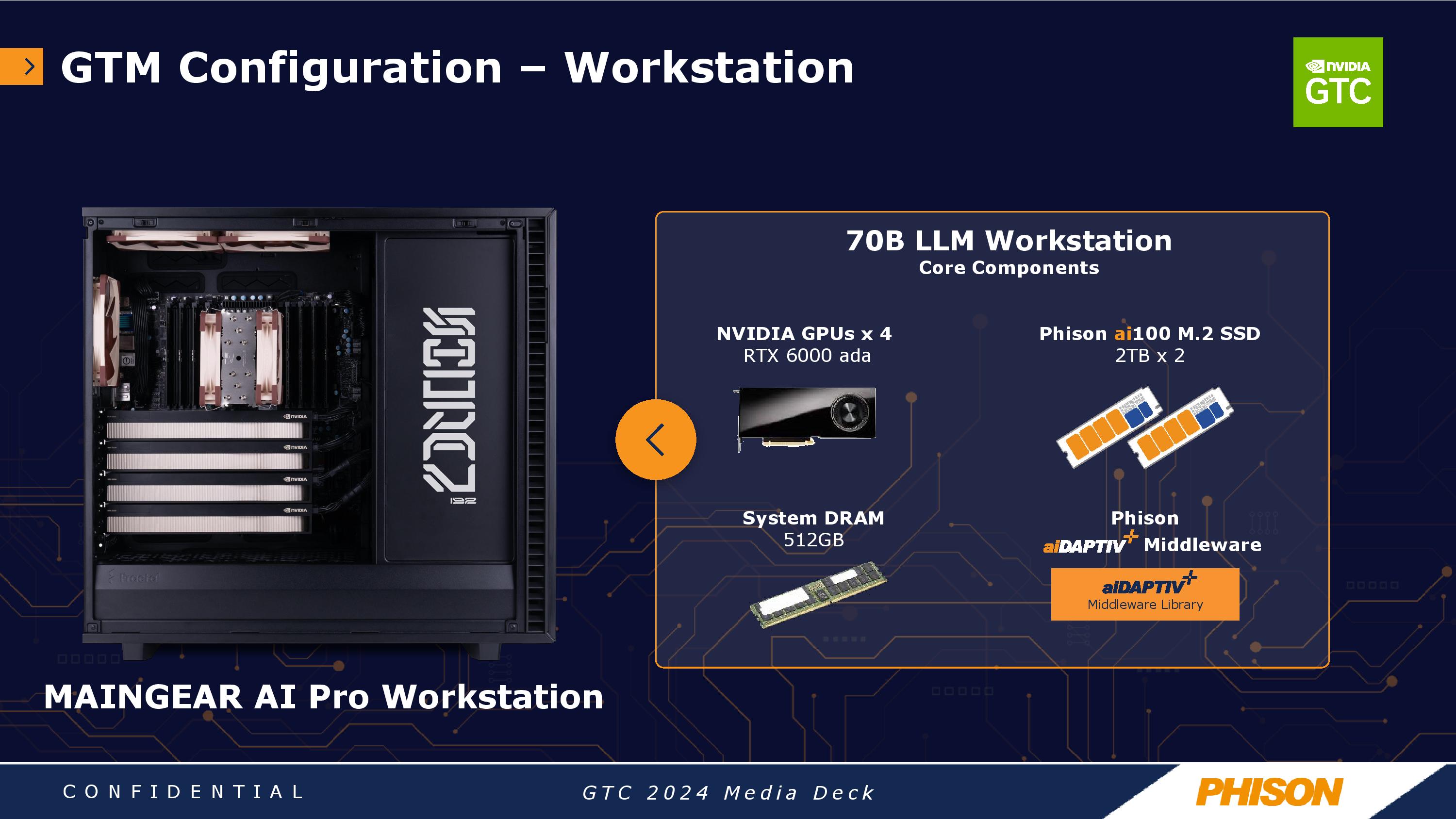

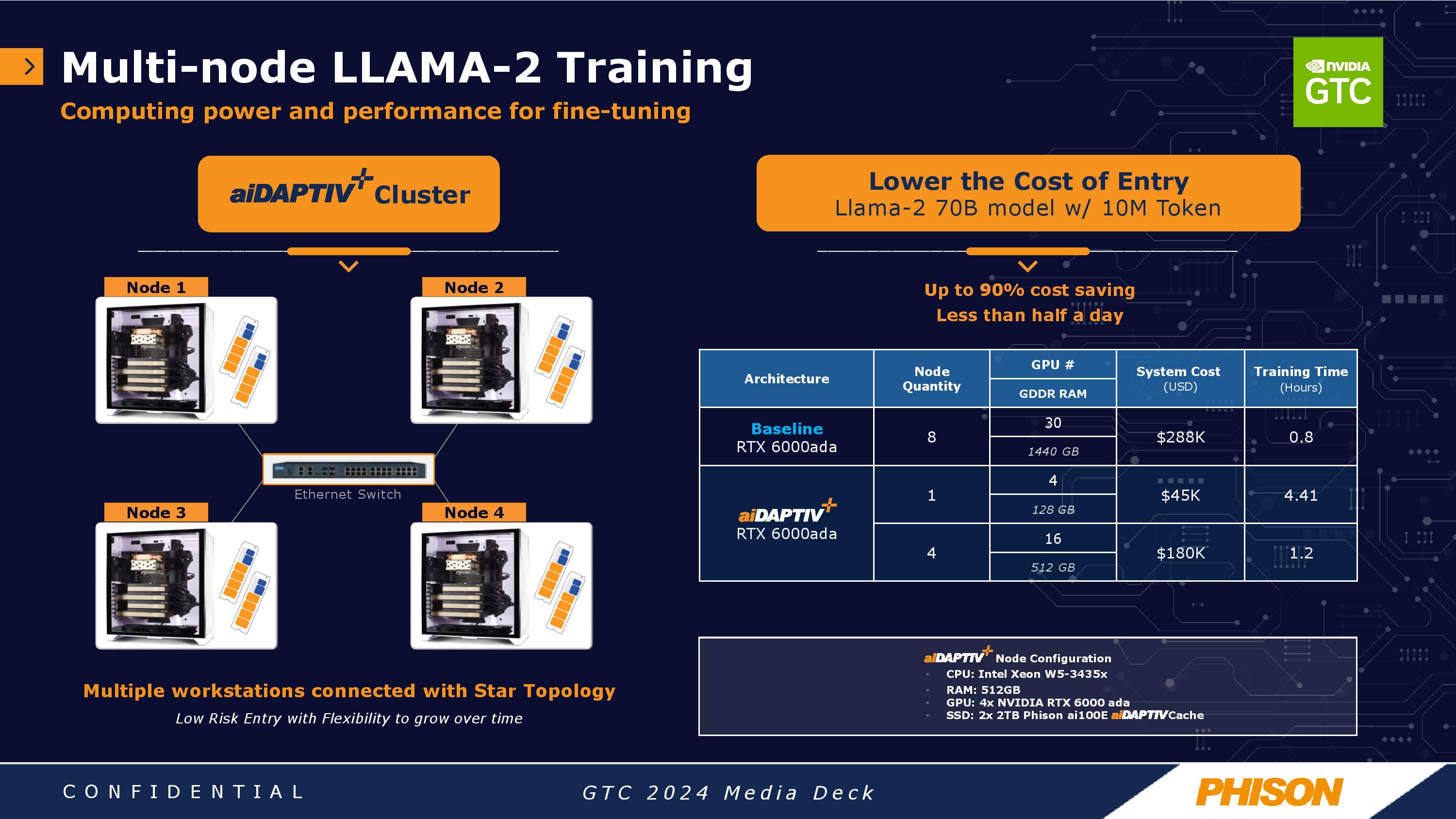

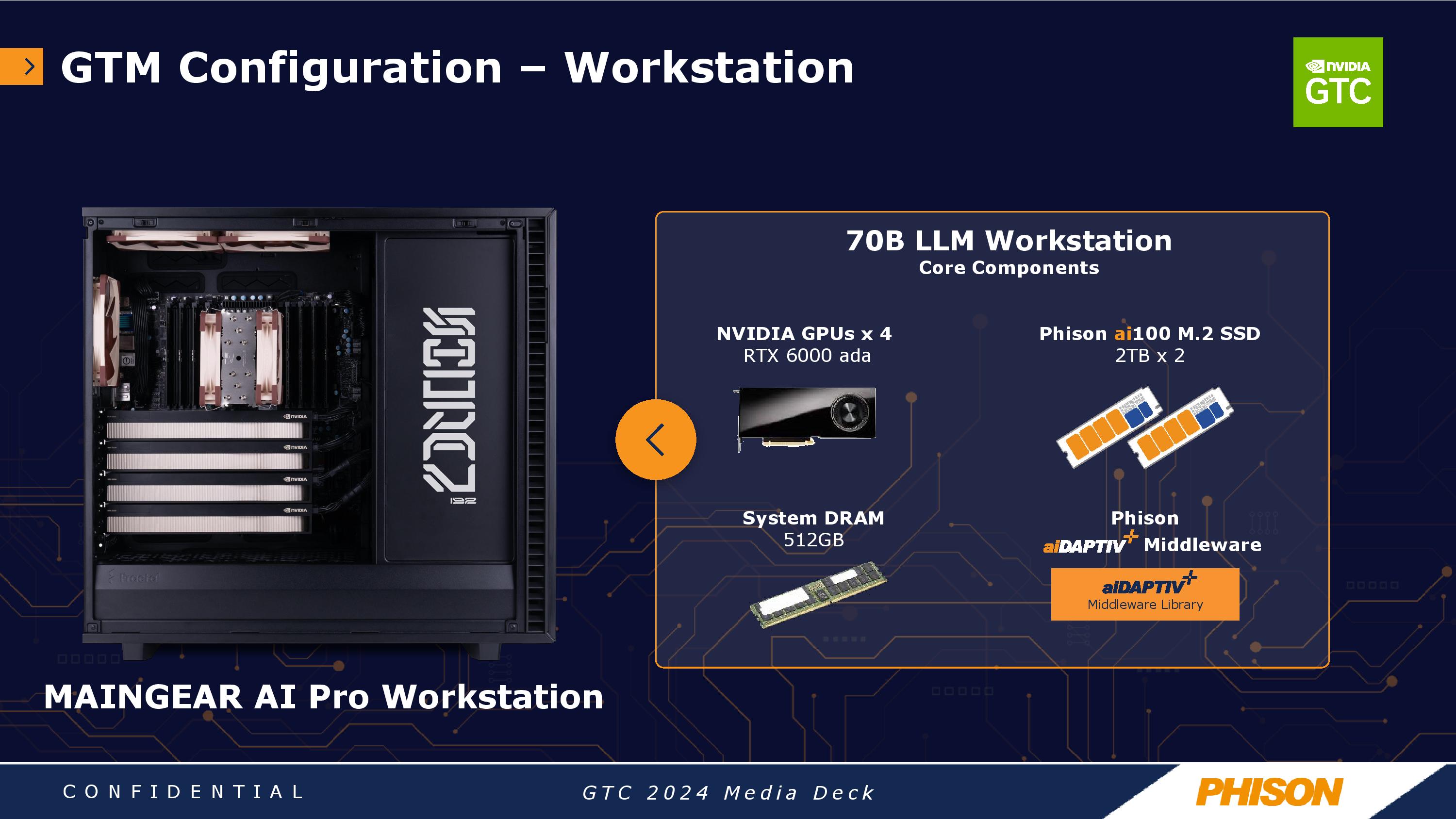

The company's demo served as a strong proof point for the tech, showing a single workstation with four Nvidia RTX 6000 Ada GPUs running a 70-billion-parameter model. Larger AI models generally deliver more accurate results, but Phison estimates that a model of this size typically requires about 1.4TB of VRAM spread across 24 AI GPUs in six servers in a server rack, plus all the supporting networking and hardware.
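Phison doesn't break down its 1.4TB estimate, but dividing it by the parameter count gives a useful sanity check. The sketch below assumes the common mixed-precision Adam accounting of 16 bytes of model state per parameter (fp16 weights and gradients, plus fp32 master weights and two fp32 Adam moments); attributing the remainder to activations and other buffers is our assumption, not Phison's breakdown.

```python
# Back-of-the-envelope check of Phison's ~1.4TB figure for a 70B model.
PARAMS = 70_000_000_000             # 70B-parameter Llama 2
REPORTED_BYTES = 1_400_000_000_000  # Phison's ~1.4TB estimate
NUM_GPUS = 24                       # Phison's GPU count for this workload

# Assumption: mixed-precision training with the Adam optimizer.
# fp16 weights (2) + fp16 grads (2) + fp32 master weights (4)
# + fp32 momentum (4) + fp32 variance (4) = 16 bytes per parameter.
ADAM_MIXED_PRECISION_BYTES = 2 + 2 + 4 + 4 + 4

bytes_per_param = REPORTED_BYTES / PARAMS
model_state_bytes = PARAMS * ADAM_MIXED_PRECISION_BYTES
other_bytes = REPORTED_BYTES - model_state_bytes  # activations, buffers, etc. (assumed)
per_gpu_gb = REPORTED_BYTES / NUM_GPUS / 1e9

print(f"{bytes_per_param:.1f} bytes/param overall")
print(f"{model_state_bytes / 1e12:.2f} TB of weights, gradients, and optimizer state")
print(f"{other_bytes / 1e12:.2f} TB left for everything else")
print(f"{per_gpu_gb:.1f} GB per GPU across {NUM_GPUS} GPUs")
```

Under these assumptions the estimate works out to 20 bytes per parameter, with 1.12TB going to model and optimizer state, and about 58GB per GPU, which fits within an 80GB H100.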

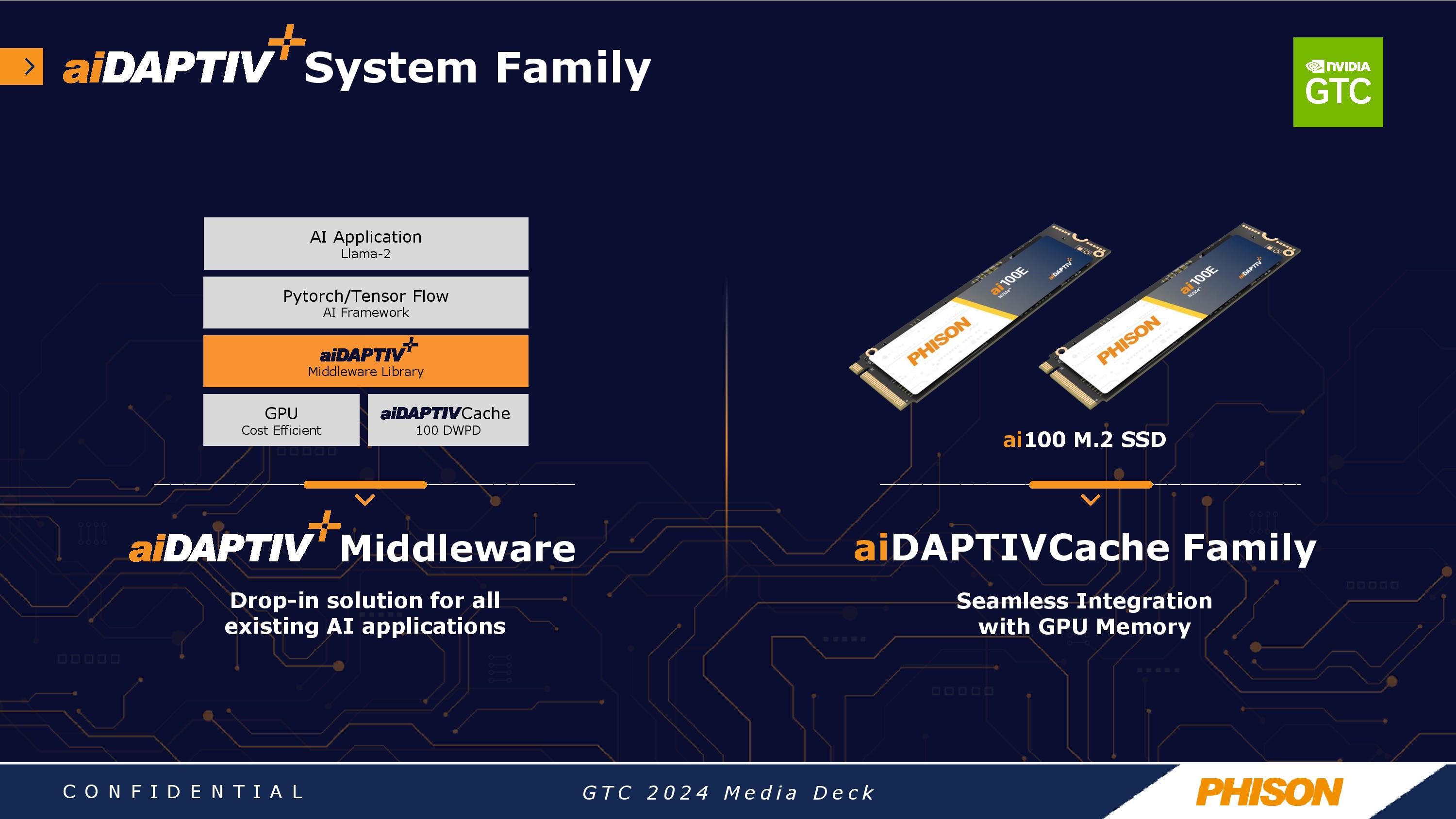

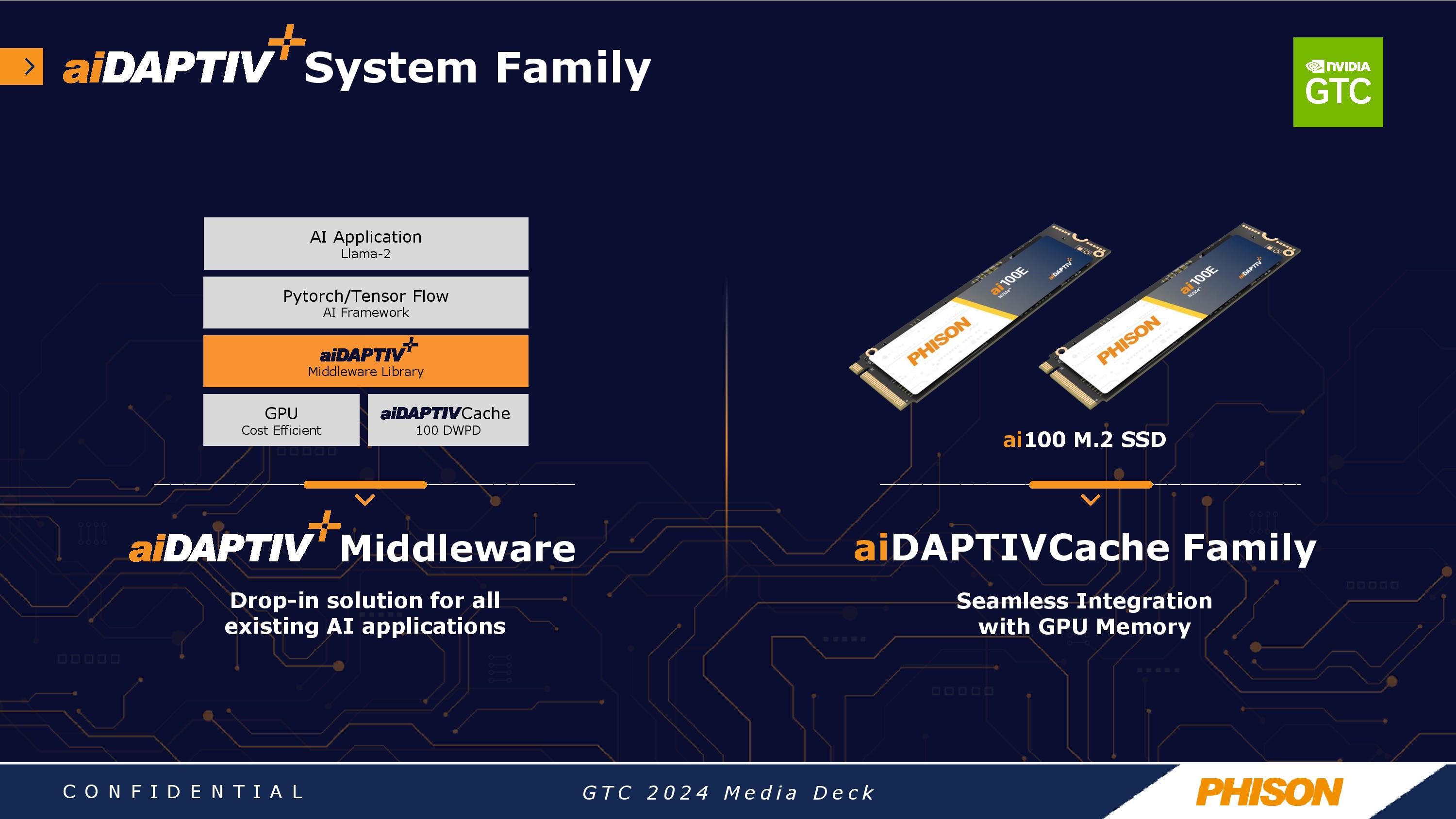

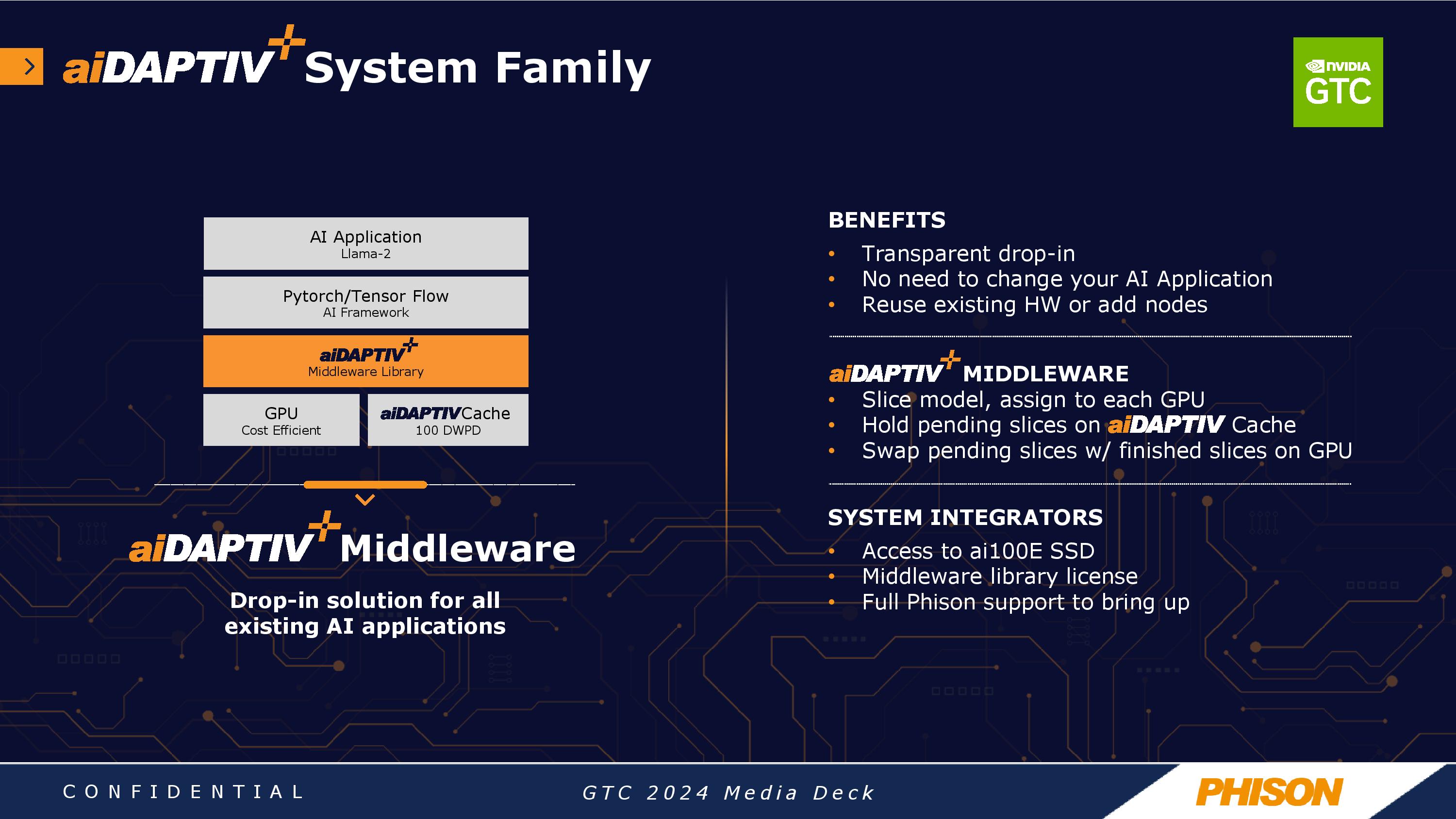

Phison's aiDaptiv+ solution uses a middleware software library that 'slices' off the layers of the AI model that aren't actively being computed and moves them from VRAM to system DRAM. Those layers can then either remain in DRAM if they will be needed soon, or be flushed to the SSDs if they have a lower priority. Layers are recalled into GPU VRAM as they are needed for computation, and each newly processed layer is flushed back to DRAM or SSD to make room for the next layer.
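The layer shuffling described above resembles a multi-level cache. The toy model below is a hypothetical sketch, not Phison's implementation: it counts capacity in whole layers, assumes a simple least-recently-used eviction policy (the class and function names are ours), and tracks how often a layer must be read back from the SSD during a forward pass followed by a backward pass.

```python
from collections import OrderedDict


class TieredLayerStore:
    """Toy VRAM -> DRAM -> SSD hierarchy for model layers (hypothetical).

    Capacities are counted in layers; eviction is least-recently-used.
    """

    def __init__(self, num_layers, vram_slots, dram_slots):
        self.vram = OrderedDict()           # layer id -> None, oldest first
        self.dram = OrderedDict()           # layer id -> None, oldest first
        self.ssd = set(range(num_layers))   # every layer starts on the SSD
        self.vram_slots = vram_slots
        self.dram_slots = dram_slots
        self.ssd_reads = 0                  # layers fetched back from the SSD

    def _demote_from_vram(self):
        # Move the least recently used VRAM layer down to DRAM.
        layer, _ = self.vram.popitem(last=False)
        self.dram[layer] = None
        if len(self.dram) > self.dram_slots:
            # DRAM is full: spill its oldest (lowest-priority) layer to the SSD.
            spilled, _ = self.dram.popitem(last=False)
            self.ssd.add(spilled)

    def fetch(self, layer):
        """Make sure `layer` is resident in VRAM before it is computed."""
        if layer in self.vram:
            self.vram.move_to_end(layer)    # mark as most recently used
            return
        if layer in self.dram:
            del self.dram[layer]            # promote from DRAM
        else:
            self.ssd.remove(layer)          # promote from SSD (slowest path)
            self.ssd_reads += 1
        while len(self.vram) >= self.vram_slots:
            self._demote_from_vram()
        self.vram[layer] = None


def run_pass(store, order):
    for layer in order:
        store.fetch(layer)
        # The GPU would compute this layer here.


store = TieredLayerStore(num_layers=8, vram_slots=2, dram_slots=3)
run_pass(store, range(8))            # forward pass
run_pass(store, reversed(range(8)))  # backward pass
print(store.ssd_reads)
```

With eight layers, two VRAM slots, and three DRAM slots, the forward pass loads every layer from the SSD once (8 reads). The backward pass finds the most recently used layers still in VRAM or DRAM and only returns to the SSD for the three oldest layers, for 11 SSD reads in total. A real system would also overlap these transfers with computation, which this sketch ignores.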

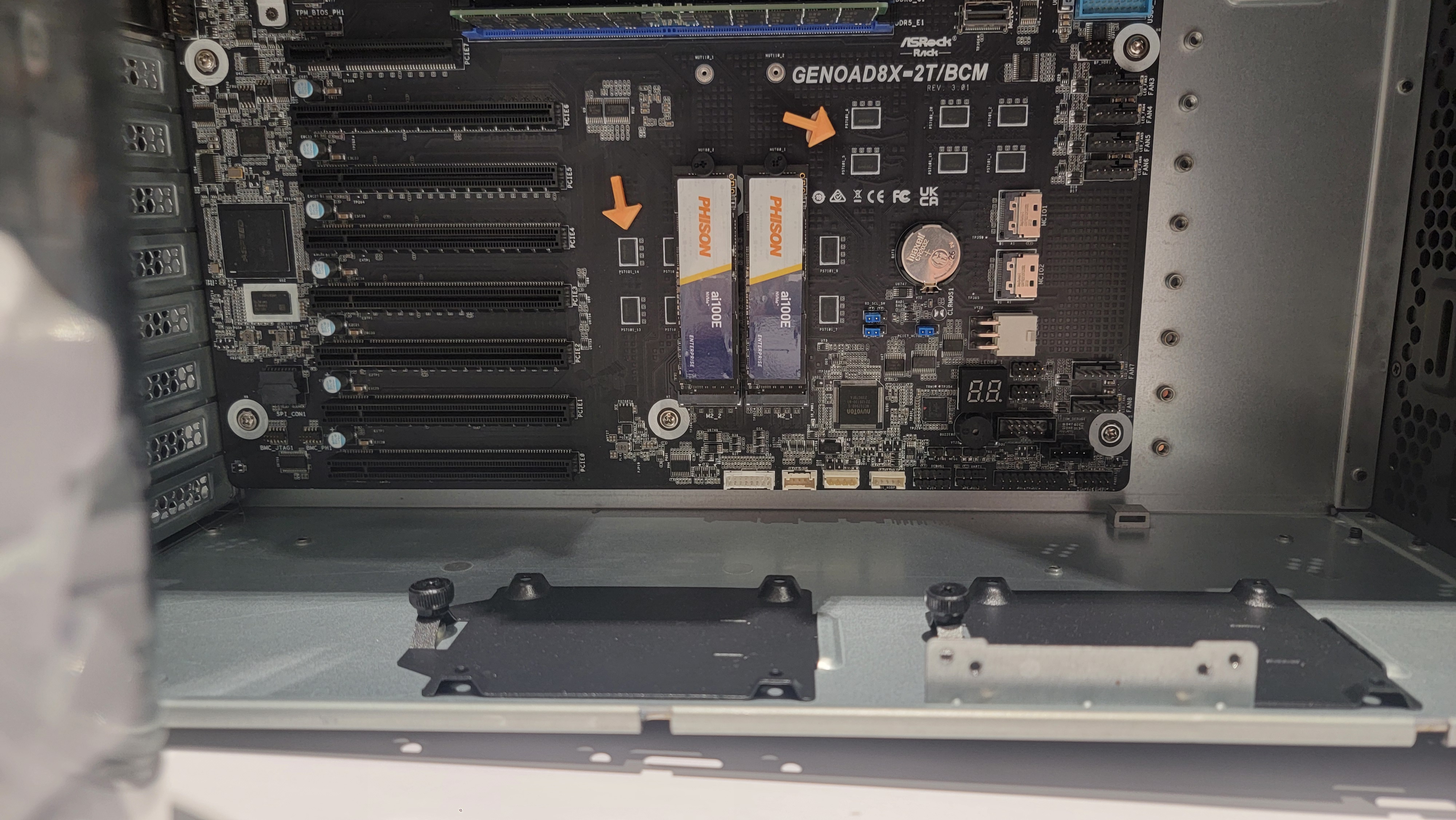

Phison conducted its demo with Maingear's new Pro AI workstation. The demo system comes kitted out with a Xeon w7-3435X processor, 512GB of DDR5-5600 memory, and two specialized 2TB Phison SSDs (more on those below). Maingear offers the Pro AI in multiple configurations, ranging from $28,000 with one GPU to $60,000 with four GPUs. Naturally, that's a fraction of the cost of putting together six or eight GPU training servers with all of the requisite networking. Additionally, these systems run on a single 15A circuit, whereas a server rack requires much more robust electrical infrastructure.
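Adding up the demo system's memory tiers shows why the SSDs matter. This sketch assumes 48GB per RTX 6000 Ada card, treats every capacity as decimal gigabytes, ignores memory used by the OS and the middleware itself, and takes Phison's ~1.4TB figure as the working set that has to be held somewhere.

```python
GB = 10**9

# Approximate capacities of the demo workstation's tiers (decimal GB).
tiers = {
    "vram": 4 * 48 * GB,   # four RTX 6000 Ada cards, 48GB each
    "dram": 512 * GB,      # 512GB of DDR5-5600
    "ssd": 2 * 2000 * GB,  # two 2TB Phison SSDs
}
working_set = 1400 * GB    # Phison's ~1.4TB estimate for the 70B model

fast_tiers = tiers["vram"] + tiers["dram"]
spill_to_ssd = max(0, working_set - fast_tiers)

for name, size in tiers.items():
    print(f"{name:>4}: {size // GB:>5} GB")
print(f"VRAM + DRAM: {fast_tiers // GB} GB")
print(f"Must live on SSD: {spill_to_ssd // GB} GB")
```

Under these assumptions, VRAM plus DRAM holds about 704GB, so roughly 696GB would have to sit on the SSDs at any given time, well within their combined 4TB.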

Maingear is Phison's lead hardware partner for the new platform, but Phison also has many other partners, including MSI, Gigabyte, ASUS, and Deep Mentor, that will offer solutions for the new platform.

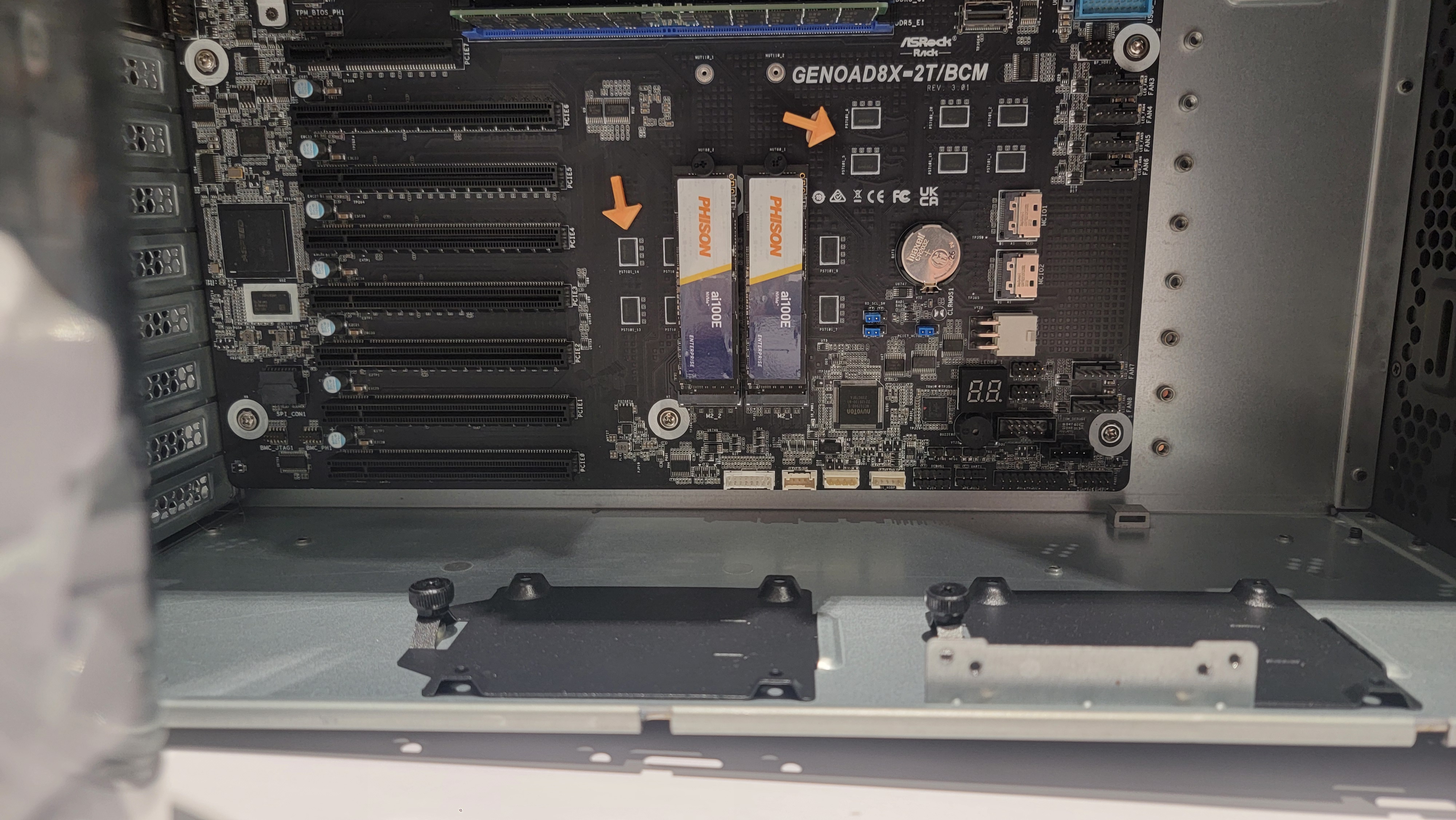

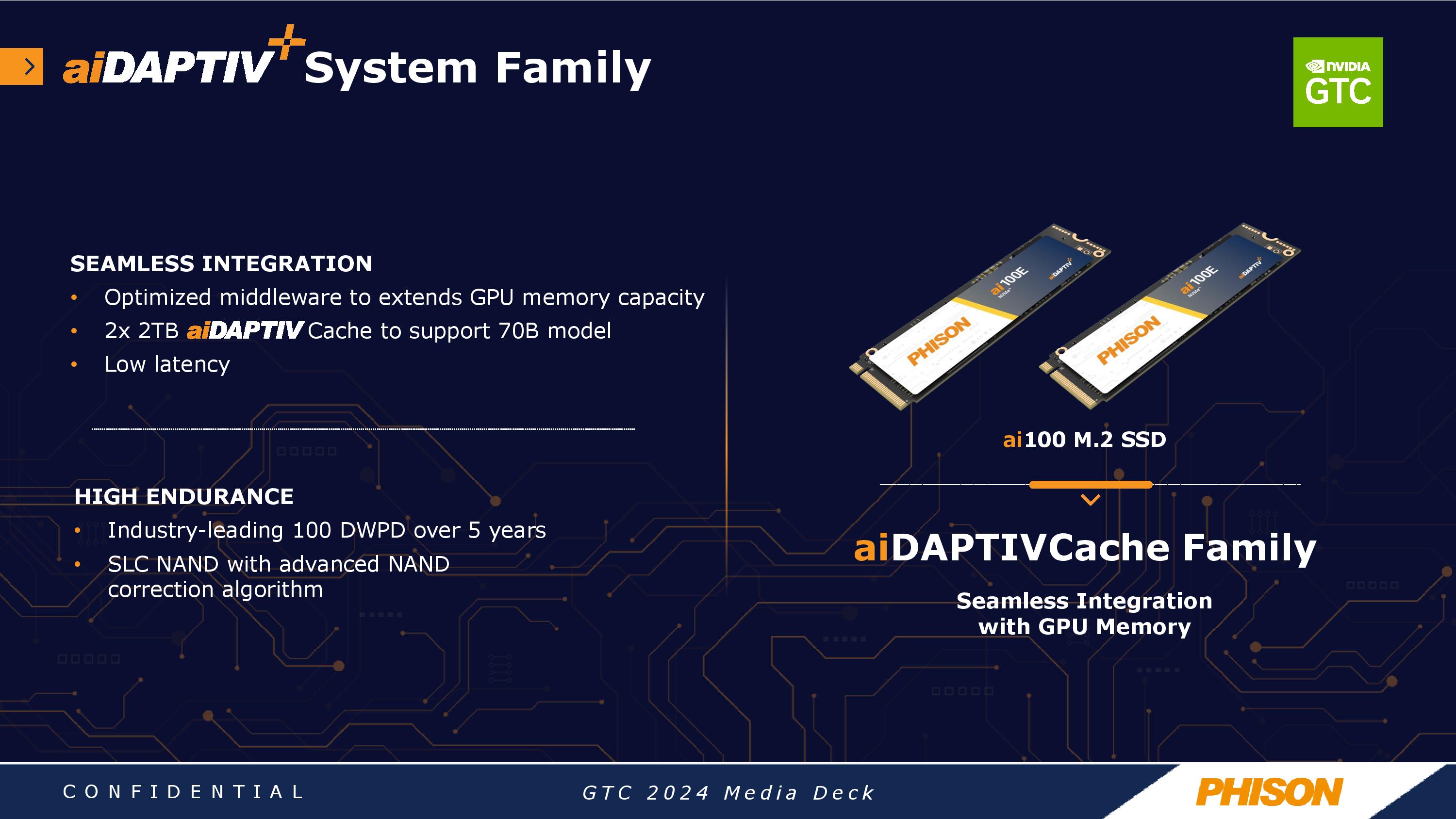

Phison's new aiDaptiveCache ai100E SSDs come in the standard M.2 form factor but are specially designed for caching workloads. Phison isn't sharing deep-dive details on these SSDs yet, but we do know that they use SLC flash to improve both performance and endurance. The drives are rated for 100 drive writes per day (DWPD) over five years, which is exceptional endurance compared to standard SSDs.
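A drive-writes-per-day rating converts directly into total bytes written. The arithmetic below uses the 2TB capacity of the drives in the demo system and the quoted 100 DWPD over five years; it ignores leap days and treats TB as decimal.

```python
CAPACITY_TB = 2     # per drive, as in the Maingear demo system
DWPD = 100          # rated drive writes per day
YEARS = 5
DAYS = 365 * YEARS  # ignores leap days

tb_per_day = CAPACITY_TB * DWPD
total_tb_written = tb_per_day * DAYS
avg_write_gb_per_s = tb_per_day * 1000 / (24 * 60 * 60)

print(f"{tb_per_day} TB per day")
print(f"{total_tb_written:,} TB ({total_tb_written / 1000:.0f} PB) over {YEARS} years")
print(f"{avg_write_gb_per_s:.2f} GB/s sustained, around the clock")
```

That comes to 365PB per drive over the rating period, equivalent to writing about 2.3GB/s continuously for five years.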

As you can see in the slides above, the aiDaptiv+ middleware sits beneath the PyTorch/TensorFlow layer. Phison says the middleware is transparent and doesn't require any modification of AI applications.

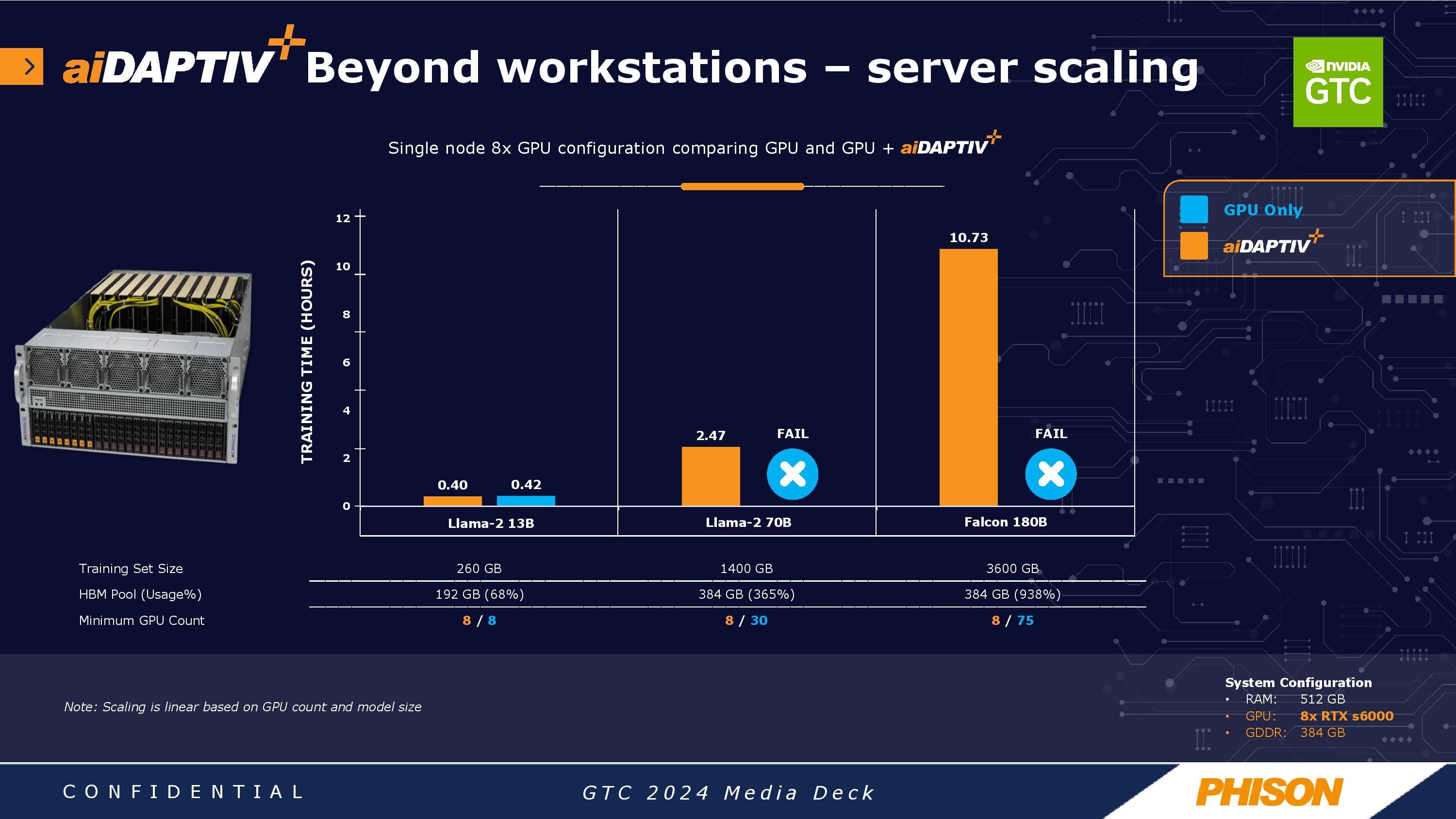

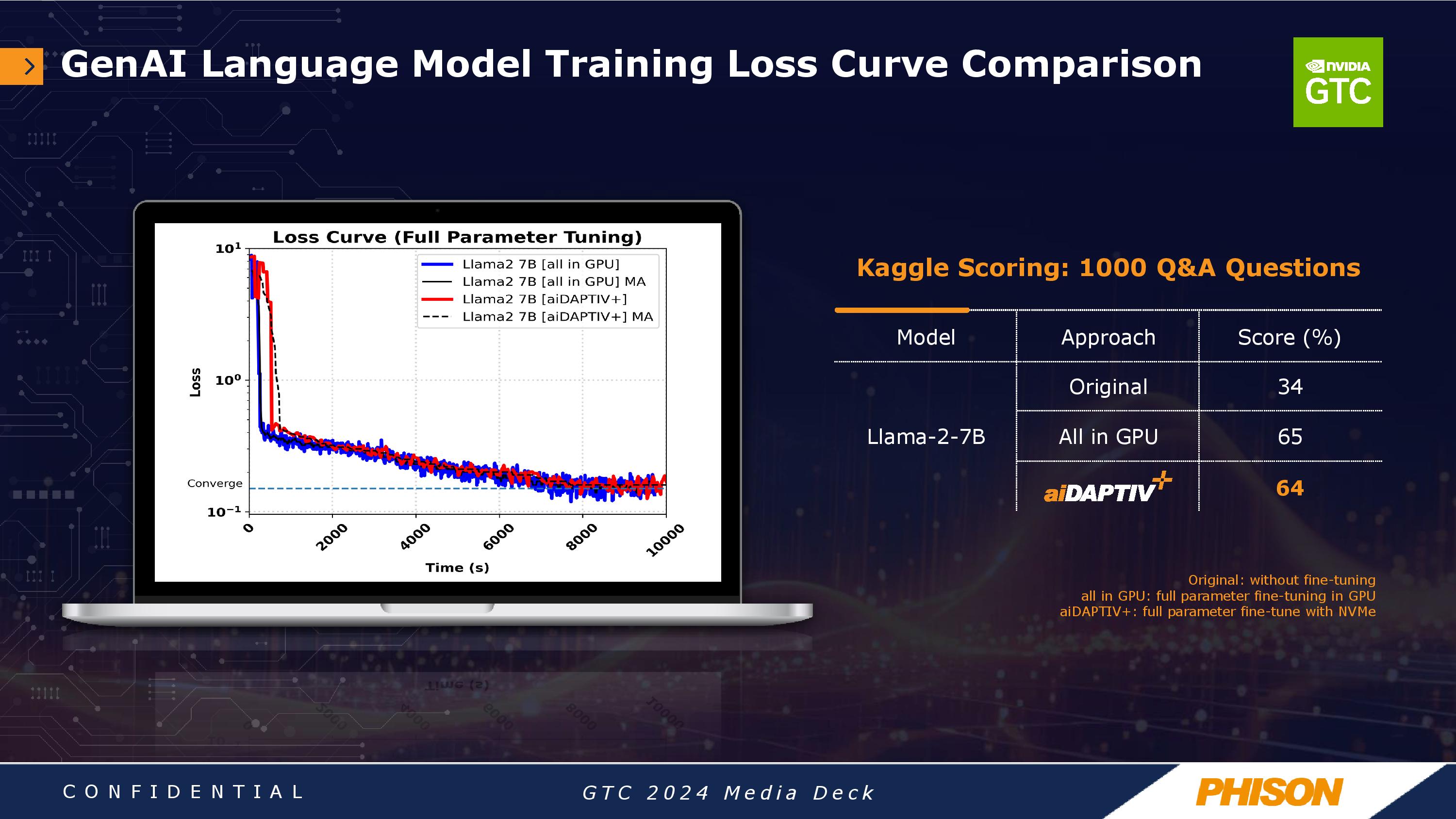

Pulling off this type of training session with a single workstation obviously reduces costs drastically, but it comes at the expense of performance. Phison predicts that this single setup costs one-sixth as much as training a large model with 30 GPUs spread across eight nodes, but models will take roughly four times longer to train. The company also offers a scale-out option that ties together four nodes for a bit over half the cost of the 30-GPU system, which it says cuts training time for a 70B model to 1.2 hours, compared with 0.8 hours on the 30-GPU system.
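One way to compare these options is training throughput per dollar relative to the 30-GPU baseline. The sketch uses Phison's stated ratios (one-sixth the cost at four times the training time, and 1.2 versus 0.8 hours for the four-node option). Because the article gives the four-node cost only as "a bit over half," the 0.55 used below is a hypothetical placeholder, and the metric ignores power, staffing, and the value of getting results sooner.

```python
from fractions import Fraction


def throughput_per_dollar(cost_fraction, time_multiple):
    """Training throughput per dollar, relative to the baseline (baseline = 1).

    Throughput scales as 1 / time_multiple; cost is a fraction of the baseline's.
    """
    return (1 / Fraction(time_multiple)) / Fraction(cost_fraction)


# Single aiDaptiv+ workstation: 1/6 the cost, ~4x the training time.
single = throughput_per_dollar(Fraction(1, 6), 4)

# Four-node scale-out: 1.2 h vs. 0.8 h for the 30-GPU system.
scale_out_slowdown = Fraction("1.2") / Fraction("0.8")
ASSUMED_SCALE_OUT_COST = Fraction("0.55")  # hypothetical; article says "a bit over half"
scale_out = throughput_per_dollar(ASSUMED_SCALE_OUT_COST, scale_out_slowdown)

print(f"single workstation: {float(single):.2f}x baseline throughput per dollar")
print(f"four-node slowdown: {float(scale_out_slowdown):.2f}x")
print(f"four-node scale-out: {float(scale_out):.2f}x baseline throughput per dollar")
```

Under these assumptions, the single workstation delivers about 1.5 times the baseline's training throughput per dollar, while the four-node option trains 1.5 times slower than the 30-GPU system but still comes out ahead per dollar. Exact fractions avoid floating-point noise in ratios such as 1.2/0.8.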

The move marks Phison's expansion from its standard model of creating SSD controllers and retimers to providing new hybrid software and hardware solutions that improve the accessibility of AI LLM training by vastly reducing the overall solution's costs. Phison has a number of partners to bring systems to market for the new software, and we expect to see more announcements in the coming months. As always, the proof of performance will be in third-party benchmarks, but with systems now filtering out to Phison's customers, it probably won't be long before we see some real-world examples.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

derekullo:

https://cdn.mos.cms.futurecdn.net/BbG9F5vqyJ45LkuymbE2j-970-80.jpg

Since the cost of each system is a fixed cost (besides electricity and maintenance ... and IT people gotta pay them!) a few questions come up.

Namely how would you convert time saved training an AI into a dollar amount?

Do the power savings of only having to run an aiDaptiv+ 4-node / 16-GPU system offset whatever profit is gained or time saved by running the baseline 8-node / 30-GPU system instead, given that aiDaptiv+ runs 50% slower?

Or in other words, how soon, if ever, would you recoup the extra $108k spent on the baseline 8-node / 30-GPU system over the aiDaptiv+ 4-node / 16-GPU system?

slightnitpick:

This is kind of assuming the purchaser would only want to train one thing at a time, and/or that there is sufficient supply to fill all of the needs.

Or that this opens things up a bit more for disruption given that it has made the machine learning cheaper, and thus more accessible without renting time on someone else's nodes.

For those who really want to push the envelope, it will also make things more scalable, I assume. It's pretty nice having terabytes of accessible and speedy-enough memory while only using gigabytes of RAM. Though I'm not even a dilettante, I am under the impression that addressable RAM is a limitation of the CPU/GPGPU.

usertests:

RAM is a lot cheaper than GPUs + VRAM. It would be interesting to see what, e.g., Strix Halo + 256-512 GB of RAM could accomplish.