AMD shows off DX12-related rendering advances that make game engines more efficient and less dependent on the CPU -- demo shows a 64% improvement

The CPU is being used less and less for rendering, which should be good for performance.

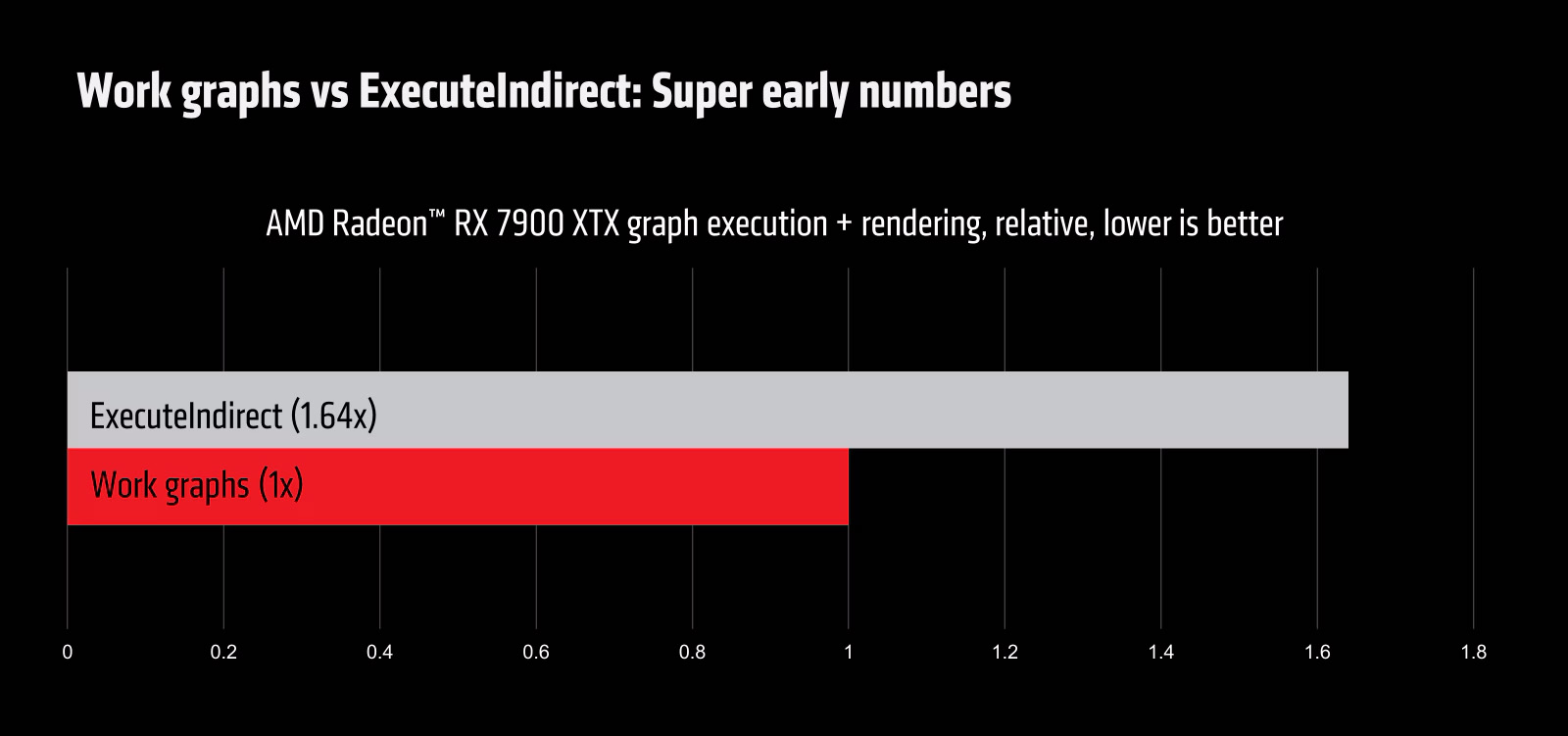

At GDC 2024, AMD announced it's adding draw calls and mesh nodes as part of Work Graphs — moving these features from the CPU to the GPU, with the aim of improving gaming performance. AMD showed off benchmark numbers of Work Graphs with mesh shaders running on an RX 7900 XTX, revealing a 64% performance improvement compared to normal Work Graphs without mesh shaders applied.

Before we dive into these features, what exactly are Work Graphs? They are a new GPU-driven rendering design built into the Direct3D 12 API that enables the GPU to generate work for itself. In Work Graph-enabled applications, this means that certain parts of the 3D rendering pipeline can be controlled and rendered on the GPU, independently of the CPU, reducing potential bottlenecks and improving efficiency and performance.

Work Graphs can't run everything on the GPU (yet), but the feature can already execute dispatch calls, shaders, and node executions, all of which are traditionally controlled via the CPU.
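As a purely conceptual illustration of a GPU "feeding itself," here is a toy Python model of a graph in which each node can queue work for other nodes. Nothing here is the Direct3D 12 API: the function and node names (`run_work_graph`, `cull_node`, `draw_node`) are hypothetical, and the leaf node only stands in for the kind of node that would launch a shader.

```python
from collections import deque

def run_work_graph(nodes, entry, first_record):
    """Drain a queue of (node_name, record) pairs; each node may emit more work."""
    queue = deque([(entry, first_record)])
    drawn = []
    while queue:
        name, record = queue.popleft()
        # A node returns a list of (target_node, record) pairs, or [] for a leaf.
        for target, out in nodes[name](record, drawn):
            queue.append((target, out))
    return drawn

def cull_node(record, drawn):
    # Hypothetical culling step: forward only the visible objects.
    return [("draw_node", obj) for obj in record if obj["visible"]]

def draw_node(record, drawn):
    # Leaf node: stands in for a node that would launch a shader.
    drawn.append(record["name"])
    return []

nodes = {"cull_node": cull_node, "draw_node": draw_node}
scene = [
    {"name": "rock", "visible": True},
    {"name": "tree", "visible": False},
    {"name": "hut", "visible": True},
]
print(run_work_graph(nodes, "cull_node", scene))  # ['rock', 'hut']
```

The point of the sketch is only that the caller submits one entry record and the follow-up work is produced inside the graph, rather than being issued step by step from outside.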

According to AMD's GPUOpen blog, Mesh Nodes are a new extension of Work Graphs that introduce a new kind of leaf node, which drives a mesh shader and allows a normal graphics PSO to be referenced from the work graph. Mesh Nodes allow a work graph to feed directly into a Mesh Shader, "turning the work graph itself into an amplification shader on steroids."

In layman's terms, integrating mesh shaders with Work Graphs has enabled AMD to make mesh shaders substantially more efficient for the reasons discussed above. The more 3D rendering "features" get ported to Work Graphs, the more efficient the 3D rendering pipeline becomes.

"'Mesh nodes' really close the loop in terms of providing an end-to-end replacement for Execute Indirect and moving the GPU programming model forward," writes AMD architect Matthäus Chajdas. "Everything can move into a single graph and execute in a single dispatch, making it very easy to compose large applications from small bits and pieces. Moreover, problems like PSO switching, empty dispatches, and buffer memory management just go away, making full GPU-driven pipelines accessible to many more applications and use cases than before."

AMD also introduced draw calls as a function that can be used with Work Graphs. Draw calls in Work Graphs can be processed asynchronously to boost rendering efficiency.


Aside from the benchmark, AMD also showed off a live demo of a 3D engine running Work Graphs combined with the newly announced mesh shading and draw call functionality.

These new features continue to expand the functionality of Work Graphs, enabling the GPU to do more rendering tasks by itself, independently of the CPU. In the future, we could see a video game rendered entirely on the GPU, with only the game logic left to the CPU.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

Metal Messiah.

"AMD showed off benchmark numbers of Work Graphs with mesh shaders running on an RX 7900 XTX, revealing a 64% performance improvement compared to normal Work Graphs without mesh shaders applied." "demo shows a 64% improvement"

Oh no, that's not correct. You got it wrong.

In fact the traditional "ExecuteIndirect" method took 64% longer to render a frame compared to Work Graphs, so in other words, the new method is only 39% faster.

So it's not a 64% performance improvement, rather a mere 39%. ExecuteIndirect was 64% slower. :)

Another point to note in this early demo is that AMD also showcased how Work Graphs keep the API-level feature "multi-draw indirect" from being "underutilized".

https://gpuopen.com/wp-content/uploads/2024/03/workgraphs-drawcalls-chart.png

digitalgriffin

Math checks out

1/1.64 - 1 = -0.39, i.e. 39% less time per frame. But that is significant. So original draw time (CPU) * 0.6098 = new draw time (GPU).

new draw time (GPU) * 1.64 = old draw time (CPU)
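To make the two readings of the 1.64x figure concrete, here is a minimal Python sketch. It assumes only the ratio quoted in this thread (the old method taking 1.64 times as long per frame as the new one); the function names are made up for illustration.

```python
def time_reduction(slowdown_ratio):
    """Fraction of frame time saved when the old method takes slowdown_ratio x as long."""
    return 1.0 - 1.0 / slowdown_ratio

def throughput_gain(slowdown_ratio):
    """Fractional increase in frames finished per unit of time."""
    return slowdown_ratio - 1.0

ratio = 1.64  # old frame time / new frame time, as quoted in the thread
print(f"frame time reduced by {time_reduction(ratio):.1%}")   # 39.0%
print(f"throughput increased by {throughput_gain(ratio):.1%}")  # 64.0%
```

Both numbers describe the same measurement: one is expressed relative to the old frame time, the other relative to the old frame rate.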

Interesting claim. But as it is now, draw engines don't take advantage of all the CPU threads. If you have 24 threads, you can have 24 draw calls being processed. But that just doesn't happen today. And part of this is because draw calls are what's known as composited, i.e. Draw Right Arm, Draw Left Arm, Draw Body, Draw Arm, Draw Weapon, Draw Clothes => Assemble, Draw entire character. This is what a draw tree looks like. Thus the last step is dependent upon the first 6, creating a delay. It takes a very talented developer who is good with threads to keep all available threads full.
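The dependency shape described above can be sketched in Python with a thread pool. This is an assumption-laden toy, not how any real engine or driver records draw calls: `draw_part`, `draw_character`, and the part list are hypothetical, and Python threads only illustrate the structure, not real parallel speedup.

```python
from concurrent.futures import ThreadPoolExecutor

# Illustrative part list; the names are placeholders.
PARTS = ["right arm", "left arm", "body", "head", "weapon", "clothes"]

def draw_part(name):
    # Stand-in for recording one independent draw call.
    return f"draw({name})"

def draw_character(parts, workers=6):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # The part draws do not depend on each other, so they can overlap.
        commands = list(pool.map(draw_part, parts))
    # The assemble step depends on every part, so it runs only after all finish.
    return commands + ["assemble(character)"]

print(draw_character(PARTS))
```

However many workers are available, the final step cannot start until the slowest part draw has finished, which is the delay the comment refers to.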

Now admittedly the GPU scheduler can dispatch many more draw calls at once. Basically you are moving the CPU drivers to the GPU and executing there. But until the calling threads are properly written, it's much ado about nothing.

Metal Messiah.

Also, it seems too early to say how the Work Graphs API will translate to gaming performance improvements. The other issue is that it'll be up to game developers to implement the API, which will likely take time.

Let's not forget that MS launched DX12 Mesh Shaders a few years ago. And right now there is only ONE game that takes advantage of them, i.e. "Alan Wake 2".

The game could be played on non-supported hardware/GPUs, but the performance was god-awful, 'cause the vertex shader pipeline, i.e. the traditional fallback geometry pipeline, was busted. BTW, there's no point in adding a mesh shader pipeline to an old game, as old games are not performance limited by the vertex processing stage.

Also, the developers would need to create a whole set of polygon-rich models and worlds, and then ensure there's a large enough user base of hardware out there, to justify the time spent on going down the mesh shader route.

Most games don't use huge amounts of triangles to create the world. And it's not surprising that developers kept this aspect quite light, instead using vast numbers of compute and pixel shaders to make everything look good, at least for the most part.

So yeah, even though 'Work Graphs' sounds cool, it's not certain that many developers will use it. Unless, of course, the next PlayStation and Xbox consoles support it.

EDIT:

Thanks for the input, digitalgriffin! Just saw your comment while posting this. :)

thisisaname

Just what I was thinking, it sounds great but they have to get programmers to use it.

Alvar "Miles" Udell

Would have been far better had it released with DX12 in 2015, when CPUs were comparatively weak. Something tells me that with a "modern" CPU (say Zen 3 or Intel equivalent or newer) the performance gain will be smaller, if even statistically significant, especially on AMD's unified design vs Nvidia's dedicated design.

gruffi

That's wrong. It's indeed a 64% performance improvement. It's actually simple math. I don't know why this is so hard for people to understand. If lower means better, as is usually the case with times, then just use the reciprocal of the values.

In the same time ExecuteIndirect can finish only ~60.98% of the task (100%/1.64). Now normalize that to 100%: 100%*100%/60.98% = 164%. So, Work Graphs shows a 64% performance improvement compared to ExecuteIndirect.

ien2222

Actually no.

And it's easy math to check using the equation original - (original x improvement %), which gives you the value of the better performance. So, if we use 64% we'd have 1.64 - (1.64 x 64%) = 0.59, which is incorrect. Using 39% we have 1.64 - (1.64 x 39%) = 1, which is correct.

A simpler way of showing that 64% is wrong: we're working on a scale of 0-100%, where 0% improvement gives you the same value as the original and 100% improvement means the value is 0. A 50% improvement should give us half the original, 1.64/2 = 0.82. Since 64% > 50%, a 64% improvement should give even less than that, which is incorrect, as we know the correct value is 1.

TechyIT223

It would be better if the devs show some actual game demo footage of this new tech.

Wanna see how much of a difference this makes in real world gameplay 😉

gruffi

Actually yes. It's 64% more performance, not 39%. Your formula is wrong. Generally calculating the improvement, it should be "improved - (original x improvement%) = 1" or "improved = 1 + (original x improvement%)". Subtracting "original x improvement%" from the original makes absolutely no sense.

100% improvement also doesn't mean the value is 0. In this case 100% improvement would mean that the original value would have halved. If the value was 0, or at least close to 0, then the improvement would need to be infinite.

But I already showed the correct calculation before. Your calculations are nonsense. But it shows what I said before. A lot of people don't understand this math.

ien2222

*sigh*

I went to college for Mathematics and Computer Science, heck, nearly ended up with minors in physics, chemistry, music, and economics, and stuff like this is what I've done for work among other things.

This is my wheelhouse.

We aren't strictly talking about how much better one algorithm is vs the other in the absolute sense where we can have an algorithm that's 10000000000000000000000000000000% faster than the other, we're talking about the improvement in results. In this context what I said is correct, along with Metal and Griffin.

Edit: The problem is most everyone is only used to some value increasing as performance goes up, i.e. using A gives us 100 units and going with B gives us 164 units. In this case it's a 64% increase with B. Easy enough to figure out, as it's (improvement - original)/original x 100%.

However, when increased performance results in decreased units, you calculate it differently. Generally the limit to which it can be reduced is 0 or some positive number, and the equation for that is: performance increase% = (original - improvement)/original x 100%. This is the equation from which I derived the one in my first post:

(O - i)/O * 100% = PI%

(O - i)/O = PI%/100%

O - i = O * (PI%/100%)

-i = O * (PI%/100%) - O

i = O - O * (PI%/100%)

improved value = original value - (original value * performance improvement%)
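The two percentages argued over in this thread can be related by one identity. What follows is a sketch of the algebra, assuming only that $k$ is the old-to-new frame time ratio (1.64 here); the symbols $k$, $r$, and $s$ are introduced for illustration and do not come from AMD's material.

```latex
\begin{aligned}
k &= \frac{t_{\text{old}}}{t_{\text{new}}} = 1.64 \\
r &= \frac{t_{\text{old}} - t_{\text{new}}}{t_{\text{old}}} = 1 - \frac{1}{k} \approx 0.39
  && \text{(reduction in frame time)} \\
s &= \frac{1/t_{\text{new}} - 1/t_{\text{old}}}{1/t_{\text{old}}} = k - 1 = 0.64
  && \text{(increase in frames per unit time)} \\
r &= \frac{s}{1+s}, \qquad s = \frac{r}{1-r}
\end{aligned}
```

Under these definitions, 39% and 64% are the same result measured against different baselines: $r$ is relative to the old frame time, $s$ is relative to the old frame rate.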