Intel CEO says Nvidia’s AI dominance is pure luck — Nvidia VP fires back, says Intel lacked vision and execution

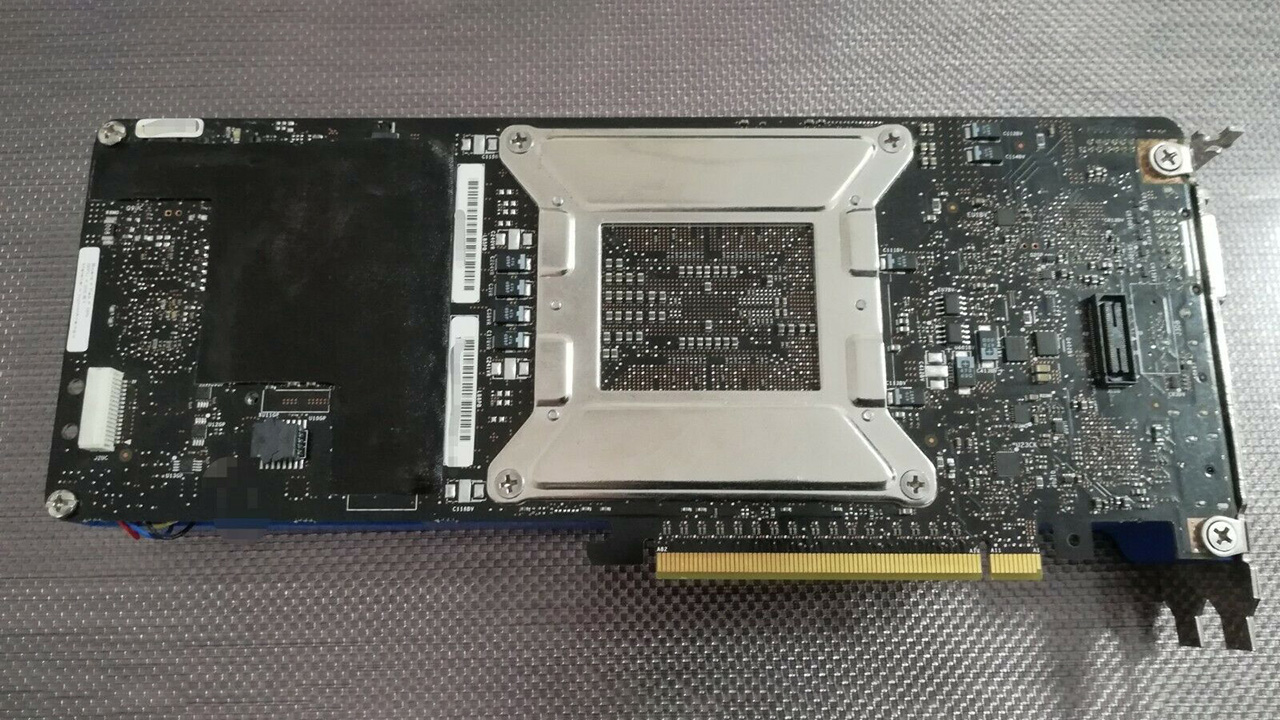

If only Intel hadn’t killed his Larrabee project, laments Pat Gelsinger.

Intel CEO Pat Gelsinger has publicly characterized Nvidia’s AI industry success as being purely accidental. In a wide-ranging interview with Gelsinger, hosted by the Massachusetts Institute of Technology (MIT), the Intel boss told attendees that Nvidia CEO Jensen Huang “got extraordinarily lucky.” He went on to lament Intel giving up the Larrabee project, which could have made Intel just as ‘lucky,’ in his view. However, the VP of Applied Deep Learning Research at Nvidia, Bryan Catanzaro, took to Twitter / X earlier today to dismiss Gelsinger’s hot take on the status quo in the AI hardware industry, saying Intel lacked the vision and execution to succeed in its prior initiatives.

The Intel CEO’s highlighting of ‘lucky’ Nvidia came in response to a question about 17 minutes into the video recorded by MIT. Daniela Rus, a Professor of Electrical Engineering and Computer Science and Director of the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT, asked, “What is Intel doing for the development of AI hardware, and how do you see that as a competitive advantage?”

Gelsinger began his answer by talking about Intel’s mistakes. According to the current CEO of Intel, the firm’s fortunes took a dive when he left, but it is again on the path to glory. He recounted his 11 years in the wilderness (at EMC, then VMware) and the sad fate that met Larrabee.

“When I was pushed out of Intel 13 years ago, they killed the project that would have changed the shape of AI,” Gelsinger told his MIT audience, referencing the end of development work on Larrabee. Meanwhile, Jensen Huang is characterized as a hard worker who was single-mindedly pursuing advances in graphics but lucked out when AI acceleration began to be a much sought-after computing feature.

Jensen Huang “worked super hard at owning throughput computing, primarily for graphics initially, and then he got extraordinarily lucky,” stated Gelsinger. He illustrated his point by asserting that when the green shoots of AI first popped up, Nvidia “didn't even want to support their first AI project.”

Continuing to lament the loss of Larrabee, or any kind of similar development thrust at Intel, Gelsinger explained Nvidia’s dominance came partly from the fact that “Intel basically did nothing in the space for 15 years.” Don’t worry, though, as Gelsinger heralds his own return, “I come back, I have a passion, okay, we're going to start showing up in that space.”

As well as developing hardware to accelerate AI, Gelsinger is keen on what he calls his number one strategy of democratizing AI. New hardware alone isn’t the answer, he says; it is also vital to “eliminate proprietary Technologies like CUDA.” In the not-too-distant future, the Intel CEO sees this democratizing force making high-performance AI available on every machine, from modest home users to developers, enterprises, and super-powerful servers.


Interestingly, Gelsinger predicts that, largely thanks to AI, we are on the precipice of “one to two decades of sheer innovation.” He reckons that we will get AI to tap resources far beyond the large but rather simple data sets that are being used now (mostly textual). Moreover, Intel is busy executing its plans and will “build lots of fabs, so we can build lots of compute” to address AI.

Nvidia says no

Nvidia’s VP of Applied Deep Learning Research, Bryan Catanzaro, issued a concise dismissal of Gelsinger’s assertions about Nvidia benefitting from simple luck.

I worked at Intel on Larrabee applications in 2007. Then I went to NVIDIA to work on ML in 2008. So I was there at both places at that time and I can say: NVIDIA's dominance didn't come from luck. It came from vision and execution. Which Intel lacked. https://t.co/ygUJZIQWLH (December 20, 2023)

In the above tweet, a key Nvidia VP explains why he thinks “Nvidia’s dominance didn't come from luck.” Rather, he insists, “It came from vision and execution. Which Intel lacked.”

Catanzaro has worked at both companies, including spending time working on Larrabee applications in 2007, so he has insight into management decisions. However, we must temper his words with the knowledge that he is currently a green team player.

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

-

bit_user:

"He went on to lament Intel giving up the Larrabee project, which could have made Intel just as ‘lucky,’ in his view."

This is such a load of garbage. Xeon Phi didn't get cancelled... at least not until it had a chance to prove that x86 just couldn't match Nvidia in either HPC or AI workloads. And the reason Larrabee, itself, got cancelled was that it just couldn't compete with existing GPUs.

He should blame himself for his decision to use x86 in Larrabee, and not the ISA of their i915 chipset (and later iGPU) graphics.

"Jensen Huang “worked super hard at owning throughput computing, primarily for graphics initially, and then he got extraordinarily lucky,” stated Gelsinger."

What certainly wasn't luck was Nvidia's pivot towards HPC, their investments in CUDA (which, if you don't know, is totally separate from what games and interactive graphics use), and Nvidia's efforts to court and support users of GPU Compute in academia.

On that latter point, Nvidia created an entire academic symposium for stimulating development of GPU Compute, way back in 2009:

https://en.wikipedia.org/wiki/Nvidia_GTC

None of that is "luck". Nvidia might not have guessed that AI would be the GPU Compute application which would take them to the next level, but they made sure they were positioned to benefit from any domains where GPU Compute took off.

P.S. I'm neither an Nvidia fan nor an Intel hater, but this revisionist history just bugs me. Credit (and blame) where it's due, please! -

Makaveli: I'm liking this NV vs. Intel fight; a nice change from both of them vs. AMD, lol.

Let them duke it out in the AI space and maybe we all benefit. -

endocine: Intel has got to stop making stupid comments. "Glued together", "AMD is in the rear view mirror", "Nvidia got lucky". This is just garbage; no one cares what the current CEO thinks about Nvidia. How does that help Intel get out of the mess it dug itself into, for which it has no one else to blame? Is Intel blaming its uncompetitive products on "bad luck", or is the reality that other companies out-engineered them, identified novel markets, and executed?

Intel: show us competitive products that are better. Otherwise, you are just whining about losing, people are laughing at you, and no one is buying your garbage: neither your GPUs, which totally suck, were late, buggy, and non-performant, and have to be priced at fire-sale levels to move, nor your crappy CPUs on old nodes, from consumer to enterprise, that use insane amounts of power. -

waltc3: It's very amusing to hear Gelsinger call both AMD and nVidia "lucky" (not the first time he's said this), with the proviso that the reason Intel found itself non-competitive was because Intel was merely "unlucky." Just more proof that Gelsinger was the wrong man for the job. He can't get the old, dead Intel out of his mind, the monopolistic Intel that paid companies not to ship competitors' products for years. Gelsinger is a relic from those days, and apparently his mind is still there. More's the pity for Intel.

BTW, Larrabee never worked, never did what people thought it would do--which it never could do--so Intel finally cancelled it prior to launching it. Had Larrabee actually launched, it would have been so disappointing compared to the pre-launch speculation and hype by Internet pundits who had never spent a day ray tracing themselves that it would have died quickly, anyway. Intel knew it, and canceled it. It was funny in those days to see the Internet pundits squirm when all that they maintained Larrabee would be died with a whimper. So many people had egg on their faces! I thought it was quite amusing at the time, as I never bought into the Larrabee hype and said so often--the "real-time ray-tracing CPU that never was." Chip design and production execution are not accidents that fall out of the sky...! Gelsinger cannot face talking about the real reasons Intel lost its way--its obsession with being a monopoly instead of a brutally efficient competitor. Lots of that old, rancid corporate culture still lives inside of Intel, obviously. The suits have a hard time letting go of what was, but is no more. "Luck" has nothing to do with it. -

ekio: Well, both are right…

NGreedia would not even sell a tenth of their GPUs to their best friends, the bitcoin farms, if AI hadn't taken off like that.

Intel was managed by incompetent greedy morons with short term vision for the past 15 years and now they almost collapsed.

They are literally being saved by the US government right now while Pat is paying himself tens of millions a year in salary…

I wish they would both learn some humility and work on getting better products rather than better stock prices… because better products make stock prices skyrocket… look at AMD over the past 10 years. -

bit_user:

endocine said: Is Intel blaming its uncompetitive products on "bad luck",

No. Did you read the article? He blamed it on the people who forced him out and killed Larrabee.

waltc3 said: with the proviso that the reason Intel found itself non-competitive was because Intel was merely "unlucky."

He never said Intel was "unlucky".

waltc3 said: Larrabee never worked, never did what people thought it would do--which it never could do--so Intel finally cancelled it prior to launching it. Had Larrabee actually launched, it would have been so disappointing compared to the pre-launch speculation and hype by Internet pundits who had never spent a day ray tracing themselves that it would have died quickly, anyway. Intel knew it, and canceled it.

Wow, we actually agree on something!

: )

waltc3 said: I never bought into the Larrabee hype and said so often--the "real-time ray-tracing CPU that never was."

Intel did indeed make a lot of noise about ray tracing, but Larrabee was (primarily, at least) a traditional raster-oriented GPU. It had hardware Texture engines, for instance. I'm not sure about ROPs. -

atomicWAR:

bit_user said: He should blame himself for his decision to use x86 in Larrabee, and not the ISA of their i915 chipset (and later iGPU) graphics.

You're on point here. The only thing I'd add is that Intel also killed its discrete GPU presence in the late '90s with the i740 by under-supporting it. They could have been light years ahead of where they are had they continued to advance their discrete GPUs instead of pivoting to iGPUs only at that time. Their lack of innovation in the GPU space, and by extension in AI, is purely on them. -

bit_user:

atomicWAR said: Only thing I'd add is Intel has also killed their discrete gpu presence in in the late 90s with the i740 by under supporting it. They could have been light years ahead of where they are had they continued to progress with their discrete gpus instead of pivoting to iGPUs only at that time.

This actually explains what happened to the successor of the i740:

https://vintage3d.org/i752.php

It's a very good site, BTW. -

-Fran-: I remember back in university, I was the only one arguing that Larrabee was not going to succeed, and everyone just hated me for pointing out that the way they were "accelerating" was just glorified emulation. That would not get them very far, especially in scaling out enterprise solutions that actually required the grunt.

I think Pat is now fully delusional. I'm half convinced now. Maybe this is his last stand. "Dead man walking" all over.

Regards.