Nine Ingredients Essential To The Modern PC Experience

What Is The Modern PC Experience?

If you are reading this, PCs are likely an integral part of your life. You may spend so much time with computers that you take them for granted. But what defines a modern PC? What technologies and innovations shape your experience? How has the personal computer changed since its inception?

From expansion slots to the screen, join us as we explore all the things that make PCs what they are today.

The Expansion Bus

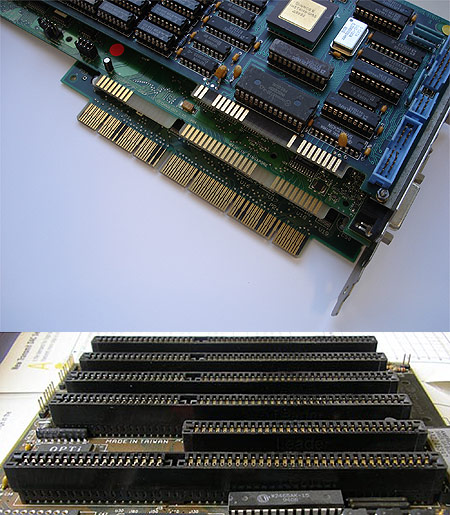

One of the key strengths of the PC is its open architecture and adaptability, historically facilitated by any number of expansion buses that let you add and upgrade certain capabilities through the use of compatible cards.

Old-timers (like most of the folks writing for Tom's) will remember the eight-bit bus that IBM developed in 1981, later known as Industry Standard Architecture (ISA). In 1984, IBM followed up with the superior AT bus. Sixteen bits wide and operating at up to 8 MHz, it had a maximum theoretical bandwidth of just 8 MB/s.

The next major step in the evolution of the expansion bus came in 1993 with the Peripheral Component Interconnect (PCI) bus. Thirty-two bits wide and clocked at 33 MHz, PCI could move up to 132 MB/s, and it was the first bus conceptualized with plug-and-play in mind.

In 2004, we were introduced to PCI Express (PCIe). We've now reached the third generation of this standard, and PCIe 3.0 is capable of 8 GT/s. Just one lane of third-gen PCIe theoretically moves about 125 times more data per second than the 16-bit AT bus. Implemented as a 16-lane slot, PCI Express can move up to 16 GB/s in each direction.
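The figures above can be reproduced with some back-of-the-envelope arithmetic. The following is a minimal sketch, not an authoritative model of any bus. It assumes the AT bus needs two clock cycles per transfer (which is one way to arrive at 8 MB/s from a 16-bit, 8 MHz bus; the article does not spell this out) and that PCIe 3.0 uses 128b/130b encoding. The function names are made up for illustration.

```python
def parallel_bus_mb_s(width_bits, clock_mhz, cycles_per_transfer=1):
    """Peak MB/s of a parallel bus: bytes per transfer times transfers per second."""
    return (width_bits / 8) * clock_mhz / cycles_per_transfer


def pcie3_lane_mb_s(gt_per_s=8.0, payload_bits=128, encoded_bits=130):
    """Peak MB/s, per direction, of one PCIe 3.0 lane.

    Assumes 128b/130b line encoding, so 128 of every 130 bits carry data.
    """
    return gt_per_s * 1000 * (payload_bits / encoded_bits) / 8


# Assumption: two clock cycles per transfer on the 16-bit AT bus.
at_bus = parallel_bus_mb_s(16, 8, cycles_per_transfer=2)   # 8.0 MB/s
pci = parallel_bus_mb_s(32, 33)                            # 132.0 MB/s
lane = pcie3_lane_mb_s()                                   # a little under 1000 MB/s
x16_gb_s = 16 * lane / 1000                                # a little under 16 GB/s

print(f"AT bus:        {at_bus:8.1f} MB/s")
print(f"PCI:           {pci:8.1f} MB/s")
print(f"PCIe 3.0 x1:   {lane:8.1f} MB/s ({lane / at_bus:.0f}x the AT bus)")
print(f"PCIe 3.0 x16:  {x16_gb_s:8.2f} GB/s per direction")
```

With these assumptions, a single third-gen lane lands within a few percent of the "about 125 times" figure, and a 16-lane slot comes in just shy of 16 GB/s each way once encoding overhead is counted.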

Early PC motherboards offered very little in the way of integration, so all input and output relied on appropriate add-in cards. Over time, the industry built hard drive, USB, audio, and network controllers into chipsets and onto components small enough that motherboards could offer all of that connectivity on their own. As lithography evolves and more features find themselves on the processor or closer to it, enthusiasts typically drop in fewer upgrades. But technologies like SLI and CrossFire demonstrate that it's still possible to populate every slot of a modern PC, emphasizing the continued importance of expansion.

Networking And The World Wide Web

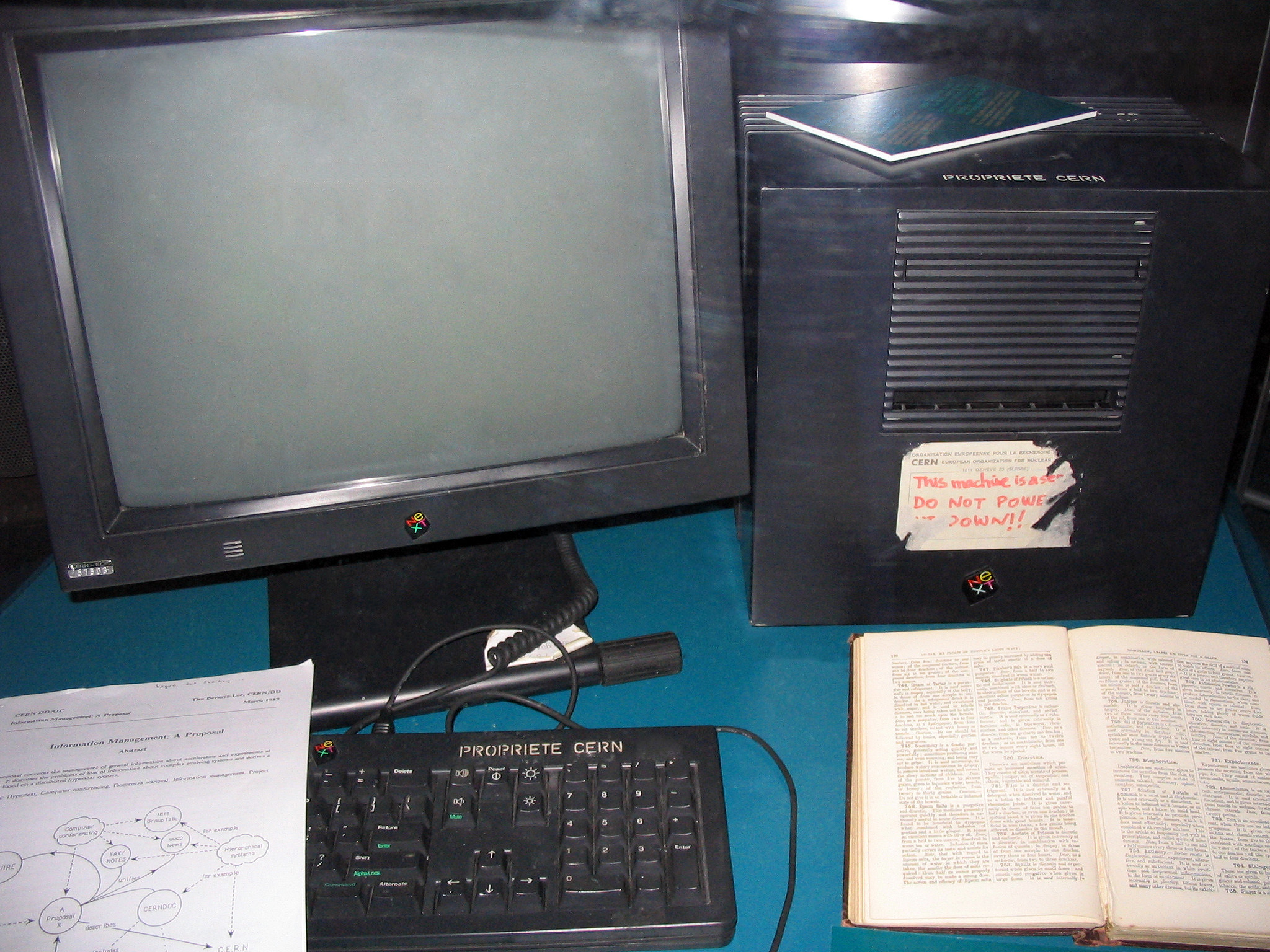

The Internet is a public, worldwide system of networked computers. Naturally, the computers themselves aren't what drive people to use this network, though. We use the Internet because of the information it hosts. That content is available on webpages, and webpages rely on a system called the World Wide Web.

The Web began in 1990, when Sir Tim Berners-Lee and colleague Robert Cailliau put together a proposal for a project titled "WorldWideWeb". They described it as a web of hypertext documents viewable by browsers over a client-server architecture, and they had completed the infrastructure by the end of that year. The Web server (the world's first of which is pictured to the left), Web browser, and webpages that these pioneers created followed the same structure we use to access content on the Internet today.

Everything began to take off in 1993 with the release of a browser called Mosaic. As the first widely used browser capable of displaying images inline with text, Mosaic is often credited with popularizing the World Wide Web by making media-rich pages possible.

The Web has been, and continues to be, a massive driving force of the PC experience. Banking, games, mail, movies, music, information, shopping, and socializing are all part of today’s online experience, and the Web increasingly has a huge impact on the way we use computers.

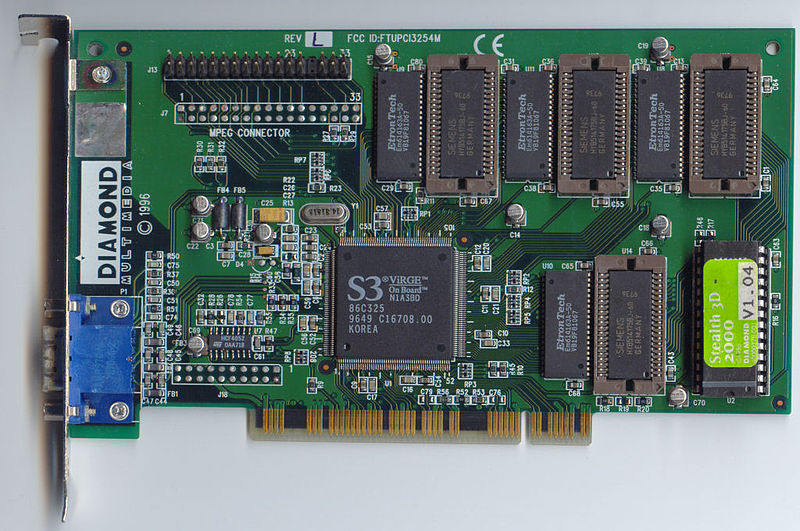

Hardware-Accelerated 3D Graphics

As the PC became commonplace in the early 1990s and games grew increasingly complex, the limitations of software-based real-time 3D graphics became apparent. The first add-in 3D accelerator cards for the PC arrived in the mid-'90s. S3's Virge, ATI's Rage, Matrox's Mystique, Rendition's Verite, and 3Dfx's Voodoo were among the first consumer-oriented offerings. Of these, the Verite and Voodoo were often regarded as the most effective.

Nvidia introduced its GeForce in late 1999, and coined the term Graphics Processing Unit (GPU) to describe the complex graphics chipset with support for accelerated transform and lighting. ATI debuted its Radeon line the following year, and the brand continues to live on under AMD today. Intel entered the fray with its i740 chipset in 1998, and although the company no longer offers discrete cards, its integrated Intel HD Graphics engines continue to dominate the graphics market share numbers.

With most of the other competitors long gone, AMD, Nvidia, and Intel are responsible for the graphics chipset in almost every PC sold today. Due to the broad availability of 3D graphics processing, software developers count on a majority of PCs being able to handle 3D in hardware, and graphics realism continues improving. Even today's smartphones are capable of impressive graphics performance, and this continues to shape what we expect from modern software.

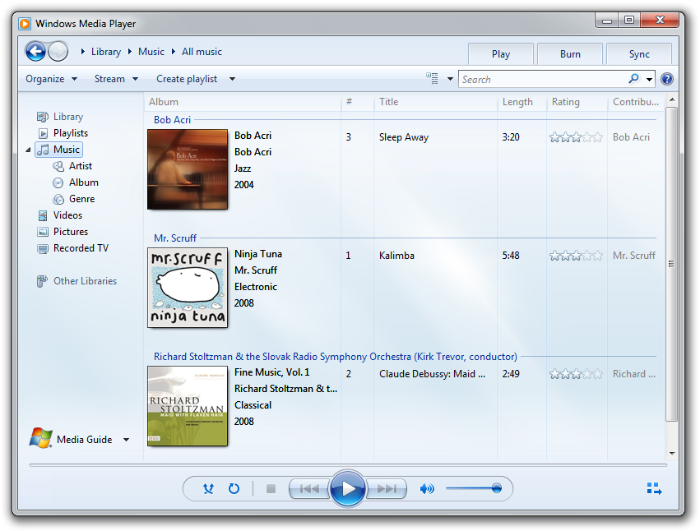

Entertainment And Digital Media

The MPEG-1 Audio Layer III standard, better known as MP3, was first released in 1993, making it possible to compress digital audio. By 1994, the format made it easy to shrink large audio files to a size that was practical to transfer over the Internet. Soon after, in 1995, the DVD format surfaced. It didn't take long for folks to figure out that their PCs could back up video discs, too.

File sharing services like Napster rose and fell. The Pirate Bay is fighting for its life, but torrents continue to be popular. YouTube arrived, and shows no sign of slowing down. These developments have obviously had a huge impact on the direction of media consumption on our PCs. Although they were once all about business, lots of compute horsepower and ample throughput make our machines capable entertainment-oriented hubs now, too.

The growth of entertainment on the PC is now epic. YouTube reports more than two billion views per day, and more video is uploaded in 60 days than the three major U.S. television networks have produced in the last 60 years!

Even the lowest-end graphics chipsets support hardware-accelerated video decoding, offloading this workload from the host processor, and a majority of integrated audio codecs are capable of high-quality output and even surround sound. Media consumption is now a fundamental part of the PC experience.

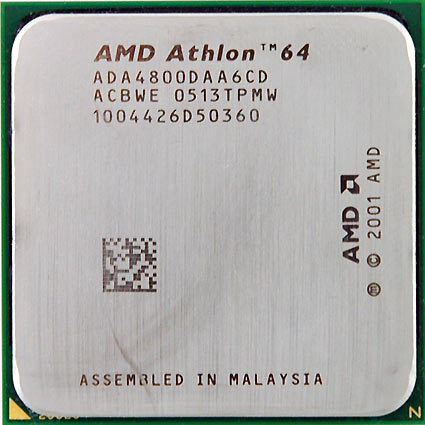

Multi-Core Processors

The first desktop-oriented dual-core CPUs surfaced toward the end of 2005. Expensive, hot, and generally underutilized by most of the software available back then, multi-core processors were fairly niche until ISVs started optimizing their applications for threading. It was much more common to see server and workstation software able to leverage machines with two or more CPUs and dual-core chips.

Today, support is much broader, and, for the most part, applications best-suited to benefit from parallelism do. AMD's flagship desktop chips feature eight integer clusters across four modules, while Intel's top-end parts sport six physical cores able to address 12 threads through Hyper-Threading.
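Why do only some applications benefit from more cores? One common way to reason about it is Amdahl's law, which the article does not mention but which fits the point being made: the speedup from extra cores is capped by whatever portion of the work has to run serially. Here is a minimal sketch; the parallel fractions are illustrative assumptions, not measurements of any real application.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speedup under Amdahl's law.

    parallel_fraction: share of the runtime that can be spread across cores (0.0 to 1.0).
    cores: number of cores (or hardware threads) working on the parallel part.
    """
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workloads: half, 90%, and 99% of the work can run in parallel.
for fraction in (0.5, 0.9, 0.99):
    row = ", ".join(
        f"{cores} cores: {amdahl_speedup(fraction, cores):.2f}x"
        for cores in (2, 6, 8, 12)
    )
    print(f"{fraction:.0%} parallel -> {row}")
```

Under this model, a task that is only half parallel can never double in speed no matter how many cores you add, while a highly parallel one keeps scaling. That is roughly why well-threaded workloads gain the most from high core counts.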

In fact, of the 76 desktop processors available on Newegg at the time of writing, only two are single-core models: the Sempron 145 and Celeron G440; both are previous-generation budget chips. The days of single-core processors are indeed over. Even premium smartphones boast multiple cores. That's the status quo, and an integral part of scaling up performance in a modern computing device.

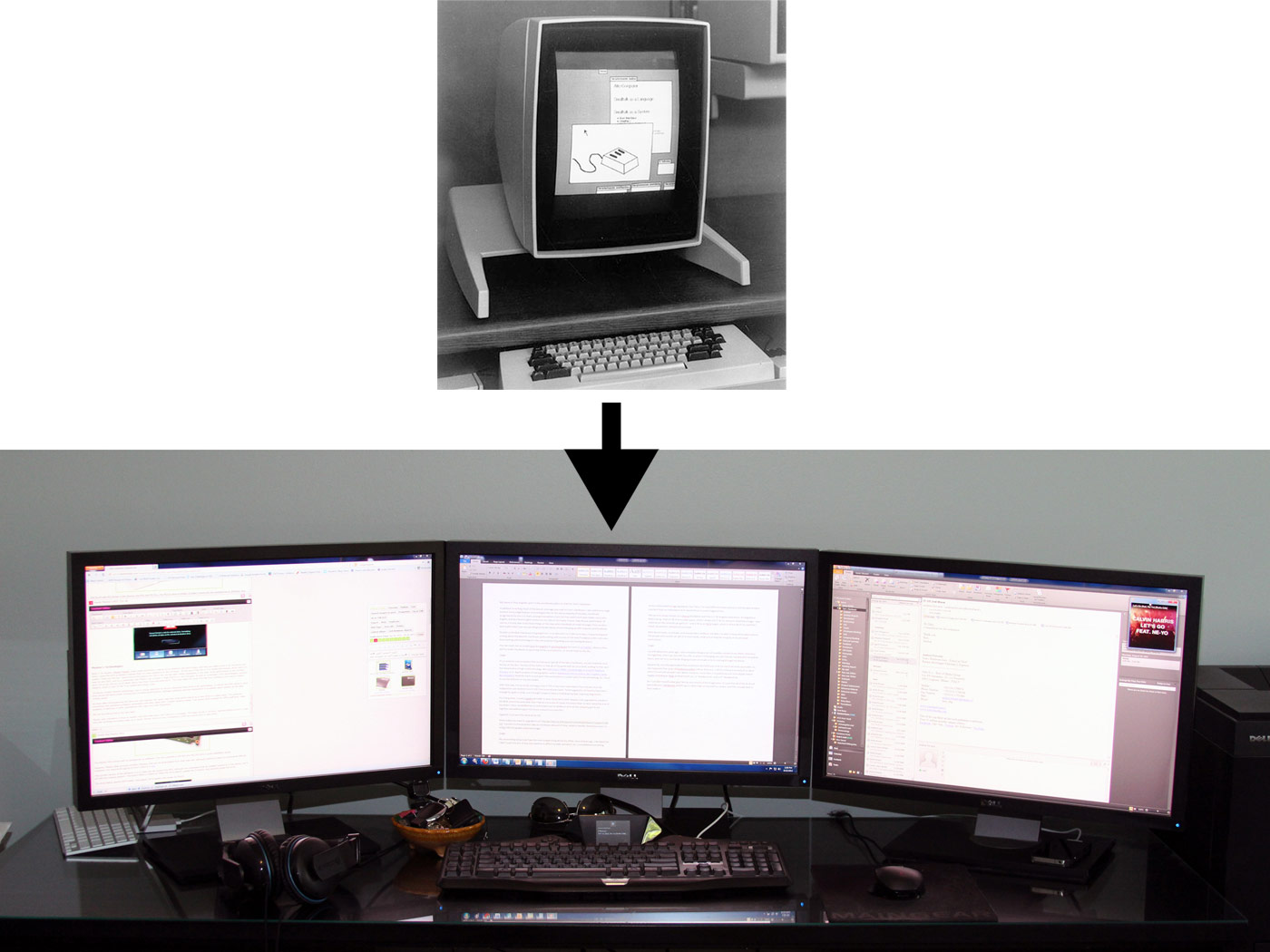

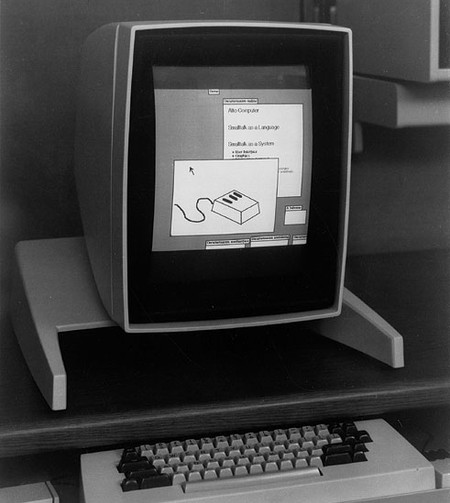

The Mouse-Driven Desktop Paradigm

In 1964, Doug Engelbart made the first computer mouse to use with a graphical user interface. Nearly a decade later, in 1973, a particularly innovative team at Xerox's Palo Alto Research Center (PARC) R&D facility took that combination a step further. Their prototype PC, dubbed the Alto, had a mouse-driven GUI complete with clickable icons, windows, folders, and the familiar desktop metaphor. Xerox's lack of vision doomed the Alto to obscurity, but the potential of its innovation didn’t go unnoticed. The basic principles behind the Alto were later reproduced by Steve Jobs with the Apple Macintosh, and Bill Gates with Microsoft Windows.

There have been no radical changes to this paradigm in the past 40 years. Icons, windows, folders, and the desktop remain fundamental components of every major operating system today. Likewise, the mouse remains the PC’s primary human interface device. And though they replace the mouse with a touchscreen, even mobile devices running Apple's iOS or Google's Android rely on icons.

The arrival of Windows 8 presents us with the first major departure from the input scheme created for the Alto four decades ago. Much of the negativity surrounding Microsoft's Windows 8 UI is tied to the company's touch-oriented design, and PC users' general discomfort with the outcome. It'll be interesting to see if enthusiasts warm to Windows 8 over time. Even if they do, though, the traditional mouse/keyboard combo will remain a fundamental part of the PC experience for years to come.

The Universal Serial Bus (USB)

There was a time when dissimilar interfaces were the norm. Do you remember when printers plugged in to the parallel port, mice hooked up to the serial port, joysticks employed a game port, and keyboards attached to a DIN connector? To top all of that off, nothing was plug-and-play. The PC peripheral input situation used to be laughable.

The Universal Serial Bus was designed to change all that. USB 1.0 emerged in 1996 with a maximum throughput of 12 Mb/s. Despite its obvious advantages, the interface didn't catch on right away. Compatible devices had to be created, operating systems needed to integrate support, and platforms required the requisite connectors. You may recall that a USB device caused a blue screen of death during Microsoft's presentation of Windows 98. Today, joysticks, printers, mice, keyboards, flash drives, cameras, and pretty much any other peripheral you can imagine are found with USB support.

USB 2.0 was released in 2000 offering up to 480 Mb/s of peak bandwidth, and the third-gen standard increased that to 5 Gb/s in 2008. We only really started to see broader adoption of USB 3.0 in 2012, but we're seeing more high-speed devices supporting it, particularly in the storage space. The convenience and ubiquity of USB make it an important ingredient in modern PCs.
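To put those peak rates in perspective, here is a rough sketch of how long a file would take to move at each generation's signaling rate. The 4 GB file size is an arbitrary example, and the calculation ignores protocol overhead and device limits, so real-world transfers will be slower than these best-case numbers.

```python
# Peak signaling rates quoted above, in megabits per second.
USB_PEAK_MBIT_S = {
    "USB 1.0": 12,
    "USB 2.0": 480,
    "USB 3.0": 5000,
}


def best_case_seconds(file_mb, rate_mbit_s):
    """Best-case transfer time: file size in megabits divided by the peak rate."""
    return file_mb * 8 / rate_mbit_s


FILE_MB = 4000  # Hypothetical 4 GB file (decimal megabytes).

for name, rate in USB_PEAK_MBIT_S.items():
    seconds = best_case_seconds(FILE_MB, rate)
    print(f"{name}: {seconds:8.1f} s ({seconds / 60:.1f} min)")
```

Even as an upper bound, the gap is striking: what would tie up a USB 1.0 port for the better part of an hour takes about a minute on USB 2.0 and only seconds on USB 3.0.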

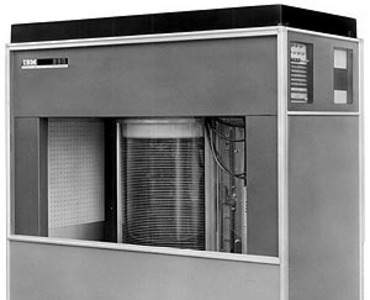

The Hard Drive

IBM pioneered the hard drive, and released the Model 350 disk storage unit in 1956. Equipped with 50 separate 24" platters, its 5 MB capacity was astounding for the time. Due to the high costs of manufacturing and maintenance, the technology was limited to data centers until the 1980s when it was offered as an option to consumers (albeit a very expensive one). By the time the '80s were over, reductions in cost made hard drives standard equipment in most PCs.

More than 20 years later, we're looking at 4 TB drives able to sustain triple-digit MB/s transfer rates. Although hard drives are outperformed dramatically by SSDs, their low cost per gigabyte practically guarantees their importance as repositories for user data moving forward. For those of us who've had to boot an operating system, load a program, and access files through separate floppy disks, the hard drive definitely earned its role as a fundamental component of the modern PC experience.

LCDs

Before liquid crystal display technology became popular, cathode ray tube-based monitors were standard fare on desktop PCs. They still retain a number of advantages, including no native resolution to worry about, great contrast levels, strong color accuracy, and fast response times. But they're bulky and they suffer from a wide range of artifacts.

A number of factors contributed to the CRT's dramatic decline, most of them related to LCDs: flat-screen displays are thinner and more desktop-friendly, they're more efficient and easier to dispose of, and, even with a 60 Hz refresh, they're easier to look at for long periods.

Of course, the implications of flat-screen panels are even more pronounced on mobility. It goes without saying that Ultrabooks, tablets, and smartphones all owe their existence to continued innovation in the display industry. So, to say the LCD earned its place on our list would be an understatement.

Don Woligroski is a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.

demonhorde665 I thought the article was about fundamental components of PCs, not wants. MP3 is NOT fundamental to the modern PC experience. In fact, most people I know don't bother with MP3 on a PC, and rely on MP3 players or cell phone players. Also, like esrever said, what about modern OSes, namely Windows? Without Windows, it's plausible that the PC would never have caught on as a mainstream part of life.

ta152h The AT released in 1984 was the 139, and ran at 6 MHz, not 8 MHz. The 339 came out later, and ran at 8 MHz.

The next release after that was Microchannel, not PCI, and came out in 1987. The most popular computers in the world used it; it wasn't a fringe technology. It ran, originally, at 10 MHz with a 32-bit bus, and allowed for bus masters, and pretty much everything a modern bus would.

skyline4727 I'm surprised they didn't include CD or other removable media like floppy disks. How did you get programs on a computer without those?

InvalidError About LCDs... "even with a 60 Hz refresh, they're easier to look at for long periods."

The reason LCDs are easier to look at has nothing to do with refresh rate. It has to do with how CRTs have to use phosphors that fade fast enough to clear the screen between refreshes, since there is no other way of clearing it. LCD pixels, by contrast, are continuously lit by the backlight; the active matrix can hold an image for over a second between refreshes without significant fading and can be adjusted at will either way (brighter/darker), with no need to wait for fading, unlike CRTs, where the electron gun can only make pixels brighter.

Active matrix LCDs can technically operate at close to 0Hz refresh and mobile LCD manufacturers have recently announced new LCDs supporting partial screen updates to avoid wasting power refreshing static parts of the screen all the time.

Refresh may become an issue again with OLED which behaves a lot more like phosphor: needs a refresh rate high enough to avoid noticeable fading. Unlike phosphors though, OLED pixels can actually be turned off on demand, so longer fade times are not as much of an issue beyond using more power to turn pixels on/off.

ojas "The arrival of Windows 8 presents us with the first major departure from the input scheme created for the Alto four decades ago."

And it's half-assed, not to mention that it's not even designed with PCs in mind.