Raspberry Pi 5 successfully accelerates LLMs using an eGPU and Vulkan

You can now use Radeon RX 400 to 7000-series cards to play Doom Eternal and accelerate AI on the Raspberry Pi

A Raspberry Pi 5 hooked up to an AMD Radeon-powered eGPU has been demonstrated using the graphics hardware to accelerate running a Large Language Model (LLM). Of course, it's Pi wizard Jeff Geerling again, and in the video embedded below, he talks us through his experience of leveraging the Vulkan API support to enjoy GPU-accelerated local AI on the Raspberry Pi 5.

In our last progress report on the Raspberry Pi 5 connected to an eGPU, we highlighted the modern AAA 4K gaming possibilities of this unlikely pairing. Games like Doom Eternal, Crysis Remastered, Red Dead Redemption 2, and Forza Horizon 4 were demoed running at 4K on our favorite $50 SBC. With most struggling to stay above roughly 25fps, though, whether you would actually enjoy playing these titles is another question.

Last time, Geerling ended his fun and informative video with an update on the Pi 5’s LLM support. He noted that he hadn’t managed to GPU-accelerate any LLMs on the Pi 5, but smaller models could run on the CPU, in the Pi’s RAM. Moreover, with AMD basically ruling out ROCm support on Arm, prospects didn’t look good.

Thankfully, in the world of enthusiast-driven tech, things can change quickly. In his latest video, Geerling reveals the answer to GPU-accelerated LLMs on the Pi 5 is the Vulkan API (with an experimental patch). Geerling notes that Vulkan can even outperform AMD's ROCm on systems that offer a choice between the two, so it is by no means merely a poor man’s choice.

At around two minutes into the video, Geerling walks us through his hardware setup. The most esoteric parts here are the two boards used to hook up the GPU to the Pi. He used an adaptor to convert the Pi’s PCIe FFC connector to an M.2 slot. Into the M.2 slot, he plugged an M.2 to OCuLink adaptor, with a cable to a GPU OCuLink riser. In the video, he uses an RX 6700 XT again (you’ll need a spare PC PSU too, among several other bits and pieces).

Software setup is currently a bit more involved, requiring the user to compile their own Linux kernel, collect together a handful of drivers and patches, and more. More guidance is available via Geerling's blog.

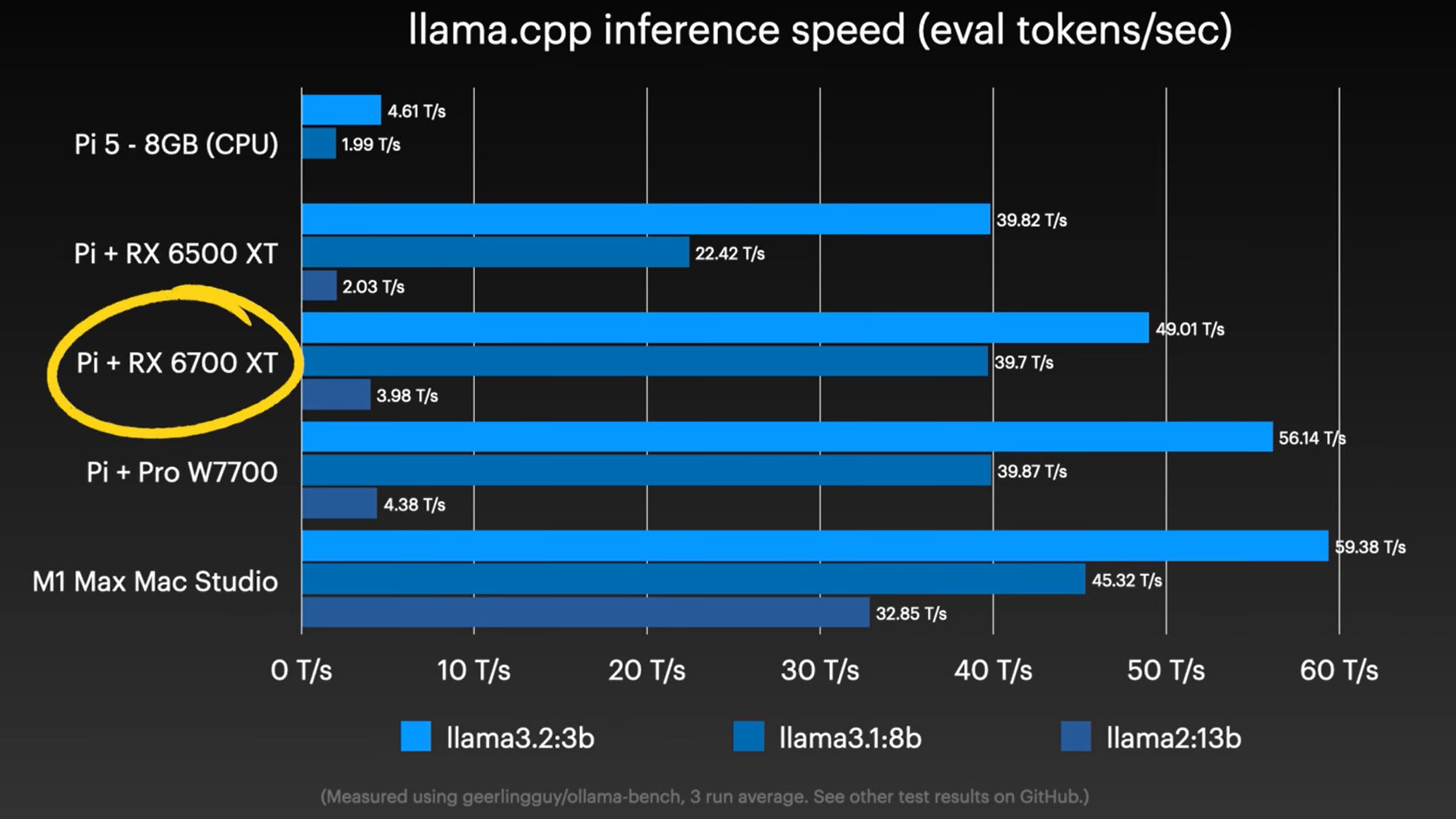

Casting more light onto the benefits of his hardware and software wrangling, the Pi enthusiast and TechTuber provides some performance figures and comparisons.


It is interesting to hear Geerling propose the Pi plus eGPU as an alternative that is almost as fast and efficient as an M1 Max Mac Studio (64GB). He also highlighted that the cost of the whole caboodle is about $700 new, but a lot cheaper if you already have some of the bits and pieces (especially for those with a spare old GPU).

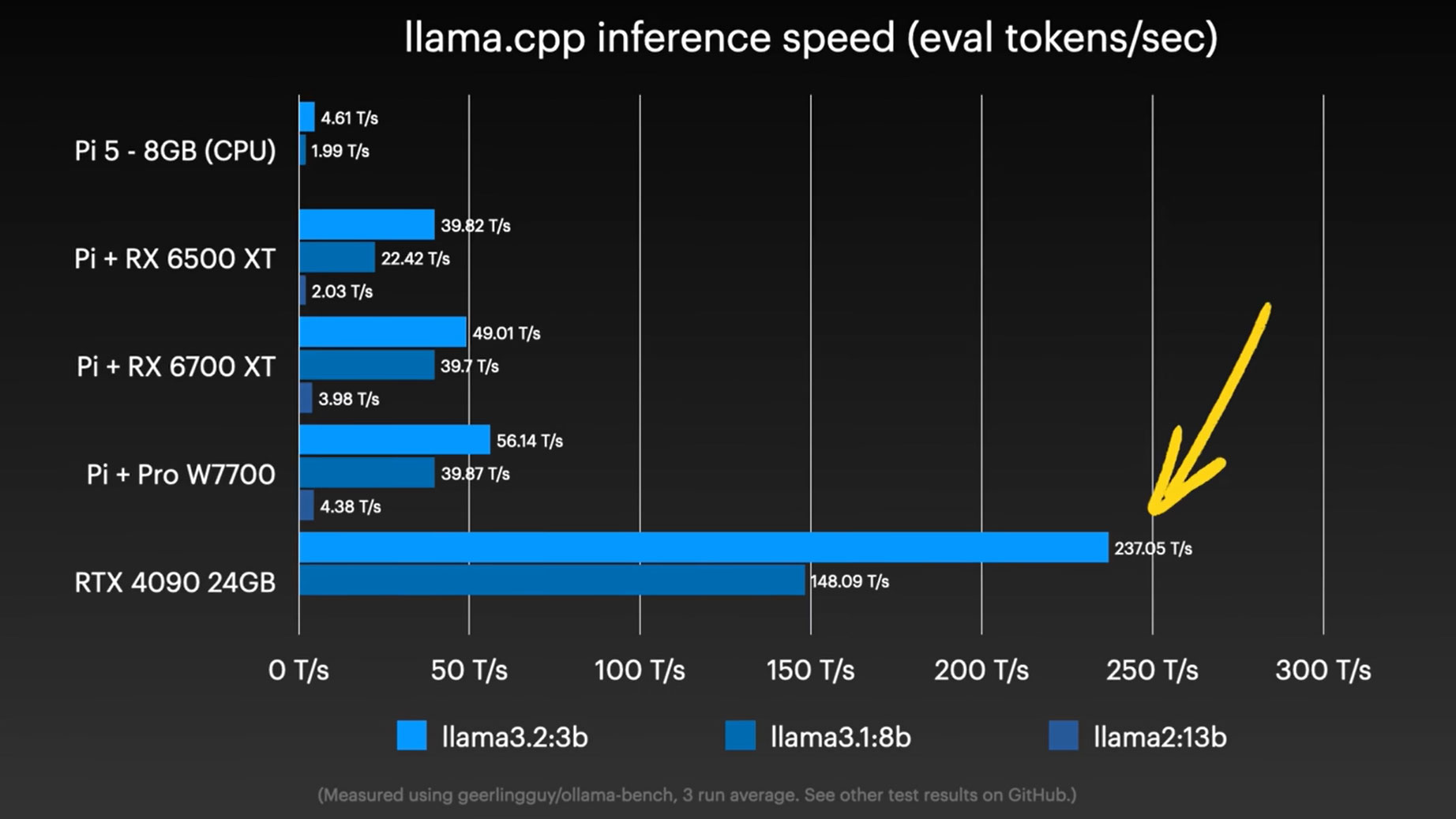

Adding the RTX 4090 benchmark to the mix (second slide) shows how much LLM performance a powerful modern PC can muster. That’s great if you want a 600W system generating hundreds of tokens per second (T/s), but for offline AI at home, 40-60 T/s should be plenty. Moreover, whoever pays your energy bill might be pleased with the ~12W idle power consumption of this efficient Pi-based (Pi 5 plus RX 6700 XT) system.
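To put those figures in context, here is a minimal Python sketch of the back-of-the-envelope arithmetic. The ~12W idle draw and the 40-60 T/s range come from the article; the electricity price and the 500-token response length are purely illustrative assumptions, not figures from Geerling's testing, and the helper names are hypothetical.

```python
def annual_kwh(watts: float, hours_per_day: float = 24.0) -> float:
    """Energy used in one year by a device drawing `watts` continuously."""
    return watts * hours_per_day * 365 / 1000


def seconds_for_response(tokens: int, tokens_per_second: float) -> float:
    """Time needed to generate `tokens` at a steady generation rate."""
    return tokens / tokens_per_second


# Figure quoted in the article.
PI_IDLE_WATTS = 12.0

# Assumptions for illustration only; substitute your own values.
ASSUMED_PRICE_PER_KWH = 0.30    # hypothetical electricity price, in dollars
ASSUMED_RESPONSE_TOKENS = 500   # hypothetical length of one LLM answer

idle_kwh = annual_kwh(PI_IDLE_WATTS)
print(f"Idle energy per year: {idle_kwh:.1f} kWh")
print(f"Idle cost per year:   ${idle_kwh * ASSUMED_PRICE_PER_KWH:.2f}")

# The 40-60 T/s range the article suggests is plenty for home use.
for rate in (40, 60):
    wait = seconds_for_response(ASSUMED_RESPONSE_TOKENS, rate)
    print(f"{ASSUMED_RESPONSE_TOKENS} tokens at {rate} T/s: {wait:.1f} s")
```

Under these assumptions, a system left idling all year at 12W uses roughly 105 kWh, and a 500-token answer arrives in about 8 to 13 seconds. Power draw while the GPU is actually generating tokens will be higher than the idle figure, so treat this as a lower bound on running costs.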

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


bit_user

The article said: Adding the RTX 4090 benchmark to the mix (second slide) shows how much LLM performance a powerful modern PC can muster.

I wonder why he doesn't show how fast the same x86-powered PC would run with any of the graphics cards he tested on the Raspberry Pi?

geerlingguy

bit_user said: I wonder why he doesn't show how fast the same x86-powered PC would run with any of the graphics cards he tested on the Raspberry Pi?

Time. I just haven't had time to install and configure each of the cards on the PC, then get Vulkan support set up and running (ROCm still isn't supported for most of these cards even on x86).

They may perform very slightly better, but probably not much, at least for any models that fit within VRAM.

bit_user

geerlingguy said: Time. I just haven't had time to install and configure each of the cards on the PC, then get Vulkan support set up and running (ROCm still isn't supported for most of these cards even on x86).

I understand. Thanks for replying!

geerlingguy said: They may perform very slightly better, but probably not much, at least for any models that fit within VRAM.

Good to know. If much of the time were spent loading the model onto the GPU or on host-based preprocessing, it would be interesting and enlightening. However, if it's overwhelmingly GPU-dominated, then I'd agree it's probably rather pointless to do the comparison.

As always, thanks for stopping by and keep up the great work!

: )

P.S. did we ever find out why Raspberry Pi didn't officially spec the Pi 5's PCIe as 3.0?