Is 80 PLUS Broken? How To Make It A More Trustworthy Certification

80 PLUS is hands-down the most popular efficiency certification program among PSU manufacturers nowadays. In today's article, we're digging into the organization's flaws, which are critical in some areas, and how we can improve upon them.

Our Approach For More Accurate Efficiency Measurements

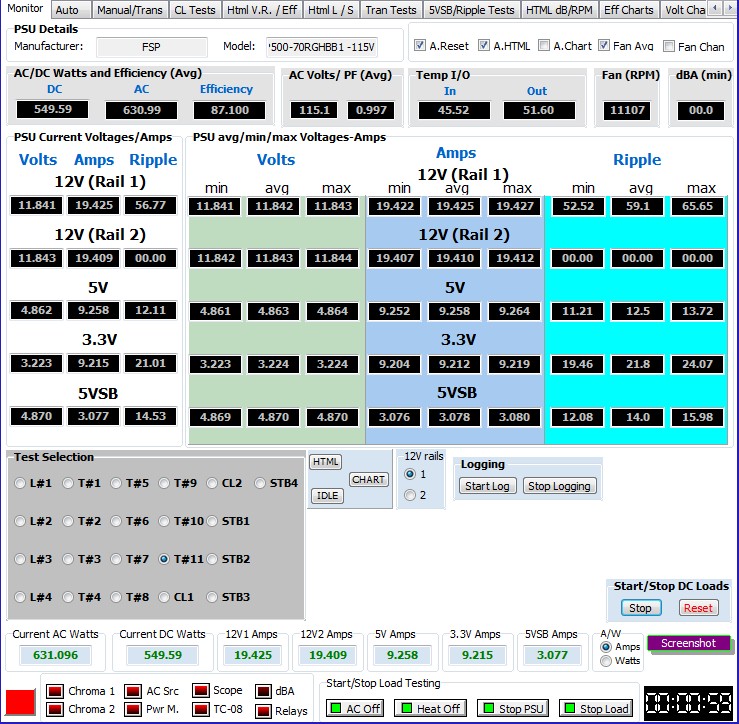

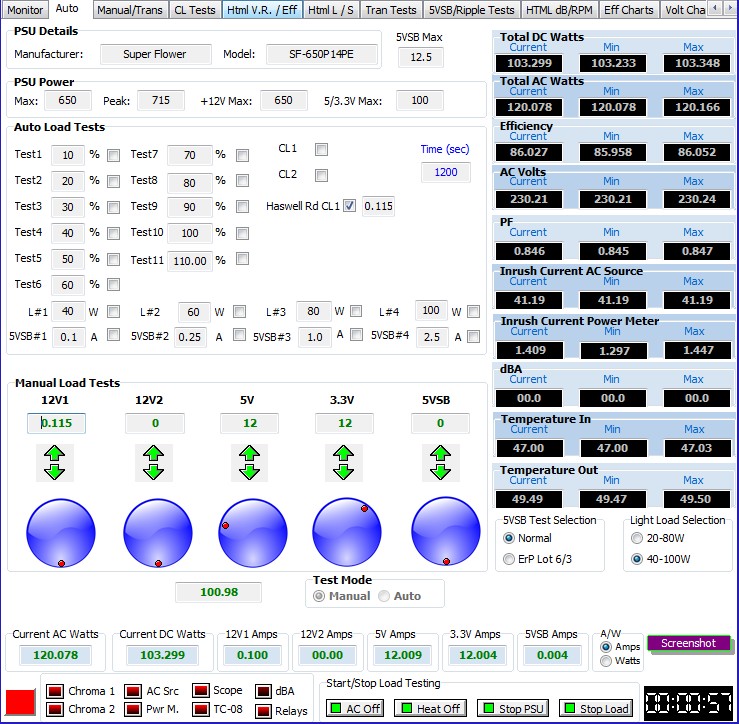

To address our issues with the 80 PLUS organization's methodology, we use a custom-made application that gives us full control and allows real-time monitoring of our equipment. This application keeps detailed logs, including efficiency readings, and we use only the averaged readings to provide the highest possible accuracy. We call this app Faganas ATE, named after the first custom-made loader that was compatible with it. The name derives from the Greek word "φαγάνας", which describes someone who eats a lot. In this particular case, Faganas' lunch is PSUs.

The main problem with most labs is that they don’t have a suitable method for monitoring and extracting results from all of their equipment at the same time. In our setup, we use nine different load modules. Throughout a test session, we have to constantly monitor their voltage, amperage, and/or wattage readings. This is simply not possible without using Faganas ATE.
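The section doesn't show how Faganas ATE turns those readings into an efficiency figure. As a minimal sketch, assuming each polling cycle yields one AC input power reading plus one DC output power reading per load module (the `Sample` structure and `average_efficiency` function below are hypothetical, not Faganas' actual code), averaging over a single load level could look like this:

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Sample:
    """One polling cycle (hypothetical structure, not Faganas' real log format)."""
    ac_input_w: float          # power meter reading at the PSU's AC input
    dc_outputs_w: list[float]  # one reading per electronic load module


def average_efficiency(samples: list[Sample]) -> float:
    """Efficiency in percent from power readings averaged over one load level."""
    avg_in = mean(s.ac_input_w for s in samples)
    avg_out = mean(sum(s.dc_outputs_w) for s in samples)
    return 100.0 * avg_out / avg_in


# Two polling cycles with nine load modules each (made-up numbers).
samples = [
    Sample(ac_input_w=1000.0, dc_outputs_w=[100.0] * 9),
    Sample(ac_input_w=1000.0, dc_outputs_w=[100.0] * 8 + [120.0]),
]
print(average_efficiency(samples))  # 91.0
```

This sketch averages input and output power first and then divides, rather than averaging per-sample efficiencies; that ordering is an assumption, and the two approaches can differ slightly when readings fluctuate.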

As if monitoring nine load testers in real time and analyzing the massive amount of data they spit out, while also monitoring the PSU's performance, weren't hard enough, applying a huge number of loads in sequence puts a lot of extra weight on the lab operator's shoulders. To apply 1600 different load patterns to any PSU, we designed a simple but effective algorithm, implemented in Delphi. This language was popular back in 2010, when we began developing Faganas. If we started today, we would probably use C# because of the top-notch Visual Studio IDE and better support.
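The Delphi algorithm itself isn't published here. The following Python sketch only illustrates one plausible way to build a cross-load pattern list: a full grid over the three rails, optionally skipping combinations above a total-power cap. The function names, rail ranges, and step counts are hypothetical, chosen so the grid happens to contain 1600 patterns (16 × 10 × 10); they are not the article's actual settings.

```python
from __future__ import annotations

from itertools import product


def rail_levels(min_w: float, max_w: float, steps: int) -> list[float]:
    """Evenly spaced load levels from min_w to max_w, inclusive."""
    if steps < 2:
        raise ValueError("need at least two levels per rail")
    step_w = (max_w - min_w) / (steps - 1)
    return [min_w + i * step_w for i in range(steps)]


def cross_load_patterns(
    levels: dict[str, list[float]], max_total_w: float
) -> list[dict[str, float]]:
    """Every combination of per-rail loads whose sum stays within max_total_w."""
    rails = list(levels)
    patterns = []
    for combo in product(*(levels[rail] for rail in rails)):
        if sum(combo) <= max_total_w:
            patterns.append(dict(zip(rails, combo)))
    return patterns


# Hypothetical settings: 16 levels on +12V, 10 each on 5V and 3.3V.
levels = {
    "+12V": rail_levels(0.0, 960.0, 16),
    "5V": rail_levels(0.0, 90.0, 10),
    "3.3V": rail_levels(0.0, 72.0, 10),
}
patterns = cross_load_patterns(levels, max_total_w=float("inf"))
print(len(patterns))  # 1600
```

In a real test, each pattern would then be applied for a fixed period while the loads and the power meter are logged, and the PSU's rated per-rail and combined limits would take the place of the unlimited cap used here.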

We consider only the +12V, 5V, and 3.3V rails; if we also included 5VSB, the test would last for many days. We set the minimum load for each rail (usually 0W for modern PSUs with DC-DC converters), along with a load step that we try to keep as low as possible. The last factor, which is extremely important, is how long each load level is applied, since even the slightest change significantly affects how long the complete test suite runs. For example, if we run a total of 1600 load combinations for 10 seconds each, the total duration is 16,000 seconds, or 4:26:40 hours. Adding only two seconds per load level (12 seconds each) raises that to 19,200 seconds, or 5:20:00 hours. That's a 20% increase from just a two-second difference in the load-apply period. As you can imagine, we have to be extra careful with this setting to balance high accuracy against a reasonable test duration. If electricity bills, the health of our equipment, and time weren't big issues, we'd run the cross-load tests for a couple of days to torture-test the PSUs at the same time.
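The duration arithmetic above is easy to check. This small Python sketch assumes, as the example does, that suite time is simply the number of load combinations multiplied by the load-apply period, ignoring any switching or settling overhead between levels (the helper names are hypothetical):

```python
def suite_seconds(patterns: int, seconds_per_level: int) -> int:
    """Total test time, ignoring overhead between load levels."""
    return patterns * seconds_per_level


def format_hms(total_seconds: int) -> str:
    """Format seconds as H:MM:SS."""
    hours, rem = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rem, 60)
    return f"{hours}:{minutes:02d}:{seconds:02d}"


base = suite_seconds(1600, 10)    # 16,000 s
longer = suite_seconds(1600, 12)  # 19,200 s
print(format_hms(base), format_hms(longer), f"{(longer - base) / base:.0%}")
# prints: 4:26:40 5:20:00 20%
```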


Aris Mpitziopoulos is a contributing editor at Tom's Hardware, covering PSUs.


loki1944 I could not possibly care less how efficient a PSU is; what I care about is how reliable it is.

Sakkura I think you're being unreasonable when it comes to how many load levels to test. A review site like Tom's only looks at a handful of PSUs every year. Ecova runs the 80 Plus test on the majority of PSUs on the market. That necessitates simplified testing.

It could still be updated/improved, but it's never going to be as in-depth as the very few reviews a site like Tom's does.

waltsmith I can't agree. Until 80 PLUS became common, blue screen errors due to dirty-ass power being delivered to components were the norm. Even so-called premium name-brand PSUs suffered from this problem. Diagnosing a malfunctioning computer often involved trying up to three or four PSUs to see if that fixed the problem before even looking for anything else wrong. People who have been into computer hardware for a long time will know exactly what I'm talking about. We've come a long way, but progress is what it's all about. I applaud this article!

Chettone At least it's something. Those that don't even have 80 PLUS can fry your PC.

Personally, I go for trusted manufacturers (based on user and tech reviews). Seasonic, for example, gives something like a 5-year warranty, and that says a lot about quality.

laststop311 EVGA makes a really good PSU, the G1. For 80-90 dollars you get a 650-watt G1 with a 10-year warranty. It's nice to see a company truly standing behind a product. And it's 80+ Gold, which is more than good enough.

chumly @aris Why don't you send emails out to johnnyguru, guru3d, techpowerup, realhardtechx, etc., and create a standard you guys can all agree on? It's just a matter of doing it. All of you are doing independent testing anyway. I don't think it will hurt your time budget to send a few emails and try to get some people on board. Hell, you might make some money in the long run. Standardized testing methodology for computer hardware: set minimums for what should be necessary for proper operation and for what is considered a failure, then start to force the hardware companies to conform. You have a huge, reputable website behind you; you can accomplish whatever you want. I'm interested in this as well, as are probably a lot of people.

PRabahy What would it take for you guys to start a "Tom's Hardware Certified" division? I would pay extra for a power supply that had that logo and that I knew had passed the list of tests you mentioned in this article.

I It's almost as though you are inventing things we don't need or care about. Ideals about that next step, and next step, and so on, come at ever-increasing burdens to manufacturers, shoppers, and build costs.

Like loki1944, I care more about reliability. To some extent the two go hand in hand: a more efficient design produces less heat, which directly affects how long the two (arguably) shortest-lived components, capacitors and fans, last. Yet when a design has greater complexity to reach higher efficiency, there's more to go wrong, and reverse engineering for repair becomes much more of a hassle.

Yes, I repair PSUs that are worth the bother, though that's starting to split hairs, since most that are worth the bother don't fail in the first place unless they saw a power surge that fried the switching transistors.

The other problem with complexity is corner-cutting to reach attractive price points. "Most" PCs don't need much more than a median-quality 300W PSU, but those are not very common at retail these days, as opposed to in OEM systems, so you end up paying more to get quality and end up with a higher wattage than you need for all but your gaming system. Increase complexity and we're paying even more.

Anyway, PSU efficiency doesn't matter as much to me as it did in the past, like around the Athlon XP era, when many motherboards had HALT disabled and your PC was a space heater even when sitting idle. Ironically, the big 4K monitor on the build I'm typing on right now uses more power than the PC itself.

Maybe we need an efficiency rating system for monitor PSUs!

Aris_Mp A proper series of tests besides efficiency can also evaluate a PSU's reliability, to a degree at least. For example, none of the firecracker PSUs on the market today will survive full load at an increased operating temperature.

Moreover, efficiency testing doesn't prevent you from observing other parameters of a PSU's operation as well, like ripple.

Aris_Mp @CHUMLY I know the guy at TPU very well, so this isn't a problem :) The actual problem is that every reviewer has their own methodology and equipment, so it cannot be a standard for all of us.

To make a standard that all reviewers can follow, you have to make sure that each of them uses exactly the same equipment and methodology. And not all reviewers can afford Chroma setups and super-expensive power meters, since most of them do this as a hobby and don't make any serious profit.

It would also be boring if the same methodology applied to all PSU reviewers (and not only PSU reviewers). In my opinion, it is nice to have variations, since this way a reviewer can cover areas that others don't.