The Story Of How GeForce GTX 690 And Titan Came To Be

When something impresses me, I want to know more, whether it's wine, music, or technology. Months ago, Nvidia dropped off its GeForce GTX 690 and I didn’t know whether to game on it or put it in a frame. This is the story of its conception.

Want To Know The Back-Story Of GeForce GTX 690 And Titan?

When Nvidia’s GeForce GTX 680 launched, it was both faster and less expensive than AMD’s Radeon HD 7970. But the GK104-based card still looked pretty familiar. For all its conservative power consumption and quiet cooler, it still employed a light plastic shroud.

That’s not really a big deal in the high-end space. For as far back as I can remember, $500 gaming boards employed just enough metal to support a plastic shell, but lacked any substantial heft. Cooling fans typically ranged from tolerable under load to “…the heck were they thinking?” And power? Well, I was just stoked to see a flagship from Nvidia dip below 200 W.

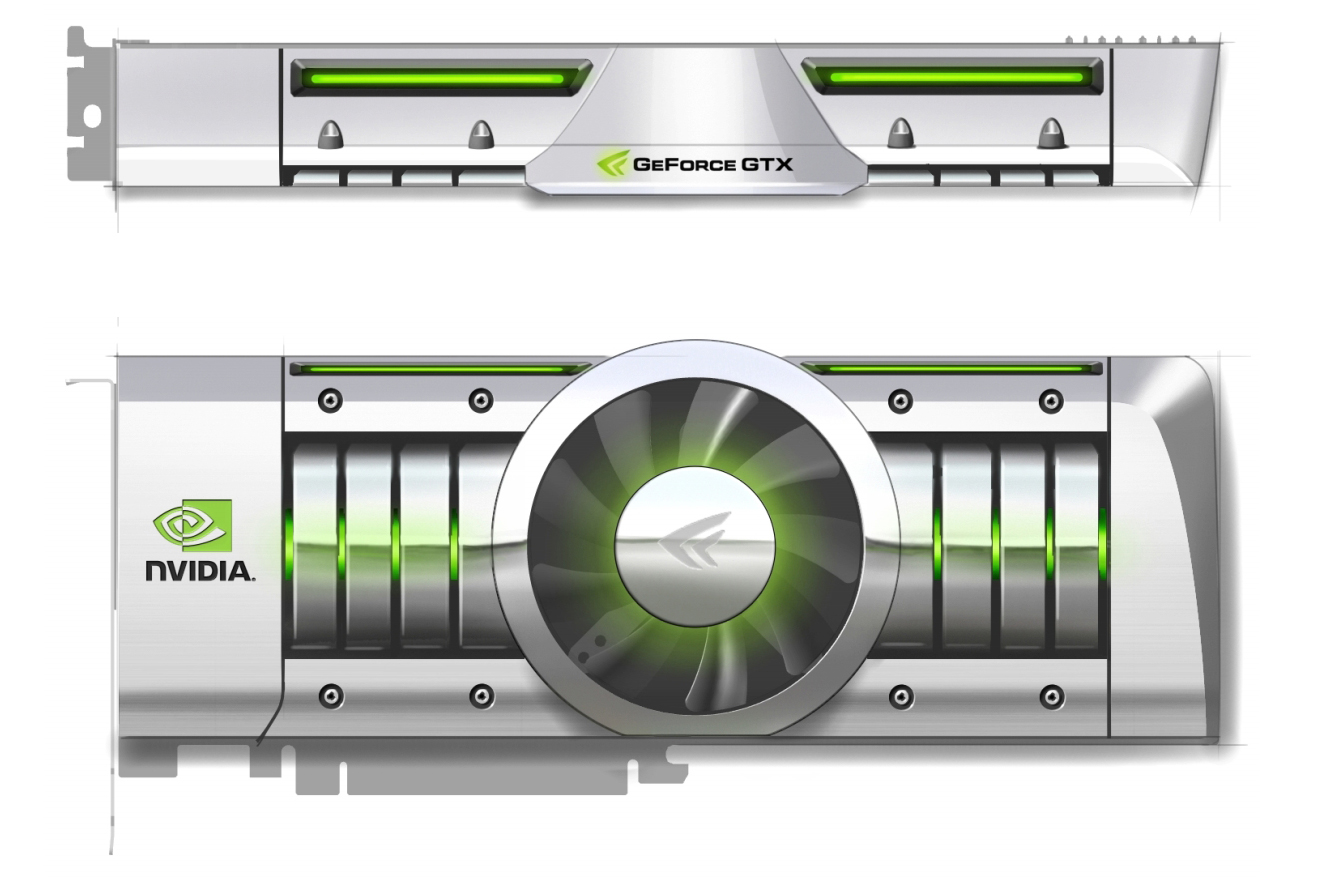

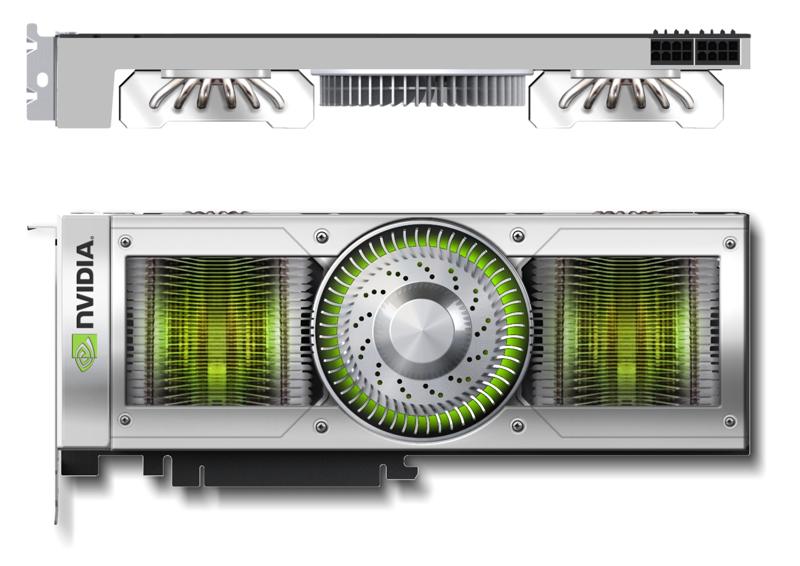

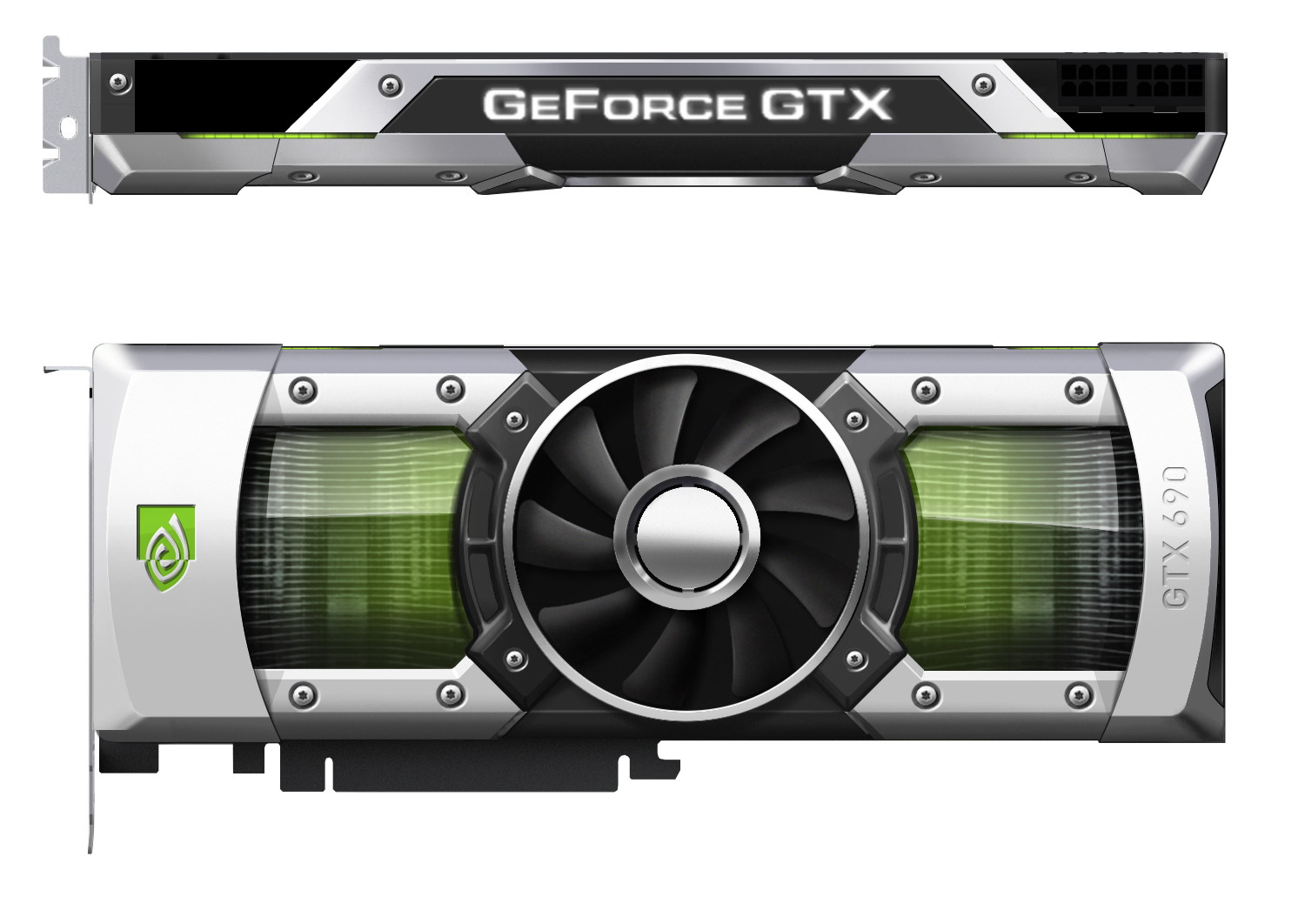

But then came GeForce GTX 690. And then Titan. And then 780. And then 770. Nvidia’s reference design for each was (and I’m going to sound like a total hardware dweeb here) beautiful. Not femininely beautiful à la Monica Bellucci’s lips, but mechanically beautiful, like an Aston Martin DBS. The 690 introduced us to a chromium-plated aluminum frame, a magnesium alloy fan housing, a vapor chamber heat sink over each GPU visible through a polycarbonate window, and LED lighting on top of the card, controllable through a software applet that companies like EVGA make available to download.

The models that followed actually used slightly different materials for the shroud, and weren’t quite as chiseled. But the aesthetic was similarly high-end. Each time a shipment of cards showed up, I was excited…and that doesn’t happen very often.

It’s Not Easy To Impress An Old-Timer

All right, at 33 I’m not really that old. But I started reviewing hardware back when I was 18, more than 15 years ago. In the last decade and a half, only a few components have really gotten my blood pumping. Previewing a very early Pentium III and an 820-based motherboard with RDRAM months before Intel introduced the platform was cool. So was the first time I got my hands on ATI’s Rage Fury MAXX. When AMD launched its Athlon 64 FX-51 CPU, it shipped a massive high-end system that I used in the lab for months afterward. And who could forget the Radeon HD 5870 Eyefinity 6 Edition, if only for the novelty?

I was easily as excited about GeForce GTX 690. I have a dead one in my office, and I swear it needs to live out the rest of its days in a frame on the wall.

Of course, when I’m evaluating a piece of hardware, good looks rank relatively low on the list of attributes that dictate a recommendation. Performance, power, noise, and pricing weigh far more heavily. So, in the conclusion of GeForce GTX 690 Review: Testing Nvidia's Sexiest Graphics Card, we pointed out that two GTX 680s are faster and better equipped to handle the heat they generate. I also brought up the card's then-dismal availability. But all the while, I wanted someone at Nvidia to tell me what it took, at a company level, to make the card’s industrial design a reality when it so clearly seemed “overbuilt.” Who was responsible? Would GeForce GTX 690 forever alter the minimum acceptable bling on a high-end gaming card?


At the time, Nvidia still had GeForce GTX Titan, 780, and 770 in its back pocket. When I approached Nvidia’s PR team for a more intimate look at what went into the 690, it said “we’ll see.”

That was more than a year ago, though, and now Nvidia’s plans are much more public. Several months back, during a trip to Santa Clara for a deep-dive on Tegra 4, I sat down with Andrew Bell, vice president of hardware engineering, and Jonah Alben, senior vice president of GPU engineering, to talk about the conception of this design I admired so much and what it took to make it a reality.


CaptainTom: You could build cars that go 300 MPH, get 60 MPG, and are as strong as tanks, but if they cost as much as a house... who cares? Yeah, more money buys more. What is so impressive here?

Granted, it sure as hell is more impressive than the gains Intel makes every year, but then again, everything is impressive compared to that...

jimmysmitty (replying to CaptainTom):

If you consider that Intel is working within a much tighter TDP, it makes sense that they don't have massive jumps every year. With two to three times the TDP, GPU makers can throw billions of transistors at the problem and fit more and more into the same area with every die shrink.

As well, it's not like AMD is pushing Intel to do much anyway. FX is not competitive enough to push the high-end LGA 2011 platform and barely pushes LGA 1155, let alone 1150.

As for the design, I will admit it is beautiful. But my one issue is that the aluminum shroud brings more weight, and more weight means more stress on the PCIe slot. Cards are getting bigger, not smaller. I remember when I had my X850 XT PE. It took up one slot and was a top-end card. Even the X1800 took only one, non-reference designs aside. Now they take up two slots minimum and are pushing into three. My 7970 Vapor-X pushes into the third slot and weighs a lot too.

Soon we will have four-slot single-GPU cards that push into the HDD area.

bystander: @the above

Realize that GPUs do parallel processing, and a good chunk of the improvement in GPU speed comes from adding more and more processors, not just from speeding up each processor. Intel works with CPUs, which run largely serial code, and it cannot just add more processors to speed things up.

Imagine if CPUs could just add more cores and each core automatically sped things up without anyone having to code for it. That is what GPUs can do, and that is why they have been able to advance at a faster rate than CPUs.

CaptainTom: ^ Yes, but Intel could get rid of the HD 4600 on the desktop i5s and i7s and spend those transistors on making the CPU significantly faster. Maybe it would use more power, but that's better than Haswell's side-grade over Ivy Bridge.

emad_ramlawi: I'm an AMD fan when it comes to GPUs, but I have to hand it to Nvidia: the Titan and anything cut down from GK110 look impressive. It's really great that the stock heat sink design is superior from the get-go; notice how many GK110 cards from different manufacturers look the same, with the same heat sink and usually the same price, and they just slap their label on it. At the same time, using top-notch materials on a card that costs $600-1,000 is not evolutionary, and I don't believe in trickle-down economics.

scrumworks: Gotta "love" how loyal Tom's is to Nvidia. The bias will never stop until a couple of those key people leave, and I don't see that happening any time soon.

kartu: What a biased article...

690 is a dual GPU card, Titan is not.

690 is about 20% faster than Titan.

NEWSFLASH:

7990 is 25% faster than Titan.

Source: xbitlabs

yannigr: Titan is an impressive card. The 690 is an impressive card. The 7990 is an impressive card. The 9800 GX2 I had in my hands two years ago was a monster, a truly impressive card. If only it had 2 GB of memory (2 x 1 GB)...

Anyway, all of those are old news now. The article is interesting, but the fact is that we are waiting to see more news about Hawaii, later about Mantle, and in a few months about Maxwell.

iam2thecrowe (replying to kartu):

Newsflash: the 7990 is hotter and noisier and suffers from poor frame latency, particularly when running multiple displays, where nearly 50% of frames are dropped completely before they reach the monitor...

Seriously, Tom's is more often AMD-biased than Nvidia-biased, so don't complain about just one article.