Testing Nvidia's Multi-Res Shading In Shadow Warrior 2

There are a couple of ways to improve graphics performance. Either you equip GPUs with more resources and rely on brute force, or you get creative and come up with efficiency optimizations. Nvidia's Multi-Res Shading technology is a good example of the latter.

Originally intended for VR, where a single scene is rendered from multiple viewports, Multi-Res Shading has found another application: improving the gaming performance of graphics cards by reducing the rendering quality of certain portions of the image. Shadow Warrior 2 is the first non-VR title to benefit from this functionality. According to Nvidia, Multi-Res Shading raises performance by around 30 percent without much degradation to visual quality. That is exactly what we want to test: how well does graphics quality hold up, and is there a quantifiable performance gain?

Shadow Warrior 2: MRS In Action

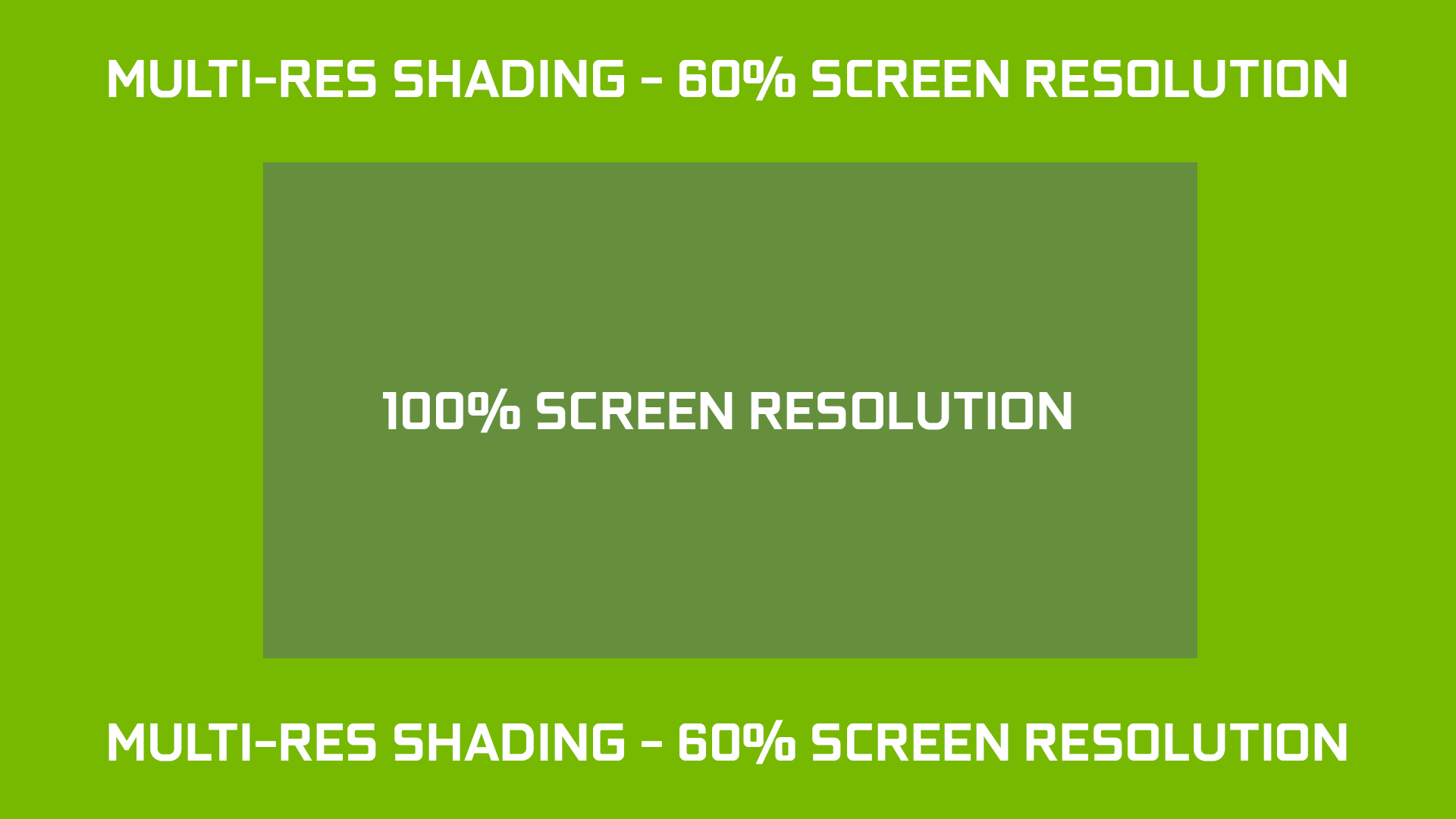

If you own a GeForce GTX 900-series (Maxwell) or 1000-series (Pascal) card, you can activate Multi-Res Shading in Shadow Warrior 2. Two options are available: Conservative and Aggressive. In Conservative mode, the resolution along the borders of the image is reduced by 40%; in Aggressive mode, it is reduced by 60%. The size of these border zones also depends on the option you choose: 20% (Conservative) or 22% (Aggressive) on the left and right, and 18% or 20%, respectively, at the top and bottom of the image.
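To get a feel for how much shading work these presets remove, the figures above can be turned into a rough pixel budget. The sketch below is a back-of-the-envelope model, not Nvidia's or the game's actual implementation. It assumes a 3x3 split of the screen, that each border percentage applies per side, and that "reduced by 40%" means a linear scale factor of 0.6 applied along the compressed axis (corners are compressed along both axes). The names `MrsMode`, `shaded_fraction`, and `shaded_pixels` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MrsMode:
    """Hypothetical description of one MRS preset, using the article's figures."""
    name: str
    side_fraction: float  # width of EACH left/right border zone, fraction of screen width (assumed per side)
    vert_fraction: float  # height of EACH top/bottom border zone, fraction of screen height (assumed per side)
    reduction: float      # assumed linear resolution reduction inside the border zones


CONSERVATIVE = MrsMode("Conservative", side_fraction=0.20, vert_fraction=0.18, reduction=0.40)
AGGRESSIVE = MrsMode("Aggressive", side_fraction=0.22, vert_fraction=0.20, reduction=0.60)


def shaded_fraction(mode: MrsMode) -> float:
    """Fraction of the full-resolution pixel count that is actually shaded.

    Assumes a 3x3 split: the center keeps full resolution, left/right zones
    are scaled horizontally only, top/bottom zones vertically only, and the
    corners in both directions. Under that model the shaded area factors
    into an effective width times an effective height.
    """
    scale = 1.0 - mode.reduction
    eff_width = (1.0 - 2 * mode.side_fraction) + 2 * mode.side_fraction * scale
    eff_height = (1.0 - 2 * mode.vert_fraction) + 2 * mode.vert_fraction * scale
    return eff_width * eff_height


def shaded_pixels(width: int, height: int, mode: MrsMode) -> int:
    """Approximate number of shaded pixels at a given output resolution."""
    return round(width * height * shaded_fraction(mode))


if __name__ == "__main__":
    for w, h in [(1920, 1080), (2560, 1440), (3840, 2160)]:
        for mode in (CONSERVATIVE, AGGRESSIVE):
            print(f"{w}x{h} {mode.name}: {shaded_pixels(w, h, mode):,} of {w * h:,} pixels "
                  f"({shaded_fraction(mode):.1%})")
```

Under these assumptions, Conservative mode shades about 72% of the pixels and Aggressive about 56%. How much of that saving shows up as extra frames per second depends on how much of each frame's cost is pixel shading rather than geometry or CPU work.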

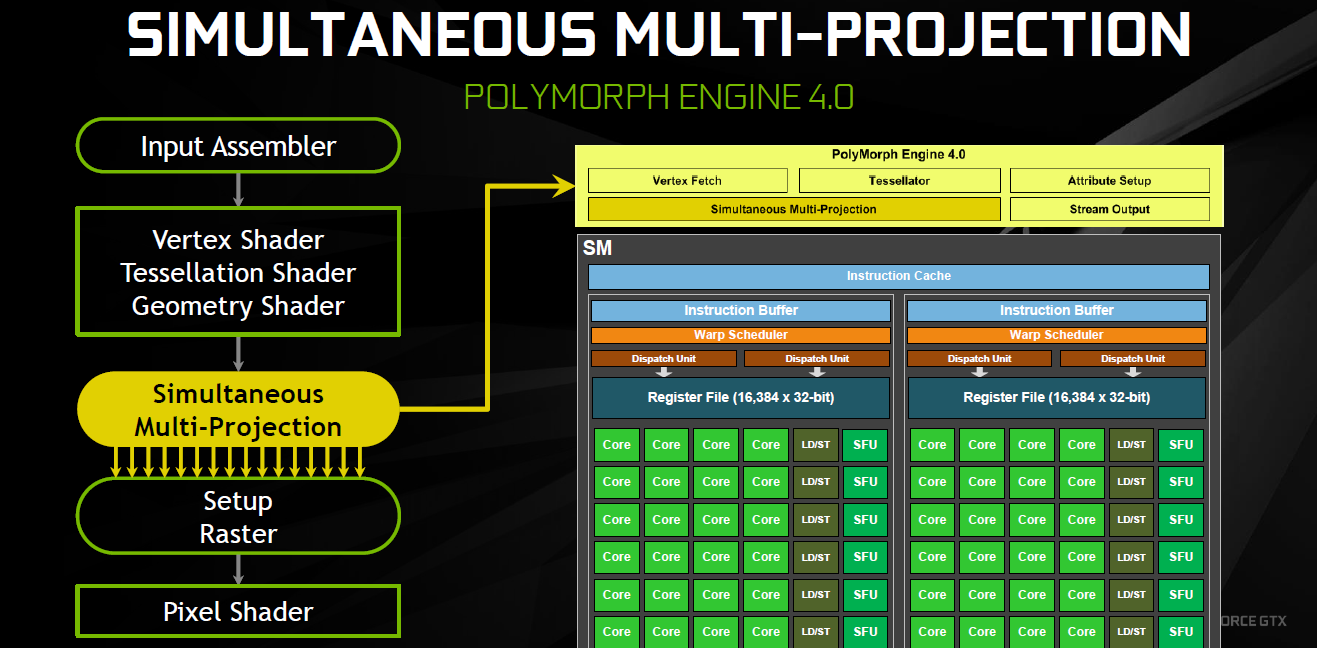

Certain effects (like lens flares) are also diminished, or even removed entirely. This is a legitimate criticism of the technology. Resolution isn't the only attribute affected by MRS; certain details also seem to be handled differently by the GPU's PolyMorph Engine, which is responsible for processing geometry.

There are also differences between Maxwell and Pascal. Nvidia added a “Simultaneous Multi-Projection” block to Pascal (PolyMorph Engine 4.0), while Maxwell must make do with its “Viewport Multicast” block. In theory, then, Multi-Res Shading should be less efficient on Maxwell than on Pascal.
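Both hardware blocks exist so that geometry submitted once can be rasterized into several viewports, which is what a split-screen scheme like MRS needs. The sketch below is a conceptual illustration only: it lays out a hypothetical 3x3 grid of viewports (assuming the split implied by the separate left/right and top/bottom zone sizes) and reports which cells a triangle's screen-space bounding box overlaps, i.e. the viewports it would conceptually have to be broadcast to. It does not model what either GPU actually does internally; `Viewport`, `mrs_viewports`, and `viewports_touched` are invented names.

```python
from typing import NamedTuple


class Viewport(NamedTuple):
    """One cell of a hypothetical 3x3 Multi-Res Shading layout."""
    screen_x: float  # left edge of the cell on the final screen (pixels)
    screen_y: float  # top edge of the cell on the final screen (pixels)
    screen_w: float  # cell width on screen
    screen_h: float  # cell height on screen
    scale_x: float   # horizontal resolution factor used when rendering this cell
    scale_y: float   # vertical resolution factor used when rendering this cell

    @property
    def render_size(self) -> tuple[float, float]:
        """Size of this cell in the reduced-resolution render target."""
        return self.screen_w * self.scale_x, self.screen_h * self.scale_y


def mrs_viewports(width: float, height: float, side: float, vert: float, scale: float) -> list[Viewport]:
    """Split the screen into a 3x3 grid (row-major); only border cells use the reduced scale."""
    # (start, size, scale) for left border, center, right border columns.
    cols = [(0.0, side * width, scale),
            (side * width, (1 - 2 * side) * width, 1.0),
            ((1 - side) * width, side * width, scale)]
    # Same for top border, center, bottom border rows.
    rows = [(0.0, vert * height, scale),
            (vert * height, (1 - 2 * vert) * height, 1.0),
            ((1 - vert) * height, vert * height, scale)]
    return [Viewport(x, y, w, h, sx, sy) for (y, h, sy) in rows for (x, w, sx) in cols]


def viewports_touched(grid: list[Viewport], x0: float, y0: float, x1: float, y1: float) -> list[int]:
    """Indices of grid cells overlapped by the bounding box [x0, x1) x [y0, y1)."""
    return [i for i, v in enumerate(grid)
            if x0 < v.screen_x + v.screen_w and x1 > v.screen_x
            and y0 < v.screen_y + v.screen_h and y1 > v.screen_y]
```

For example, with the Conservative figures at 1080p, `mrs_viewports(1920, 1080, side=0.20, vert=0.18, scale=0.6)` yields nine cells; a small triangle near the center lands only in cell 4, while one straddling the top-left boundary touches cells 0, 1, 3, and 4.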

Multi-Res Shading: Screen Captures

The following screen captures show several examples with MRS activated. Each trio of screen captures appears in the following order: MRS off, MRS Conservative, and MRS Aggressive.

Lens flare effects are deactivated as soon as MRS is turned on. Numerous shading and lighting effects disappear as well, and aliasing is clearly visible along the image's borders in the most aggressive MRS mode. Look at the blade of the sword, for example. The contours of the image are also blurrier, especially with MRS turned up.

Here again, lens flares disappear as soon as MRS is activated. The halo of light near the hole in the cave, toward the upper left, is only visible with MRS turned off. The edges of the puddle of water are blurry when MRS is cranked up. The mud stain just in front of the bridge partially disappears, even though it is located at the center of the image and therefore shouldn't be affected at all.

The difference in the reflections on the body of the vehicle in the foreground is striking, with MRS producing noticeably inferior quality. The ambient lighting of the scene is dimmer with MRS active, and the character on the right is pixelated in the aggressive MRS mode.

By removing numerous lighting effects (reflections, refractions, diffractions, scattering…), even at the center of the frame, MRS noticeably degrades the visual quality of this scene and strongly diminishes ambient lighting. The hologram to the right is particularly pixelated in the higher MRS mode.

Test Setup

Multi-Res Shading clearly has a visible impact on image quality, especially in Aggressive mode. But its primary goal is to improve performance, so let's see whether it does. We ran our tests on the game's first scene using the Ultra quality preset at 1080p, 1440p, and 4K with three mid-range GeForce graphics cards. The test system's software configuration was as follows:

| Software | Details |
|---|---|
| Graphics Drivers | Nvidia GeForce Game Ready 375.76 |
| Operating System | Windows 10 x64 Enterprise 1607 (14393.351) |
| Storage Controller | Intel PCH Z170 SATA 6Gb/s |

Benchmark Sequence

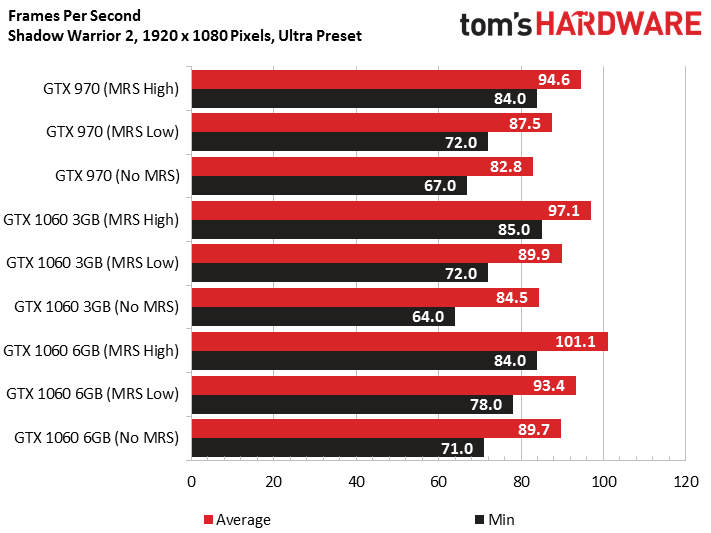

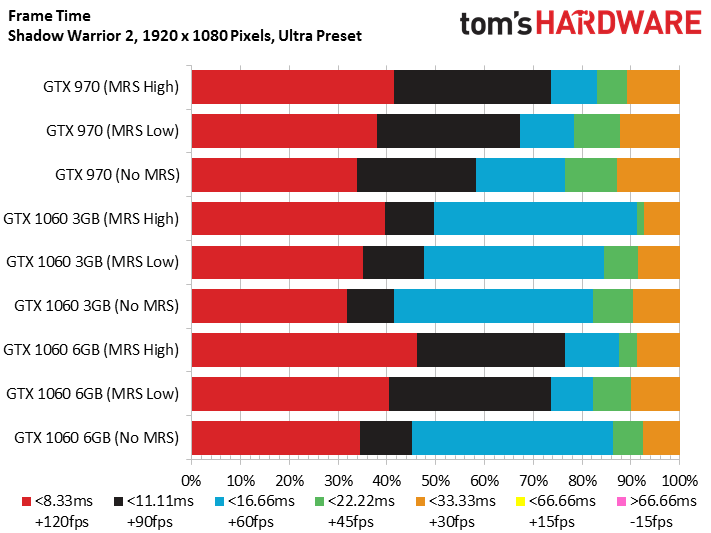

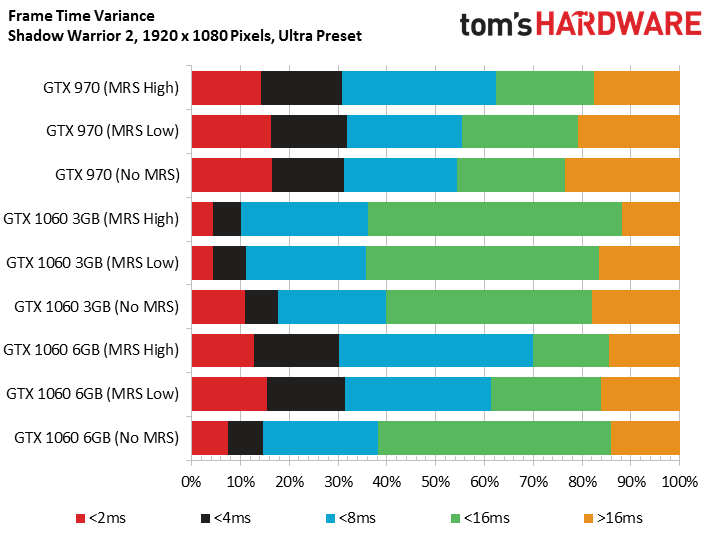

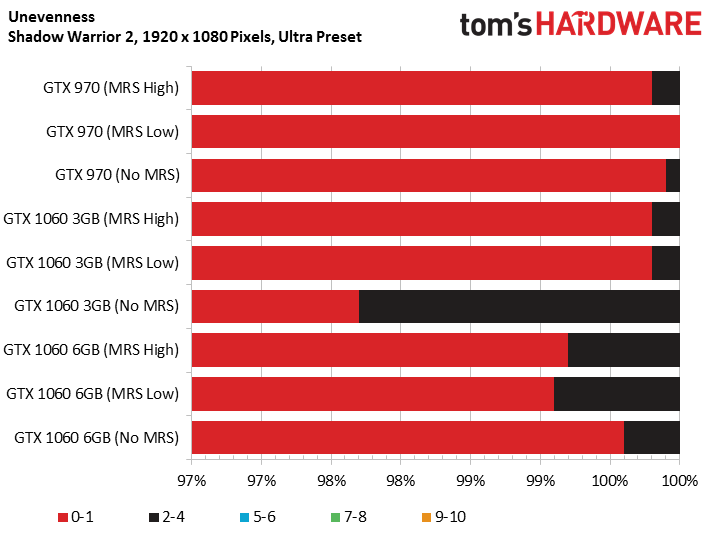

1920x1080

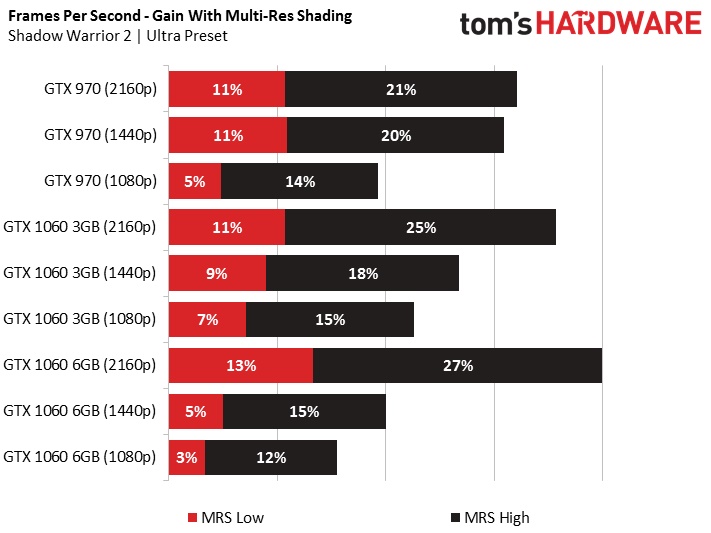

Although Multi-Res Shading does have a measurable impact on frame rate, performance without Nvidia's feature is already high enough that perceived smoothness is excellent in all cases. In other words, at 1080p, Multi-Res Shading isn't useful with these mid-range cards.

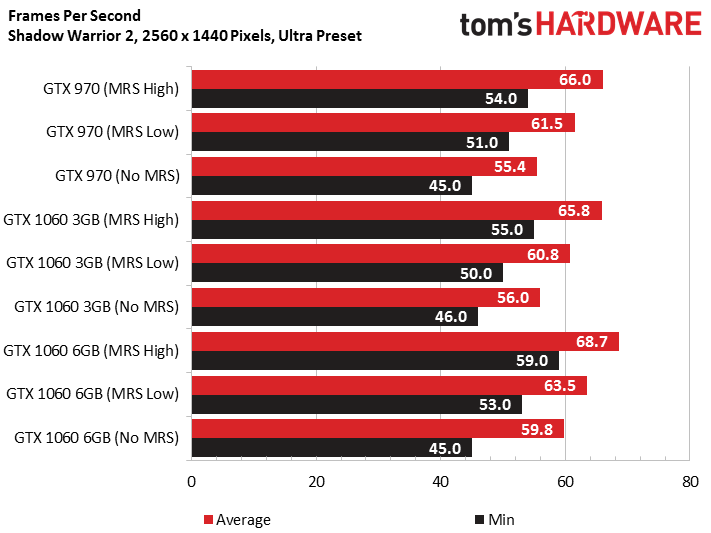

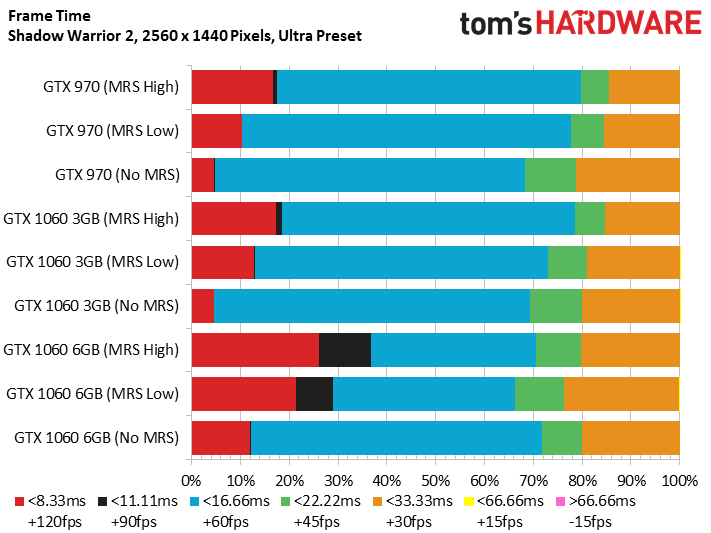

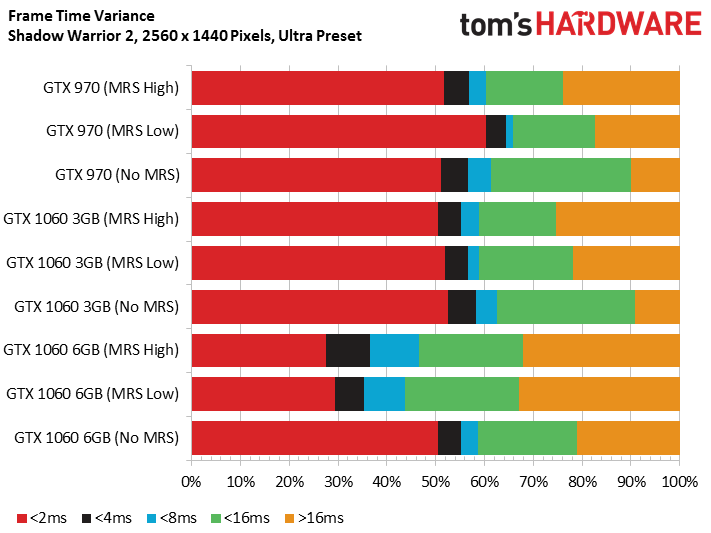

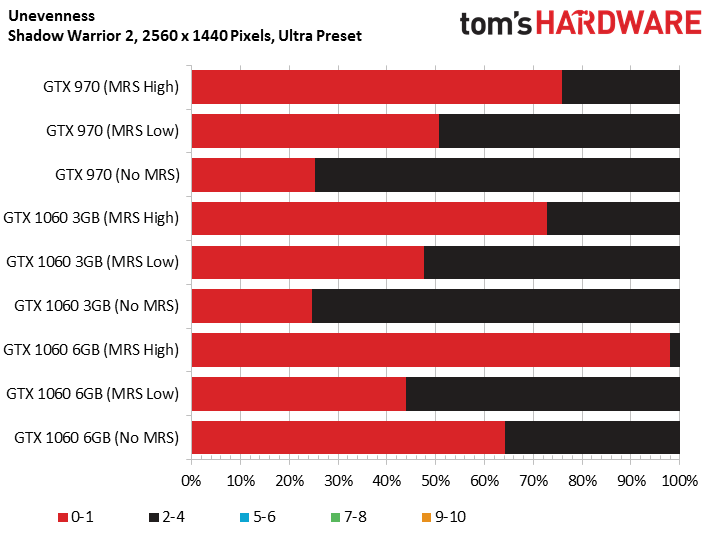

2560x1440

Here too, average and minimum frame rates rise sharply with either MRS setting. More importantly, activating MRS keeps all three cards from ever dipping below 50 FPS, and the improvement in perceived smoothness is noticeable. MRS finds real use at this resolution.
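The metrics discussed here (average frame rate, minimum frame rate, a 50 FPS floor, and the percentage gain Nvidia quotes) are easy to compute from per-frame render times. The following is a minimal sketch, assuming frame times in milliseconds are available from some frame-capture tool; the function names, and the use of the standard deviation of frame times as a stability proxy, are our own assumptions rather than the methodology behind the charts above. Note that a 50 FPS floor corresponds to a frame-time budget of 1000 / 50 = 20 ms.

```python
from statistics import pstdev


def summarize_frame_times(frame_times_ms: list[float]) -> dict[str, float]:
    """Summarize a benchmark run from per-frame render times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    total_seconds = sum(frame_times_ms) / 1000.0
    return {
        # Average FPS = frames rendered / elapsed time (not the mean of per-frame FPS values).
        "avg_fps": len(frame_times_ms) / total_seconds,
        # Minimum instantaneous FPS comes from the slowest single frame.
        "min_fps": 1000.0 / max(frame_times_ms),
        # Spread of frame times: a rough, assumed proxy for frame time stability.
        "frame_time_stdev_ms": pstdev(frame_times_ms),
    }


def percent_gain(baseline_fps: float, new_fps: float) -> float:
    """Relative speed-up of new_fps over baseline_fps, in percent."""
    return (new_fps / baseline_fps - 1.0) * 100.0


def stays_above(frame_times_ms: list[float], fps_floor: float) -> bool:
    """True if no frame exceeded the frame-time budget for fps_floor (50 FPS -> 20 ms)."""
    budget_ms = 1000.0 / fps_floor
    return max(frame_times_ms) <= budget_ms
```

Computing average FPS from total elapsed time, rather than averaging per-frame FPS values, keeps a handful of very fast frames from inflating the result.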

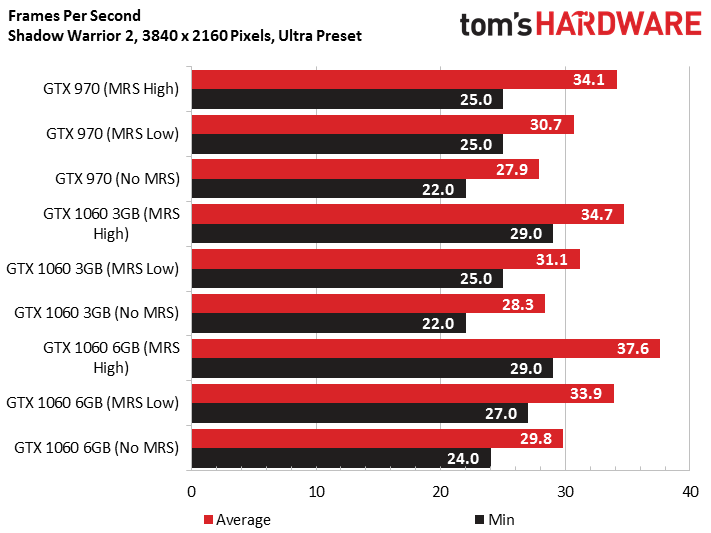

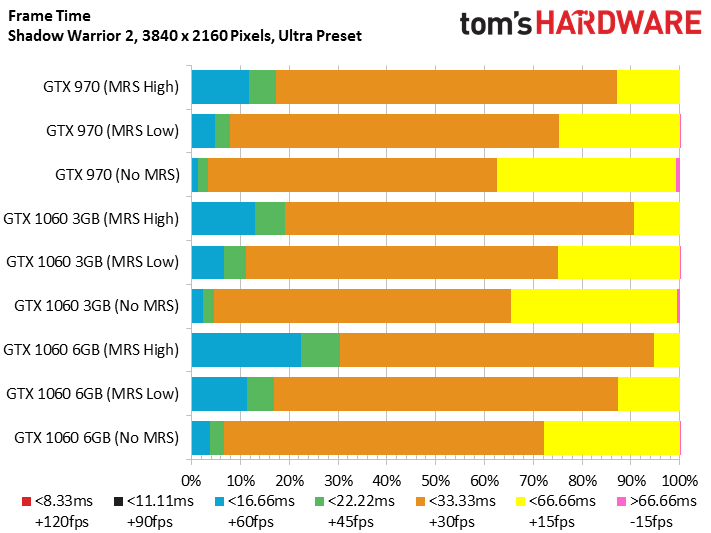

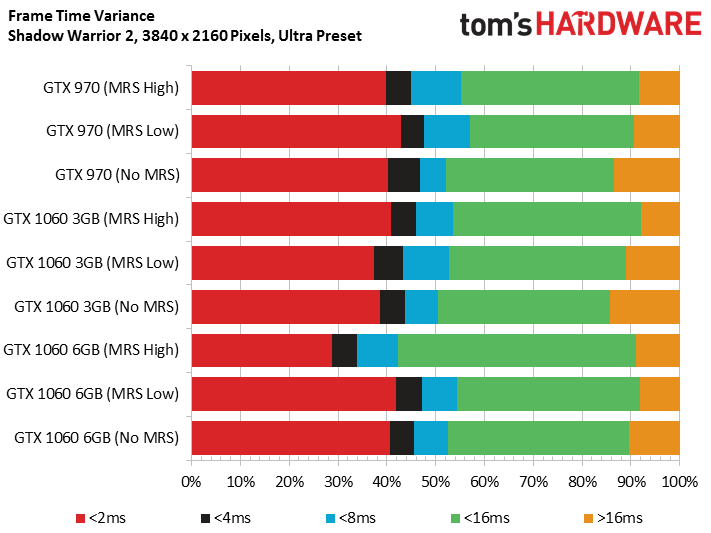

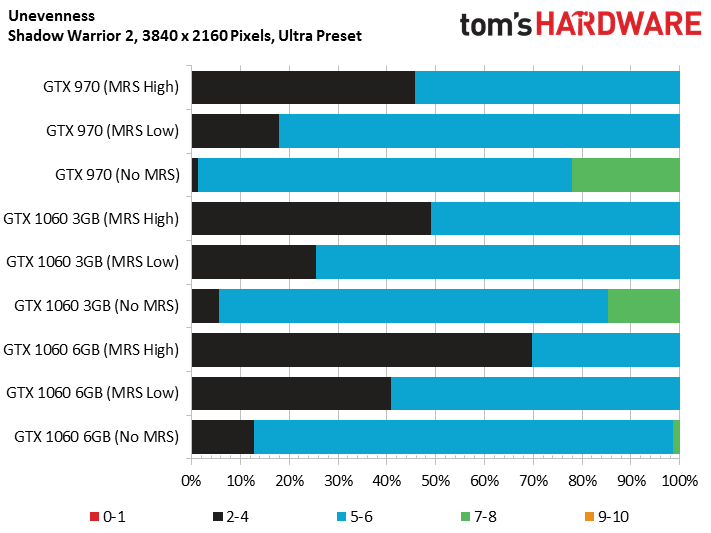

3840x2160

Multi-Res Shading has its greatest impact at 4K, whether measured in frames per second, frame time stability, or perceived smoothness. Even so, our three mid-range GeForce cards cannot sustain a frame rate high enough for smooth gameplay at this very high resolution, which is to be expected.

Conclusion

While Multi-Res Shading has a negative impact on visual quality that cannot be ignored, at least in this specific implementation, the performance gains attributable to the feature are quantifiable. The improvement is supposed to be greater on Pascal than on Maxwell, but our measurements don't appear to support this: the Maxwell-based GeForce GTX 970 benefits substantially (except at 4K), with the same visual results.

Our only disappointment is that the technology, as implemented in Shadow Warrior 2, doesn't stop at reducing the resolution of the image's periphery. It also removes some rendering effects, which degrades the scene's quality right in the middle of the screen, an area that is supposed to be untouched. In the end, the result falls well short of the screen captures provided by Nvidia.

Still, this option will prove useful in specific cases, such as our results at 1440p. A resolution like 1080p doesn't tax mid-range graphics cards enough to make the visual degradation worthwhile, and the GeForce GTX 970 and 1060 aren't fast enough to play at 4K, even with MRS. Finally, we recommend against using the Aggressive MRS mode because it degrades graphics quality too much for our liking.
