Next-Gen Video Encoding: x265 Tackles HEVC/H.265

Last month, the HEVC/H.265 standard was officially published. We recently got our hands on a pre-alpha build of x265, an HEVC encoder project from a company called MulticoreWare that's going to be licensed in much the same way as the famed x264 library.

Benchmarking Pre-Alpha x265

We got our hands on the early code and were able to run some preliminary numbers. Again, the developers say their code is still very early. It’s currently x86-only (albeit with support for advanced instruction sets like AVX2), lacks B-frame support (preventing it from achieving maximum compression), and doesn’t yet include some of the optimizations taken from x264 (like look-ahead and rate control). However, the features it does offer are good enough for some Core i7-4770K benchmarking as a precursor to what you might see in an upcoming processor review.

In the interest of transparency, we're using the following benchmark command: x265 --input Kimono1_1920x1080_24.yuv --width 1920 --height 1080 --rate 24 -f 240 -o q24_Kimono1.out --rect --max-merge 1 --hash 1 --wpp --gops 4 --tu-intra-depth 1 --tu-inter-depth 2 --no-tskip, with quantization parameters between 24 and 42. It's notable that we're employing GOP (Group of Pictures)-level parallelism to keep our quad-core -4770K busy. We're also adding the --cpuid switch to control the instruction sets being used.
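For readers who want to script a similar sweep, here is a minimal sketch that generates one command line per quantization parameter. The base flags are copied from the command above. Everything else is an assumption: the command as quoted does not show how QP was passed, so the `--qp` flag (the spelling used by later x265 releases) is a placeholder that may not match the pre-alpha build, the step of 6 between QP values is our own choice, and the output naming simply extends the `q24_Kimono1.out` pattern. The `--cpuid` switch is left out because its values aren't listed here.

```python
import shlex

# Flags copied verbatim from the benchmark command quoted in the article.
BASE_FLAGS = (
    "--input Kimono1_1920x1080_24.yuv --width 1920 --height 1080 --rate 24 "
    "-f 240 --rect --max-merge 1 --hash 1 --wpp --gops 4 "
    "--tu-intra-depth 1 --tu-inter-depth 2 --no-tskip"
)


def build_commands(qps, qp_flag="--qp", binary="x265"):
    """Return one argv list per QP value.

    qp_flag is an ASSUMPTION: the article does not show how QP was set,
    and the pre-alpha build may have used a different option name.
    """
    commands = []
    for qp in qps:
        argv = [binary] + shlex.split(BASE_FLAGS)
        # Output name follows the q24_Kimono1.out pattern from the article.
        argv += ["-o", f"q{qp}_Kimono1.out", qp_flag, str(qp)]
        commands.append(argv)
    return commands


if __name__ == "__main__":
    # QP 24 to 42 in steps of 6; the step size is our assumption.
    for argv in build_commands(range(24, 43, 6)):
        print(shlex.join(argv))
```

Each returned list can be handed to `subprocess.run` and timed, which keeps the sweep reproducible without retyping the long option string.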

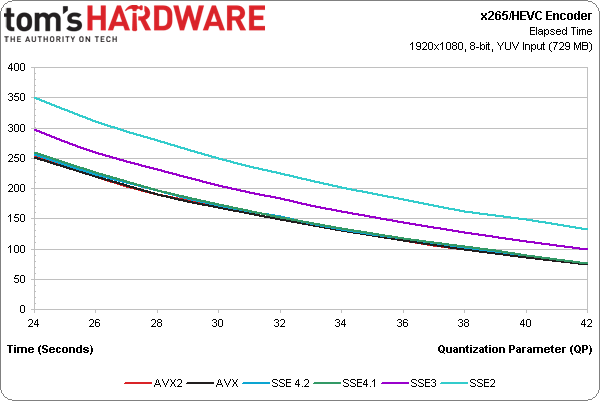

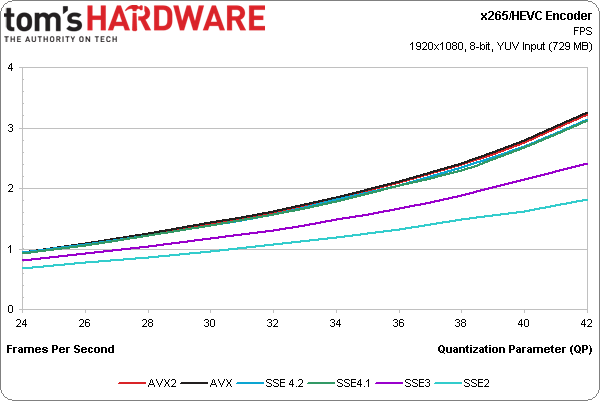

The first observation we’re able to make is that x265 currently sees the most benefit from optimizations related to SSE3 and then SSE4.1. Gains attributable to AVX and AVX2 are still relatively small. According to the lead developer, the one part of the encoder utilizing AVX2, motion search, isn’t particularly performance-critical.

The x265 team is hoping to achieve real-time 1080p30 encoding on a Xeon-based server with 16 physical cores by next month. At this early stage, however, we’re still under 4 FPS using a quad-core -4770K.

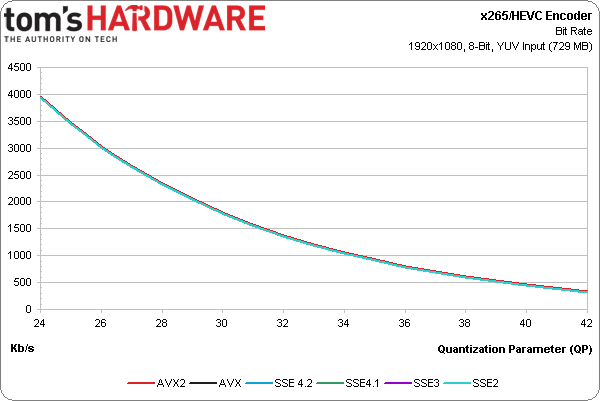

Performance scales up as the quantization parameter increases, at the cost of quality. Naturally, as you approach the maximum QP of 51 (we’re only testing up to 42), bit rates drop and the encoder’s speed increases.
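To give a feel for why higher QPs shrink the bit rate, here is a rough sketch of how QP maps to quantizer step size on the H.264/HEVC QP scale, where the step doubles for every increase of 6. The closed-form expression is the usual approximation; a real encoder uses integer scaling tables and rounding, so treat the numbers as illustrative rather than as what x265 computes internally.

```python
def qstep(qp):
    """Approximate quantizer step size for an H.264/HEVC-style QP scale.

    The step doubles every 6 QP; 2 ** ((qp - 4) / 6) is the common
    closed-form approximation, which gives a step of 1.0 at QP 4.
    """
    return 2.0 ** ((qp - 4) / 6.0)


if __name__ == "__main__":
    for qp in (24, 30, 36, 42, 51):
        print(f"QP {qp:2d}: step ~ {qstep(qp):7.2f}")
    # Going from QP 24 to QP 42 is 18 steps, i.e. three doublings.
    print(f"QP 42 vs QP 24: {qstep(42) / qstep(24):.1f}x coarser")
```

A coarser step discards more detail from each transformed block, leaving fewer non-zero coefficients to code, which is consistent with the falling bit rates and rising speed in our charts.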

Although QP changes affect performance and encode time, the bit rate at any given QP does not vary from one instruction set to the next, with one exception. For some reason, we observed a very slightly higher rate with AVX2 enabled, which is why you see the red line peeking out above the other overlapped results.

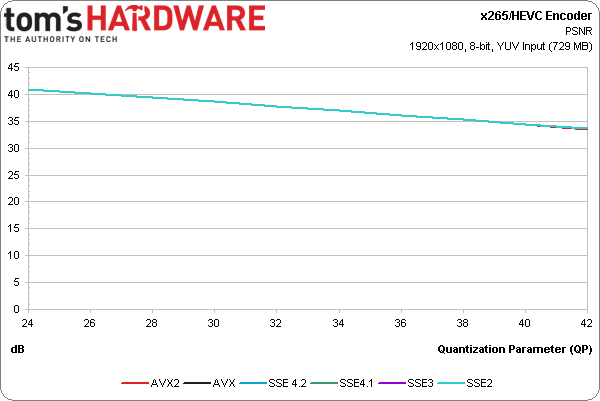

You can draw conclusions about video quality two ways: objectively, using mathematical models, or subjectively, by looking at two clips and creating your own evaluation. PSNR, or peak signal-to-noise ratio, is the most commonly used objective technique. It expresses, in decibels, how large the error introduced by compression is relative to the peak signal value, measured against the reference image (in our case a raw YUV file). This isn’t a perfect representation of quality, but, in general, a higher PSNR corresponds to an output more representative of the original.
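As a minimal sketch of the calculation, PSNR is 10·log10(peak² / MSE), where MSE is the mean squared difference between reference and decoded samples. The function below assumes 8-bit samples (peak of 255) passed as flat sequences, for instance one luma plane. The function name and sample values are ours for illustration, and this is not the measurement code built into x265.

```python
import math


def psnr(reference, decoded, peak=255):
    """PSNR in dB between two equal-length sequences of sample values."""
    if len(reference) != len(decoded):
        raise ValueError("inputs must have the same number of samples")
    if len(reference) == 0:
        raise ValueError("inputs must not be empty")
    # Mean squared error between reference and decoded samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, decoded)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: no error at all
    return 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    ref = [100, 120, 140, 160]  # made-up sample values
    dec = [101, 119, 142, 158]  # the same samples with small errors
    print(f"{psnr(ref, dec):.2f} dB")
```

Because the scale is logarithmic, halving the mean squared error adds roughly 3 dB.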


Again, we see consistency across each instruction set, except for AVX2, which dips a bit at the highest QPs.


Jindrich Makovicka In addition to PSNR comparison, I'd be much more interested in the SSIM metric, which is better suited for codecs using psychovisual optimizations.

PSNR can be usable when testing varying parameters for one codec, but not so much when comparing two completely different codecs.

CaedenV Nice intro to the new codec!

And to think that this is unoptimized... Once this is finalized it will really blow 264 out of the water and open new doors for 4K content streaming, or 1080p streaming with much better detail and contrast. This is especially important with the jump to 4K video. The 16x16 grouping limit on x264 is great for 1080p, but with 4K and 8K coming down the pipe in the industry we need something better. The issue is that we really do not have many more objects on the screen than we did back in the days of 480i video; it is merely that each object is more detailed. Funny thing is that a given object will typically have more homogeneous data across its surface area, and when you jump from 1080p to 4K (or 8K as is being done for movies) it takes a lot more 16x16 groupings, which may all relay the same information if they are describing a large simple object. Moving up to 64x64 alone allows for 8K groupings that take up the same percentage of the screen as 16x16 groupings do in 1080p.

nibir2011 Considering the CPU load, I think it won't be a viable solution for almost any home user within the next 2-3 years unless CPUs get exceptionally fast.

Of course then we have the Quantum Computer. ;)

Shawna593767 Quantum computers aren't fast enough for this; they get their speed by doing fewer calculations.

For instance, a faster per-clock x86 computer might have to do, say, 10 million calculations to find something, whereas the quantum computer is slower per clock but would only need 100,000 calculations.

nibir2011 (in reply to Shawna593767) Well, a practical quantum computer does not exist. lol

I don't think that is the case with calculation; I think what you mean is accuracy. The number of calculations won't be different; what changes is how many times the same calculations need to be done. In theory, a quantum computer should be able to make perfect calculations, as it can get all the possible results through the parallelism of its bits. A normal CPU can't do that; it has to evaluate each result separately. So a quantum computer is far more efficient than any traditional CPU. Speed is different; it depends on both algorithm and architecture. Quantum algorithms are in their infancy. Last year, maybe, a quantum algorithm for finding primes was theorized. I do not know if we will see a quantum computer capable of doing what regular computers do in the next 30 years.

thanks


InvalidError Most of the 10-bit HDR files I have seen seem to be smaller than their 8-bit encodes for a given quality. I'm guessing this is due to lower quantization error: less bandwidth wasted on fixing color and other cumulative errors and noise over time.

ddpruitt I know it's a minor detail but it's important:

H.264 and H.265 are NOT encoding standards, they are DECODING standards. The standards don't care how the video is encoded, just how it's decoded. I think this should be made clear, because the article implies they are encoding standards and people incorrectly assume one implies the other. x264 and x265 are just open source encoders that encode to formats that can be decoded properly by H.264 and H.265 decoders.

x264 has noticeable issues with blacks; they tend to come out grey. I would like to see if x265 resolves the problem. I would also like to see benchmarks on the decoding end (CPU load, power usage, etc.), as I see this becoming an issue in the future with streaming video on mobile devices and laptops.

Nintendo Maniac 64 You guys should really include VP9 in here as well, since unlike VP8 it's actually competitive according to the most recent testing done on the Doom9 forums, though apparently the reference encoder's 2-pass mode is uber slow.