MLPerf Client 1.0 AI benchmark released — new testing toolkit sports a GUI, covers more models and tasks, and supports more hardware acceleration paths

A useful new tool for navigating the highly fluid world of client AI performance

The AI revolution is upon us, but unlike past shifts in computing, most of us interact with the most advanced versions of AI models in the cloud. Leading services like ChatGPT, Claude, and Gemini all remain cloud-based. For reasons including privacy, research, and control, however, locally run AI models are still of interest, and it's important to be able to reliably and neutrally measure the AI performance of client systems with GPUs and NPUs on board.

Client AI remains a highly fluid space as hardware and software vendors work to define the types of workloads best suited for local execution and the best compute resources upon which to execute them. To help navigate this rapidly changing environment, the MLCommons consortium and its MLPerf Client working group maintain a client benchmark that's developed in collaboration with major hardware and software vendors.

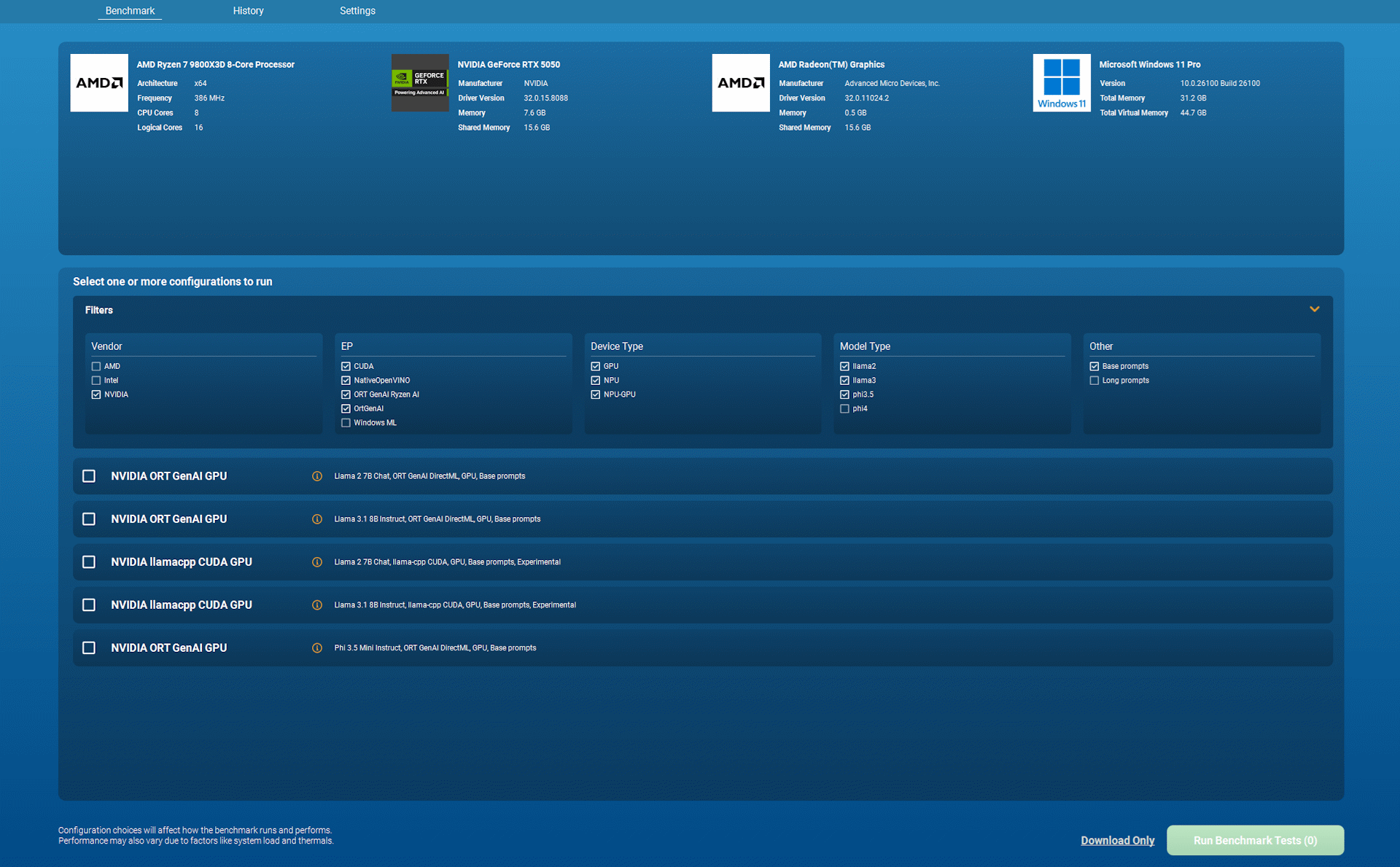

MLPerf Client 1.0 has just been released with some big improvements over the prior version 0.6 benchmark. This new tool includes more AI models, supports hardware acceleration on more devices from more vendors, and tests a broader range of possible user interactions with large language models. It also sports a user-friendly graphical interface that should broaden the appeal of this test for more casual users.

MLPerf Client 1.0 can now test performance with Meta's Llama 2 7B Chat and Llama 3.1 8B Instruct models, as well as Microsoft's Phi 3.5 Mini Instruct. It also offers experimental support for the Phi 4 Reasoning 14B model, which serves as a preview of performance with a next-gen language model that has more parameters and greater capabilities than the others in the suite.

MLPerf Client 1.0 also explores performance across a greater range of prompt types. It now examines performance for code analysis, a task developers commonly hand to language models nowadays. As an experimental feature, it can also measure content summarization performance with large context windows of 4000 or 8000 tokens.

This range of models and context sizes gives hardware testers like yours truly a more broadly scalable set of workloads across more devices. For example, some of the experimental workloads in this release require a GPU with 16GB of VRAM to run, so we can stress higher-end hardware, not just integrated graphics and NPUs.

The hardware and software stacks of client AI are fluid, and the various ways that one can accelerate AI workloads locally are frankly dizzying. MLPerf Client 1.0 covers more of these acceleration paths across more hardware than before, most notably for Qualcomm and Apple devices. Here's a list of the supported paths:

- AMD NPU and GPU hybrid support via ONNX Runtime GenAI and the Ryzen AI SDK

- AMD, Intel, and NVIDIA GPU support via ONNX Runtime GenAI-DirectML

- Intel NPU and GPU support via OpenVINO

- Qualcomm Technologies NPU and CPU hybrid support via Qualcomm Genie and the QAIRT SDK

- Apple Mac GPU support via MLX

Version 1.0 of the benchmark also supports these experimental hardware execution paths:

- Intel NPU and GPU support via Microsoft Windows ML and the OpenVINO execution provider

- NVIDIA GPU support via Llama.cpp-CUDA

- Apple Mac GPU support via Llama.cpp-Metal
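
For testers juggling several machines, the two lists above can be captured as a small lookup table. The following is only a sketch in Python: the `Path` record, the `PATHS` table, and the `paths_for` helper are hypothetical names made up for illustration and are not part of MLPerf Client itself. The entries simply restate the paths listed above, with the shared DirectML path split into one row per GPU vendor.

```python
from typing import List, NamedTuple, Tuple


class Path(NamedTuple):
    """One acceleration path as described in the article (hypothetical record)."""
    vendor: str
    devices: Tuple[str, ...]
    stack: str
    experimental: bool


# Entries restate the article's lists; nothing here is read from the benchmark.
PATHS: List[Path] = [
    Path("AMD", ("NPU", "GPU"), "ONNX Runtime GenAI + Ryzen AI SDK", False),
    Path("AMD", ("GPU",), "ONNX Runtime GenAI-DirectML", False),
    Path("Intel", ("GPU",), "ONNX Runtime GenAI-DirectML", False),
    Path("NVIDIA", ("GPU",), "ONNX Runtime GenAI-DirectML", False),
    Path("Intel", ("NPU", "GPU"), "OpenVINO", False),
    Path("Qualcomm", ("NPU", "CPU"), "Qualcomm Genie + QAIRT SDK", False),
    Path("Apple", ("GPU",), "MLX", False),
    Path("Intel", ("NPU", "GPU"), "Windows ML + OpenVINO execution provider", True),
    Path("NVIDIA", ("GPU",), "Llama.cpp-CUDA", True),
    Path("Apple", ("GPU",), "Llama.cpp-Metal", True),
]


def paths_for(vendor: str, include_experimental: bool = False) -> List[Path]:
    """Return the listed paths for a vendor, optionally including experimental ones."""
    return [
        p for p in PATHS
        if p.vendor.lower() == vendor.lower()
        and (include_experimental or not p.experimental)
    ]


if __name__ == "__main__":
    for p in paths_for("Intel", include_experimental=True):
        tag = " (experimental)" if p.experimental else ""
        print(f"{p.vendor} {'+'.join(p.devices)}: {p.stack}{tag}")
```

Calling `paths_for("Apple", include_experimental=True)` would return both the MLX path and the experimental Llama.cpp-Metal path, which makes it easy to plan which runs to compare on a given machine.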

Last but certainly not least, MLPerf Client 1.0 now offers a graphical user interface that shows users the full range of benchmarks they can run on their hardware and lets them easily choose among them.

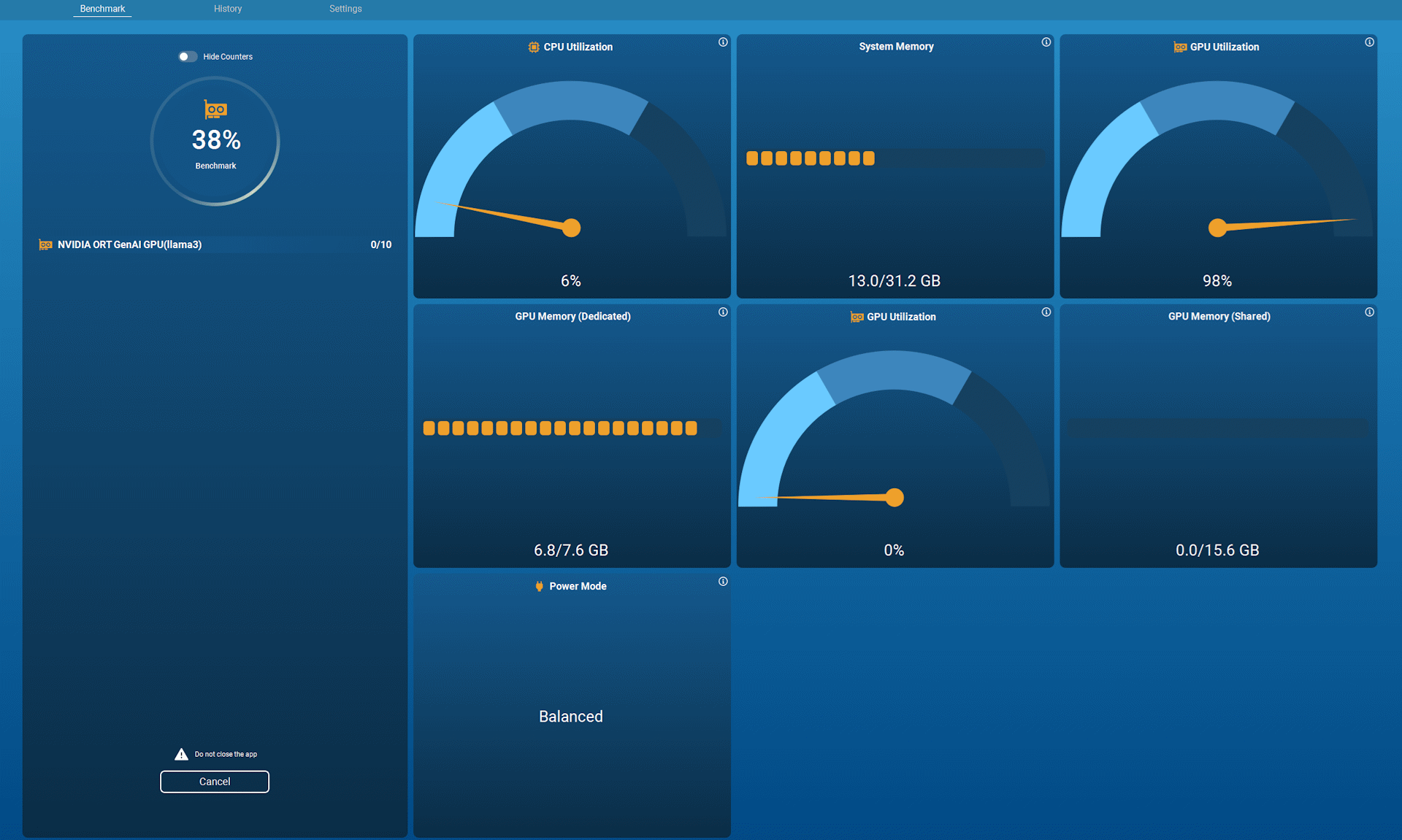

The GUI version also provides real-time monitoring of the various hardware resources on a system so that you can see at a glance whether your chosen execution path is exercising the expected GPU or NPU.

Past versions of MLPerf Client were command-line-only tools, so this new user interface should broaden the appeal of the benchmark both for casual users who just want to kick the AI tires on their GPU or NPU (or both) and for professional hardware testers who need to collect and compare results across a number of different hardware and software configurations.

MLPerf Client 1.0 is available now as a free download for all from GitHub. If you're interested in understanding how your system performs across a broad range of AI workloads, give it a shot. We've already spent some time with version 1.0 and we're excited to continue digging into it as we explore AI performance across a wide range of hardware.

As the Senior Analyst, Graphics at Tom's Hardware, Jeff Kampman covers everything to do with GPUs, gaming performance, and more. From integrated graphics processors to discrete graphics cards to the hyperscale installations powering our AI future, if it's got a GPU in it, Jeff is on it.