Distributor claims Nvidia has stopped taking orders for HGX H20 GPUs

Could Nvidia be shifting production capacity to more expensive units?

Nvidia has allegedly stopped taking orders for its China-specific HGX H20 GPUs used in AI and HPC applications, Cailian News Agency (CNA) reports, citing a distributor source. This may signal that the processor is being discontinued, whether due to slow demand or anticipated U.S. export rules banning its sale to Chinese entities, or that Nvidia is about to roll out another China-specific GPU for AI workloads. As the news comes from two unofficial sources, we should remain skeptical until more official information surfaces.

"We need to go through dealers to place orders with Nvidia," a spokesperson for a terminal manufacturer told Cailian News Agency. "Recently, many dealers have said that they will no longer accept H20 orders, and some have said that they can take orders in stock. […] Some dealers who don't take orders now may be betting on whether the H20 will be discontinued in the near future. If so, they will sell it at a higher price."

The information about the possible discontinuation of Nvidia's HGX H20 does not come from a single source. Wang Yu, a China-based hardware dealer, told CNA that Nvidia had already stopped accepting HGX H20 orders last month, though without any clear notice. Meanwhile, a few distributors say they still have stock and are fulfilling orders, according to the report.

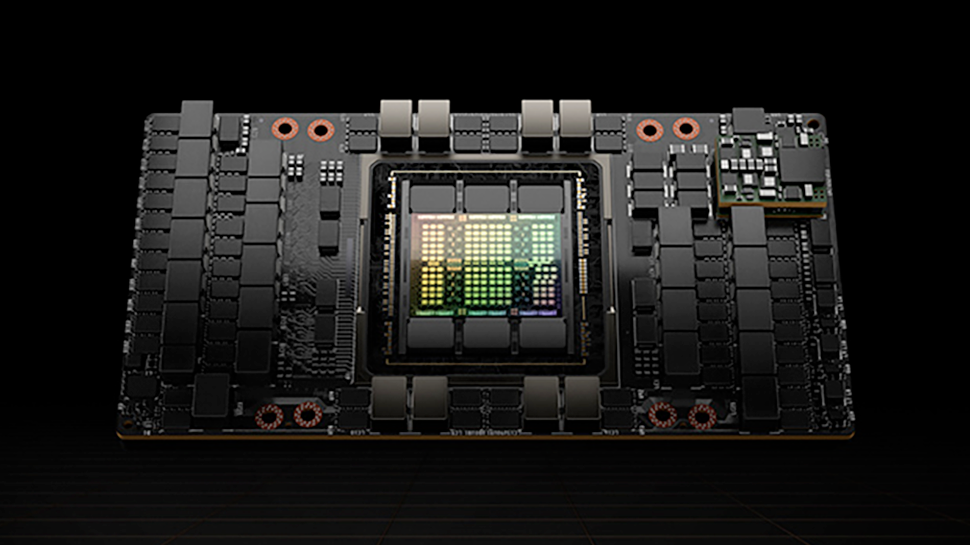

Nvidia's HGX H20 processor was designed specifically for the Chinese market to comply with U.S. export restrictions. While the unit appears to use the same silicon as Nvidia's H100, its performance is drastically reduced compared with the original GPU, a choice made so that Nvidia could ship it to Chinese entities.

The HGX H20 offers just 296 INT8/FP8 TOPS/TFLOPS and 148 BF16/FP16 TFLOPS for AI tasks, whereas the full-fat H100 delivers 3,958 INT8/FP8 TOPS/TFLOPS and 1,979 BF16/FP16 TFLOPS (Nvidia quotes these H100 figures with sparsity). The H20 has 96 GB of HBM3 memory and 4.0 TB/s of memory bandwidth, and it supports 8-way GPU configurations, so it can be used to build AI servers based on the HGX H20 platform.
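For a quick sense of scale, the snippet below divides the H20's quoted peak figures by the H100's. It is a back-of-the-envelope sketch that uses the numbers exactly as printed above and does not normalize for how each peak figure was measured; the dictionary names are illustrative labels only.

```python
# Peak AI throughput as quoted in the article (TOPS for INT8, TFLOPS for FP8/BF16/FP16).
H20 = {"INT8/FP8": 296, "BF16/FP16": 148}
H100 = {"INT8/FP8": 3958, "BF16/FP16": 1979}


def fraction_of_h100(h20: dict, h100: dict) -> dict:
    """Return the H20's quoted throughput as a fraction of the H100's, per precision."""
    return {precision: h20[precision] / h100[precision] for precision in h20}


ratios = fraction_of_h100(H20, H100)
for precision, ratio in ratios.items():
    # Print each ratio as a percentage with one decimal place.
    print(f"{precision}: {ratio:.1%} of H100")
```

With these inputs, both precisions come out at about 7.5%, meaning the H20's quoted peaks are roughly one-thirteenth of the H100's.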

Despite its reduced performance, the HGX H20 still carries a steep price tag of around $10,000 to $13,000. Even so, the H20 was in high demand among Chinese companies because, even with its cut-down specifications, it reportedly outperforms competitors such as Huawei's Ascend processors in real-world applications. ByteDance alone was rumored to be getting some 200,000 HGX H20 processors, according to one report. In total, sales of Nvidia's HGX H20 were expected to reach one million units in 2024 and generate around $12 billion in revenue, assuming the U.S. government does not ban sales of these processors to Chinese companies.
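The reported 2024 projection can be sanity-checked against the quoted price range. The minimal sketch below uses only the figures reported in the article (projections, not actual results); the constant names are illustrative.

```python
# Reported projections and price range from the article (not actual sales data).
PROJECTED_UNITS_2024 = 1_000_000
PROJECTED_REVENUE_USD = 12_000_000_000
PRICE_RANGE_USD = (10_000, 13_000)

# Implied average selling price = projected revenue / projected units.
implied_asp = PROJECTED_REVENUE_USD / PROJECTED_UNITS_2024
low, high = PRICE_RANGE_USD

print(f"Implied average price: ${implied_asp:,.0f}")
print("Within quoted price range:", low <= implied_asp <= high)
```

One million units at about $12 billion implies an average price of about $12,000 per GPU, which sits inside the $10,000 to $13,000 range quoted above.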

If a ban is indeed coming, some distributors may be stockpiling Nvidia's HGX H20 processors to sell them at higher prices once U.S. restrictions on sales to China take effect. However, if the U.S. government had notified Nvidia of plans to restrict sales of the HGX H20, the green company would need to disclose this material information to its investors. Since Nvidia has not done so, it does not appear that the U.S. government has formally restricted sales of this GPU. Meanwhile, Nvidia has not issued a formal statement on the rumored discontinuation.


Nvidia might also shift production away from the HGX H20 to the more powerful and expensive H100 and H200, which it can now sell not only to clients in the U.S. and Europe, but also to select customers in the UAE and Saudi Arabia.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

kerberos_20: It somewhat lines up with this:

https://www.tomshardware.com/pc-components/gpus/nvidia-could-lose-up-to-dollar12-billion-in-revenue-if-us-bans-new-china-oriented-gpu-analysts-believe-the-h20-will-get-the-banhammer-soon

Al Zaidi

FWIR, H20s are simply clock-crippled H100s. Same chips. If so, how does Nvidia not lose money selling them at $13k instead of $200k? With a margin of about 50%, they would cost about $100k just to make.


I am guessing China is smuggling or accessing external H100s.