Silicon Motion is developing a next-gen PCIe 6.0 SSD controller

When it will become available is unknown.

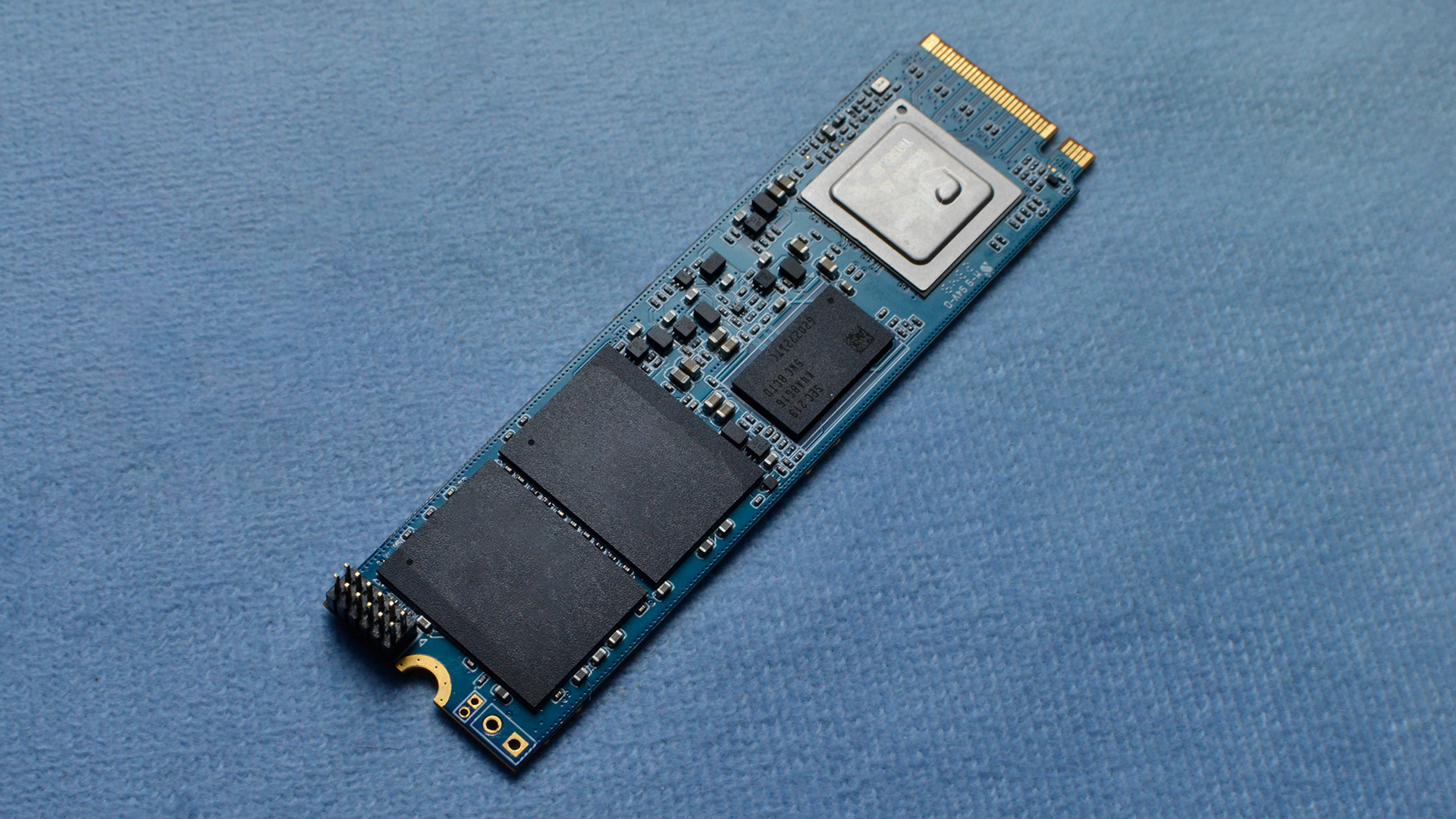

Silicon Motion is already developing its first SSD controller for drives with a PCIe 6.0 interface. The new controller is called SM8466 and belongs to the MonTitan family of SSD controllers for datacenters and enterprise.

Wallace C. Kou, chief executive of Silicon Motion, made the brief announcement of the company's first PCIe 6.0 SSD controller in his column for ChinaFlashMarket.com. The SMI CEO did not reveal many details about the SM8466 PCIe Gen6 SSD controller, though we can make an educated guess that the chip will feature a PCIe 6.0 x4 interface and will aim for up to 30.25 GB/s of bandwidth in each direction, roughly 2X the bandwidth of drives with a PCIe 5.0 x4 interface. Since this is a MonTitan controller, and the naming scheme indeed points to enterprise-oriented silicon, expect it to feature all the enterprise-grade security capabilities.
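The 30.25 GB/s estimate can be reproduced with simple arithmetic. The sketch below assumes 32 GT/s per lane with 128b/130b encoding for PCIe 5.0, and 64 GT/s per lane in FLIT mode for PCIe 6.0, where we assume 242 of every 256 FLIT bytes are usable (the rest being CRC and FEC). That ratio is our assumption, chosen because it reproduces the 30.25 GB/s figure; the helper name `pcie_bandwidth_gbs` is hypothetical.

```python
from fractions import Fraction


def pcie_bandwidth_gbs(gt_per_s: int, lanes: int, efficiency: Fraction) -> Fraction:
    """Per-direction link bandwidth in GB/s.

    gt_per_s: transfer rate per lane, treated as Gb/s of raw line rate per lane
    efficiency: fraction of raw bits left after encoding or FLIT overhead
    """
    raw_gbit_per_s = gt_per_s * lanes        # raw Gb/s across all lanes
    return raw_gbit_per_s * efficiency / 8   # convert bits to bytes


# PCIe 5.0: 32 GT/s per lane, 128b/130b encoding
gen5_x4 = pcie_bandwidth_gbs(32, 4, Fraction(128, 130))

# PCIe 6.0: 64 GT/s per lane, FLIT mode
# assumption: 242 of 256 bytes per FLIT count as usable bandwidth
gen6_x4 = pcie_bandwidth_gbs(64, 4, Fraction(242, 256))

print(f"PCIe 5.0 x4: {float(gen5_x4):.2f} GB/s")
print(f"PCIe 6.0 x4: {float(gen6_x4):.2f} GB/s")
print(f"Ratio: {float(gen6_x4 / gen5_x4):.2f}x")
```

Under these assumptions the script reports about 15.75 GB/s for PCIe 5.0 x4 and 30.25 GB/s for PCIe 6.0 x4, a ratio of roughly 1.92x, so "2X" is a rounded figure.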

One interesting question about Silicon Motion's MonTitan SM8466 controller is which process technology SMI plans to use to manufacture it. The company has never revealed the manufacturing process of its SM8366 controller introduced in 2022, though its client-oriented SM2508 PCIe 5.0 x4 controller, which is entering the market these days and powers some of the best SSDs, uses TSMC's N6 (6nm-class) fabrication process. In any case, the new SM8466 is likely to use a newer production node and therefore pack more processing cores, which should enable better performance, more features, and better compatibility with next-generation 3D TLC and 3D QLC NAND memory.

At this point, we have no idea whether Silicon Motion's first PCIe 6.0 x4 controller is at an early stage of development or further along. Either way, the very mention of the project indicates that work is in progress, so expect SMI-based drives with a PCIe 6.0 x4 interface at some point.

Silicon Motion is somewhat behind its main competitor with its PCIe 5.0 SSD controllers. Phison was ahead of the whole industry with its PS5026-E26, a controller with a PCIe 5.0 x4 interface. While the chip was initially used for client drives, it was designed to power both client and datacenter SSDs. As a result, the E26 competes against SMI's MonTitan SM8366, a more powerful controller positioned to fight for the higher end of the enterprise market.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user This can only be aimed at the server market, given that's the only platform with PCIe 6.0 support on the roadmap. The weird part is that datacenter SSDs actually lagged consumer SSDs in embracing PCIe 5.0, by a couple of years. At present, no server platforms support PCIe 6.0.

thestryker

bit_user said: "The weird part is that datacenter SSDs actually lagged consumer SSDs in embracing PCIe 5.0, by a couple years."

This is just speculation based on what I know about the consumer market, but it could come down to the manufacturing node. No client controllers were manufactured on a 7nm node until last year, and I can't imagine an enterprise-level PCIe 5.0 controller on anything worse.

All of the 30TB+ class drives I've seen have been PCIe 4.0, so while I know these drives don't need PCIe 5.0, perhaps there's something else at play as well.

pixelpusher220 The progress in performance, not just in SSDs but in most parts of the PC ecosystem, seems to be pushing into overkill territory for general use. It will be interesting to see how manufacturers balance the needs of the masses with the bleeding edge.

PCIe 4.0 is *plenty* for all but a small subset of scenarios. And given the likely cooling needs of 6.0 speeds, I almost wonder if the current 'stick it anywhere on the mobo' approach might need to give way to a more standard, cooler-friendly location.

OldAnalogWorld Again, this is only for the server market, the only place where it makes any sense.

In the consumer segment, these gentlemen would do better to make new controllers for the 3.0/4.0 bus on a "3nm" process with a peak consumption of no more than 4W. That is what all laptop buyers are waiting for: 4-8 TB drives that draw no more than 4W. Wherever you look, there is an abundance of SSDs that draw 9-10W at peak; even the top Samsung lines are like that.

Where are the 2, 4, and 8 TB models like the Hynix P31 Gold? I can't find them in retail for the 3.0/4.0 bus, and I'm generally not interested in 5.0, for laptops or for PCs.

bit_user

thestryker said: "All of the 30TB+ class drives I've seen have been PCIe 4.0 so while I know these drives don't need PCIe 5.0 perhaps there's something else at play as well."

Here's a 61.44TB drive with a PCIe 5.0 x4 interface that was already shipping at least 2 months ago:

https://www.storagereview.com/review/the-micron-6550-ion-ssd-gen5-performance-energy-efficiency-and-high-capacity-in-one-drive

I'm pretty sure I've seen announcements by others of that capacity and higher at PCIe 5.0, but I don't have time to go looking right now.

bit_user

OldAnalogWorld said: "what all laptop buyers are waiting for - 4-8 TB drives with a consumption of no more than 4W. Wherever you look, there is an abundance of SSDs with a consumption of 9-10W at peak, even the top Samsung lines are like that.

Where are the 2-4-8 TB models like the Hynix P31 Gold? I can't find them in retail for the 3.0/4.0 bus, and I am generally not interested for 5.0 in laptops, and in PCs too."

When I see PCIe 5.0 NVMe drives tested, it's always using a PCIe 5.0 host. Forcing them to run at PCIe 4.0 (which is the most that many laptop M.2 slots support, anyhow) should reduce their power consumption and heat dissipation. I'd be curious to know how efficient the latest generation of PCIe 5.0-capable controllers are at that speed.

Geef

OldAnalogWorld said: "make new controllers for the 3.0/4.0 bus using the '3nm' process technology with a consumption of no more than 4W at peak"

That would be great for the 'laps' of people using laptops. I know I usually set my laptop on the table when I download a huge game from Steam. It's a 1TB Samsung drive, so it gets hot during huge file transfers. Luckily, not so while playing.

bit_user

Geef said: "That would be great for the 'laps' of people using laptops. I know I usually set my laptop on the table when I download a huge game from Steam. It's a 1TB Samsung drive so it gets hot during huge file transfers. Luckily not so while playing."

I found this statement interesting. If you don't mind my being nosy, I'm curious to know the following:

How fast are your Steam downloads (approx. MB/s or gigabits/s, if you know; otherwise, general download speed)?

Do you know which model SSD that is?

Does your laptop have any airflow or other form of SSD cooling, in the spot where the SSD is located?

I ask because the network speed should (probably) be well below the sustained write speed of the SSD, so this would only make much sense to me if the SSD has essentially no airflow or other form of direct cooling.

OldAnalogWorld

bit_user said: "I ask because the network speed should be well below the sustained write speed of the SSD (probably), so this would only make much sense to me if the SSD has essentially no airflow or other form of direct cooling."

I can answer for him in general terms, without specifics. The (architectural) problem with most laptops is that there is practically no proper airflow over the M.2 slots and, what's more, the internal volume (height) of the chassis physically does not allow effective passive heatsinks to be installed. Together, this leads to catastrophic consequences when high-performance models are installed without thought. And they are most often bought to replace the low-power (and usually DRAM-less) models that laptop manufacturers specifically selected. Naturally, those models perform worse and struggle under heavy simultaneous read/write loads, because they lack a DRAM buffer holding a copy of the translation (mapping) table for fast lookups and updates of the service tables. For laptops, apart from the rare "gaming" models where the M.2 mounting location was at least somewhat thought out, there should be energy-efficient models with a DRAM buffer and well-balanced performance.
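To illustrate why a drive without DRAM can struggle under heavy mixed loads, here is a deliberately simplified model: the controller keeps only a few segments of the mapping (translation) table mentioned above in a small on-chip buffer, and any other segment must be fetched (from NAND or host memory) on a miss. The LRU eviction policy, the segment counts, the uniform random access pattern, and the function name `mapping_cache_hit_rate` are all hypothetical choices for illustration, not a description of any real controller.

```python
import random
from collections import OrderedDict


def mapping_cache_hit_rate(cache_segments: int, footprint_segments: int,
                           accesses: int, seed: int = 0) -> float:
    """Hit rate of a hypothetical LRU cache of mapping-table segments.

    Accesses are drawn uniformly at random from `footprint_segments`
    segments; only `cache_segments` of them fit in the on-chip buffer.
    """
    rng = random.Random(seed)
    cache = OrderedDict()  # keys are segment numbers, ordered oldest -> newest use
    hits = 0
    for _ in range(accesses):
        segment = rng.randrange(footprint_segments)
        if segment in cache:
            hits += 1
            cache.move_to_end(segment)        # mark as most recently used
        else:
            cache[segment] = None             # simulate fetching the segment
            if len(cache) > cache_segments:
                cache.popitem(last=False)     # evict the least recently used segment
    return hits / accesses


# Small working set: fits entirely in the buffer after warm-up
print(mapping_cache_hit_rate(cache_segments=16, footprint_segments=8, accesses=10_000))
# Large working set: most lookups miss and need an extra fetch
print(mapping_cache_hit_rate(cache_segments=16, footprint_segments=4096, accesses=10_000))
```

In this toy model, every miss stands in for an extra internal read before the host's request can be served, which is one way to picture why DRAM-less designs slow down once the active working set outgrows the buffer.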

By the way, with a "3nm" process, nothing prevents integrating 1-2 GB of RAM directly into the controller as part of the circuit. That would only increase overall reliability and free up some space for NAND chips on the 2280 form factor. DRAM-less controllers have a built-in buffer, but a paltry 32-64MB, and they were made on outdated, larger process nodes. With the transition to "2nm", there is all the more reason to move a 2-4GB buffer inside the controller chip. The HMB (Host Memory Buffer) in PC memory will never be a proper replacement, and besides, it is also very small, for various reasons. The closer and faster the memory is to the controller, the more energy-efficient it is, which means critical operations backed by PLP (power-loss protection) circuits complete faster. PLP is also not integrated into consumer solutions, even though that is exactly where the reliability and stability of the power supply is most in question...
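For a sense of scale, a common rule of thumb (our assumption, not a figure stated in this thread) is one 4-byte mapping entry per 4 KiB of flash, which works out to roughly 1 GB of mapping table per 1 TB of NAND. Under that assumption, the sketch below estimates the full table size for a few capacities and how much flash a 32-64 MB buffer could map at once. The constants and function names are hypothetical.

```python
ENTRY_BYTES = 4          # assumption: one 4-byte logical-to-physical entry
PAGE_BYTES = 4 * 1024    # assumption: mapping granularity of 4 KiB

TB = 10**12              # decimal terabyte, as used for drive capacities
MiB = 1024**2
GiB = 1024**3


def mapping_table_bytes(capacity_bytes: int) -> int:
    """Size of a flat mapping table covering the whole drive."""
    return capacity_bytes // PAGE_BYTES * ENTRY_BYTES


def capacity_covered_bytes(buffer_bytes: int) -> int:
    """How much flash a buffer of this size can map at once."""
    return buffer_bytes // ENTRY_BYTES * PAGE_BYTES


for tb in (2, 4, 8):
    size = mapping_table_bytes(tb * TB)
    print(f"{tb} TB drive -> {size / GiB:.2f} GiB mapping table")

for mib in (32, 64):
    covered = capacity_covered_bytes(mib * MiB)
    print(f"{mib} MiB buffer -> maps {covered / GiB:.0f} GiB of flash")
```

With these assumptions, a 2 TB drive needs about 1.82 GiB for a full table, in line with the 1-2 GB figure above, while a 64 MiB buffer maps only about 64 GiB of flash at a time.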

Currently, the laptop market (but not the desktop market) is completely absurd: there are practically no competitors to the Hynix P31 Gold (the P41 Platinum, a 4.0 drive, already runs too hot). At the same time, the P31's controller is already outdated in terms of process technology. Besides, it is extremely scarce and can rarely be bought anywhere (especially outside the USA) with a 5-year warranty, and buying any SSD without such a warranty is complete madness, given that the probability of a particular unit failing is 50/50.

We ordinary laptop buyers simply have no choice, especially when it comes to single-sided models in 2, 4, and 8 TB capacities.

Of course, you can switch models such as the 990 Pro into a power-saving mode, but the big question is whether that mode guarantees the drive will operate without surges in consumption (current) above the limits of the weak M.2 slots in ordinary office and business laptops. I have my doubts.

It would be good if there were more single-sided models at 2TB and above, along with the ability to set a mode in their NVRAM that strictly limits peak consumption to fit within the limits of a specific laptop series.

PCIe 5.0 models with a DRAM buffer are unlikely to reach optimal consumption levels for laptops even on a "2nm" process. And 6.0+ probably won't arrive before the 2030s...

bit_user

OldAnalogWorld said: "By the way, with the '3nm' technical process, nothing prevents integrating 1-2 GB of RAM directly into the controller as part of the circuit."

Can you point to other examples where logic has been mixed with DRAM on the same die? I'm not aware of any. A quick search turned up this thread:

"You can make logic on a DRAM process, but either your logic will be slow or you'll have to increase the cost of your cost/power consumption of your DRAM cells."

https://news.ycombinator.com/item?id=14520163

OldAnalogWorld said: "Which will only increase overall reliability and free up some space for NAND chips on the 2280 form factor."

I wonder how big the DRAM really needs to be, since you could just use PoP (package-on-package) stacking to combine it with the controller. I'll bet some SSDs with DRAM already do this.

OldAnalogWorld said: "Dramless controllers have a built-in buffer, but a paltry 32-64MB."

Is that DRAM or SRAM, though?

OldAnalogWorld said: "The HMB buffer in PC memory will never be a normal replacement,"

I'll bet that's mainly due to how high-latency PCIe tends to be. CXL is much more latency-optimized, though I'm not sure it would make HMB that much better.

OldAnalogWorld said: "The closer and faster the memory is to the controller, the more energy efficient it is, which means that critical operations are completed faster due to PLP circuits, which are also not integrated into consumer solutions, although it is in them that the reliability and stability of the power supply is in great question..."

Some Crucial and Intel SATA drives (higher-end consumer models) had power-loss protection capacitors built in. I own some.

I think you simply can't have a HMB (Host Memory Buffer) drive with power-loss protection, because the host CPU could go kaput and take the host memory buffer with it.

OldAnalogWorld said: "Currently, the market is completely absurd for laptops (but not desktops) - there are practically no competitors to the Hynix P31 Gold (the P41 Platinum is already too hot for the 4.0 bus). At the same time, the P31 is already morally obsolete at the controller level in terms of the process technology. And besides, it is extremely scarce and can rarely be bought anywhere (especially outside the USA) with a 5-year warranty,"

FWIW, the P31 Gold is currently available on Amazon (seller: SKHynix_USA). The 2TB model is going for $150 but has a $25 coupon. I bought one for $94 back during the SSD price crash of 2023.

https://amazon.com/dp/B099RHVB42

I was curious whether you're right about it being unrivaled on power consumption, so I had a look around. I'm not sure these are the best examples, but I found two PCIe 4.0 drives that achieve close to the same average power consumption and trounce it on efficiency (i.e. due to being faster drives, overall). I've summarized the results in a table. Each drive name is a link to the corresponding review, from where I got the data.

| Model | 50 GB Folder Copy Avg Power (W) | 50 GB Folder Copy Max Power (W) | 50 GB Folder Copy Efficiency |
|---|---|---|---|
| SK hynix P31 Gold (2TB) | 2.28 | 3.34 | 446.0 |
| Samsung 990 Evo Plus (2TB) | 2.94 | 4.56 | 524.3 |
| Silicon Power US75 (2TB) | 3.04 | 4.25 | 646.9 |
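The efficiency column can be turned into energy per copy and implied throughput. This sketch assumes the efficiency figures are in MB/s per watt (equivalently, MB per joule) and that 50 GB means 50,000 MB; neither unit is stated in the table above, so treat the outputs as rough estimates. The function name `copy_stats` is hypothetical.

```python
# (average power in W, efficiency) from the table
# assumption: efficiency is in MB/s per W, i.e. MB per joule
drives = {
    "SK hynix P31 Gold (2TB)": (2.28, 446.0),
    "Samsung 990 Evo Plus (2TB)": (2.94, 524.3),
    "Silicon Power US75 (2TB)": (3.04, 646.9),
}

COPY_MB = 50_000  # assumption: 50 GB = 50,000 MB


def copy_stats(avg_power_w: float, efficiency_mb_per_j: float) -> tuple[float, float, float]:
    """Return (implied throughput in MB/s, duration in s, energy in J) for the copy."""
    throughput = efficiency_mb_per_j * avg_power_w   # (MB/J) * (J/s) = MB/s
    duration = COPY_MB / throughput
    energy = COPY_MB / efficiency_mb_per_j           # equals avg_power_w * duration
    return throughput, duration, energy


for name, (power, eff) in drives.items():
    mbps, secs, joules = copy_stats(power, eff)
    print(f"{name}: ~{mbps:.0f} MB/s, ~{secs:.0f} s, ~{joules:.0f} J")
```

Under those assumptions, the two higher-power drives finish the copy sooner and use less total energy than the P31 Gold, which is the sense in which they beat it on efficiency, while the rate of heat output during the copy still tracks average power.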

Although I listed the Max power, total heat output is going to be determined by the average power. On that front, they're not too far off. I think it's conceivable that they could at least equal the P31 Gold, if put in a PCIe 3.0 slot. I wonder if any laptop BIOS lets you force the slot into 3.0 mode.

I omitted the idle power stats, because I have it on good authority that the numbers with ASPM are all just a few mW and not worth worrying about. If you're concerned about laptop battery life, you'd enable ASPM (if the BIOS even gives you a choice - otherwise it'll be on by default).

OldAnalogWorld said: "and buying any SSD without such a warranty is complete madness, given the probability of failure of a particular instance - 50/50."

I wonder where you got this stat, because I've never seen an SSD fail. I think I heard about a couple of failures at work, in desktop machines well over 5 years old, but that's still a 95% or better reliability rate for drives with 5 or more years of use.

OldAnalogWorld said: "Of course, you can switch such models as the 990Pro to energy-saving mode, but the big question is - will such a mode ensure the operation of the drive without guaranteed surges in consumption (current) above the limits for weak M.2 slots in ordinary office laptops and business series? I have my doubts."

The launch review of the 990 Pro tested low-power mode. It doesn't seem to perform much worse, but it also barely saved any power. Perhaps the most notable difference is the idle power (with ASPM disabled). See for yourself:

https://www.tomshardware.com/reviews/samsung-990-pro-ssd-review/2

OldAnalogWorld said: "It would be good if there were more single-sided models from 2TB and the ability to set a mode at the level of their NVRAM that clearly limits peak consumption in order to fit within the limits for a specific series of laptops."

True. They have temperature sensors, although I doubt the throttling thresholds are configurable, and that doesn't necessarily help you save battery.
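NVMe drives already expose something related to a peak-consumption cap: the Power Management feature (Feature Identifier 02h) lets the host select one of the power states the controller advertises, each with a maximum power figure. The sketch below picks the highest-power operational state that fits under a slot budget, treating "highest allowed power" as a proxy for "fastest". The power-state table, the 4 W budget, and the helper names are hypothetical and not taken from any specific drive, and whether a drive actually stays under the limit during bursts is exactly the open question raised earlier in the thread.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PowerState:
    index: int            # power state number (PS0, PS1, ...)
    max_power_w: float    # advertised maximum power for this state
    operational: bool     # False for non-operational (idle-only) states


def fastest_state_under_cap(states: list[PowerState], cap_w: float) -> Optional[PowerState]:
    """Pick the operational state with the highest max power that fits the cap."""
    candidates = [s for s in states if s.operational and s.max_power_w <= cap_w]
    if not candidates:
        return None
    return max(candidates, key=lambda s: s.max_power_w)


# Hypothetical power-state table, loosely shaped like a high-end NVMe drive
table = [
    PowerState(0, 8.5, True),
    PowerState(1, 5.5, True),
    PowerState(2, 3.6, True),
    PowerState(3, 0.05, False),
    PowerState(4, 0.005, False),
]

choice = fastest_state_under_cap(table, cap_w=4.0)  # hypothetical 4 W slot budget
print(f"Use PS{choice.index} ({choice.max_power_w} W)" if choice else "No state fits")
```

Non-operational states are excluded because the drive cannot service I/O in them; they are entered only when idle.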