Quantum Computing May Make Ray Tracing Easier

Up to a 190% performance improvement in ray tracing? Oh my.

An international team of researchers from the UK, the US and Portugal thinks it has found an answer to ray tracing's steep performance requirements: a hybrid of classical ray tracing algorithms and quantum computing. According to the team's research paper (currently a preprint), quantum-assisted ray tracing can deliver up to a 190% performance improvement by cutting the number of intersection calculations required for each ray by nearly two-thirds, significantly lowering the technology's computational demands.

The introduction of ray tracing in graphics technologies has marked a significant evolution in the way we render games. Yet its adoption and performance remain limited relative to how groundbreaking the technology is. Part of the reason is ray tracing's steep hardware and computational requirements, which can bring even the world's most powerful GPUs to their knees. In addition, the need for specialized hardware locks most users out of the technology unless they upgrade to a discrete GPU that can handle such workloads.

The upscaling technologies now offered by every major GPU vendor (Nvidia's DLSS, AMD's FSR 1.0 and FSR 2.0, and Intel's upcoming XeSS) were primarily built to offset the extreme performance penalties of enabling ray tracing. They work by lowering the number of rendered pixels, which reduces the computational cost of a given scene, and then applying an algorithm that reconstructs the image at the targeted output resolution. This approach is not without caveats, despite the image quality improvements that have been continuously built into these software suites since their introduction.
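To put the pixel savings in numbers, here is a minimal sketch that assumes an upscaler can be described by a single per-axis scale factor (output size divided by internal render size). The function name `internal_resolution` and the example factors 1.5 and 2.0 are illustrative choices, not any vendor's API or official presets, and the reconstruction step itself is not modeled. Because pixel count scales with the square of the per-axis factor, a modest reduction in width and height removes a large share of the pixels that have to be shaded.

```python
from typing import Tuple


def internal_resolution(out_w: int, out_h: int, per_axis_scale: float) -> Tuple[int, int, float]:
    """Return (internal width, internal height, fraction of output pixels rendered).

    per_axis_scale is a hypothetical parameter: output size / internal size on each
    axis. Real upscalers (DLSS, FSR, XeSS) define their own quality presets.
    """
    in_w = round(out_w / per_axis_scale)  # internal render width
    in_h = round(out_h / per_axis_scale)  # internal render height
    # Pixels scale with area, so the saving is quadratic in the per-axis factor.
    fraction = (in_w * in_h) / (out_w * out_h)
    return in_w, in_h, fraction


if __name__ == "__main__":
    # Illustrative 4K target with two example per-axis scale factors.
    for scale in (1.5, 2.0):
        w, h, frac = internal_resolution(3840, 2160, scale)
        print(f"{scale}x per axis: render {w}x{h}, {frac:.0%} of the output pixels")
```

With a 4K target, a 1.5x factor renders 2560x1440 (about 44% of the pixels) and a 2.0x factor renders 1920x1080 (25%). Since primary rays are cast per pixel, this roughly quadratic saving is why upscaling pairs so naturally with ray tracing.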

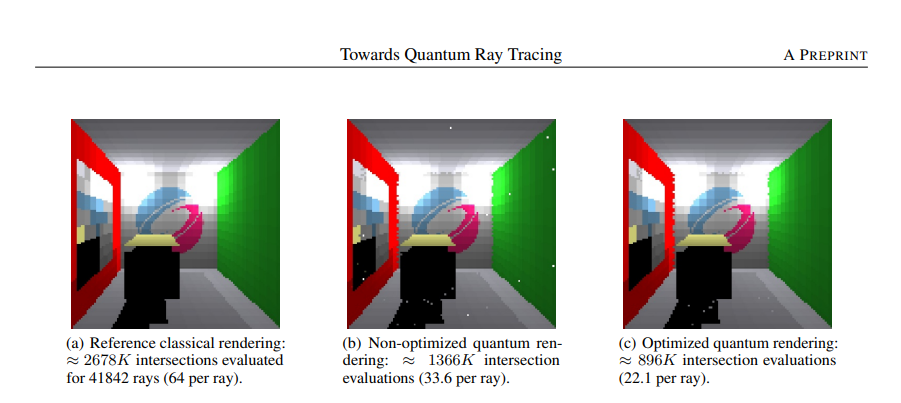

The research paper offers yet another way to significantly reduce the computational expense of ray tracing. The researchers demonstrated their claims by rendering a small, 128x128 ray-traced image using three approaches: classical rendering, non-optimized quantum rendering, and optimized quantum rendering. The results speak for themselves: the classical technique required 2.678 million ray intersection evaluations for that tiny image (64 per ray). The unoptimized quantum technique nearly halved that number, requiring only 33.6 intersection evaluations per ray (1.366 million in total). Finally, the optimized quantum-classical hybrid algorithm rendered the same image with only 896 thousand intersection evaluations, an average of 22.1 per ray, a far cry from the 64 per ray required by current rendering techniques.
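The per-ray figures above can be turned into the headline percentage with a little arithmetic. The sketch below uses only the numbers reported for the 128x128 test render; it assumes, as a simplification, that cost scales linearly with intersection evaluations (real ray tracing cost also depends on shading, memory traffic and more). The "implied rays" value is our own estimate derived from the rounded totals, not a figure from the paper. It also shows why a percentage improvement and a percentage reduction are different quantities: the first can exceed 100%, the second cannot.

```python
from typing import Dict, Tuple

# Figures reported for the 128x128 test render: intersection evaluations
# per ray, and in total (totals as rounded in the article).
PER_RAY: Dict[str, float] = {
    "classical": 64.0,
    "quantum, unoptimized": 33.6,
    "quantum-classical hybrid, optimized": 22.1,
}
TOTAL: Dict[str, int] = {
    "classical": 2_678_000,
    "quantum, unoptimized": 1_366_000,
    "quantum-classical hybrid, optimized": 896_000,
}


def compare(baseline: float, candidate: float) -> Tuple[float, float, float]:
    """Return (speedup factor, % improvement, % reduction) of candidate vs. baseline.

    Assumes cost is proportional to intersection evaluations (a simplification).
    """
    speedup = baseline / candidate                        # e.g. 64 / 22.1 is about 2.90x
    improvement_pct = (speedup - 1.0) * 100.0             # "x% faster": may exceed 100%
    reduction_pct = (1.0 - candidate / baseline) * 100.0  # "x% less work": at most 100%
    return speedup, improvement_pct, reduction_pct


if __name__ == "__main__":
    base = PER_RAY["classical"]
    for name, per_ray in PER_RAY.items():
        speedup, improvement, reduction = compare(base, per_ray)
        implied_rays = TOTAL[name] / per_ray  # derived estimate, not a reported figure
        print(f"{name:36s} {speedup:4.2f}x  +{improvement:5.1f}%  "
              f"-{reduction:4.1f}%  ~{implied_rays:,.0f} rays")
```

For the optimized hybrid, 64 / 22.1 is roughly 2.9x: about a 190% improvement, or equivalently about a 65% reduction in intersection evaluations per ray. The implied ray count stays near 40,000 to 42,000 for all three methods, which is consistent with the same scene being rendered each time.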

Because today's quantum computers still offer relatively low performance (as expressed by the quantum volume metric), the complexity of the rendered scene was severely limited, and each image took hours of compute time to render. This is partly because quantum computing is still in the NISQ (Noisy Intermediate-Scale Quantum) era: today's devices are good enough for quantum simulations, but not for deploying a hybrid quantum renderer.

But considering the pace at which quantum computing has been evolving in recent years (some might say exploding), the researchers' vision of a medium-term hybrid renderer could help bring physically accurate rendering to a much broader audience. IBM, for one, aims to expand quantum volume dramatically in the coming years, accelerating beyond the yearly doubling in quantum volume the field has seen so far.

While the research opens the door to a future hybrid approach combining classical and quantum rendering, the current state of quantum computing means practical applications are likely still years away (the researchers describe the timeframe as medium-term).

It also remains to be seen whether integrating the algorithm would require specialized quantum-capable circuits. If so, that could push both the cost and the timeframe of these developments further into the future.

Yet the push towards cloud gaming, along with improvements in cloud-based quantum computing, might give this new rendering system a faster time to market by shifting hardware costs onto the big gaming companies rather than the end user. Quantum xCloud, anyone?

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.


hotaru.hino:

Mario.chamdjoko said: Is "Quantum Computing" just for gaming, or can we use it for content creation too?

This would fall under the umbrella of graphics rendering, so it can be used for anything that needs graphics.

setx:

"% performance improvement" is not the same % as "% reduction in complexity". You can't reduce something by more than 100%. Is it hard to properly copy into the title what the original paper said?

gondor:

"up to 190% performance improvement" ... "significantly reducing" ...

Up to 190% is hardly significant; it is not even an order of magnitude. Regular (non-quantum) GPUs will offer the same "up to 190%" performance improvement in a year or two at most.

jp7189:

gondor said: "up to 190% performance improvement" ... "significantly reducing" ... Up to 190% is hardly significant; it is not even an order of magnitude. Regular (non-quantum) GPUs will offer the same "up to 190%" performance improvement in a year or two at most.

The improvement will still be possible regardless of the GPU generation. The idea of reducing intersections per ray is going to be key to optimizing performance at every step of the way.