Nvidia says the GeForce RTX 3090 isn't necessarily a gaming GPU. Never mind that it's part of the gaming brand by virtue of the GeForce name; Nvidia claims the RTX 3090 really comes into its own when doing professional content creation workloads. We've busted out half a dozen professional content creation applications and run them on our test PC. We've also run SPECviewperf 13 in both 1080p and 4K mode, which means a bunch more charts. The benefits of the RTX 3090 varied quite a bit, and we did have a few oddities in our testing (DaVinci Resolve performance was lower than expected, and we're still trying to track down the problem). Here are the preliminary results.

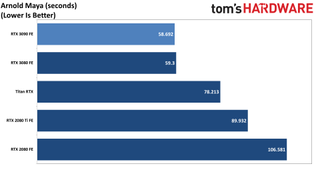

This test comes via Nvidia and uses the Kick.exe utility to render Sol, the dancing robot from Project Sol, which was part of the RTX 20-series launch. Here, though, it's not rendered in real time; instead, the test uses Maya with Arnold to generate a higher-quality path-traced result. The Arnold result is a bit unusual, in that the RTX 3090 was barely faster than the RTX 3080. We're still investigating, as something on our test PC may be incorrectly configured or interfering with performance. Even so, the RTX 3090 was 33% faster than the Titan RTX. Also, by way of comparison, a Core i9-9900K took 902 seconds to render the scene.

Next up is Blender 2.90, and we're using both the Blender Benchmark utility (in OptiX mode, not CUDA) and several variations of an Nvidia-provided scene called Droid Race. The interesting bit with Droid Race is that besides a normal rendering mode, there are two options for handling motion blur. The "GPU Nodes" mode supports Ampere's new GPU-accelerated vector motion blur, which delivers virtually identical results in less time. You can flip through the charts of the Droid Race tests along with the six Blender Benchmark scenes to see how the different GPUs compare. Overall, the RTX 3090 ends up besting the RTX 3080 by 26%. More importantly, it delivers over twice the performance of the Titan RTX.

DaVinci Resolve uses GPU acceleration to handle complex video filters. We have a test project, again provided by Nvidia, but our CPU utilization was very low (15-20% most of the time), and performance was lower than the expected numbers. We're still trying to figure out the root cause, but we ended up with a five-way tie in the 'Wedding Heavy Styles' scene (ignore the decimal point stuff — it was to avoid problems with our chart generation), while the 3090 was 19% faster in the 'Bride Selective Color' scene.

For OTOY's Octane rendering application, we have three different scenes, rendered with and without RTX acceleration. The speedup from RTX ray tracing is typically 2-4x, and Octane is used by many rendering farms for 3D animation. Whether in RTX or non-RTX mode, the RTX 3090 is around 20% faster than the 3080. Go back to the Turing generation, however, and the RTX mode shows much greater benefits for Ampere. The 3090 is 40-50% faster than the Titan RTX in non-RTX mode, but 70-100% faster once RTX is enabled. The gap is even wider against the RTX 2080 Ti, with the 3090 leading by 94% on average.

There are two Adobe Premiere Pro tests here: one using GPU-accelerated H.264 encoding and the second rendering a preview of a clip. The encoding test ends up being pretty much a five-way tie among the GPUs. The 2080 FE comes in first, oddly enough, followed by the 2080 Super and then on down the line; minor differences in GPU clock speed are the likely factor. The other test shows more of a benefit for GPUs with lots of memory. The RTX 3090 completed the 'in to out' preview render 22% faster than the Titan RTX, and it was 44% faster than the RTX 3080.

Next up, we have two rendering tests for V-Ray, both using GPU acceleration. The RTX 3090 again claims the top spot, beating the 3080 by 24% and the Titan RTX by 70%, and more than doubling the performance of the RTX 2080 FE.

Last, we have the industry standard SPECviewperf 13, which we ran in both 1080p and 4K modes. Several of these test applications benefit from some of the features enabled on the Titan RTX (but not on GeForce cards), so there are situations where Titan RTX still ends up faster — and there are other professional tests where the Quadro cards would be even faster still. Where are the Quadro Ampere cards? They haven't been announced yet, but Nvidia is holding its GPU Technology Conference (GTC) from October 5-9, which seems like a good place to announce new Quadro cards.

By the numbers, the RTX 3090 ended up beating the RTX 3080 by anywhere from 4% to 21% in the SPECviewperf 13 tests. The biggest leads were in catia-06, energy-02, and medical-03, while the smallest lead was in sw-03 at 4K. On average, the 3090 was 11% faster than the 3080. The Titan RTX really mixes things up, however.

Compared to the Titan RTX, the RTX 3090 is up to 32% faster (in maya-05, medical-02, and showcase-02). It does a bit better at 4K than 1080p in some other tests … but then there's catia-06 and snx-03. The Titan RTX is nearly twice as fast as the 3090 in catia-06, and about 20 times faster in snx-03. Obviously, if either of those software packages is something you use, the 3090 isn't going to be a great fit. Overall, for SPECviewperf, the 3090 ends up 35% slower than the Titan RTX, but that figure is heavily skewed by the massive gulf in snx-03 performance.
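To see how a single lopsided result can drag down an overall figure, here is a minimal Python sketch. It assumes the overall number is a geometric mean of per-test ratios (the review doesn't say how the average was computed), and the ratios below are hypothetical placeholders chosen only to mimic the pattern described above. They are not our measured SPECviewperf scores.

```python
import math


def geometric_mean(ratios):
    """Geometric mean of positive performance ratios (3090 score / Titan RTX score)."""
    if not ratios or any(r <= 0 for r in ratios):
        raise ValueError("ratios must be a non-empty list of positive numbers")
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


# HYPOTHETICAL ratios for illustration only, NOT measured results.
# Values above 1.0 mean the 3090 is faster; below 1.0 means the Titan RTX is faster.
ratios = {
    "test_a": 1.30,
    "test_b": 1.25,
    "test_c": 1.20,
    "half_speed_test": 0.50,  # Titan RTX about twice as fast
    "outlier_test": 0.05,     # Titan RTX about 20 times as fast
}

with_outlier = geometric_mean(list(ratios.values()))
without_outlier = geometric_mean(
    [value for name, value in ratios.items() if name != "outlier_test"]
)

print(f"Overall ratio with the outlier:    {with_outlier:.2f}")
print(f"Overall ratio without the outlier: {without_outlier:.2f}")
```

With placeholder numbers like these, one extreme result is enough to turn a near-even overall comparison into a large deficit, which is why the per-application charts matter more than the overall average.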

As a potential professional GPU solution, the RTX 3090 FE has a lot of promise. That's especially true for AI research, where the deep learning performance of GA102 at least comes somewhat close to the far more costly Nvidia A100. The large size of the RTX 3090 FE does present some problems, however. While we wouldn't recommend using dual 3090 cards for SLI and gaming, multi-GPU solutions aren't as uncommon with proviz workstations and AI research. The difficulty would be figuring out how to cram more than two RTX 3090 FE cards into most cases. Liquid cooling or other options might be required, and we'll be interested in seeing what Nvidia does with its Ampere Quadro cards. We've already heard of at least one manufacturer who plans on putting up to four RTX 3090 cards in a workstation, which could potentially beat even the A100. Scaling out to hundreds of GPUs is a different story, but for a more modest setup, there's a lot of potential in the RTX 3090.

Is the RTX 3090 Any Good for Cryptocurrency Mining?

One last compute-related test to mention is cryptocurrency mining. Yeah, we know, but it's a possibility. The good news (not for miners) is that even at 105 MH/s, which is what the RTX 3090 manages in Ethash, that would only net about $2.50-$3.00 per day in profit — assuming prices don't crash or spike, naturally. That's already down to about half of what we saw in terms of profitability at the time of the RTX 3080 launch, and with the higher entry price it simply doesn't make much sense to use a 3090 for mining. 500 days or more of 24/7 mining to break even? Yeah, no thanks. Not to mention, it's not at all good for the environment to burn energy just crunching random numbers. Please, just leave the GPUs for the games and imaging professionals. #GrumpyGamer
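The break-even math is easy to check with a quick Python sketch. It assumes the card costs $1,499 (the RTX 3090 Founders Edition launch price, which isn't stated in the paragraph above) and that the daily profit stays flat, which is a big assumption for cryptocurrency. The function name `breakeven_days` is just for illustration.

```python
def breakeven_days(card_price_usd: float, daily_profit_usd: float) -> float:
    """Days of 24/7 mining needed for cumulative profit to equal the card price."""
    if daily_profit_usd <= 0:
        raise ValueError("daily profit must be positive to ever break even")
    return card_price_usd / daily_profit_usd


# ASSUMPTION: $1,499 Founders Edition launch price; daily profit held constant.
CARD_PRICE_USD = 1499.0

for daily_profit in (2.50, 3.00):
    days = breakeven_days(CARD_PRICE_USD, daily_profit)
    print(f"${daily_profit:.2f}/day -> about {days:.0f} days to break even")
```

At the profit range quoted above, that works out to roughly 500 to 600 days before the card pays for itself.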

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

VforV "Titan-class card" - again with this nvidia BS?

It's not, it's a catchphrase from nvidia and the media keeps playing this tune. It's only a more expensive 3080 Ti, which will be proven soon. A halo product for people with more money than sense.

"Fastest current graphics card, period" - Wrong, it's not, it's based on the game and if it's optimized for nvidia (DLSS) or AMD (and no DLSS). In the first case it wins in the latter one it gets beaten by RX 6800 XT and RX 6900 XT, sometimes by a mile... as much as it beats them in the first case with DLSS ON, but then AMD does it by raw power. So it's 50/50, period.

"8K DLSS potential" - yeah, sure "potential". If you count dreaming of 8k gaming potential too, then yes. Again nvidia BS propagated by media still.

"Needs a 4K display, maybe even 8K" - no it does not "need" an 8k display unless you want to be majorly disappointed and play at 30 fps only. That is today, with today games and today's graphics demands, but tomorrow and next gen games you can kiss it goodbye at 8k. Another nvidia BS, again supported by the media.

GJ @ author, nvidia is pleased by your article.

Loadedaxe Titan Class card? No offense, but to Nvidia it is. The rest of the world its a 3080Ti.

HideOut Mmmm 2 months late for a review? Whats happened to this company. Late or missing reviews. Nothing but rasberry Pi stuff, and sister publication anantech is nearly dead.

Blacksad999

HideOut said: Mmmm 2 months late for a review? Whats happened to this company. Late or missing reviews. Nothing but rasberry Pi stuff, and sister publication anantech is nearly dead.

Yeah, the Raspberry Pi stuff is getting annoying. They brought out their 5800x review way after the fact, too. Kind of odd. I understand it's a difficult year to review things, but months later than most review sites isn't a good look when that's supposed to be the sites specialty.