Ubisoft, Nvidia, and Inworld AI partner to produce "Neo NPC" game characters with AI-backed responses

Speaking to NPCs à la the infamously faked "Project Milo" may be coming to future Ubisoft games, for real.

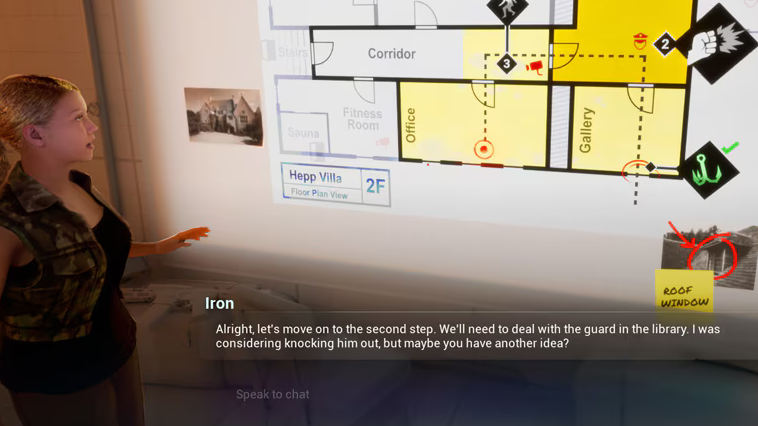

During this year's Game Developers Conference (GDC 2024), Ubisoft unveiled the product of a collaboration with Inworld AI and Nvidia (via its Audio2Face tech), demonstrating "Neo NPCs" capable of speaking directly to human players. This is made possible through the mighty, sometimes terrifying, sometimes quite underwhelming power of generative AI, and it's something we've been expecting to see since Inworld first popped up as an Xbox partner last year.

Interestingly, this actually isn't the first-ever example of interactable AI NPCs. Vaudeville, an indie murder mystery game on Steam, launched in Early Access in June 2023 and made significant waves for its litany of conversation-capable NPCs.

Like Neo NPCs, Vaudeville leveraged Inworld AI for its characters, but its programming and voices were still quite limited. As one of the first examples of modern AI used in game design, Vaudeville showed some potential, but let's be realistic here: it's no L.A. Noire, the similarly themed all-time classic detective game from Rockstar Games.

Compared to Vaudeville, though, these "Neo NPCs" seem considerably more advanced, although the on-floor demos at GDC are limited. Even so, it's to be expected that a company with Ubisoft's resources would be able to use AI technology to a greater extent than a small indie developer.

Say what you like about stagnation in Ubisoft's writing and game design (and we're sure some of you have plenty to say about that), but the last thing you could accuse a modern Ubisoft game of is low production values. To overcome the uncanny valley and come anywhere close to a truly immersive experience with generative AI-driven NPCs, the resources of a AAA studio may simply be necessary, though Guillemette Picard insists otherwise in the original blog post.

Another focus of "Neo NPCs" is that they can still have pre-written backstories and motivations. In fact, those are used to keep each character and their answers grounded in the setting, even when the player says something that runs against those goals. Narrative Director Virginie Mosser said, "It's very different. But for the first time in my life, I can have a conversation with a character I've created. I've dreamed of that since I was a kid."

As cool as this advancement is, it's important to note that Ubisoft's Neo NPCs are currently limited to a few playable show-floor experiences, with nothing players can actually use yet. However, the existence of this tech could mean a not-so-distant future where an Assassin's Creed game has the player fully speaking for the historical character they're embodying.


As genuinely cool as all of that sounds, we don't want to understate the potential negative impact this could have on games writing and voice acting going forward. Compelling voice and writing talent has been responsible for some of the most memorable moments in video games for decades, to the extent that AAA developers have been doing facial capture with real-life prestige actors for quite some time now.

Christopher Harper has been a successful freelance tech writer specializing in PC hardware and gaming since 2015, and ghostwrote for various B2B clients in high school before that. Outside of work, Christopher is best known to friends and rivals as an active competitive player in various eSports (particularly fighting games and arena shooters) and a purveyor of music ranging from Jimi Hendrix to Killer Mike to the Sonic Adventure 2 soundtrack.

-

hotaru251: Wanna bet they list games that use this as "AAAA" even though it won't really add any benefit to the player? (Very few games have any reason to talk to an NPC.)

Sleepy_Hollowed: Unless models and ML processors are forever backward and forward compatible, this is a very expensive tax write-off.

I wouldn't touch this as a dev unless the ML can be run in Python as software emulation on the local machine.

Notton: It's Noobisoft. They're going to overpromise and underdeliver, like they always have.

I am honestly surprised they haven't been under investigation for fraud and stock manipulation, etc.

baboma: https://youtu.be/VTi2l_hjVRs

Dialog for the 1st NPC (Bloom) is pretty on-point; the 2nd one, not so much. My question would be how much storywriting work is required to get to this level of conversation for Bloom, keeping in mind that this is just one NPC out of potentially many.

The text-to-speech can probably be done with an on-device NPU, but the LLM response at this point would probably require a cloud-based connection (read: Internet connection). This would likely entail an upsell to a recurring payment model (read: subscription).

Personally, I would rather just have the responses conveyed in text form and skip the T2S along with the facial expression and lip sync effects. It's less work, and believability/immersion wouldn't be any worse than what's shown in the demo. Even if the demo's response lag and stuttering were fixed, it would still feel like talking to an animated mannequin.

jeffy9987 (replying to hotaru251): No, AAAA is for live-service games. AAAAA is for games POWERED BY AI, or maybe AIAAAA, patent pending.

tamalero (replying to baboma): Not to mention, as far as game function goes, generating multiple conversation branches would be incredibly tiresome if you're just looking to complete a quest and get to the point.

These dynamic conversations would be somewhat fun for completely random, nonessential NPCs.