Google ATAP's Ivan Poupyrev Talks Projects Soli And Jacquard

We managed to catch up to the very busy head of Google ATAP's Projects Soli and Jacquard, Ivan Poupyrev. In our video interview, we talk about how the projects came about and what's next for them.

Google's annual I/O developer conference is its main forum to announce what the future holds for its products and services. This year the important announcements were split between two days. The first day's keynote went over everything that was new with Google's core platforms, such as Android. However, conspicuously missing from this presentation was anything about some of the more exciting and revolutionary projects that Google is working on. It wasn't until day two's morning ATAP (Advanced Technology and Projects) session that we learned about what, quite frankly, are some of the most interesting things being worked on in the great Googleplex.

The ATAP division was formerly Motorola's advanced projects department and is the only part of that company Google held onto when it divested the rest of it to Lenovo. Headed by Regina Dugan (formerly head of DARPA), ATAP is where all of Google's wildest ideas are born -- as she said at I/O, ATAP is "a small band of pirates trying to do epic sh*t." Two of the most epic projects shown were Project Soli and Project Jacquard.

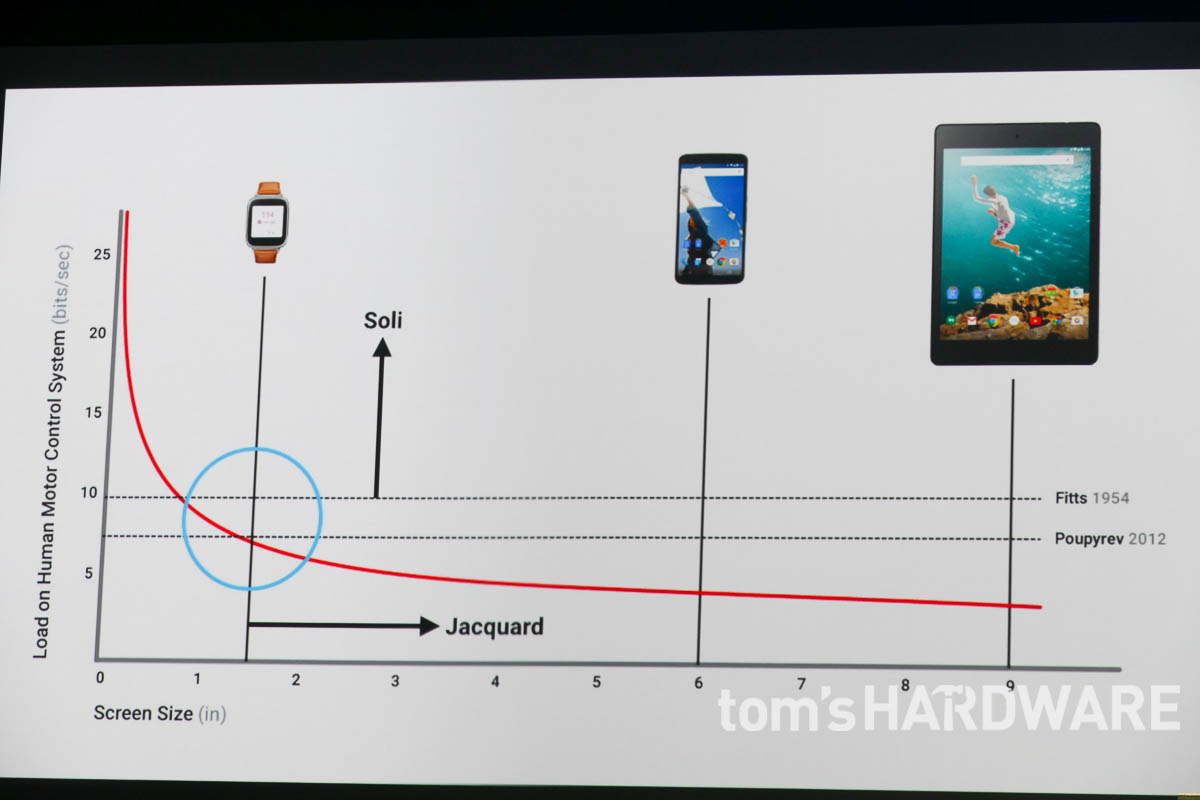

To introduce these projects, Google used the slide above to show how, as the screen size of a device gets smaller, the load on one's motor control system increases dramatically once you go below 2 inches. What this demonstrates is that interacting effectively with small-screen devices like smartwatches using traditional touchscreen interfaces is challenging and often frustrating. Project Soli is a new, potentially revolutionary, method of interacting with wearable tech that uses radar.

Project Jacquard is also a new method of gesture interaction with your devices. In contrast to Soli, instead of being focused on controlling small-screened devices, Jacquard is about using a much larger area -- in this case, fabric -- to control virtually anything. The demos Google had set up at I/O let you control both a phone and a group of lightbulbs simply by touching some cloth.

In our video below, we talked to Ivan Poupyrev, ATAP's head of both these projects. In the interview, he talks about how Soli and Jacquard came to be and what comes next for them.

Project Soli

According to Poupyrev, the genesis of Project Soli was that Google was looking for new ways to interact with wearable technology without adding physical controls such as buttons (looking at you, Apple Watch). Gesture-based interaction seemed like the best choice, but existing gesture tech isn't designed to understand "tiny" precise movements.

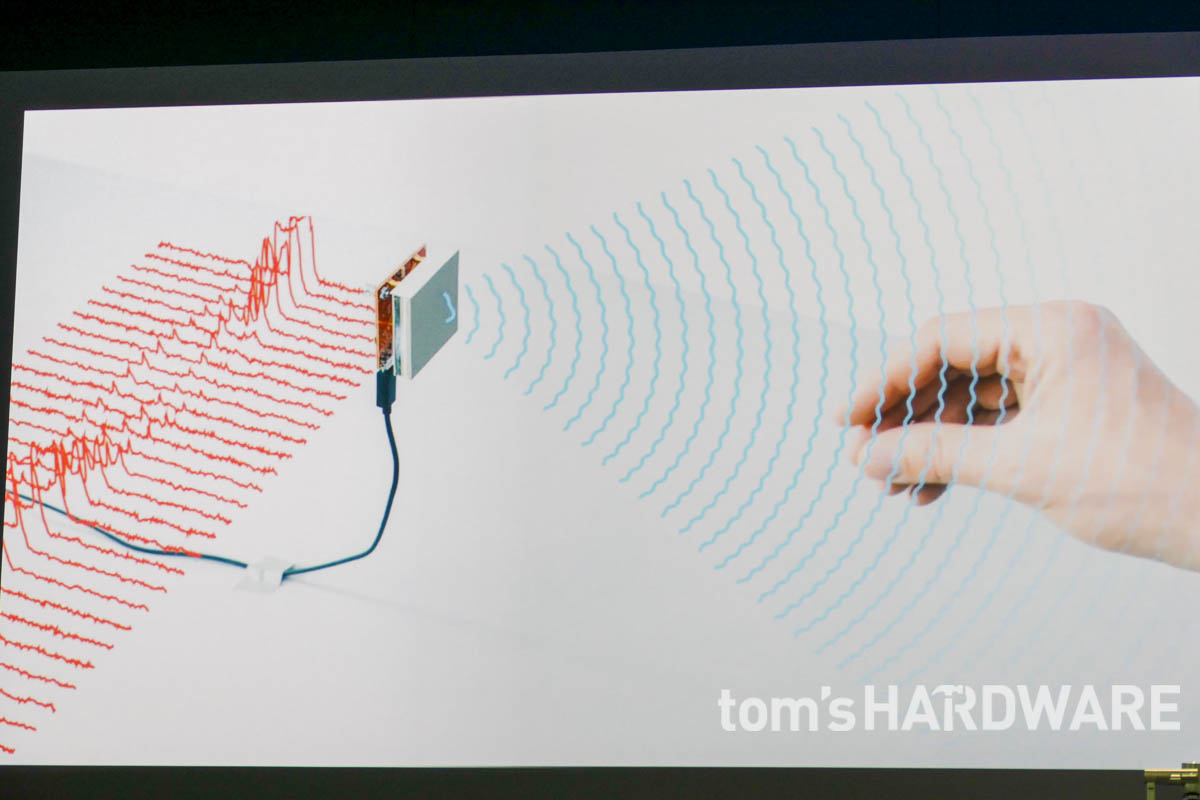

One of the big challenges was to be able to find a "sensor for gestures interaction that was small enough to fit into a wearable [device] but powerful enough to track the position of your fingers and hand." It also has to track through occlusions, be able to measure distance, and be able to work in all lighting conditions. Poupyrev's team discovered that radar would be perfect -- other than the fact that the hardware required was much too big to fit into a wearable.

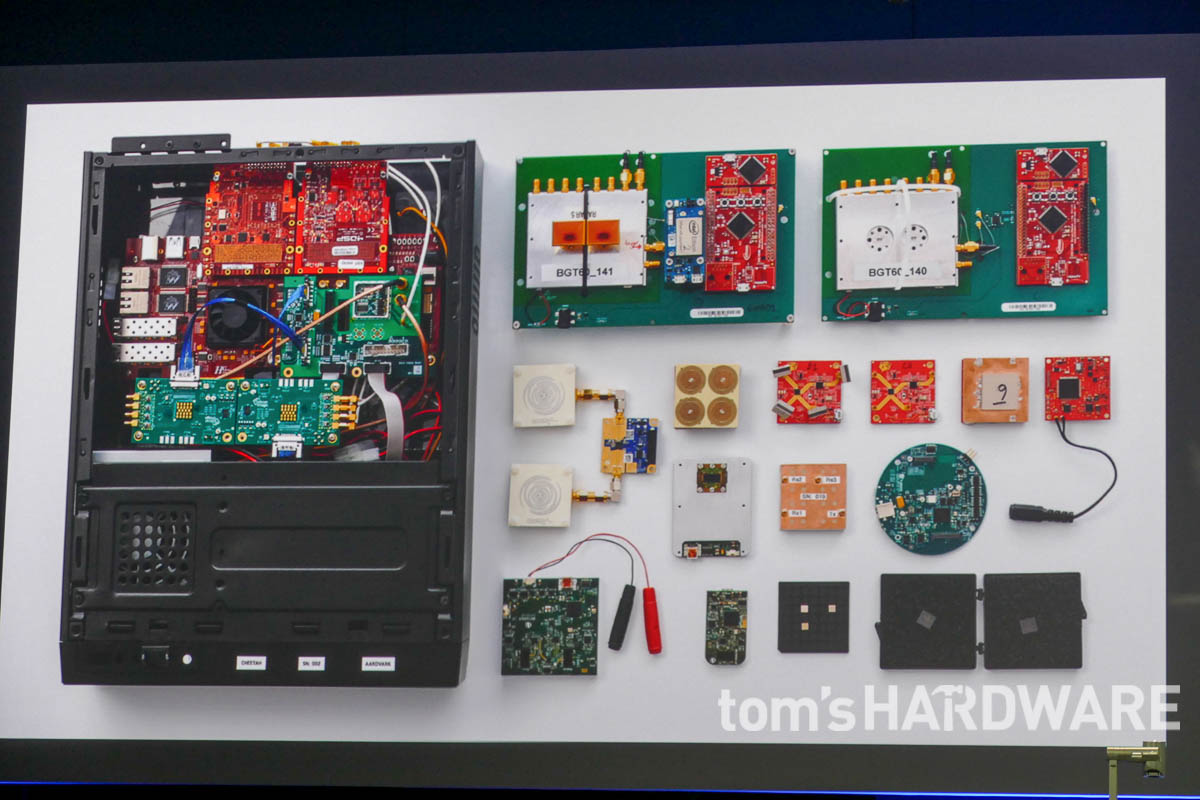

Impressively, in the span of ten months, the team was able to design and build a sensor that fulfilled all these requirements. The image above shows the progression over that period: it starts with a huge box on the left and ends with the finished Project Soli chip in the bottom right, which you can also see below. When asked how they achieved this goal in such a short space of time, Poupyrev said that it was a combination of "hard work, talent, focus." Also, the compressed schedule that all ATAP projects operate under pushes teams to perform at their best.

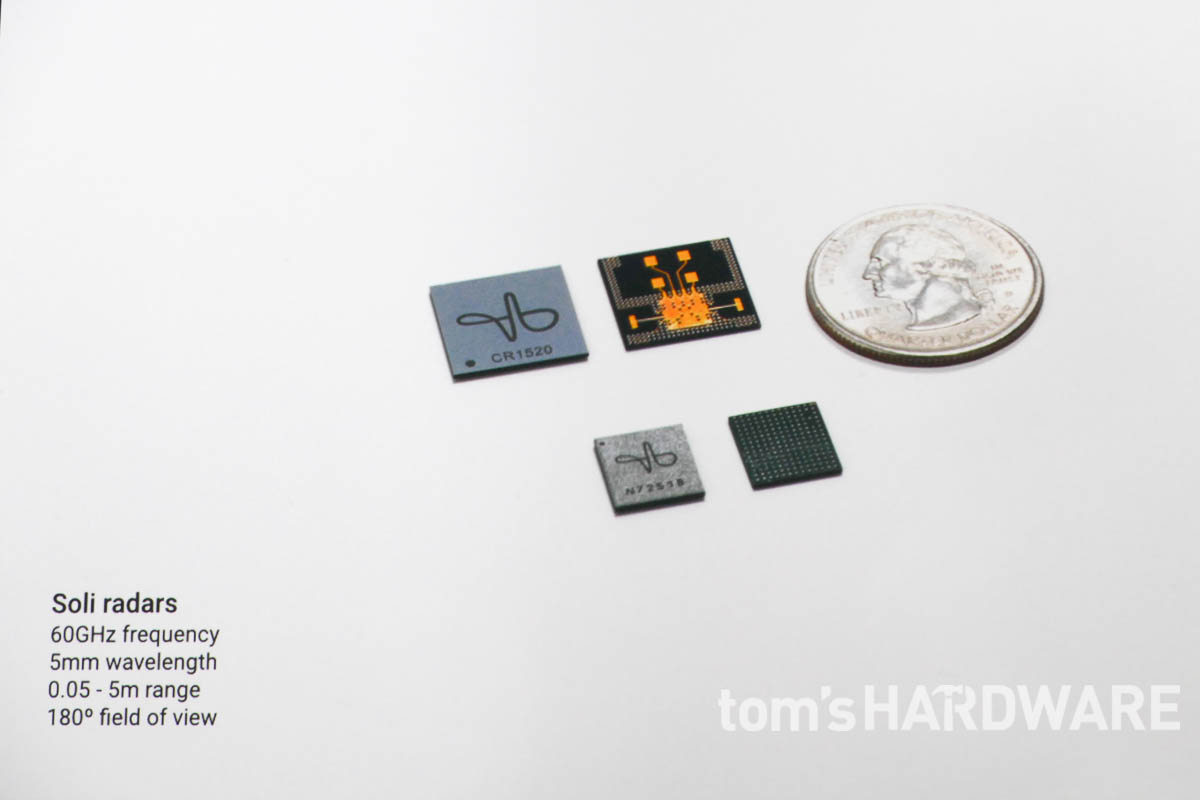

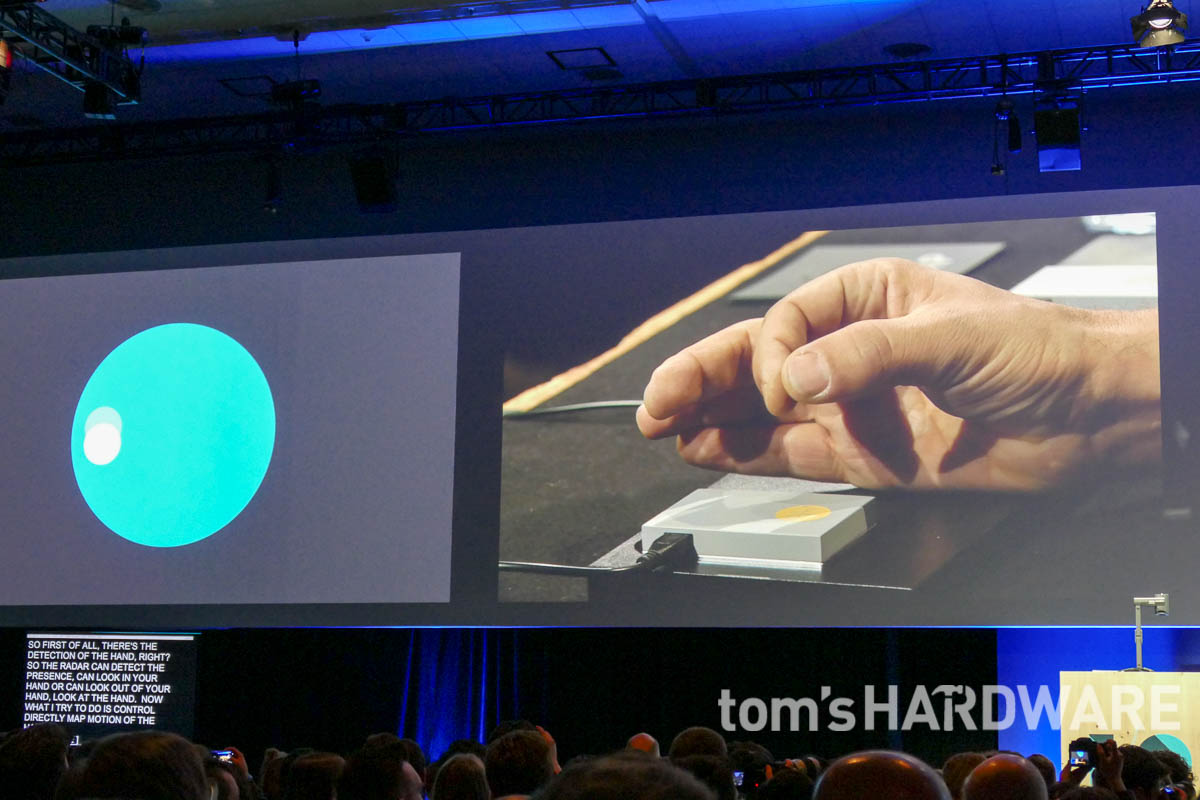

As you can see, the final Project Soli chip shown at I/O is small enough to fit in a wearable device. Since the project is still in development, there is a chance it could get even smaller. On the I/O show floor, there were some demo stations that showed a graphical representation of what your hand and finger motion looks like to the sensor, which you can see in our video.

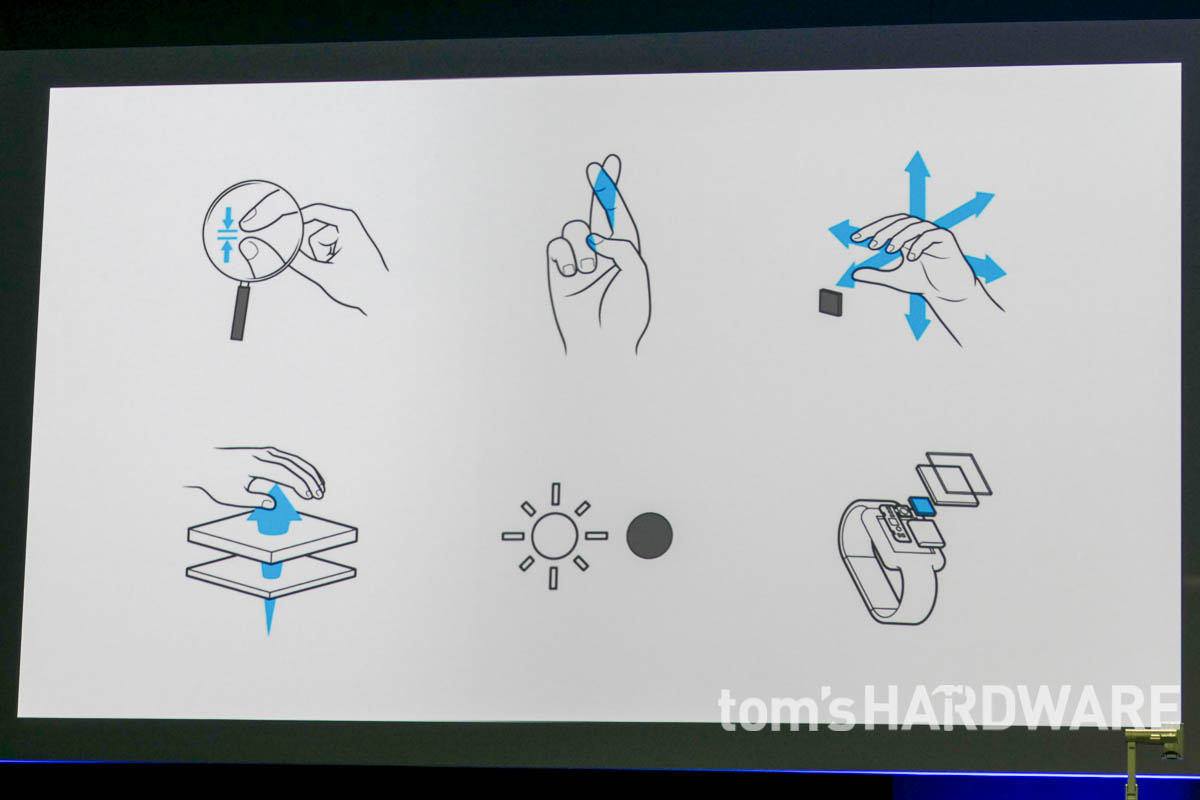

During the ATAP presentation, Poupyrev showed off some more precise gestures and gave some examples of how they could be used for real-world interactions. Above you can see him rubbing his thumb and index finger together to simulate turning a dial (we guess a dig at Apple's Digital Crown). In another demonstration, he changed the time on a simulated watch face, with the distance between his hand and the sensor determining whether the hours or the minutes were adjusted. It certainly was a very "epic" demo.
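To make the watch-face interaction concrete, here is a minimal sketch of how a distance reading could select which field a dial gesture adjusts. It is purely illustrative and not Google's implementation: the `NEAR_THRESHOLD_CM` value, the choice that a near hand adjusts minutes while a far hand adjusts hours, and the names `WatchTime` and `apply_dial_turn` are all assumptions made for this example.

```python
from dataclasses import dataclass

# Hypothetical cutoff between "near" and "far"; the demo did not state one.
NEAR_THRESHOLD_CM = 10.0


@dataclass
class WatchTime:
    hours: int    # 0-23
    minutes: int  # 0-59


def apply_dial_turn(time: WatchTime, hand_distance_cm: float, steps: int) -> WatchTime:
    """Apply a dial gesture of `steps` clicks to the watch time.

    Assumption for this sketch: a hand closer than the threshold adjusts
    minutes, a hand farther away adjusts hours. Values wrap around.
    """
    if hand_distance_cm < NEAR_THRESHOLD_CM:
        return WatchTime(time.hours, (time.minutes + steps) % 60)
    return WatchTime((time.hours + steps) % 24, time.minutes)
```

With these assumed values, a three-click turn made 5 cm from the sensor would move 10:58 to 10:01, while the same turn made 20 cm away would move it to 13:58.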

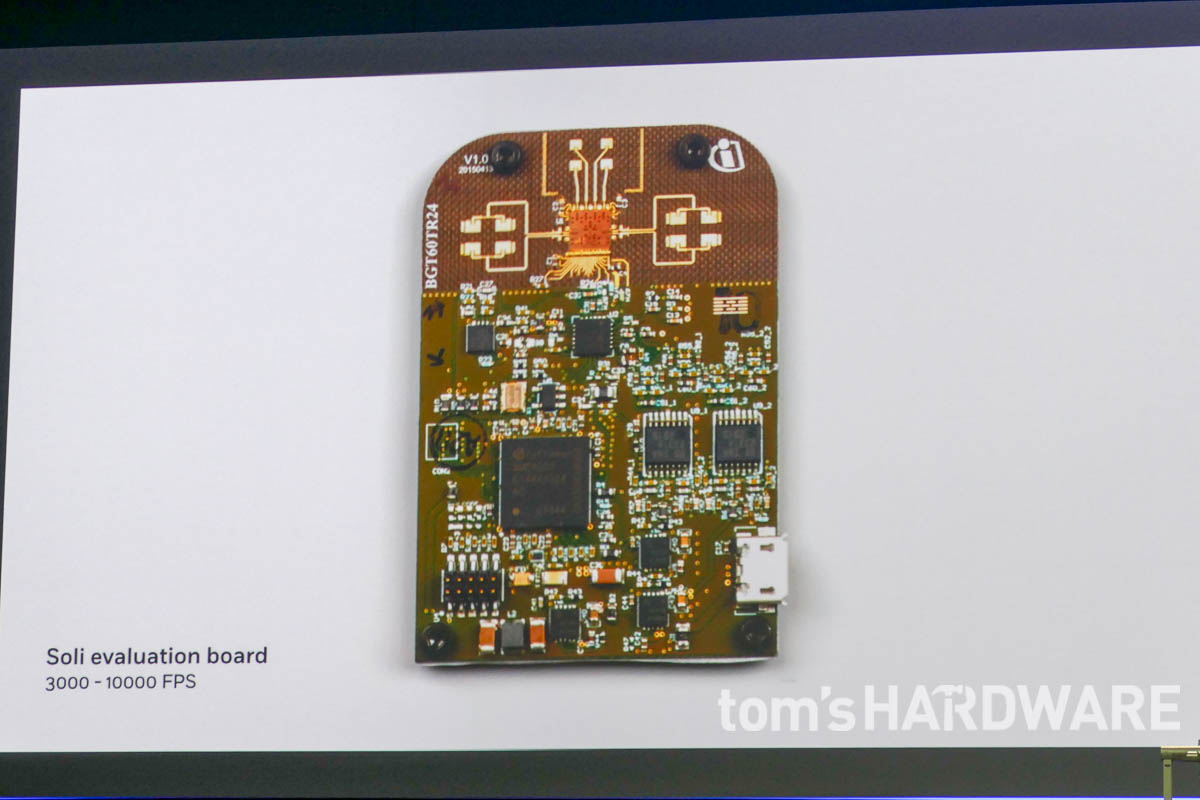

To close out the part of our interview on Soli, Poupyrev said that the developer board above will be available to a small group of devs either late this year or early next year. This means that we are still a ways away from seeing this sensor technology in a shipping device. Still, it is a very impressive technology, and it certainly could be used for more than just controlling a smartwatch.

One of the best uses we could think of was gesture control for both Augmented Reality glasses and Virtual Reality HMDs. While all the gesture examples shown at I/O were of close-range interactions, the sensor has a range of 5cm to 5m, so it should easily be able to precisely track hands in AR and VR. If you were to mount a number of them in different positions on the headset, you could have 360-degree gesture tracking coverage.

For more information about Project Soli, you can watch Google ATAP's video on YouTube.

Project Jacquard

If you are even somewhat familiar with the scope of different wearable technology, you are probably aware that there is already smart clothing. Thus, at first glance, Google's Project Jacquard doesn't seem all that revolutionary, unlike Soli. At a high level, Jacquard is simply integrating capacitive touch into fabric so that you can control your phone, computer or even household appliances such as lights from your sleeve. However, Poupyrev said that while others have done this, Google wants to "move beyond novelty."

Project Jacquard is all about figuring out how to manufacture tech-infused clothing at the same scale as traditional garments. To achieve this, Poupyrev's team had to come up with new materials and ways of integrating them into the processes of a huge, long-established global industry, one that sells 12 billion garments a year, substantially more than any tech product sells.

When we spoke to Poupyrev, we initially expressed surprise that Google was getting into the smart clothing space. He told us that it wasn't surprising that "Google would move into the fashion side of things." One of the running themes and challenges of all wearable technology has been how to make it "attractive and beautiful."

Clothes are already wearables that are "functional, beautiful and fashionable." With smart garments, you aren't trying to make technology fashionable, but taking an already "fashionable object" and making it "smart and intelligent."

During the presentation, Poupyrev talked about how much time they spent learning about the textile industry. In our interview, he told us that the big challenge was creating an "interactive textile that can be woven on standard [textile] looms."

This involved designing new conductive yarn that could not only be produced in the wide variety of colors required by the fashion industry, but that could also stand up to the industrial processes of textile manufacturing. Then after the fact, the same yarn has to be washable, ironable and able to withstand the everyday wear-and-tear clothes go through.

Along with the yarn itself, Google had to come up with new textile production techniques so that only parts of the textile contain the conductive thread. This way, a garment can have touch-sensitive panels of fabric in select areas, rather than over its whole area. You can see a small panel on the demonstration fabric below. The ATAP team is also designing the connectivity of the fabric to electronics and smart garment software.

At the show, we got to try out some Project Jacquard infused fabric, and it worked exactly as you'd expect. In the demo above, we could pause and play the music on the phone by tapping the panel, and swipe left/right or up/down to change track or control the volume. At another demo station, Google had some Philips Hue lightbulbs hooked up, and you could turn them on/off or change their color with a swipe.
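The music demo boils down to a mapping from a handful of touch gestures to player actions. The following is a minimal sketch of that idea, not Jacquard's actual software: the gesture names, the `Player` class, the 0-10 volume scale, and the assumption that left/right swipes change track while up/down swipes change volume are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Player:
    playing: bool = False
    track: int = 0
    volume: int = 5  # hypothetical 0-10 scale


def handle_gesture(player: Player, gesture: str) -> Player:
    """Update the player for one gesture from the fabric panel.

    Gesture names are made up for this sketch; unknown gestures are ignored.
    """
    if gesture == "tap":
        player.playing = not player.playing           # toggle play/pause
    elif gesture == "swipe_right":
        player.track += 1                             # next track
    elif gesture == "swipe_left":
        player.track = max(0, player.track - 1)       # previous track
    elif gesture == "swipe_up":
        player.volume = min(10, player.volume + 1)    # volume up
    elif gesture == "swipe_down":
        player.volume = max(0, player.volume - 1)     # volume down
    return player
```

The same dispatch pattern would apply to the lightbulb demo, with the actions swapped for on/off and color changes.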

Along with the announcement of the Project itself, the other significant revelation at I/O was who Google's first official partner would be: Levi's, the iconic jeans brand. This is quite significant and points to the level of confidence that both Google and a huge established brand like Levi's have in this technology (you can read Levi's blog post about this here). Moreover, remember, Levi's isn't just jeans -- it makes a wide range of clothing types, and also owns the well-known business-casual brand Dockers. Unfortunately, nothing other than the partnership was announced, so don't expect to see any smart clothes from Levi's in the immediate future.

The last part of the Jacquard I/O presentation was Poupyrev showing off one of the first garments made using the project's tech. This was the cream suit jacket he was wearing (that can be seen at the top of this article). To make it, Google went to Savile Row in London, England, home to some of the world's best tailors. You can also see that he is wearing the very same jacket in our interview video, but we inexplicably forgot to ask him about it on camera!

When we asked Poupyrev where they were with the Project, he said they were halfway through, and the Levi's partnership was the next step. With the first challenge, figuring out how to mass-produce the smart fabric, behind them, they now need more expertise in how specific garments are made and who they are designed for.

Poupyrev closed with a prediction that "if the stars align, if it turns out that our idea is actually great, and we solve our technical challenges and problems, then in 20 years everyone will be wearing interactive clothes."

For more information about Project Jacquard, you can watch Google ATAP's video on YouTube.

What Comes Next For ATAP?

Both Project Soli and Jacquard, along with Project Ara, demonstrate that there is some impressive and innovative technology being developed in Google's skunkworks. What else could they be working on behind closed doors that we don't know about yet? We'll certainly be keeping a close eye on all the developments coming from this department and keeping you up to date on any news.

We cannot wait until we can control the modular phone in our pocket with the touch panel on our jacket sleeve. Then we can put on our augmented/virtual reality glasses that we can control with tiny gestures made by our fingers and watch 360-degree videos shot with Google's Jump camera.