Intel's AI PC chips aren't selling well — instead, old Raptor Lake chips boom

Intel's AI PC revolution will have to wait.

Times are already tough for Intel, but now it turns out its new, heavily promoted AI PC chips aren't selling as well as expected, while demand for its older chips has surged, creating a shortage of production capacity for them. The news comes as the company's CEO announced looming layoffs and a poor financial report sent the company's stock tumbling.

Intel says its customers are buying less expensive previous-generation Raptor Lake chips instead of the new, and significantly more expensive, AI PC models like the Lunar Lake and Meteor Lake chips for laptops.

During the earnings call, Intel announced that it currently faces a shortage of production capacity for its 'Intel 7' process node, and the company expects this shortage to "persist for the foreseeable future." That's a surprising shortage to have, as Intel's current-gen chips use newer process nodes from TSMC rather than Intel's older 'Intel 7' node. Intel is usually a master at production capacity planning, so its disclosure points to an unforeseen surge in sales of its older 'Intel 7' products.

Intel explained that the shortage of its 'Intel 7' production capacity stems from an unexpected surge in demand for its "N-1 and N-2" products, a reference to its two prior-generation chip families. This trend is occurring in both the consumer and data center markets.

"What we're really seeing is much greater demand from our customers for n-1 and n-2 products so that they can continue to deliver system price points that consumers are really demanding," explained Intel's Michelle Johnston Holthaus. "As we've all talked about, the macroeconomic concerns and tariffs have everybody kind of hedging their bets and what they need to have from an inventory perspective. And Raptor Lake is a great part. Meteor Lake and Lunar Lake are great as well, but come with a much higher cost structure, not only for us, but at the system ASP price points for our OEMs as well."

Bernstein Research's Stacy Rasgon pressed Holthaus on what this means for the company's upcoming Panther Lake chips, which are set to launch at the end of the year, especially given that the anticipated tariff disruptions have not yet materialized.

Holthaus said the Panther Lake launch remains on track and the company expects continued success in the commercial market, which she said typically precedes broader consumer adoption. Notably, she did not directly address how quickly the company expects consumers to adopt its next-gen AI PC laptop chips. Meanwhile, the company continues its extensive work to cultivate a developer ecosystem that can put its AI hardware to use.

However, AI still doesn't seem to have the 'killer app' that would send waves of customers to stores to buy an expensive new laptop. Instead, most of the new capabilities are built into existing applications, such as chat and productivity software, and are too subtle and not flashy enough to spark a wave of adoption.

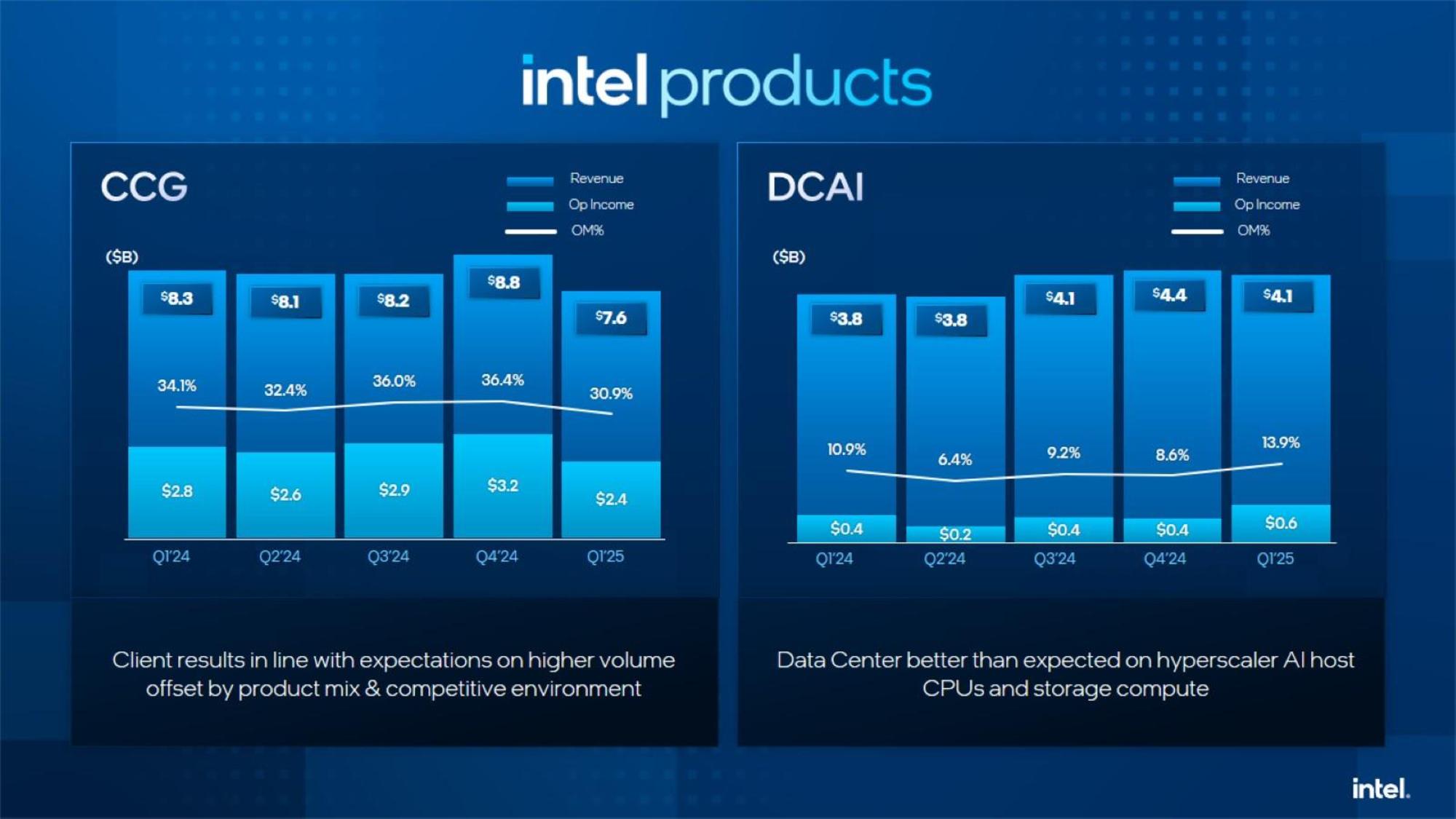

As you can see above, Intel's Q1 financial results for its Client Computing Group (CCG), which addresses the consumer chip market, are less than stellar, as revenue slumped 8% compared to the same period last year.

It is also noteworthy that Intel's 13th- and 14th-Gen Raptor Lake chips have been plagued by persistent instability issues that require full chip replacements for affected processors. Those chips are fabbed on the 'Intel 7' process node, so larger-than-expected numbers of returns could be contributing to the unexpected production capacity constraints.

Naturally, Intel's take on the state of the AI PC revolves around its own products, which have obviously seen lackluster uptake, so we're especially interested to hear AMD's take on the matter when it reveals its results in ten days. We'll also have in-depth reporting on CPU market statistics shortly thereafter to track the impact of potential share losses for Intel. Stay tuned.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

Gururu: I don't even know what AI means anymore. So does it just mean that the hardware runs ChatGPT faster?

JRStern:

> Gururu said: I don't even know what AI means anymore. So does it just mean that the hardware runs ChatGPT faster?

Doesn't even do that.

They'd like it to, but they either know it doesn't or ... ok they know, but it's a secret!

AI means whatever marketing wants it to mean, it mostly means "you need to send Intel money now!"

Someday this will all work out, but Intel's going to need to develop actual expertise in software (and marketing) first, or it will happen but they'll be gone.

JRStern: So somebody tell me, what kind of price difference is this?

More than $100?

Don't you get more raw performance with the newer chips, besides this (unused) NPU?

Mr Majestyk: NPU = Near Pointless Unit.

Nice smack up the side of the head to Microsoft, too. We don't want your Copilot trash.

sygreenblum:

> Mr Majestyk said: NPU = Near Pointless Unit.
>
> Nice smack up the side of the head to Microsoft, too. We don't want your Copilot trash.

You're just saying that because most people won't use it or don't need it, and those that do will certainly be using a dedicated GPU with 50 times more performance instead.

But you see, most people and professionals aren't Intel's market for this chip. Honestly, I'm not actually sure what market they're targeting, but it seems like there should be one, considering they designed a chip for something, right?

usertests:

> Mr Majestyk said: NPU = Near Pointless Unit.
>
> Nice smack up the side of the head to Microsoft, too. We don't want your Copilot trash.

I'd be fine with an NPU if it were supported. If you can't easily run LLMs, Stable Diffusion, etc. on it in Windows or Linux, then there's not much point. If it's slower than the iGPU for these tasks, there's even less of a point. "Copilot" is another matter entirely, and it might not even use the NPU for most of its features. For example, the MS Paint image generation feature uses a central server and wants you to pay, rather than using an NPU at all.

JRStern:

> usertests said: I'd be fine with an NPU, if it was supported. If you can't easily run LLMs, Stable Diffusion, etc. on it on Windows or Linux, then there's not much point. If it's slower than the iGPU for these tasks, there's even less of a point. "Copilot" is another matter entirely but it might not even use the NPU for most of its stuff. Like that MS Paint image generation that uses a central server and wants you to pay, rather than using an NPU at all.

As I understand it, the NPU was copied from a similar unit found in many cell phones, where it's used for picture editing.

Somewhere in the future is "neuromorphic" computing, which with any luck will be about 6 orders of magnitude more efficient than current LLMs, and something like an NPU will be useful at the edge.

But this ain't that.

hotaru251:

> JRStern said: So somebody tell me, what kind of price difference is this?

The top-of-the-line Core Ultra CPU they have at the moment is the Intel® Core™ Ultra 9 Processor 285K, which is around $600 for the desktop version.

The 14900K is $450-500.

The 13900K is $400(ish).

Laptops, of course, have premium prices, so you're likely looking at a $300 or so difference.

usertests:

> JRStern said: As I understand it NPU was copied from a similar unit on many cell phones that's used for picture editing.
>
> Somewhere in the future is "neuromorphic" computing which with any luck will be about 6 orders of magnitude more efficient than current LLMs and something like an NPU will be useful at the edge.
>
> But this ain't that.

Sure, they've been around for at least 8 years in ARM SoCs (such as the Apple A11 in 2017). And Intel included a tiny Gaussian and Neural Accelerator (GNA) in Cannon Lake in 2018. Today's NPUs accelerate ML-focused low-precision operations and aren't "neuromorphic" in the sense of mimicking a brain.

The main point of contention right now is that a decent amount of die area is going to the NPU. Lisa Su even joked about the NPU size with a Microsoft exec. iGPUs could be made larger instead, while performing some of the same operations, which is what Sony chose for the PS5 Pro. The NPUs ought to have superior TOPS/Watt (has anyone on the planet benchmarked this?), which is theoretically useful in laptops. If it gets used.

It's plausible that they will get bigger or hold a steady proportion of the die. For example, the area used by XDNA could stay the same on a new node while performance increases from 50 to 100 TOPS.