Intel shares Microsoft's new AI PC definition, launches AI PC Acceleration Programs and Core Ultra Meteor Lake NUC developer kits at AI conference

Meteor Lake dev kits use Asus NUCs optimized for AI development work.

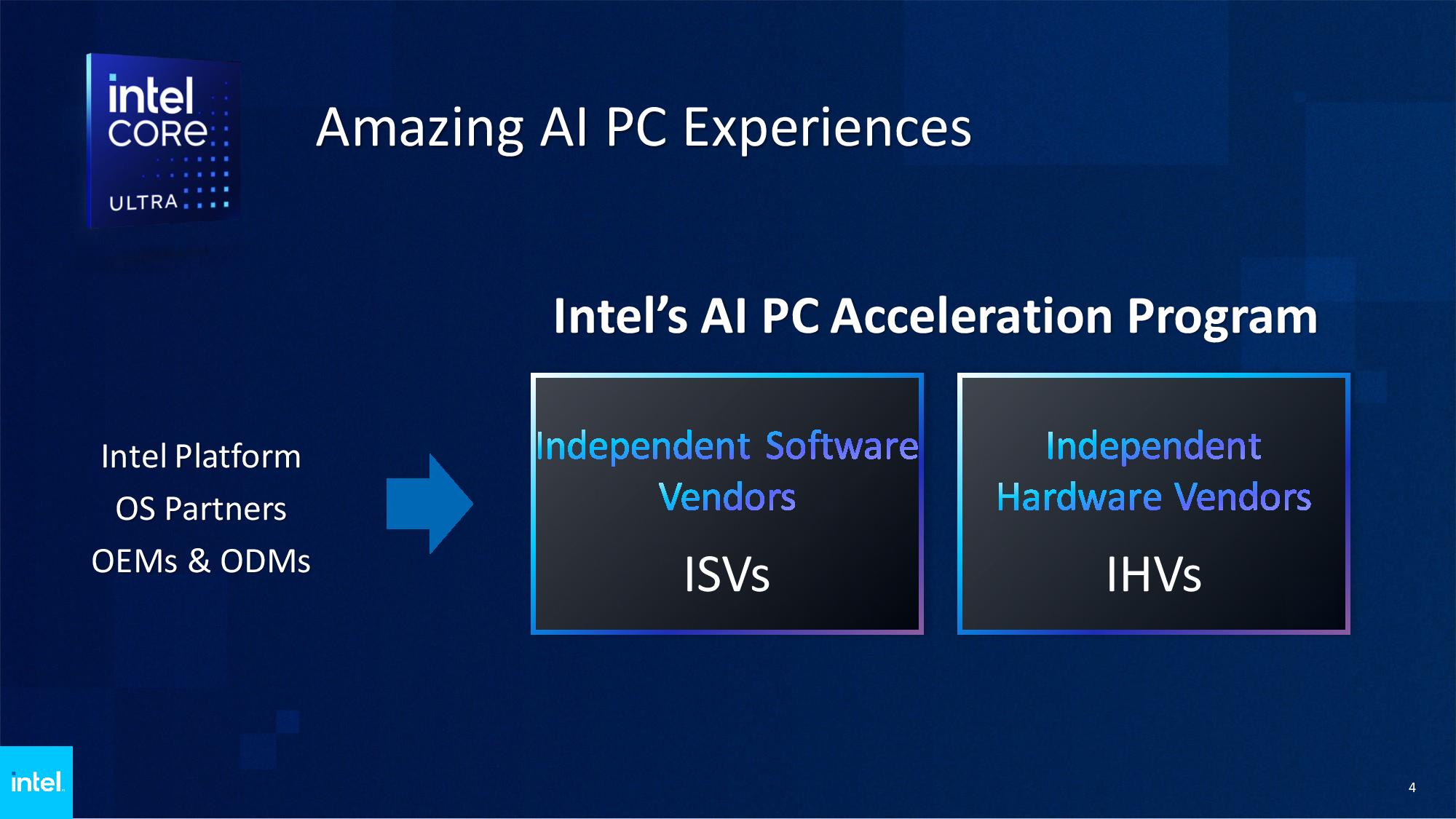

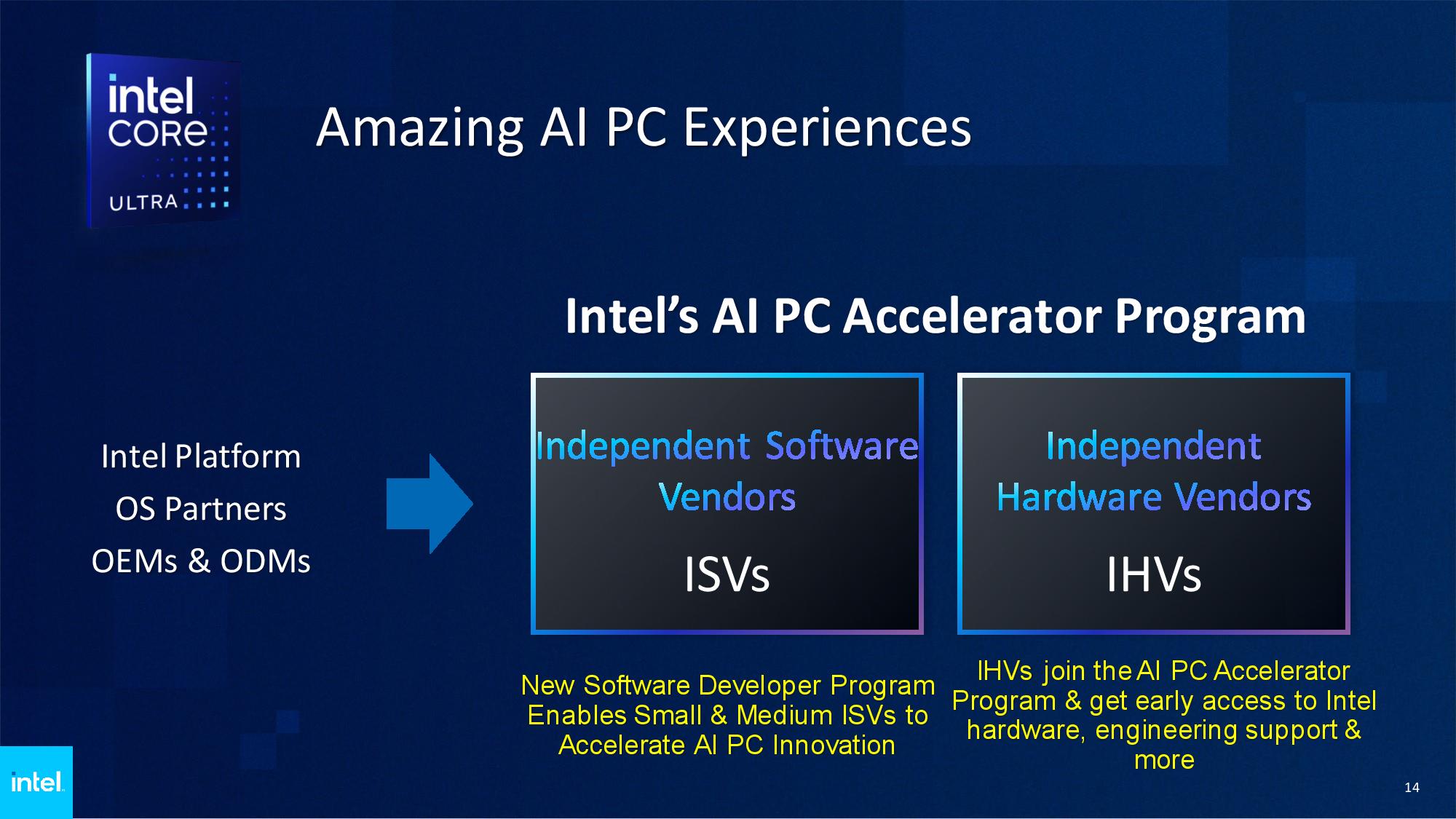

Intel announced two new extensions to its AI PC Acceleration Program in Taipei, Taiwan: a new PC Developer Program designed to attract smaller Independent Software Vendors (ISVs) and even individual developers, and an Independent Hardware Vendor (IHV) program that assists partners developing AI-centric hardware. Intel is also launching a new Core Ultra Meteor Lake NUC development kit and introducing Microsoft’s new definition of what constitutes an AI PC. We were given a glimpse of how AI PCs will deliver better battery life, higher performance, and new features.

Intel launched its AI PC Acceleration Program in October of last year, but it's kicking off the new programs here at a developer event in Taipei that includes hands-on lab time with the new dev kits. The programs aim to arm developers with the tools needed to build new AI applications and hardware, which we’ll cover in more depth below.

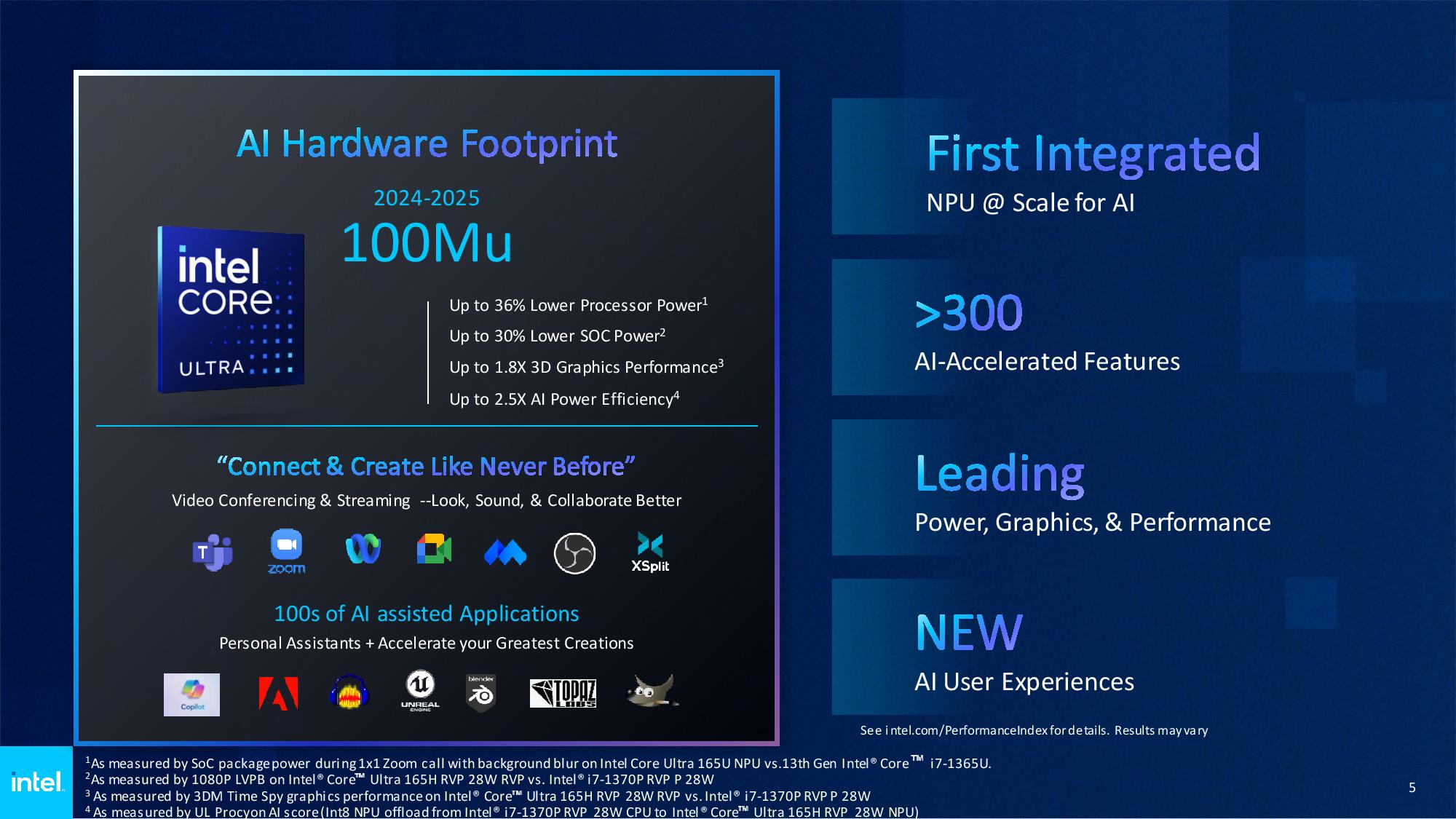

Intel plans to ship over 100 million PCs with AI accelerators by the end of 2025. The company is already engaging with more than 100 AI ISVs for PC platforms and plans to have over 300 AI-accelerated applications on the market by the end of 2024. To further those efforts, Intel is planning a series of local developer events in key locations around the globe, like its recent summit in India, with up to ten more events this year as it works to build out the developer ecosystem.

The battle for control of the AI PC market will intensify over the coming years. Canalys predicts that 19% of PCs shipped in 2024 will be AI-capable, rising to 60% by 2027, a tremendous growth rate that isn’t lost on the big players in the industry. In fact, AMD recently held its own AI PC Innovation Summit in Beijing, China, to expand its ecosystem. The fight for share in the expanding AI PC market begins with silicon that enables the features, but it ends with the developers who turn those capabilities into tangible software and hardware benefits for end users. Here’s how Intel is tackling the challenges.

What is an AI PC?

The advent of AI presents tremendous opportunities to introduce new hardware and software features to the tried-and-true PC platform, but the definition of an AI PC has been a bit elusive. Numerous companies, including Intel, AMD, Apple, and soon Qualcomm with its X Elite chips, have developed silicon with purpose-built AI accelerators residing on-chip alongside the standard CPU and GPU cores. However, each has its own take on what constitutes an AI PC.

Microsoft’s and Intel’s new co-developed definition states that an AI PC must have a Neural Processing Unit (NPU), CPU, and GPU that support Microsoft’s Copilot, along with a physical Copilot key on the keyboard that replaces the second Windows key on the right side. Copilot is an AI chatbot powered by an LLM that is being rolled into newer versions of Windows 11. It currently relies on cloud-based services, but Microsoft reportedly plans to enable local processing to boost performance and responsiveness. Under this definition, existing Meteor Lake and Ryzen laptops that shipped without a Copilot key don't meet Microsoft's official criteria, though we expect the new definition to spur nearly universal adoption of the key.

While Intel and Microsoft are now promoting this jointly developed definition, Intel itself has a simpler one: an AI PC requires a CPU, GPU, and NPU, each with its own AI-specific acceleration capabilities. Intel envisions shuffling AI workloads between these three units based on the type of compute needed. The NPU provides exceptional power efficiency for lower-intensity AI workloads like photo, audio, and video processing while delivering faster response times than cloud-based services, thus boosting battery life and performance while keeping data on the local machine for privacy. Offloading these tasks also frees the CPU and GPU for other work. The GPU and CPU will step in for heavier AI tasks, which is necessary because running multiple AI models concurrently could overwhelm the comparatively limited NPU. If needed, the NPU and GPU can even run an LLM in tandem.
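Intel didn't describe how workloads are actually assigned, so the following is only a toy sketch of the kind of routing policy described above, assuming each AI task can be scored with a single made-up "load" number. The `Workload` type, the `schedule` function, the load values, and the thresholds are all hypothetical. The idea it illustrates: light tasks land on the NPU until it runs out of headroom, after which they spill to the CPU, while heavy tasks go to the GPU.

```python
from dataclasses import dataclass
from enum import Enum


class Unit(Enum):
    NPU = "NPU"
    GPU = "GPU"
    CPU = "CPU"


@dataclass
class Workload:
    name: str
    load: int  # hypothetical compute demand, as a percentage of NPU capacity


def schedule(
    jobs: list[Workload],
    npu_capacity: int = 100,    # hypothetical NPU budget
    light_threshold: int = 30,  # at or below this, a job counts as "light"
    heavy_threshold: int = 60,  # at or above this, a job counts as "heavy"
) -> dict[str, Unit]:
    """Assign each job to a compute unit with a simple greedy policy (illustrative only)."""
    npu_used = 0
    plan: dict[str, Unit] = {}
    for job in jobs:
        if job.load <= light_threshold and npu_used + job.load <= npu_capacity:
            # Light job and the NPU still has headroom: favor power efficiency.
            plan[job.name] = Unit.NPU
            npu_used += job.load
        elif job.load >= heavy_threshold:
            # Heavy job: hand it to the GPU.
            plan[job.name] = Unit.GPU
        else:
            # Moderate job, or a light job the busy NPU can't absorb: use the CPU.
            plan[job.name] = Unit.CPU
    return plan


if __name__ == "__main__":
    jobs = [
        Workload("background_blur", 20),
        Workload("noise_suppression", 20),
        Workload("transcription", 30),
        Workload("eye_gaze_correction", 20),
        Workload("auto_framing", 20),
        Workload("image_generation", 90),
        Workload("code_assistant", 50),
    ]
    for name, unit in schedule(jobs).items():
        print(f"{name:>20} -> {unit.value}")
```

With these made-up numbers, the first four light tasks fill the NPU's budget, so `auto_framing` spills to the CPU, `image_generation` goes to the GPU, and the moderate `code_assistant` lands on the CPU. A real scheduler would also weigh latency, memory, and power state, which this sketch ignores.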

AI models also have a voracious appetite for memory capacity and speed: capacity enables larger, more accurate models, while speed delivers more performance. AI models come in all shapes and sizes, and Intel says that memory capacity will become a key challenge when running LLMs, with 16GB required for some workloads and perhaps even 32GB necessary depending on the types of models used.
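A rough way to see why capacity matters is to estimate the memory taken by a model's weights alone: parameter count times bits per weight, divided by eight to get bytes. The sketch below assumes illustrative model sizes (3, 7, and 13 billion parameters) and a hypothetical 6GB reserved for the OS and other apps; neither figure comes from Intel, and real usage is higher because it also includes activations and the LLM's key-value cache.

```python
def weights_gb(params_billion: float, bits_per_weight: int) -> float:
    """Memory for model weights only, in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights * (bits / 8) bytes each / 1e9 bytes per GB
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_weight / 8


def fits(ram_gb: float, params_billion: float, bits_per_weight: int,
         reserved_gb: float = 6.0) -> bool:
    """Check whether the weights fit in the RAM left after a hypothetical OS/app reserve."""
    return weights_gb(params_billion, bits_per_weight) <= ram_gb - reserved_gb


if __name__ == "__main__":
    for params in (3, 7, 13):
        for bits in (16, 8, 4):
            size = weights_gb(params, bits)
            print(f"{params:>2}B @ {bits:>2}-bit: {size:5.1f} GB | "
                  f"fits 16GB: {fits(16, params, bits)} | fits 32GB: {fits(32, params, bits)}")
```

Under these assumptions, a 7-billion-parameter model stored at 16 bits needs about 14GB for its weights, which doesn't fit in a 16GB machine after the reserve but does fit in 32GB; quantizing it to 4 bits cuts that to about 3.5GB.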

Naturally, that could add quite a bit of cost, particularly in laptops, but Microsoft hasn't yet defined a minimum memory requirement, and it will continue to work through different configuration options with OEMs. The goalposts will differ for consumer-class hardware as opposed to workstations and enterprise gear, but we should expect to see more DRAM on entry-level AI PCs than the standard fare; we may finally bid adieu to 8GB laptops.

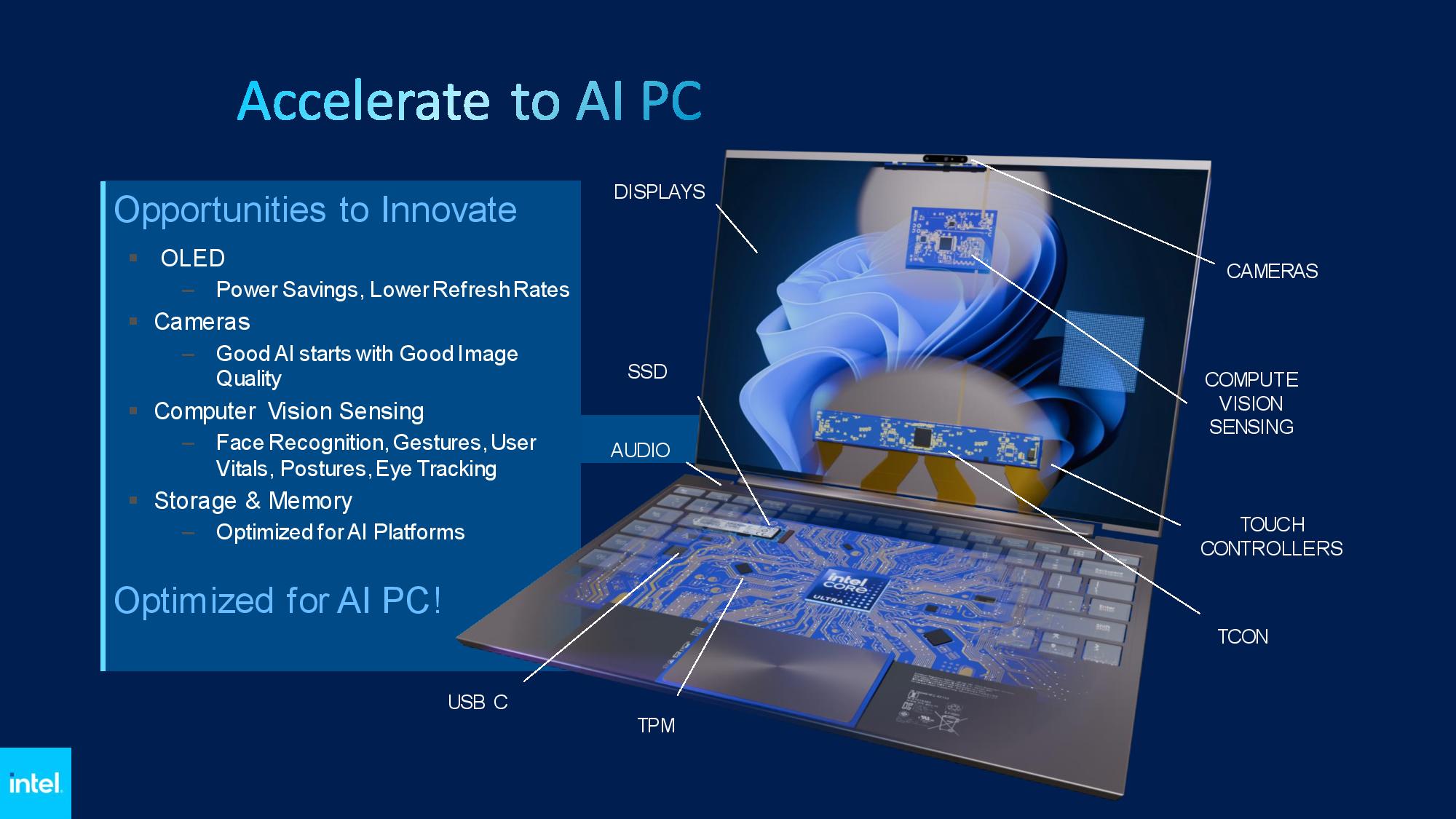

Intel says that AI will enable a host of new features, but many of the new use cases are undefined because we remain in the early days of AI adoption. Chatbots and personal assistants trained locally on users' data are a logical starting point, and Nvidia's Chat with RTX and AMD's chatbot alternative are already out there, but AI models running on the NPU can also make better use of the existing hardware and sensors present on the PC.

For instance, coupling gaze detection with power-saving features in OLED panels can lower the refresh rate when acceptable or switch the screen off when the user leaves the PC, thus saving battery life. Video conferencing also benefits from techniques like background segmentation, and moving that workload from the CPU to the NPU can save up to 2.5W. That doesn’t sound like much, but Intel says it can result in an extra hour of battery life in some cases.
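Whether a 2.5W saving adds up to an hour depends on the battery's capacity and the laptop's baseline power draw, since runtime is simply stored energy divided by average power. The sketch below uses a hypothetical 50Wh battery and made-up average draws; Intel didn't say which configurations produce the extra hour.

```python
def runtime_hours(battery_wh: float, avg_power_w: float) -> float:
    """Battery runtime in hours: stored energy (Wh) divided by average draw (W)."""
    return battery_wh / avg_power_w


def extra_hours(battery_wh: float, avg_power_w: float, saved_w: float) -> float:
    """Additional runtime gained by cutting saved_w watts from the average draw."""
    return runtime_hours(battery_wh, avg_power_w - saved_w) - runtime_hours(battery_wh, avg_power_w)


if __name__ == "__main__":
    battery = 50.0  # hypothetical battery capacity in Wh
    for draw in (8.0, 12.5, 20.0):  # hypothetical average system draw during a video call, in W
        gain = extra_hours(battery, draw, 2.5)
        print(f"{draw:>4} W draw: {runtime_hours(battery, draw):.2f} h -> +{gain:.2f} h with 2.5 W saved")
```

With these numbers, the same 2.5W saving buys exactly one extra hour at a 12.5W draw (4 to 5 hours), noticeably more at lower draws, and well under an hour at 20W, which is consistent with Intel limiting its claim to "some cases."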

Other uses include eye gaze correction, auto-framing, background blurring, background noise reduction, audio transcription, and meeting notes, some of which are being built to run on the NPU with direct support from companies like Zoom, Webex, and Google Meet, among others. Companies are already working on coding assistants that learn from your own code base, and others are developing Retrieval-Augmented Generation (RAG) LLMs that use the user’s own data as a database, retrieving relevant information at query time to provide more specific and accurate answers.
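RAG doesn't retrain the model; it looks up relevant snippets from the user's data at query time and feeds them to the LLM alongside the question. The toy sketch below shows only that retrieval-and-prompt step, using bag-of-words cosine similarity as a stand-in for the learned embeddings and vector indexes real systems typically use. The documents, function names, and prompt wording are hypothetical, and the actual LLM call is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend the retrieved snippets to the question; this string would go to a local LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Meeting notes: the Q3 budget review moved to Friday.",
        "Recipe: banana bread needs three ripe bananas.",
        "Email from Dana: the budget spreadsheet is in the shared drive.",
    ]
    print(build_prompt("Where is the budget spreadsheet?", docs))
```

Here the prompt would carry the Dana email and the meeting note but not the recipe, so the model answers from the user's own files rather than from whatever it memorized in training.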

Other workloads include image generation along with audio and video editing, such as the features being worked into the Adobe Creative Cloud software suite. Security is also a big focus, with AI-powered anti-phishing software already in the works, for instance. Intel’s own engineers have also developed a sign-language-to-text application that uses video detection to translate sign language, showing that many as-yet-unimagined applications could deliver incredible benefits to users.

The Core Ultra Meteor Lake Dev Kit

The Intel dev kit consists of an ASUS NUC Pro 14 with a Core Ultra Meteor Lake processor, but Intel hasn’t shared the detailed specs yet. We do know that the systems will come in varying form factors. Every system will also come with a pre-loaded software stack, programming tools, compilers, and the drivers needed to get up and running.

Installed tools include CMake, Python, and OpenVINO, among others. Intel also supports ONNX, DirectML, and WebNN, with more coming. Intel’s OpenVINO model zoo currently has over 280 open-source and optimized pre-trained models. Intel also offers 173 models for ONNX and 150 models on Hugging Face, and the most popular models have over 300,000 downloads per month.

Expanding the Ecosystem

Intel is already working with its top 100 ISVs, like Zoom, Adobe and Autodesk, to integrate AI acceleration into their applications. Now it wants to broaden its developer base to smaller software and hardware developers — even those who work independently.

To that end, Intel will provide developers with its new dev kit at the conferences it has scheduled around the globe, with the first round of developer kits being handed out here in Taipei. Intel will also make dev kits available for those who can’t attend the events, but it hasn’t yet started that part of the program due to varying restrictions in different countries and other logistical challenges.

These kits will be available at a subsidized cost, meaning Intel will provide a deep discount, but the company hasn’t shared details on pricing yet. There are also plans to give developers access to dev kits based on Intel’s future platforms.

Aside from providing hardware to the larger dev houses, Intel is also planning to seed dev kits to universities to engage with computer science departments. Intel has a knowledge center with training videos, documentation, collateral, and even sample code on its website to support the dev community.

Intel is engaging with Independent Hardware Vendors (IHVs) that will develop the next wave of devices for AI PCs. The company offers 24/7 access to Intel’s testing and process resources, along with early reference hardware, through its Open Labs initiative in the US, China, and Taiwan. Intel already has 100+ IHVs that have developed 200 components during the pilot phase.

ISVs and IHVs interested in Intel’s AI PC Acceleration Program can sign up via the program’s webpage. We’re here at the event and will follow up with updates as needed.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

hotaru251: So by their own slide, they basically say only Intel PCs can be AI PCs? It doesn't say a system with this stuff, but specifically an Intel one.

usertests (replying to hotaru251): AMD and Qualcomm can meet these requirements.

On Intel's side, maybe Intel Core Ultra is the only brand that qualifies (for now).

-Fran-: So Microsoft ready to screw AMD yet again? XD

AMD, come on... Haven't you ever heard of "fool me once..."?

Help Valve grow SteamOS and Microsoft will come begging your way.

Regards.

cknobman: Intel better start focusing on ditching x86, or at least diversifying and limiting its use cases.

In anything other than the most demanding workloads, ARM is taking over fast.

So Intel's AI will be irrelevant to most regular consumers who are buying ARM-based PCs.

NeoMorpheus (replying to -Fran-): Came here just to say the same thing.

I honestly think that MS is waiting for Intel to have a better GPU so they can dump AMD on the next Xbox.

AMD needs to stop being nice and start being more selfish.

usertests: If you guys are not joking and are taking the Intel marketing slide at face value, then you can't be saved.

TerryLaze (replying to cknobman): ARM has been "taking over fast" since the '60s...

Also, I don't know if you were sleeping the last few years, but mini PCs and handhelds with x86 have practically flooded the market, so x86 is taking over a good chunk of what used to be an ARM-only domain.

Roland Of Gilead: Would it be possible to have an NPU on an add-in card, like PCIe, to enable its use on those older systems that don't have a native NPU? I wonder whether PCIe would be fast enough to shift the workloads around, or even if it's practicable.

Notton (replying to Roland Of Gilead): There are several of those out already.

They are called AI Accelerator Cards.

It seems like the idea kind of took off around 2018, and then abruptly ended in 2021.

Asus used to sell one.

I am guessing AI Accelerator cards for desktops died because it was more cost-effective to buy Nvidia GPUs, like the RTX 3060 12GB.

usertests (replying to Notton): They still exist, in PCIe and M.2 form factors. Most consumers aren't going to (and shouldn't) pick one over the likes of an RTX 3060 12GB, but if your use case calls for it, you can get it. I think these are mainly going into mini PCs for "edge AI" capabilities.

https://www.cnx-software.com/2023/01/02/150-axelera-m2-ai-accelerator-214-tops/

Now that integrated "NPUs" are eventually coming to almost every new Windows PC sold (starting with x86 and Qualcomm ARM laptops), there may be slightly increased interest in getting separate cards for desktops. In theory, someone might want separate graphics and AI acceleration capabilities to avoid doing gaming + an AI task simultaneously on the GPU. But that will continue to be a niche concern, and by the time it possibly matters for gaming, all new CPUs will have an NPU.