Nvidia Chat with RTX runs a ChatGPT-style application on your GPU that works with your local data — RTX 30-series or later required

An interesting tool to query your own data, assuming it's already in the right file format.

Nvidia is the first of the top four AI giants to release a free chatbot dedicated to local offline use. The new Chat with RTX app allows users to use open language models locally without connecting to the cloud. The only real drawback with "Chat with RTX" is its sky-high system requirements and huge download size, particularly when compared with cloud solutions. You'll need an RTX 30-series GPU or later with at least 8GB of VRAM and Windows 10 or 11 to run it.

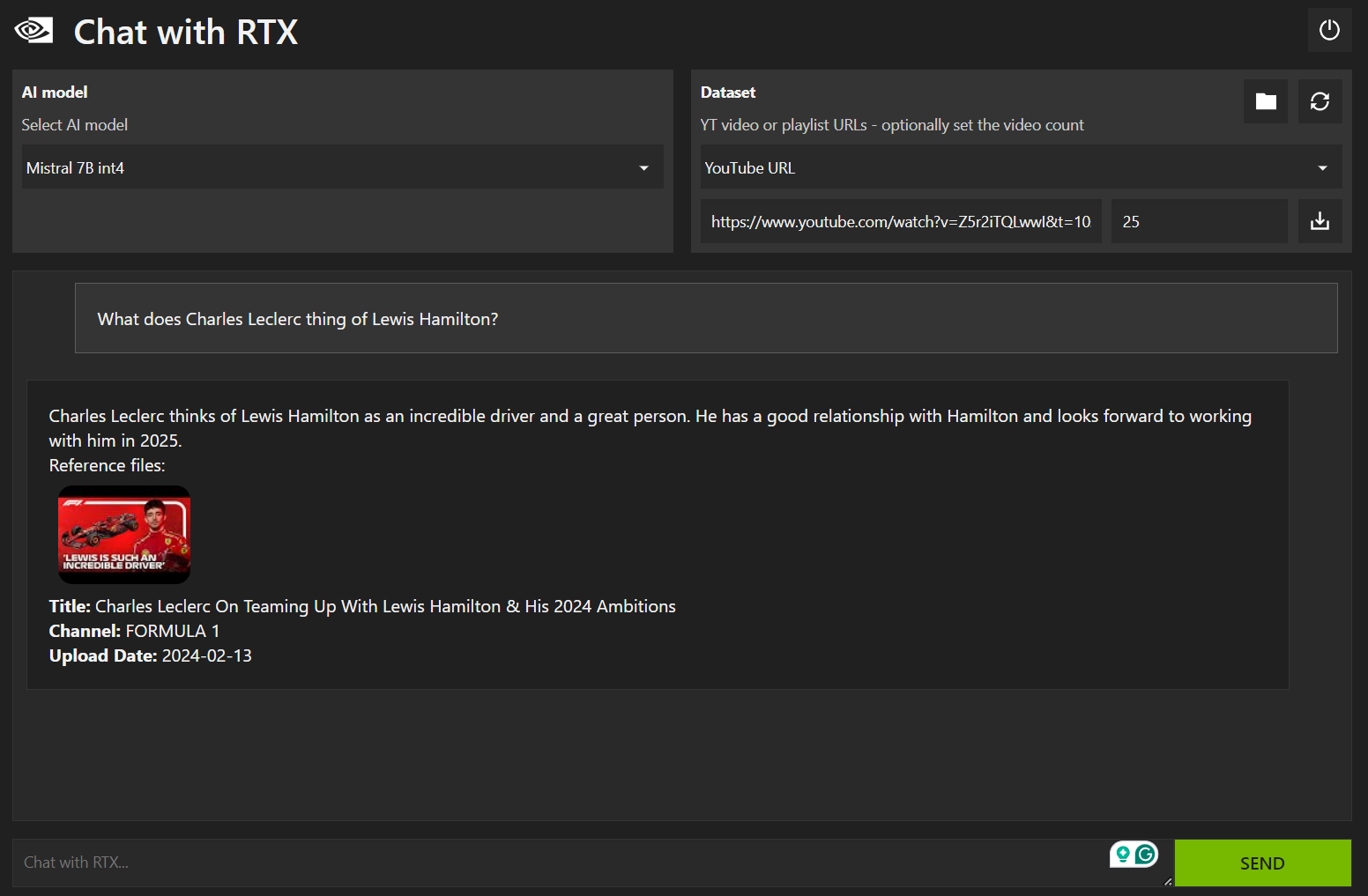

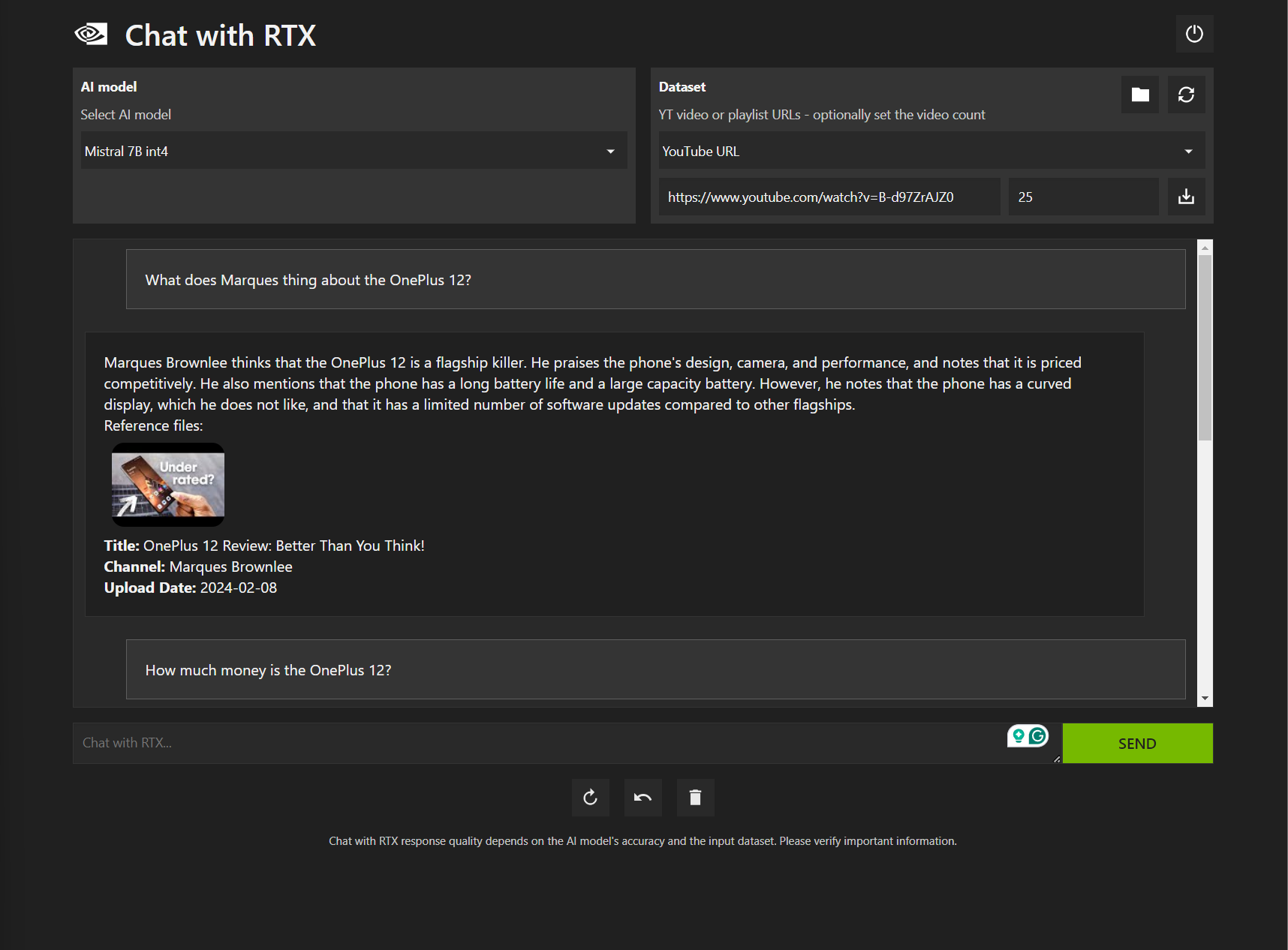

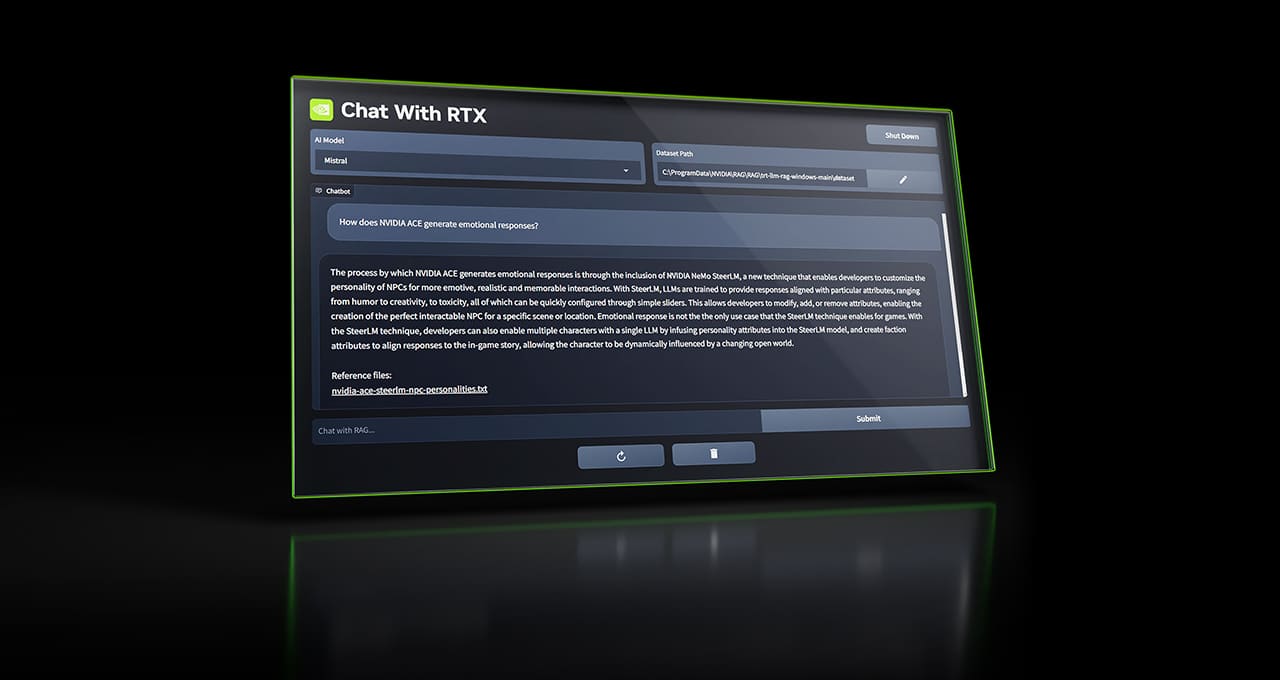

Chat with RTX's main selling point is its offline capabilities and user customizability. Nvidia's chatbot allows you to specify your own files so the chatbot can produce answers custom-tailored to your needs. You can import various file formats, including .txt, PDF, Word, and XML documents. YouTube videos can also be downloaded and parsed by the chatbot.

For example, I fed Chat with RTX two YouTube videos, one from MKBHD and one from Formula 1. When I asked about specific topics in each video, it responded with concise answers taken directly from the relevant video. Whenever a question relates to a topic in one of the files or videos you've added, the chatbot tells you which file or video its answer came from.

It's a pretty cool application that gives you more control than online solutions like Copilot and Gemini. However, its localized design does have a drawback in that it won't search the internet for answers. Depending on the use case, this can be a positive or a negative.

Chat with RTX uses the Mistral or Llama 2 open-source language models combined with retrieval-augmented generation (RAG), a technique that retrieves relevant passages from your own files and supplies them to the model alongside your question, so its responses draw on your data. To bring it to desktops, Nvidia uses its TensorRT-LLM software to let the app make better use of the Tensor cores in RTX 30- and RTX 40-series GPUs.
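To make the RAG idea concrete, here is a toy sketch in Python. It is not how Chat with RTX works internally: the article doesn't describe Nvidia's retrieval pipeline, and real RAG systems typically rank text chunks with vector embeddings rather than simple word overlap. The function names, stop-word list, and sample files are all hypothetical. The core loop is the same, though: find the passages from your own files that best match the question, then prepend them, tagged with their sources, to the prompt sent to the language model.

```python
import re
from collections import Counter

# Hypothetical stop-word list; a real retriever would use embeddings or a proper tokenizer.
STOP_WORDS = {"a", "an", "and", "the", "is", "of", "on", "in", "to", "how", "what"}


def tokenize(text: str) -> list[str]:
    # Lowercase, split on non-alphanumeric characters, and drop stop words.
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS]


def score(query: str, chunk: str) -> int:
    # Bag-of-words overlap: how many query words (with multiplicity) also occur in the chunk.
    q, c = Counter(tokenize(query)), Counter(tokenize(chunk))
    return sum(min(n, c[w]) for w, n in q.items())


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    # Rank (source, text) pairs by overlap and keep the top k that share at least one word.
    ranked = sorted(docs.items(), key=lambda item: score(query, item[1]), reverse=True)
    return [(src, text) for src, text in ranked[:k] if score(query, text) > 0]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    # "Augment" the question with the retrieved passages, tagging each with its source
    # so the model's answer can point back to the file it came from.
    context = "\n".join(f"[{src}] {text}" for src, text in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = {
    "f1_notes.txt": "The Monaco Grand Prix is run on a street circuit.",
    "phone_review.txt": "The phone has a 120Hz display and a large battery.",
}
print(build_prompt("How big is the battery on the phone?", docs))
```

Because each retrieved passage carries its file name, the model can report which file an answer came from, as described earlier. Note that nothing here changes the model's weights; the "customization" lives entirely in the prompt.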

The actual application starts out as a 35GB download. Once you extract that, you then have to complete the installation process, which downloads some additional data (we didn't track exactly how much). It also goes through a compilation process to target your specific GPU, which can take 15 minutes, give or take. Parsing your local files for additional context will also take time, which varies depending on how many files are present. We put 150 files into a folder, and it took about three minutes to customize the app with our local data.

The application is still a tech demo, so don't expect it to produce perfect answers. RTX 20-series owners are out of luck as well. It's not entirely clear why the first RTX GPUs, based on the Turing architecture, aren't supported, though Nvidia suggested time constraints were a factor and that support could come to 20-series cards in the future.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

Notton "The only real drawback with 'Chat with RTX' is its sky-high system requirements and huge download size, particularly when compared with cloud solutions."

Not once does the article mention what that size would be.

salgado18 I think this is the best future for AI as a personal assistant: running locally. Nvidia has Tensor cores, AMD has AI accelerators in its CPUs, 8GB of VRAM is not much anymore, and the "huge download size" is smaller than most newer games. In one or two years, all of this will be common in most PCs and notebooks, and in a couple more it will be low-end spec.

edzieba

Quote: "RTX 20-series owners are out of luck as well."

The same happened with DLSS video upscaling (RTX Video): Turing support was added eight months after launch.

brandonjclark

Notton said: "Not once does the article mention what that size would be."

35.1GB.

JarredWaltonGPU

Notton said: "Not once does the article mention what that size would be."

I've added a clarification. It's 35GB, plus some other stuff gets downloaded during the installation phase. It's not clear how much additional data was downloaded, probably at least 4GB (there were two ~1.5GB LLMs).

palladin9479 This is the start of where AI will actually be useful: as a local assistant to its owner. We absolutely do not want it phoning the mothership and telling some guy in India everything it's worked on and everything you've been doing with it.

salgado18

JarredWaltonGPU said: "It's 35GB, plus some other stuff gets downloaded during the installation phase."

I wouldn't call that huge. That's half of what most new games require, and it's an entire AI system. Especially in preview, it's an acceptable size. Even Photoshop asks for 20GB of free space (not the program size, but the minimum requirement). With 1TB SSDs becoming cheaper and more available, it's large, but not huge.

And you can't fairly compare it to cloud, right? Cloud services don't run on your PC, so their local footprint is expected to be small, or even nonexistent, like web pages.

JarredWaltonGPU

salgado18 said: "I wouldn't call that huge."

For what it does, and given Nvidia's ~300Mbps download speed cap (I swear, for such a big company, downloads should be much faster!), it's pretty huge. I suspect a big part of the issue is that it includes a bunch of Python repository data. I'd expect only about 2GB to be required once everything has finished compiling.
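For a sense of scale, the ~300Mbps cap Jarred mentions can be turned into a download time. A quick sketch, assuming decimal units (1GB = 1,000MB, 1 byte = 8 bits) and a sustained transfer rate with no protocol overhead; `download_minutes` is a hypothetical helper:

```python
def download_minutes(size_gb: float, rate_mbps: float) -> float:
    # GB -> megabits: x 1000 (MB per GB, decimal) x 8 (bits per byte).
    megabits = size_gb * 1000 * 8
    # Divide by the link rate (megabits per second), then convert seconds to minutes.
    return megabits / rate_mbps / 60


# Base 35GB package only; the extra data fetched by the installer isn't included.
print(f"{download_minutes(35, 300):.1f} minutes")  # prints "15.6 minutes"
```

That's roughly a quarter of an hour for the base package in the best case, before the installer fetches anything else.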

abufrejoval I have been running both Llama 2 and Mistral models in every size and combination available from Hugging Face for months, so I don't expect this "packaged" variant to be all that different.

But it's still very interesting to see Nvidia offering this to the wider public, given that there were times when it fought non-gaming use of consumer hardware with a vengeance.

People have obviously been doing this, privately or not so privately, for many months, and in China a whole industry evidently developed around doing it with multiple GPUs at once, even though these consumer cards were originally made "extra wide" so nobody could fit more than one into an ordinary desktop.

With 8GB as the minimum spec, I'd expect these to be 7B models. The old "golden middle" of 35B Llama models, which just fit into the 24GB of a 3090 or 4090 at 4-bit quantization, was left out of Llama 2 for some reason, while 70B only fits with something like 2-bit quantization, which hallucinates too much to be of any use.

Honestly, results haven't been much better with larger quantizations, or even FP16, on 7B models like Mistral, which were hyped quite a bit but failed rather badly when I tried to use them.

For me, only models you can run locally are interesting at all; I refuse to use any cloud model, voluntarily or involuntarily (Copilot).

I can't say that I'm extremely interested in models that are way smarter than I am; for me, the level of a reasonably trained domestic would be just fine. It's mostly about them doing the chores I find boring, not about them trying to tell me how to fix my life.

But the big issue is that I found them relatively useless, prone to hallucinating with the very same high degree of confidence as when they hit the truth. They have no notion of when they are wrong and currently simply won't do the fact-checking we all do when we come up with our "intuitive" answers.

And their ability to be trained on the kinds of facts I'd really want my domestics never to get wrong is neither there nor all that reliable.

I hope y'all go ahead and try your best to get some use out of this: the sooner this bubble of outrageous overestimation of capabilities bursts, the better for the planet and its people.

At least you can still game with your GPU; it's much more difficult to do something useful with Bitcoin ASICs...
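The VRAM figures in abufrejoval's comment follow from simple arithmetic: the weights alone take roughly parameters × bits per weight ÷ 8 bytes. The sketch below counts weights only; the KV cache, activations, and runtime overhead add more, so real requirements are higher and these numbers are lower bounds. The `weight_gb` helper is hypothetical, and the model sizes are the commenter's own figures.

```python
def weight_gb(params_billion: float, bits_per_weight: float) -> float:
    # params * bits / 8 = bytes; with params in billions, the result is in (decimal) GB.
    # Weights only: KV cache, activations, and runtime overhead are ignored.
    return params_billion * bits_per_weight / 8


# (parameters in billions, bits per weight, VRAM in GB), using the figures from the comment above.
cases = [(7, 16, 8), (7, 4, 8), (35, 4, 24), (70, 4, 24), (70, 2, 24)]
for params, bits, vram in cases:
    need = weight_gb(params, bits)
    verdict = "fits" if need < vram else "does not fit"
    print(f"{params}B at {bits}-bit: ~{need:.1f} GB of weights, {verdict} in {vram} GB")
```

By this estimate, a 7B model at FP16 (about 14GB) would not fit on an 8GB card, while at 4-bit (about 3.5GB) it leaves headroom, which is consistent with the guess that the 8GB minimum implies quantized 7B models. Likewise, 70B needs about 35GB at 4-bit but only about 17.5GB at 2-bit.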

JarredWaltonGPU

abufrejoval said: "I have been running both Llama 2 and Mistral models in every size and combination available from Hugging Face for months, so I don't expect this "packaged" variant to be all that different."

It doesn't parse the tables for meaning, basically, so if I never did a text comparison of the 7900 XT and the 4080 Super, it just hallucinates an answer. LOL