Sponsored by QUE Publishing

Computer History 101: The Development Of The PC

From Tubes To Transistors

From UNIVAC to the latest desktop PCs, computer evolution has moved very rapidly. The first-generation computers were known for using vacuum tubes in their construction. The generation to follow would use the much smaller and more efficient transistor.

From Tubes...

Any modern digital computer is largely a collection of electronic switches. These switches are used to represent and control the routing of data elements called binary digits (or bits). Because of the on-or-off nature of the binary information and signal routing the computer uses, an efficient electronic switch was required. The first electronic computers used vacuum tubes as switches, and although the tubes worked, they had many problems.
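As a rough illustration of how a row of on/off switches can stand for data, the sketch below treats each switch as one bit and reads the row as an unsigned binary number. The function names and the eight-switch example are assumptions made for illustration, not anything taken from the original text.

```python
def switches_to_int(switches):
    """Read a row of on/off switches (most significant first) as an unsigned integer."""
    value = 0
    for is_on in switches:
        # Shift the running value left by one bit, then add the new bit.
        value = value * 2 + (1 if is_on else 0)
    return value


def int_to_switches(value, width):
    """Return the on/off pattern (most significant first) that encodes value in width bits."""
    if value < 0 or value >= 2 ** width:
        raise ValueError("value does not fit in the requested number of switches")
    return [bool((value >> shift) & 1) for shift in range(width - 1, -1, -1)]


# Eight switches set to off-on-off-off-off-off-off-on, that is, binary 01000001.
pattern = [False, True, False, False, False, False, False, True]
print(switches_to_int(pattern))   # 65
print(int_to_switches(65, 8) == pattern)  # True
```

Whether the switch is a vacuum tube or a transistor does not change this encoding; only the speed, size, and power cost of each switch differ.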

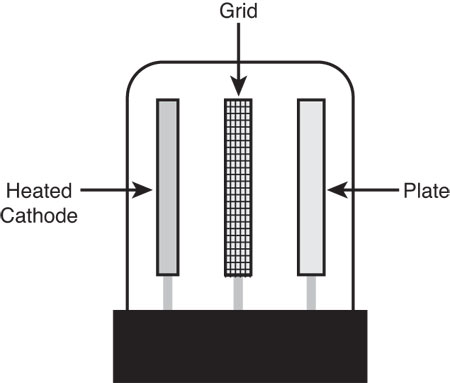

The type of tube used in early computers was called a triode and was invented by Lee De Forest in 1906. It consists of a cathode and a plate, separated by a control grid, suspended in a glass vacuum tube. The cathode is heated by a red-hot electric filament, which causes it to emit electrons that are attracted to the plate. The control grid in the middle can control this flow of electrons. By making it negative, you cause the electrons to be repelled back to the cathode; by making it positive, you cause them to be attracted toward the plate. Thus, by controlling the voltage on the grid, you can control the on/off output of the plate.

Unfortunately, the tube was inefficient as a switch. It consumed a great deal of electrical power and gave off enormous heat—a significant problem in the earlier systems. Primarily because of the heat they generated, tubes were notoriously unreliable—in larger systems, one failed every couple of hours or so.

...To Transistors

The invention of the transistor was one of the most important developments leading to the personal computer revolution. The transistor was invented in 1947 and announced in 1948 by Bell Laboratories engineers John Bardeen and Walter Brattain. Bell associate William Shockley invented the junction transistor a few months later, and all three shared the Nobel Prize in Physics in 1956 for inventing the transistor. The transistor, which essentially functions as a solid-state electronic switch, replaced the less-suitable vacuum tube. Because the transistor was so much smaller and consumed significantly less power, a computer system built with transistors was also much smaller, faster, and more efficient than a computer system built with vacuum tubes.

The conversion from tubes to transistors began the trend toward miniaturization that continues to this day. Today’s small laptop PC (or netbook, if you prefer) and even Tablet PC systems, which run on batteries, have more computing power than many earlier systems that filled rooms and consumed huge amounts of electrical power.

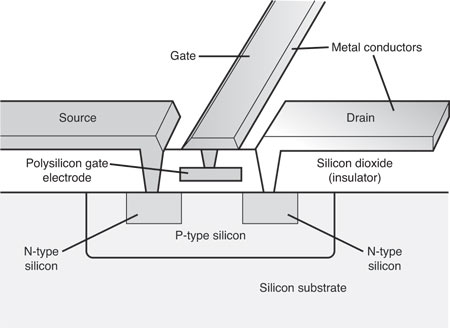

Although there have been many designs for transistors over the years, the transistors used in modern computers are normally Metal Oxide Semiconductor Field Effect Transistors (MOSFETs). MOSFETs are made from layers of materials deposited on a silicon substrate. Some of the layers contain silicon with certain impurities added by a process called doping or ion bombardment, whereas other layers include silicon dioxide (which acts as an insulator), polysilicon (which acts as an electrode), and metal to act as the wires to connect the transistor to other components. The composition and arrangement of the different types of doped silicon allow them to act either as a conductor or as an insulator, which is why silicon is called a semiconductor.

MOSFETs can be constructed as either NMOS or PMOS types, based on the arrangement of doped silicon used. Silicon doped with boron is called P-type (positive) because it lacks electrons, whereas silicon doped with phosphorus is called N-type (negative) because it has an excess of free electrons.

MOSFETs have three connections, called the source, gate, and drain. An NMOS transistor is made by using N-type silicon for the source and drain, with P-type silicon placed in between. The gate is positioned above the P-type silicon, separating the source and drain, and is separated from the P-type silicon by an insulating layer of silicon dioxide. Normally there is no current flow between N-type and P-type silicon, thus preventing electron flow between the source and drain. When a positive voltage is placed on the gate, the gate electrode creates a field that attracts electrons to the P-type silicon between the source and drain. That in turn changes that area to behave as if it were N-type silicon, creating a path for current to flow and turning the transistor “on.”

A PMOS transistor works in a similar but opposite fashion. P-type silicon is used for the source and drain, with N-type silicon positioned between them. When a negative voltage is placed on the gate, the gate electrode creates a field that repels electrons from the N-type silicon between the source and drain. That in turn changes that area to behave as if it were P-type silicon, creating a path for current to flow and turning the transistor “on.”
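The on/off behavior described for NMOS and PMOS transistors can be sketched as a pair of idealized switch functions. This is a simplified model, not a device simulation: the function names, the parameter names, and the 0.7 V threshold are all assumptions chosen for illustration, and real thresholds vary by process.

```python
def nmos_conducts(gate_voltage, source_voltage, threshold=0.7):
    """Idealized NMOS: conducts when the gate is sufficiently positive relative to the source.

    The threshold value is an illustrative assumption, not a figure from the article.
    """
    return (gate_voltage - source_voltage) > threshold


def pmos_conducts(gate_voltage, source_voltage, threshold=0.7):
    """Idealized PMOS: conducts when the gate is sufficiently negative relative to the source."""
    return (source_voltage - gate_voltage) > threshold


# NMOS with its source at ground (0 V): a positive gate voltage turns it on.
print(nmos_conducts(gate_voltage=5.0, source_voltage=0.0))  # True
print(nmos_conducts(gate_voltage=0.0, source_voltage=0.0))  # False

# PMOS with its source at the 5 V supply: pulling the gate low turns it on.
print(pmos_conducts(gate_voltage=0.0, source_voltage=5.0))  # True
print(pmos_conducts(gate_voltage=5.0, source_voltage=5.0))  # False
```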

When both NMOS and PMOS field-effect transistors are combined in a complementary arrangement, power is used only when the transistors are switching, making dense, low-power circuit designs possible. Because of this, virtually all modern processors are designed using CMOS (Complementary Metal Oxide Semiconductor) technology.
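To make the complementary idea concrete, here is a minimal logic-level sketch of two CMOS gates, assuming ideal switches. In each gate the PMOS pull-up network and the NMOS pull-down network are never on at the same time once the inputs settle, which is why no steady current path exists between supply and ground. The function names are hypothetical, and the model ignores leakage and the brief current drawn during switching.

```python
def cmos_inverter(input_high):
    """Ideal CMOS inverter: one PMOS pulls the output up, one NMOS pulls it down."""
    pmos_on = not input_high   # PMOS conducts when its gate is low
    nmos_on = input_high       # NMOS conducts when its gate is high
    # Exactly one of the two conducts in steady state, so there is
    # no direct path from supply to ground.
    assert pmos_on != nmos_on
    return pmos_on             # output is high when the pull-up conducts


def cmos_nand(a, b):
    """Ideal CMOS NAND: two PMOS in parallel (pull-up), two NMOS in series (pull-down)."""
    pull_up = (not a) or (not b)   # either PMOS conducting connects output to supply
    pull_down = a and b            # both NMOS must conduct to connect output to ground
    assert pull_up != pull_down
    return pull_up


for a in (False, True):
    for b in (False, True):
        print(a, b, cmos_nand(a, b))
```

The NAND gate is shown because any other logic function can be built from NAND gates, so the same no-static-path property carries over to larger circuits in this idealized model.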

Compared to a tube, a transistor is much more efficient as a switch and can be miniaturized to microscopic scale. Since the transistor was invented, engineers have strived to make it smaller and smaller. In 2003, NEC researchers unveiled a silicon transistor only 5 nanometers (billionths of a meter) in size. Other technologies, such as graphene and carbon nanotubes, are being explored to produce even smaller transistors, down to the molecular or even atomic scale. In 2008, British researchers unveiled a graphene-based transistor only 1 atom thick and 10 atoms (1 nm) across, and in 2010, IBM researchers created graphene transistors switching at a rate of 100 gigahertz, thus paving the way for future chips denser and faster than possible with silicon-based designs.

Integrated Circuits: The Next Generation

The third generation of modern computers is known for using integrated circuits instead of individual transistors. Jack Kilby at Texas Instruments and Robert Noyce at Fairchild are both credited with having invented the integrated circuit (IC), in 1958 and 1959, respectively. An IC is a semiconductor circuit that contains more than one component on the same base (or substrate material), which are usually interconnected without wires. The first prototype IC constructed by Kilby at TI in 1958 contained only one transistor, several resistors, and a capacitor on a single slab of germanium, and it featured fine gold “flying wires” to interconnect them. However, because the flying wires had to be individually attached, this type of design was not practical to manufacture. By comparison, Noyce patented the “planar” IC design in 1959, where all the components are diffused in or etched on a silicon base, including a layer of aluminum metal interconnects. In 1960, Fairchild constructed the first planar IC, consisting of a flip-flop circuit with four transistors and five resistors on a circular die only about 20 mm² in size. By comparison, the Intel Core i7 quad-core processor incorporates 731 million transistors (and numerous other components) on a single 263 mm² die!
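The scale of that comparison is easier to appreciate as transistors per square millimeter. The short calculation below uses only the figures quoted above (four transistors on about 20 mm², and 731 million transistors on 263 mm²); the function name is a hypothetical helper, and because the 1960 die area is approximate, the result is an order-of-magnitude estimate.

```python
def transistor_density(transistor_count, die_area_mm2):
    """Return transistors per square millimeter for a given die."""
    return transistor_count / die_area_mm2


# Figures quoted in the text above.
fairchild_1960 = transistor_density(4, 20)             # first planar IC
core_i7 = transistor_density(731_000_000, 263)         # Intel Core i7 quad-core

print(f"Fairchild planar IC: {fairchild_1960:.1f} transistors per mm^2")
print(f"Intel Core i7:       {core_i7:,.0f} transistors per mm^2")
print(f"Density increase:    about {core_i7 / fairchild_1960:,.0f} times")
```

On these numbers the density rises from a fraction of a transistor per square millimeter to a few million, an increase on the order of ten million times.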


raclimja: FIRST!

i still have my pentium 2 gathering dust on my closet

improvements in technology is AMAZING

dogman_1234: I liked it. Love history; and the history of computerized technology. Can't wait to see the next 50 years.

mayankleoboy1: just one question:

why this article? in the whole wide range of PC, why this?

you could have done the second part to the Antiliasing article.

cangelini: mayankleoboy1 wrote: "just one question: why this article? in the whole wide range of PC, why this? you could have done the second part to the Antiliasing article."

That's still on its way. It's very data-intensive and Don has been plugging away at it.

SteelCity1981: "2006: Microsoft releases the long-awaited Windows Vista to business users. The PC OEM and consumer market releases would follow in early 2007:"

It should really read.

2006: Microsoft releases the long-awaited Windows Vista to business users. The PC OEM and consumer market releases would follow in early 2007 and the vast majority of people quickly downgraded back to Windows XP:

lol

madsbs: Pics or it didn't happen!

Where are the illustrations for this rather interesting piece?

jj463rd: One thing that I disliked about the Timeline of Computer Advancements was leaving out Douglas Engelbart and the Mother of All Demos in 1968 (if you don't know about him you know very little about computer history) and giving accolades instead to Xerox.