Vertex Shaders and Pixel Shaders

Pixel Shader Pipeline

The programmable pixel pipeline brings a brand new pixel rasterization concept to DirectX, along the same lines as vertex shaders. It even has the same kind of name: pixel shaders.

There are a number of reasons that pixel shaders should rock the world, most of them related to the overcomplicated compatibility checking that the fixed-function pipeline requires. With pixel shaders, Microsoft has introduced an all-or-nothing style of compatibility. If a graphics card supports pixel shader (PS) 1.0, then you are guaranteed that it supports every instruction available in PS 1.0, as well as a fixed number of registers for texture coordinates, temporary variables, constants and vertex-interpolated values.

The hassle of compatibility should become a thing of the past, leaving the hardware developers as the only people suffering from a sore head.

What Do Shaders Let Me Do?

In this section, I'll describe a few of the typical things that you'll want to use vertex and/or pixel shaders for when writing a game.

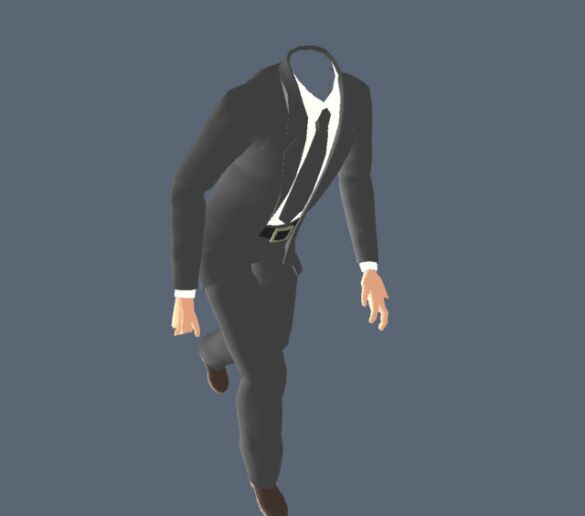

Matrix Palette Skinning

"Skinning" is something that should be familiar to most people involved with character animation in modern games. You may remember the days when characters had a split halfway up the leg as they ran along. Some clever folks figured out a couple of ways to hide those splits. Initially they found that you could add some extra polygons across the split in the geometry; later, people realized that you could bend a single cylinder to get a continuous piece of geometry. Nowadays, skinning lets you use the cylinder trick in a far more general way.

What the cylinder trick basically does is move the vertices along the cylinder, from one orientation to another. Skinning allows each vertex to be affected by up to four different orientations. Each of these orientations is described using a matrix. Generally, each matrix will be associated with a "bone" in the animation "skeleton" for the model.

A number of bones may be needed for a model; for a simple skeleton, we may have two feet, two lower legs, two upper legs, lower torso, upper torso, neck, two upper arms, two lower arms and two hands. That's a total of 15 different bones. For more detailed models, you may want bones for multiple ribs in the upper torso, perhaps each finger, and maybe even each bone in the finger. In that case, you can easily reach 50-100 bones.

As I've already mentioned, each vertex can be affected by up to four different bones from the matrix palette. This means that each vertex needs to store four palette indices along with three blending weights; the fourth weight is implied, since the four weights sum to one.
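As a rough sketch of what that per-vertex data implies, here is how the blend could be worked out on the CPU. The `SkinnedVertex` layout, the 3x4 `BoneMatrix` type and the function names are my own assumptions for illustration; they are not anything DX8 defines.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical bone matrix: 3 rows by 4 columns, translation in column 3.
struct BoneMatrix { float m[3][4]; };

// Hypothetical vertex layout: four palette indices, three explicit weights.
struct SkinnedVertex {
    Vec3 position;
    std::array<std::uint8_t, 4> boneIndex;
    std::array<float, 3> weight;  // the fourth weight is implied
};

// Transform a point by one bone matrix (rotation/scale plus translation).
Vec3 transformPoint(const BoneMatrix& b, const Vec3& p) {
    return {
        b.m[0][0] * p.x + b.m[0][1] * p.y + b.m[0][2] * p.z + b.m[0][3],
        b.m[1][0] * p.x + b.m[1][1] * p.y + b.m[1][2] * p.z + b.m[1][3],
        b.m[2][0] * p.x + b.m[2][1] * p.y + b.m[2][2] * p.z + b.m[2][3]
    };
}

// Blend the vertex position across its four bones.
Vec3 skinVertex(const SkinnedVertex& v, const std::vector<BoneMatrix>& palette) {
    // The implied fourth weight is whatever is left after the first three.
    const float w3 = 1.0f - v.weight[0] - v.weight[1] - v.weight[2];
    const float w[4] = { v.weight[0], v.weight[1], v.weight[2], w3 };

    Vec3 out = { 0.0f, 0.0f, 0.0f };
    for (int i = 0; i < 4; ++i) {
        assert(v.boneIndex[i] < palette.size());
        const Vec3 p = transformPoint(palette[v.boneIndex[i]], v.position);
        out.x += w[i] * p.x;
        out.y += w[i] * p.y;
        out.z += w[i] * p.z;
    }
    return out;
}
```

A vertex that only needs two bones simply sets its unused weights to zero; the unused indices can point at any valid palette entry.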

In DX8, there are two ways to carry out matrix palette skinning. First, an addition has been made to the fixed-function pipeline so that it can carry out matrix palette skinning using a 256-matrix palette. Second, we can use the vertex shader layer to calculate skinning.

Each method has some drawbacks. The vertex shader method is limited to the vertex shader constant memory, which only has room for 24 matrices. Some of that memory is going to be taken up by a transformation matrix (one matrix gone), lighting data (at least one more matrix's worth), and data for whatever material model you use.
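The arithmetic behind that budget can be sketched as follows. The first DX8 vertex shader version provides 96 four-component constant registers, and a full 4x4 matrix occupies four of them, which is where the 24 comes from. The eight registers I reserve for material data below are purely an assumption for the sake of the example.

```cpp
// Constant register budget for a first-generation vertex shader.
constexpr int kConstantRegisters  = 96;  // four floats each
constexpr int kRegistersPerMatrix = 4;   // one 4x4 matrix

// How many bone matrices fit once other data has claimed its registers.
constexpr int paletteCapacity(int reservedRegisters) {
    return (kConstantRegisters - reservedRegisters) / kRegistersPerMatrix;
}

// With nothing else in constant memory: 24 matrices.
static_assert(paletteCapacity(0) == 24, "empty constant memory holds 24 matrices");

// Transformation matrix (4) + lighting data (4) + assumed material data (8).
constexpr int kReserved = 4 + 4 + 8;
static_assert(paletteCapacity(kReserved) == 20, "about 20 matrices remain for bones");
```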

Fixed-function skinning is currently only accelerated on more recent Matrox graphics cards and has no support from nVidia or ATI. But then again, only the GeForce3 (GF3) and Radeon 8500 (R8500) support vertex shaders in hardware. So, you might think that it would be a good idea to use the fixed-function method on older hardware. However, in profiling you'll generally find that fixed-function palette skinning is actually slower than vertex shader emulation.

In general, it's going to be far more sensible to use the vertex shader method. That means that, for anything more complicated than the most basic skeletons, you'll need to split the model up into zones, each of which references no more than 20 matrices.
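One simple way to do that split is a greedy pass over the triangles, sketched below. This is just one possible approach, not a description of any particular tool; the `Triangle` and `Zone` types and the `splitIntoZones` name are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <vector>

// Hypothetical input: the global bone indices used by a triangle's vertices.
struct Triangle { std::vector<int> bones; };

// A zone is a group of triangles plus the set of bones they reference.
struct Zone {
    std::set<int> bones;
    std::vector<std::size_t> triangles;  // indices into the input array
};

// Greedy split: put each triangle into the first zone that can take it
// without pushing that zone's bone count past maxBones.
std::vector<Zone> splitIntoZones(const std::vector<Triangle>& tris,
                                 std::size_t maxBones) {
    assert(maxBones >= 1);
    std::vector<Zone> zones;
    for (std::size_t t = 0; t < tris.size(); ++t) {
        bool placed = false;
        for (Zone& z : zones) {
            std::set<int> merged = z.bones;
            merged.insert(tris[t].bones.begin(), tris[t].bones.end());
            if (merged.size() <= maxBones) {
                z.bones.swap(merged);
                z.triangles.push_back(t);
                placed = true;
                break;
            }
        }
        if (!placed) {
            // Start a new zone. A triangle that alone references more than
            // maxBones bones would still land here and exceed the limit.
            Zone z;
            z.bones.insert(tris[t].bones.begin(), tris[t].bones.end());
            z.triangles.push_back(t);
            zones.push_back(z);
        }
    }
    return zones;
}
```

Each zone is then drawn as its own batch, with only that zone's matrices loaded into constant memory and the vertex indices remapped to the zone's local palette. A greedy pass like this won't find the smallest number of zones, but it is easy to reason about.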

Bump-Reflection Mapping

This is a fairly standard use of the pixel shader architecture. Using the fixed-function pipeline, it's possible to render bumpy surfaces using a variety of methods, the most powerful being the DP3 (dot product) operation available on most modern hardware. Pixel shaders go further and introduce a way to use data from one texture to read values from a different texture.

In the case of bump-reflection mapping, you can use the bump texture to modify your reads into a reflection map. In simple language, you have a bumpy, reflective surface.

The ability to read one texture using another is one of the main advances made by the pixel shader architecture, and is commonly referred to as a "dependent texture read". The implementation, as it exists in the first generation of pixel shaders, isn't quite as powerful as it initially seems, mainly due to the rigid way that it must be used. All dependent texture reads occur in the first block of instructions in the pixel shader, and can only access data from the texture coordinates and textures passed in from the vertex shader. Once your texture reads are complete, they can be blended together using blend instructions.

For bump-reflection mapping, an orientation matrix must be passed into the pixel shader using the vertex shader's texture coordinate output registers. This matrix requires three sets of texture coordinates. The remaining set of texture coordinates will be used to reference the bump-map. The result is that bump-reflection mapping takes an entire pass of rendering. If you also want to render a diffuse texture, you'll have to do that in the first pass, then render the reflection on the second pass.
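To make the data flow a little more concrete, here is a CPU-side sketch of the per-pixel math: the bump-map texel is turned into a normal, rotated by the three-row orientation matrix, and used to reflect the eye vector. I'm assuming the bump map stores normals in the 0..1 range and that the eye vector is supplied separately; the function names are made up for this example and this is not shader code.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Assumption: the bump texel stores a normal packed into 0..1 per channel.
Vec3 expandNormal(const Vec3& texel) {
    return { 2.0f * texel.x - 1.0f, 2.0f * texel.y - 1.0f, 2.0f * texel.z - 1.0f };
}

// rows: the orientation matrix, one row per texture coordinate set.
// bumpTexel: the value read from the bump map with the remaining set.
// eye: vector from the surface point towards the viewer.
// Returns the vector that would be used to look up the reflection map.
Vec3 reflectionVector(const Vec3 rows[3], const Vec3& bumpTexel, const Vec3& eye) {
    const Vec3 n = expandNormal(bumpTexel);
    // Rotate the bump normal into the reflection map's space.
    const Vec3 N = { dot(rows[0], n), dot(rows[1], n), dot(rows[2], n) };
    const float lengthSq = dot(N, N);
    assert(lengthSq > 0.0f);
    // Reflect the eye vector about N; dividing by N.N copes with an
    // unnormalized N.
    const float k = 2.0f * dot(N, eye) / lengthSq;
    return { k * N.x - eye.x, k * N.y - eye.y, k * N.z - eye.z };
}
```

The final lookup into the reflection map using this vector is the dependent texture read: its address comes from the result of an earlier texture read rather than straight from the vertex shader.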

This is definitely a step up from the fixed-function pipeline, where this effect is impossible, but it is a fairly complicated process.
