New Microsoft Tech Translates Speech in Near Real Time

We're one step closer to a universal translator thanks to the efforts of Microsoft Research.

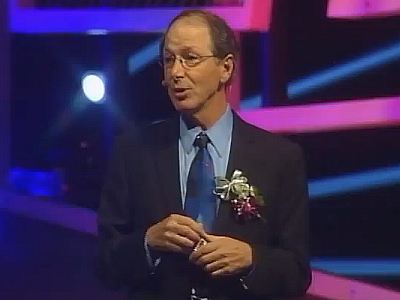

On October 25, during Microsoft Research Asia’s 21st Century Computing event in Tianjin, China, the company's Chief Research Officer Rick Rashid demonstrated new speech-to-speech translation technology that not only converts spoken English into spoken Mandarin Chinese in near real time but also keeps the speaker's voice intact.

In a blog post published on Thursday, Rashid said Microsoft's new software translator is based on a new technique called Deep Neural Networks, or DNN. It ditches the currently standard "hidden Markov modeling" technique (which is based on training data from several speakers) in favor of an approach patterned after human brain behavior in order to better recognize and mimic proper speech patterns.

By taking the gray matter route, Rashid said, his team has seen a 30-percent reduction in speech recognition word errors compared with the older Markov method. That means only one out of seven or eight words is incorrect, compared with one out of every four or five under the old method.
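As a rough check on those figures, the quoted fractions can be turned into a relative error reduction. The short Python sketch below is illustrative only: the function name `relative_reduction` is our own, and the four combinations simply pair each "before" rate with each "after" rate quoted above.

```python
from fractions import Fraction

def relative_reduction(old_rate, new_rate):
    """Share of the old error rate that has been eliminated."""
    return (old_rate - new_rate) / old_rate

# "One in four or five" before, "one in seven or eight" after, as quoted.
old_rates = [Fraction(1, 4), Fraction(1, 5)]
new_rates = [Fraction(1, 7), Fraction(1, 8)]

# Pair every old rate with every new rate to get the plausible range.
reductions = {
    (old, new): relative_reduction(old, new)
    for old in old_rates
    for new in new_rates
}

for (old, new), reduction in reductions.items():
    print(f"{old} -> {new}: {float(reduction):.1%} fewer errors")
```

Depending on which ends of the quoted ranges are paired, the reduction works out to between about 29 and 50 percent, which is broadly in line with the 30-percent figure.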

"While still far from perfect, this is the most dramatic change in accuracy since the introduction of hidden Markov modeling in 1979, and as we add more data to the training we believe that we will get even better results," he said in the blog.

The demonstration consisted of two steps. As he spoke to the audience, the system converted his speech into text. It then located the Chinese equivalent of each word (the easy part, he said) and reordered the words to suit Chinese, an extremely important step for correct translation between languages.
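The lookup-then-reorder idea can be pictured with a deliberately tiny Python sketch. It is a toy and not Microsoft's method: the lexicon, the role tags, and the single ordering rule are all hypothetical stand-ins for what a real system would learn from data, and the speech recognition stage is replaced by hand-written input.

```python
# Hypothetical toy lexicon; a real system learns its translations from data.
LEXICON = {"i": "我", "eat": "吃饭", "at home": "在家"}

# Toy ordering rule: Mandarin usually puts a location phrase before the verb.
MANDARIN_ORDER = {"subject": 0, "location": 1, "verb": 2}

def translate(tagged_phrases):
    """Look up each English phrase, then reorder by grammatical role."""
    looked_up = [(LEXICON[text.lower()], role) for text, role in tagged_phrases]
    reordered = sorted(looked_up, key=lambda pair: MANDARIN_ORDER[pair[1]])
    return "".join(word for word, _ in reordered)

# Stand-in for recognizer output: English phrases already tagged by role.
recognized = [("I", "subject"), ("eat", "verb"), ("at home", "location")]
result = translate(recognized)
print(result)
```

A word-for-word lookup alone would leave the location phrase at the end of the sentence, as in English; the reordering step moves it in front of the verb.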

"Of course, there are still likely to be errors in both the English text and the translation into Chinese, and the results can sometimes be humorous. Still, the technology has developed to be quite useful," he said.

In the next step, the text was quickly converted into spoken Chinese while retaining the properties of his own voice. "It required a text to speech system that Microsoft researchers built using a few hours speech of a native Chinese speaker and properties of my own voice taken from about one hour of pre-recorded (English) data, in this case recordings of previous speeches I’d made," he added.


Despite the team's achievements thus far, Rashid acknowledged that the results still aren't perfect – there's much work that still needs to be done in order to reach a Star Trek level of quality. "The technology is very promising, and we hope that in a few years we will have systems that can completely break down language barriers," he said.

To see and hear how this new translation system works, check out his presentation below.

Kevin Parrish has over a decade of experience as a writer, editor, and product tester. His work has focused on computer hardware, networking equipment, smartphones, tablets, gaming consoles, and other internet-connected devices, and has appeared in Tom's Hardware, Tom's Guide, Maximum PC, Digital Trends, Android Authority, How-To Geek, Lifewire, and others.

fuzzion (quoting viper666: "Oh Star Trek how you helped innovation..."):

They gave us the tablet, PC, universal translator, stun gun, etc.

guru_urug: This is really good innovation. Please don't ruin it fighting over patents. Let the whole world reap the benefits. Speech translation has global applications.

jkflipflop98 (quoting fuzzion: "They gave us the tablet, pc, universal translator, stun gun,etc"):

Let's not get carried away here. There's a huge gulf between painting a wooden block to look like a stun gun and actually making one. Yeah, ST had ideas ahead of its time, but that's all they were... ideas and grown men playing pretend.

It took an actual smart guy to make those things real.


noblerabbit: Knowing Microsoft, when someone speaks to translate 'I fed my dog this morning', the fifth language at the end of the line will say 'You kicked my cat tomorrow, you assclown'.

friskiest: It's good to see innovation being treated the right way. It's getting appreciated by both sides with no bashing or pointless remarks!

Good job!!!