AMD Files Patent for Hybrid Ray Tracing Solution

It's nearly impossible to discuss graphics tech in 2019 without bringing up real-time ray tracing. The rendering technique has been popularized by Nvidia, Microsoft, and an increasing number of game developers over the last few months. AMD's stayed pretty quiet about how it plans to support hardware-accelerated ray tracing, but a patent application published on June 27 offered a glimpse at what it's been working on.

AMD filed the patent application with the U.S. Patent and Trademark Office (USPTO) in December 2017. It describes a hybrid system that enables real-time ray tracing using a variety of software and hardware methods rather than relying on just one solution. The company said this approach should allow it to overcome the shortcomings associated with previous attempts to bring ray tracing to the masses.

In the application, AMD said that software-based solutions "are very power intensive and difficult to scale to higher performance levels without expending significant die area." It also said that enabling ray tracing via software "can reduce performance substantially over what is theoretically possible" because such solutions "suffer drastically from the execution divergence of bounded volume hierarchy traversal."

Basically: using software to enable ray tracing on hardware that hasn't been optimized for the rendering technique requires a significant performance sacrifice. Most people don't like it when their hardware is hamstrung by software, even if it's supposed to enable some fancy new graphics, and the inability to handle other processing tasks at the same time can also make the graphics look worse anyway.
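To put a rough number on the "execution divergence" AMD mentions, here's a minimal sketch. It assumes a simplified GPU model in which rays are processed in lockstep groups ("waves") and each wave keeps running until its slowest ray finishes traversing the hierarchy. The function name `simd_utilization` and the step counts are made up for illustration and don't come from the patent application.

```python
def simd_utilization(steps_per_ray, wave_width):
    """Fraction of lane-cycles that do useful work when rays run in lockstep waves.

    steps_per_ray: hypothetical number of traversal steps each ray needs.
    wave_width:    number of rays executed together in one wave.
    """
    useful = 0
    issued = 0
    for start in range(0, len(steps_per_ray), wave_width):
        wave = steps_per_ray[start:start + wave_width]
        useful += sum(wave)
        # Every lane in the wave is occupied until the slowest ray is done.
        issued += max(wave) * wave_width
    return useful / issued if issued else 1.0


# Hypothetical workloads: coherent rays take similar paths, divergent rays don't.
coherent = [10, 10, 10, 10]
divergent = [2, 3, 4, 31]

print(simd_utilization(coherent, 4))   # 1.0
print(simd_utilization(divergent, 4))  # 40 / 124, roughly 0.32
```

In this toy model, one long-running ray leaves the other three lanes idle for most of the wave's lifetime, which is the kind of loss the application attributes to software-only approaches.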

AMD didn't think hardware-based ray tracing was the answer either. The company said those solutions "suffer from a lack of programmer flexibility as the ray tracing pipeline is fixed to a given hardware configuration," are "generally fairly area inefficient since they must keep large buffers of ray data to reorder memory transactions to achieve peak performance," and are more complex than other GPUs.

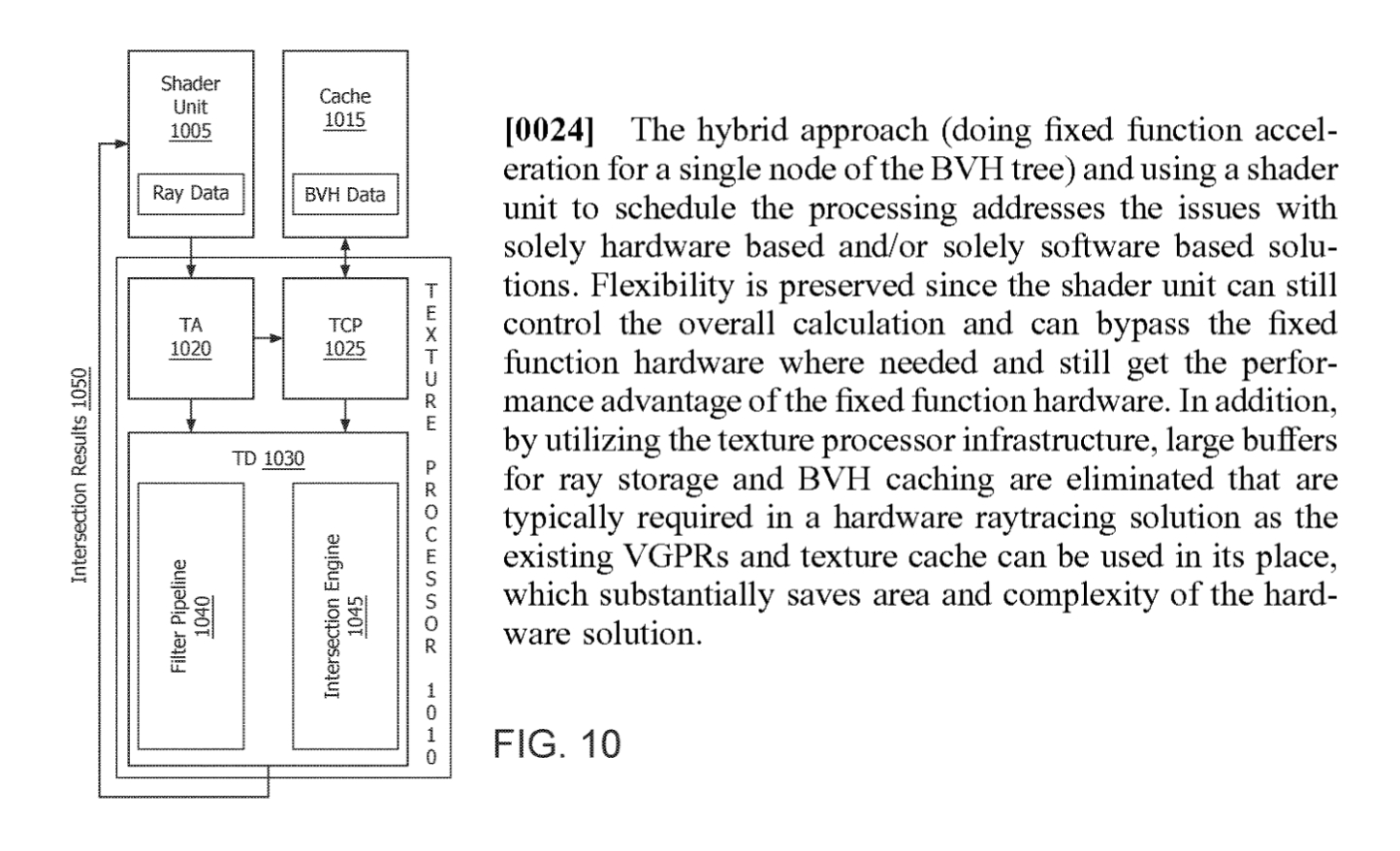

So the company developed its hybrid solution. The setup described in this patent application uses a mix of dedicated hardware and existing shader units working in conjunction with software to enable real-time ray tracing without the drawbacks of the methods described above. Here's the company's explanation for how this system might work, as spotted by "noiserr" on the AMD subreddit:

It's worth noting that this application was filed a year-and-a-half ago; AMD might have developed a new ray tracing system in the interim. But right now it seems like the company doesn't want to go the exact same route as Nvidia, which included dedicated ray tracing cores in Turing-based GPUs, and would rather use a mix of dedicated and non-dedicated hardware to give devs more flexibility.
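For readers who want a feel for what "a mix of dedicated and non-dedicated hardware" could look like, here's a minimal sketch in Python. It rests on an assumption the article doesn't confirm: that programmable shader code owns the traversal loop and decides which node to visit next, while a fixed-function block only answers "does this ray hit this box?" The names `Node`, `intersect_unit`, and `shader_traverse` are hypothetical, and leaf boxes stand in for real triangles to keep the example short.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec                                   # minimum corner of the bounding box
    hi: Vec                                   # maximum corner of the bounding box
    children: List["Node"] = field(default_factory=list)
    prim_id: Optional[int] = None             # set on leaves only


def intersect_unit(origin: Vec, direction: Vec, node: Node) -> Optional[float]:
    """Stand-in for the dedicated hardware: slab test of one ray against one box.

    Returns the entry distance along the ray, or None on a miss.
    """
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, node.lo, node.hi):
        if d == 0.0:
            # Ray is parallel to this axis: it must already lie inside the slab.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
    return t_near if t_near <= t_far else None


def shader_traverse(origin: Vec, direction: Vec, root: Node):
    """Stand-in for the programmable part: owns the stack and the visiting order."""
    best = (float("inf"), None)               # (distance, primitive id)
    stack = [root]
    while stack:
        node = stack.pop()
        t = intersect_unit(origin, direction, node)
        if t is None or t >= best[0]:
            continue                          # miss, or farther than the best hit
        if node.prim_id is not None:
            best = (t, node.prim_id)          # leaf: record the closer hit
        else:
            stack.extend(node.children)       # inner node: schedule its children
    return best


# Two leaf boxes along the x axis under one root.
leaf_a = Node((2, -1, -1), (3, 1, 1), prim_id=0)
leaf_b = Node((5, -1, -1), (6, 1, 1), prim_id=1)
root = Node((2, -1, -1), (6, 1, 1), children=[leaf_a, leaf_b])

print(shader_traverse((0, 0, 0), (1, 0, 0), root))  # (2.0, 0)
```

Because the loop lives in ordinary code under this assumed split, a developer could change the visiting order or stop early without touching the box test, which is the sort of flexibility the article describes.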


Not that AMD's in much of a rush. The company's latest Navi-based graphics cards were announced without any hardware devoted to ray tracing, and AMD told us that it believes it will be a few years before real-time ray tracing catches on anyway. It might be a while before we see the solution described in this application--and that's assuming it ever makes its way to a real product.

Nathaniel Mott is a freelance news and features writer for Tom's Hardware US, covering breaking news, security, and the silliest aspects of the tech industry.


shmoochie Can't wait to see it implemented and benchmarked. So far, Nvidia has had to work directly with developers to optimize their ray tracing to any satisfactory level. It would be fantastic for everyone if AMD found a workaround that gives developers more flexibility.

spiketheaardvark Seems like dedicated hardware makes "balancing" the cards more difficult. Performance in games could swing widely depending on whether the game hits the right ratio of shader versus ray-tracing workloads. If you have more general-purpose hardware, it might not be quite as efficient, but results may be more consistent.

jimmysmitty

spiketheaardvark said: Seems like dedicated hardware makes "balancing" the cards more difficult. Performance in games could swing widely depending on whether the game hits the right ratio of shader versus ray-tracing workloads. If you have more general-purpose hardware, it might not be quite as efficient, but results may be more consistent.

Not shader vs ray tracing, rasterization vs ray tracing.

And while it is hard with new hardware it will still be the superior option as ray tracing always has been a performance killer. Even when Intel was working on Larrabee and was showing off ray tracing using it, and doing it better than AMD or nVidia at the time, they used a very old game and a lower resolution than we were used to at the time.

However eventually we will either find the balance, where RT gets used in some areas with rasterization being used everywhere else, or the hardware will evolve to a point where it is much easier to perform RT and nVidia/AMD/Intel and software devs will find a balance in working with it.

This is how it starts. Tessellation was something AMD pushed harder than nVidia, and at first very few devs wanted to use it because it killed performance in games. However, as time went on, hardware got better, nVidia and AMD both worked on it, and now it's used in almost every game.

bit_user

jimmysmitty said: Not shader vs ray tracing, rasterization vs ray tracing.

Actually, I think @spiketheaardvark meant what he said. The point being that if most of the time is spent in shading, then Nvidia's RT cores could sit idle, whereas if the scene is bottlenecked by ray-intersection tests, then shader cores could mostly sit idle.

So, I guess the idea is that you involve the shaders in more of the ray-tracing pipeline and then focus on adding more shaders with less area devoted to specialized ray-intersection hardware. This way, the shader cores are busy no matter whether the scene is shading-intensive or ray-intersection intensive.

spiketheaardvark

bit_user said: Actually, I think @spiketheaardvark meant what he said.

bit_user is correct. I was not referring to rasterization instead of ray tracing as a rendering method. A huge chunk of a GPU is the shader cores. With dedicated hardware, if you have a game that has a very heavy ray-tracing workload and insufficient resources to process it, those shaders (and a big part of your GPU) are spending a lot of time idle. Kinda like dropping a 2080 into an old Core 2 Duo machine, or a 750 into a machine with an overclocked 9900K.

So is the future of gaming GPUs a bunch of ASICs put together, or will they become more general (and thus more like a CPU)? AMD has been pushing toward more general compute for a long time. Nvidia has gone the opposite way with these ray-tracing cores.