What is Ray Tracing and Why Do You Want it in Your GPU?

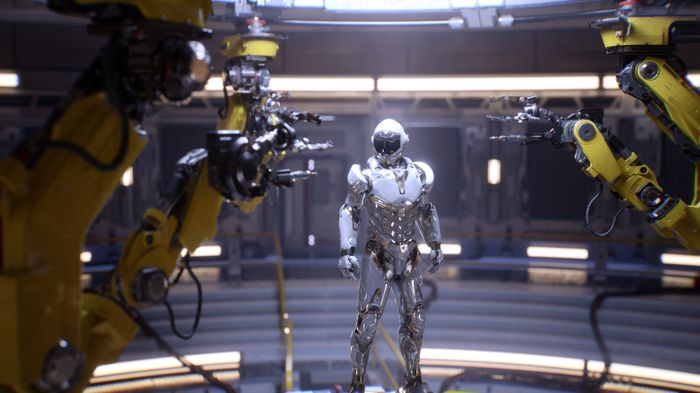

Back in 2018, Nvidia announced the Quadro RTX family, its then-newest generation of workstation-class Quadro graphics processors, which could handle ray tracing in real time. Nvidia will tell you that ray tracing is the holy grail of graphics rendering technology, but what, exactly, is it? And why is it so important?

To put it simply, ray-tracing is a rendering technique that produces photorealistic graphics with true-to-life lighting and shadow formations. The process accounts for the physical properties of rendered objects and their material composition to accurately simulate how light interacts with them, including the level of light reflection, refraction or absorption.

How it Works

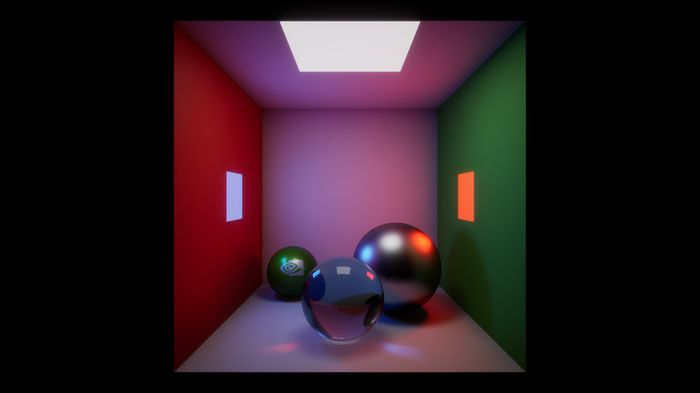

The ray-tracing rendering engine maps the trajectory of the light rays that reach the viewport (read: your eye) by working backwards. It casts rays, one for each pixel of your display, in a straight line from the viewport and records the point where each ray intersects a digital surface. The material properties of the object’s surface, such as its color, reflectiveness, and opacity, determine the color of the object and how it interacts with light rays. Because the rays propagate from your viewport, the lighting and shadows interact naturally when you change the viewing angle.
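As a rough illustration of the "one ray per pixel" idea, here is a minimal Python sketch. It assumes a pinhole camera at the origin looking down the negative z axis and a scene made of a single sphere; the function names `ray_sphere_hit` and `render` are made up for this example and do not come from any particular renderer.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None on a miss.

    Assumes `direction` is unit length. A ray that starts inside the
    sphere is treated as a miss to keep the sketch short.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * c          # discriminant of the ray/sphere quadratic
    if disc < 0.0:
        return None                 # the ray passes beside the sphere
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0.0 else None

def render(width, height, center=(0.0, 0.0, -3.0), radius=1.0):
    """Cast one primary ray per pixel (hypothetical single-sphere scene)."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Map the pixel centre to [-1, 1] on an image plane at z = -1.
            x = (i + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / height * 2.0
            length = math.sqrt(x * x + y * y + 1.0)
            direction = (x / length, y / length, -1.0 / length)
            hit = ray_sphere_hit((0.0, 0.0, 0.0), direction, center, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows
```

Calling `print("\n".join(render(21, 21)))` marks with `#` the pixels whose rays hit the sphere. A real renderer would go on to shade each hit point using the surface's material properties rather than printing a character.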

Ray tracing calculates how light rays would bounce off surfaces. It also determines where shadows would form, and whether light would reflect from another direction to illuminate that space. As a result, ray-traced graphics can produce shadows with soft, smooth edges—especially when there’s more than one light source in the scene.
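The shadow test described above can be sketched by firing a second ray from the surface point toward each light and checking whether anything blocks it. The scene below (a sphere floating above a floor, lit by two point lights) and the helper names `segment_blocked` and `lit_fraction` are assumptions made purely for illustration.

```python
import math

def segment_blocked(point, light, center, radius):
    """True if a sphere blocks the straight path from `point` to `light`."""
    to_light = tuple(l - p for l, p in zip(light, point))
    dist = math.sqrt(sum(v * v for v in to_light))
    d = tuple(v / dist for v in to_light)           # unit shadow-ray direction
    oc = tuple(p - c for p, c in zip(point, center))
    b = 2.0 * sum(di * oi for di, oi in zip(d, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0.0:
        return False                                # shadow ray misses the sphere
    t = (-b - math.sqrt(disc)) / 2.0
    return 1e-6 < t < dist                          # hit lies between point and light

def lit_fraction(point, lights, center, radius):
    """Fraction of lights that can see `point` (0.0 = full shadow)."""
    visible = sum(not segment_blocked(point, l, center, radius) for l in lights)
    return visible / len(lights)

# Hypothetical scene: floor at y = 0, sphere above it, two lights overhead.
SPHERE_CENTER, SPHERE_RADIUS = (0.0, 1.0, 0.0), 0.5
LIGHTS = [(-1.0, 4.0, 0.0), (1.0, 4.0, 0.0)]
```

With these values, a floor point directly under the sphere sees neither light, a point slightly to the side sees only one, and a point far away sees both. That intermediate band, where only some of the lights are visible, is what gives multi-light shadows their softer edges.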

Ray tracing also has the distinct advantage of being able to simulate transparent materials such as glass or water, and how light refracts when it passes through such objects.
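Refraction is usually modeled with Snell's law, which relates the angle of the incoming ray to the angle of the bent ray through the refractive indices of the two materials. The sketch below uses the common vector form of that law; the function name `refract` and the index values (about 1.0 for air, 1.33 for water, 1.5 for glass) are illustrative assumptions, not taken from any specific engine.

```python
import math

def refract(direction, normal, n1, n2):
    """Bend a unit `direction` crossing from refractive index n1 into n2.

    `normal` is unit length and points against the incoming ray.
    Returns None on total internal reflection.
    """
    eta = n1 / n2
    cos_i = -sum(d * n for d, n in zip(direction, normal))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)      # Snell's law, squared
    if sin2_t > 1.0:
        return None                                 # no transmitted ray exists
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * d + (eta * cos_i - cos_t) * n
                 for d, n in zip(direction, normal))

# A ray hitting a water surface (normal pointing up) at 45 degrees.
incoming = (math.sin(math.radians(45)), -math.cos(math.radians(45)), 0.0)
bent = refract(incoming, (0.0, 1.0, 0.0), 1.0, 1.33)
```

Here `bent` points more steeply downward than `incoming`, which is the familiar effect that makes a straw look kinked in a glass of water. A ray tracer continues tracing along the bent direction to find what is visible through the material.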

No Rasterization, No Shaders

Traditional computer graphics rely on a technique called rasterization, which converts a 3D scene into a 2D image for your monitor. Rasterized graphics then rely on complex shaders to give the scene a sense of depth. With ray-traced graphics, the depth is an integral part of the scene and you don’t need a shader to bring it to life.
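For contrast, the core of the 3D-to-2D conversion in rasterization is a perspective projection of each vertex onto the screen. The snippet below is a simplified sketch of that one step only: it assumes a camera at the origin looking down the negative z axis, ignores aspect ratio and clipping, and uses a made-up function name, `project_point`.

```python
def project_point(point, focal_length, width, height):
    """Project a 3D camera-space point to 2D pixel coordinates.

    Returns None for points at or behind the camera plane.
    """
    x, y, z = point
    if z >= 0.0:
        return None                      # the camera looks down -z
    # Perspective divide: farther points land closer to the screen centre.
    ndc_x = focal_length * x / -z
    ndc_y = focal_length * y / -z
    # Map [-1, 1] to pixel coordinates, with y growing downward on screen.
    px = (ndc_x + 1.0) * 0.5 * width
    py = (1.0 - ndc_y) * 0.5 * height
    return px, py
```

Once every vertex has been flattened like this, the depth information survives only as a per-pixel value, which is why rasterized scenes lean on extra shading passes for effects such as shadows and reflections.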

Computationally Intensive

Ray tracing technology isn’t new, and we can almost guarantee that you’ve encountered ray-traced graphics before. Hollywood uses ray tracing in movies to blend digital special effects seamlessly with live-action film. However, ray tracing is extremely computationally intensive, and it can take days or even weeks to process those scenes with a traditional render farm, which is why ray-traced graphics for games exist only in pre-rendered cut-scenes.
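To get a feel for the workload, a back-of-the-envelope count of rays per second helps. The calculation below is only arithmetic; the figure of four rays per sample (one primary ray plus one shadow, one reflection, and one refraction ray) is a hypothetical assumption, and production film renderers typically use far more.

```python
def rays_per_second(width, height, fps, samples_per_pixel=1, rays_per_sample=1):
    """Total rays a renderer must trace each second for a given target."""
    return width * height * fps * samples_per_pixel * rays_per_sample

# One primary ray per pixel at 1080p and 60 frames per second.
primary_only = rays_per_second(1920, 1080, 60)

# Hypothetical: each sample also spawns shadow, reflection and refraction rays.
with_secondary = rays_per_second(1920, 1080, 60, rays_per_sample=4)
```

Under these assumptions, `primary_only` is about 124 million rays per second and `with_secondary` is close to half a billion, and each of those rays still has to be tested against the scene geometry.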


Nvidia has been pushing ray-tracing technology for at least a decade. In 2008, it acquired a ray-tracing company called RayScale, and two years later at Siggraph 2010, it showed the first interactive ray-tracing demo running on Fermi-based Quadro cards. After witnessing the demo first-hand, we surmised that we would see real-time ray-tracing capability “in a few GPU generations.”

A few generations turned into six generations, but Nvidia finally achieved real-time ray tracing with the Quadro RTX lineup. Following the release of the original Quadro RTX family of professionally oriented GPUs, the consumer-centric GeForce RTX 20 Series brought real-time ray tracing hardware to gamers. Those capabilities grew stronger with the arrival of the GeForce RTX 30 Series (Ampere) in 2020. We should also mention that some older Pascal-based GPUs, like the GeForce GTX 1080 Ti, support slower, software-based ray tracing.

However, Nvidia isn't the only one with hardware ray tracing support. AMD's current-generation Radeon RX 6000 Series (RDNA 2) GPUs support it as well. In addition, Intel's new Arc family of laptop and desktop GPUs natively supports hardware ray tracing.

Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.


Martell1977

I would surmise that this will be especially important in VR games/programs. I wonder what effect this new design will have on power consumption and if it will be overclockable as part of OCing the GPU core, individually or even at all.

AMD's response to this should make things interesting.

bloodroses

So, current graphics are raster based. Now that real-time ray tracing is coming, will graphics completely switch to that or will they be a hybrid of it?

What's really kind of interesting is that Intel's Larrabee was a flop because it couldn't handle raster graphics well. Ray-tracing was a different story though. With the acquisition of ex-AMD employee Raja Koduri, they could be in a good position to become a major GPU threat.

bit_user

> 21230640 said: So, current graphics are raster based. Now that real-time ray tracing is coming, will graphics completely switch to that or will they be a hybrid of it?

Nvidia already announced hybrid techniques, such as RT-based anti-aliasing. Plus, there's the obvious possibility of using RT just for certain aspects like indirect illumination and reflection.

bit_user

> 21230706 said: I was under the impression that Vega supported ray tracing.

All GPUs can do it in software, but not very fast (better than CPUs, but still not good enough for pure raytraced gaming).

As far as we know, Vega has no hardware engine like the RT cores.

jimmysmitty

> 21230640 said: So, current graphics are raster based. Now that real-time ray tracing is coming, will graphics completely switch to that or will they be a hybrid of it?
>
> What's really kind of interesting is that Intel's Larrabee was a flop because it couldn't handle raster graphics well. Ray-tracing was a different story though. With the acquisition of ex-AMD employee Raja Koduri, they could be in a good position to become a major GPU threat.

This is nothing new. Ray tracing has been around and talked about for a long time. As you mentioned, Larrabee was a beast for ray tracing; however, at the time (circa 2008) AMD and nVidia were not so much. It's a very compute-heavy way to do graphics.

They were supposed to move to a mix of both a while ago though. Back in 2011/2012 when DX11 was new they were showing it off again as the next big thing. However as said both AMD and nVidia still couldn't push 60FPS ray tracing.

There is a lot of talk of moving to ray tracing. There was a lot of talk then too. However, unlike before, when it was just a possible add-in to DirectX, Microsoft now has an API directly tied to it, DXR. nVidia has made RTX, which is a hardware-based tie to DXR. As for how it will work: any DX12 GPU will support DXR, per Microsoft, but they will be doing it via software. The Titan V and this new GPU from nVidia will support RTX, which means they will be able to do DXR via software and hardware.

Guess we now wait to see AMD's answer to this. They are not particularly competitive in the GPU market and really need to at least keep up with nVidia tech-wise.

bit_user

> 21230415 said: Rasterized graphics then rely on complex shaders to give the scene a sense of depth. With ray-traced graphics, the depth is an integral part of the scene and you don’t need a shader to bring it to life.

Raytracing can still utilize shaders of various sorts. The "classic" type of shader is a little program used to evaluate the color of an object, at a given point on its surface. While these would work a bit differently, for ray tracers, it's still legit to procedurally texture objects vs. using texture maps.

PBR is an alternative, but not one I foresee completely replacing procedural shaders.

https://en.wikipedia.org/wiki/Physically_based_rendering

Giroro

Throwing custom hardware and raw horsepower at ray tracing is not a good solution, because it doesn't solve the fact that ray tracing is intrinsically too computationally expensive.

A game that uses ray tracing is always going to be limited to simpler scenes at a slower framerate than the current methods of 'faking it', which were designed from an efficiency-first perspective. It's like it doesn't matter how hard you push a heavy block to slide it across the ground, it's never going to be as fast or as easy as rolling a cylinder.

We either need to find a far more efficient algorithm for computing rays, or work needs to start on inventing an entirely new method of realistically lighting 3D scenes.

bit_user

> 21231828 said: Throwing custom hardware and raw horsepower at Ray tracing is not a good solution, because it doesn't solve the fact that Ray Tracing is intrinsically too computationally expensive.
>
> A game that uses ray tracing is always going to be limited to simpler scenes at a slower framerate than the current methods of 'faking it', which were designed from an efficiency-first perspective. It's like it doesn't matter how hard you push a heavy block to slide it across the ground, its never going to be as fast or as easy as rolling a cylinder.

One way to look at it is like fixed-point vs. floating-point arithmetic. For a long time, floating point was relegated to scientific computation. Eventually, it became cheap enough to put in mass-market CPUs and it's now used in lots of domains (audio processing, graphics, etc.) without a second thought.

But you're off the mark, on a few points. First, ray tracing scales with scene complexity better than rasterization. More importantly, it handles realism better than traditional rasterizer hacks. Things like reflection, refraction, depth-of-field, motion blur, area lighting, and global illumination are much better and more naturally handled by ray tracing. Taken together, what this means is that it's actually the easiest approach, when you're trying to achieve a high degree of complexity and realism.