8-bit PC Hercules Graphics Card from 1982 gets revisited: the IBM-era Hercules card helped shape the modern graphics we know today

A Herculean leap in graphics.

If you've been around long enough to remember a time before modern-day GPUs were a thing, the name "Hercules Graphics Card" (HGC) might ring a bell. It quietly revolutionized business PC graphics in the early '80s, ushering in a new era of innovation in display hardware; you could say Hercules walked so Nvidia and ATI could run. It looks primitive by today's standards, but it's always interesting to look back at how impactful old technology was when even the concept of a GPU was abstract. Let's go on a bit of a retrospective journey, courtesy of The 8-Bit Guy on YouTube, who explains not only the legend of Hercules in his video but also the inner workings of the actual hardware.

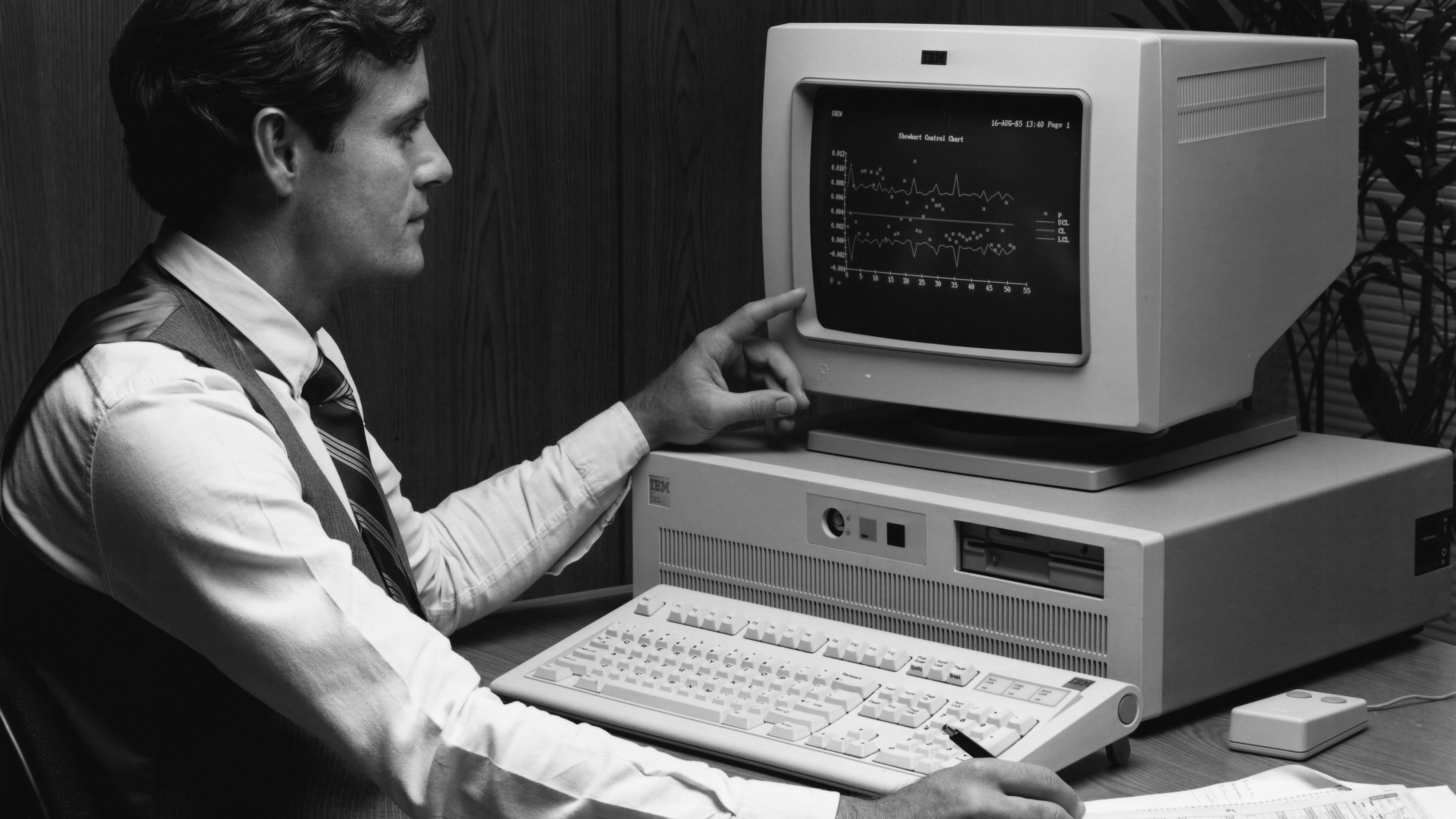

Long before modern GPUs arrived, IBM PCs dominated the corporate world with unmatched credibility. Businesses mostly needed to sift through spreadsheets and charts, handle basic accounting, and process large amounts of text. For most of that, true graphics weren't required; numbers and letters were enough, and those were far simpler to display. Think of an office printer: it's seldom used for detailed pictures and mostly just prints text.

At the time, IBM PCs offered two display options: the Color Graphics Adapter (CGA) and the Monochrome Display Adapter (MDA). CGA could show color, but its maximum resolution was 640×200, so text looked coarse. MDA, by comparison, was much sharper at 720×350. However, it was limited to monochrome and couldn't display graphics at all, which made it cheaper and, thus, more widespread. Enter the Hercules Graphics Card, which offered the best of both worlds.
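The gap in text quality comes down to simple arithmetic. A text screen is a grid of character cells, and each cell is a fixed block of pixels. The sketch below assumes the commonly cited cell sizes of 9×14 pixels for MDA and 8×8 pixels for CGA's 80-column text mode. The function name is purely illustrative.

```python
# A text screen is a grid of character cells; each cell is a fixed block of pixels.
# Cell sizes are the commonly cited values for 80-column text mode (an assumption here).

def text_mode_resolution(columns: int, rows: int, cell_w: int, cell_h: int) -> tuple[int, int]:
    """Return the (width, height) in pixels implied by a character grid."""
    return columns * cell_w, rows * cell_h

# MDA: 80x25 characters in 9x14-pixel cells
mda = text_mode_resolution(80, 25, 9, 14)
# CGA: 80x25 characters in 8x8-pixel cells
cga = text_mode_resolution(80, 25, 8, 8)

print("MDA:", mda)  # MDA: (720, 350)
print("CGA:", cga)  # CGA: (640, 200)
print("Pixels per character, MDA vs CGA:", 9 * 14, "vs", 8 * 8)
```

Each MDA glyph gets almost twice as many pixels (126 versus 64). That extra detail is why MDA text looked so much crisper.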

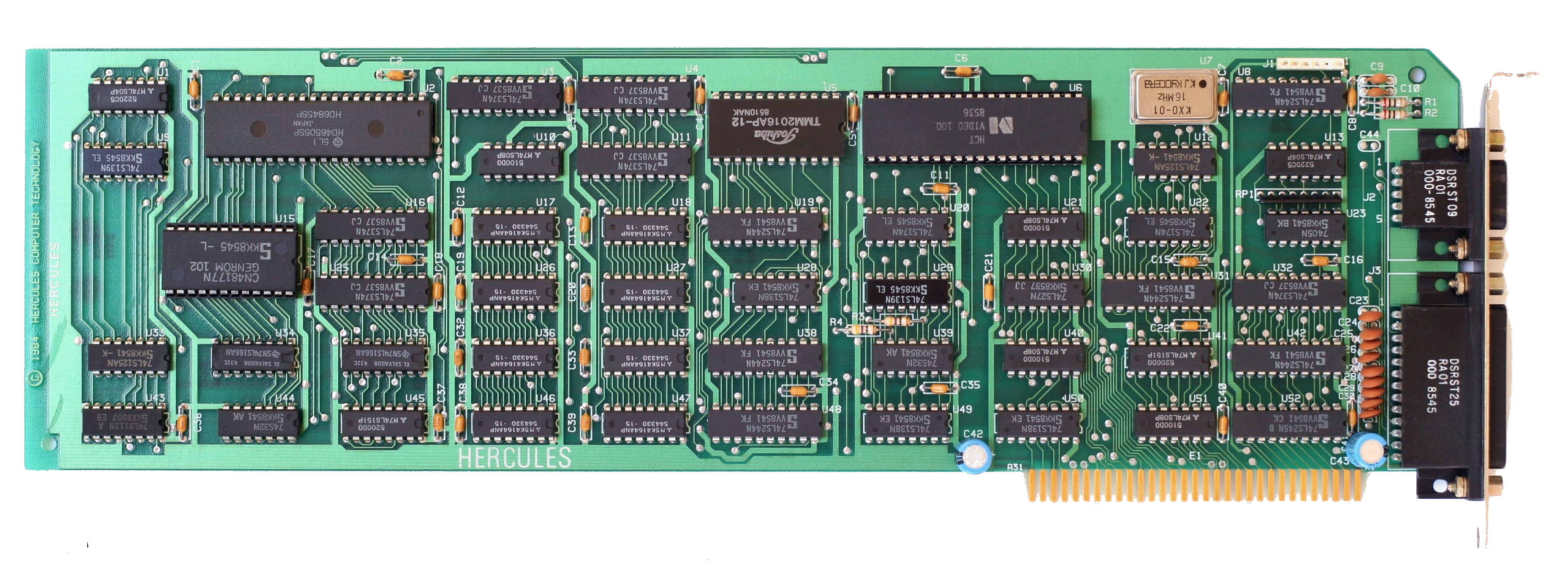

It combined MDA's sharp text mode with a pixel-addressable graphics mode like the one CGA had introduced, preserving the strengths of both. You got the same crisp text and backward compatibility with existing MDA software and monitors. You also got high-resolution monochrome graphics at 720×348 pixels. On top of that, its graphics mode used a 32KB frame buffer, effectively an early form of VRAM, that programs could address directly. No MDA-compatible adapter had offered that before.
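To make "directly address video memory" concrete, here is a minimal sketch of how a program might locate a pixel in a Hercules graphics page. It assumes the widely documented layout of the 720×348 mode:

- one bit per pixel, with 90 bytes per scanline;
- scanlines interleaved across four 8KB banks within each 32KB page;
- on real hardware, the first page sits at segment B000h.

A Python bytearray stands in for video memory, and the function names are hypothetical.

```python
# Sketch of Hercules 720x348 graphics-mode addressing (1 bit per pixel).
# Scanlines are interleaved across four 8KB banks: line y lives in bank y % 4.
WIDTH, HEIGHT = 720, 348
BYTES_PER_LINE = WIDTH // 8    # 90 bytes hold one 720-pixel scanline
BANK_SIZE = 0x2000             # 8KB per bank
PAGE_SIZE = 4 * BANK_SIZE      # 32KB per graphics page

def pixel_address(x: int, y: int) -> tuple[int, int]:
    """Return (byte offset within the page, bit mask) for pixel (x, y)."""
    if not (0 <= x < WIDTH and 0 <= y < HEIGHT):
        raise ValueError("pixel outside the 720x348 screen")
    offset = BANK_SIZE * (y % 4) + BYTES_PER_LINE * (y // 4) + x // 8
    mask = 0x80 >> (x % 8)     # the leftmost pixel of each byte is bit 7
    return offset, mask

def set_pixel(page: bytearray, x: int, y: int) -> None:
    """Turn on one pixel by OR-ing its bit into the page."""
    offset, mask = pixel_address(x, y)
    page[offset] |= mask

page = bytearray(PAGE_SIZE)    # stand-in for one page of video memory
set_pixel(page, 0, 0)          # top-left corner
set_pixel(page, 719, 347)      # bottom-right corner

print(pixel_address(0, 1))     # (8192, 128): line 1 starts in the second bank
print(pixel_address(719, 347)) # (32405, 1)
print(WIDTH * HEIGHT // 8, "of", PAGE_SIZE, "bytes used")  # 31320 of 32768 bytes used
```

Because of the interleave, consecutive scanlines sit 8KB apart in memory rather than 90 bytes apart. That is one reason drawing code for the card had to compute offsets instead of simply stepping through a linear buffer.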

Hercules didn't invent the frame buffer concept, but it was the first MDA-compatible adapter with that power. Before it, an MDA card could only tell the monitor which characters to display and where to place them. For each screen position, it stored a character code plus an attribute byte, and a font ROM on the card turned those codes into pixels. That is compact, but it can only ever show the shapes built into the font. Hercules gave programs its own 32KB of memory for a bitmap, so they could write pixel data directly to it. That enabled far more complex graphics, such as charts, lines, and images, than characters alone could provide.
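The contrast between the two approaches shows up in the memory arithmetic:

- A full 80×25 text screen stores a character code plus an attribute byte per cell, 4,000 bytes in all.
- One 720×348 bitmap page at one bit per pixel needs 31,320 bytes.

In the sketch below, the names are hypothetical and a Python bytearray stands in for the card's memory. The attribute value 0x07 is the standard "normal text" setting on MDA-compatible cards.

```python
# Sketch of an MDA-style text buffer: two bytes per screen cell.
COLUMNS, ROWS = 80, 25
NORMAL = 0x07   # attribute byte for ordinary (non-bold, non-underlined) text

def write_text(buffer: bytearray, row: int, col: int, text: str, attr: int = NORMAL) -> None:
    """Store a string as (character code, attribute) pairs starting at (row, col)."""
    for i, ch in enumerate(text):
        offset = 2 * (row * COLUMNS + col + i)
        buffer[offset] = ord(ch)     # character code (ASCII only in this sketch); the card's ROM supplies the glyph
        buffer[offset + 1] = attr    # how that character should be displayed

text_buffer = bytearray(COLUMNS * ROWS * 2)
write_text(text_buffer, 0, 0, "HELLO")

bitmap_page = 720 * 348 // 8   # 1 bit per pixel in Hercules graphics mode
print(len(text_buffer), "bytes for a full text screen")   # 4000 bytes for a full text screen
print(bitmap_page, "bytes for one bitmap page")           # 31320 bytes for one bitmap page
```

Text mode is nearly eight times more compact, but it can only ever show the 256 shapes in the font. A bitmap page costs more memory and can show anything.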

The video then discusses how many manufacturers cloned Hercules cards, to the point that knockoffs outnumbered genuine boards. Clones often combined CGA and Hercules on a single board, with DIP switches to select the mode. Even some later ATI cards offered dual-mode support, allowing them to emulate both CGA and Hercules. Our host also shows the Hercules Graphics Card Plus, the next-gen version of the same concept. It has double the memory, a smaller overall footprint, and dedicated "RAM Font" support.

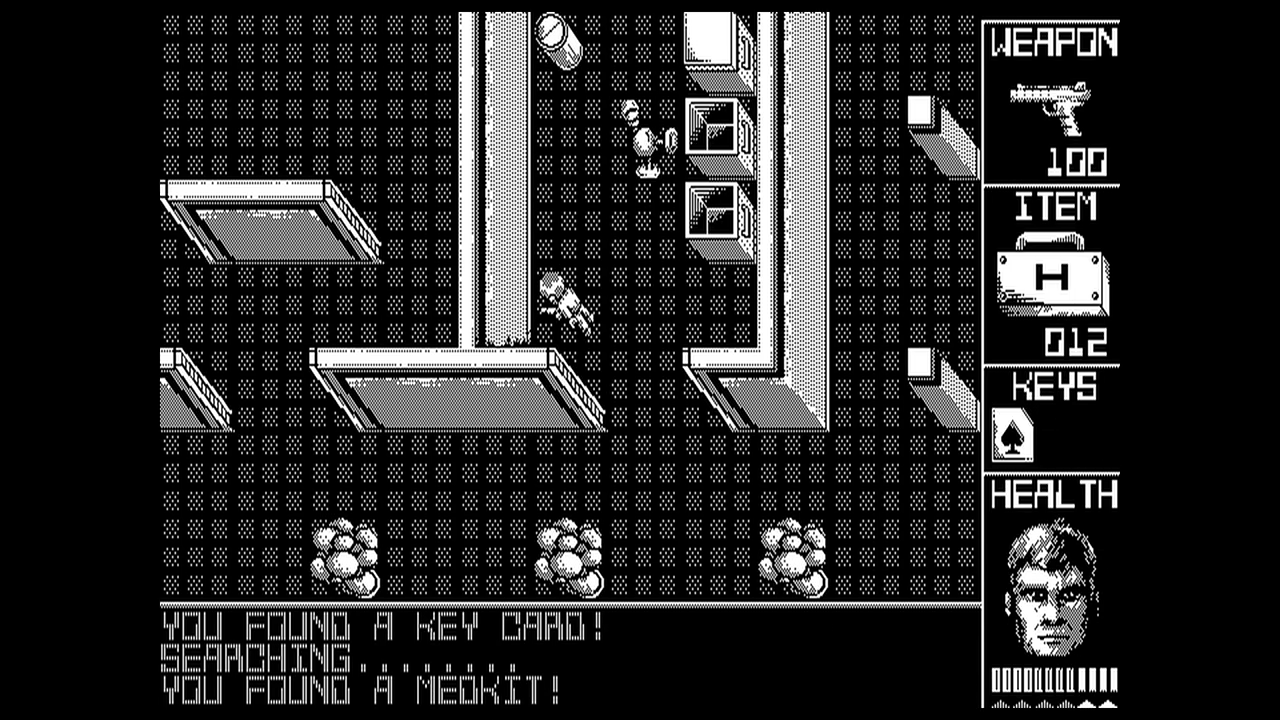

Beyond businesses, the HGC made quite a stir in the gaming community, with many games ported to the new standard. Some required CGA emulation; others were essentially glorified CGA games stretched to the higher resolution, resulting in jagged lines. But a few were truly optimized for Hercules, such as SimCity and Microsoft Flight Simulator 3. According to MobyGames, 536 games supported Hercules, while more than 1,900 supported CGA.


The narrator also shows his own games that supported Hercules but relied on CGA techniques, so they didn't look all that great. He recently overhauled two of them, Planet X3 and Attack of the PETSCII Robots. The results show a "night and day difference" between forced scaling (stretching 200 lines of vertical resolution to roughly 350) and proper native support. The 8-Bit Guy covers many more games in his video, using a real MDA monitor from the era, so make sure to check it out.
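Why does forced scaling look so rough? Stretching 200 lines onto 348 is not a whole-number ratio, so some source lines must be drawn twice and others only once. The sketch below uses simple nearest-neighbor row mapping, one common way to do it. We don't know exactly which method any particular game used, and the names here are hypothetical.

```python
# Nearest-neighbor vertical stretch: each output scanline copies one source line.
SRC_LINES, DST_LINES = 200, 348   # CGA-style frame height -> Hercules frame height

def source_line(dst_line: int) -> int:
    """Map an output scanline to the source line it copies (rounding down)."""
    return dst_line * SRC_LINES // DST_LINES

mapping = [source_line(y) for y in range(DST_LINES)]
copies = [mapping.count(line) for line in range(SRC_LINES)]

print("each source line appears", min(copies), "to", max(copies), "times")  # 1 to 2 times
print(copies.count(2), "lines doubled,", copies.count(1), "drawn once")     # 148 lines doubled, 52 drawn once
```

That irregular mix of doubled and single lines turns smooth diagonals into uneven staircases. Native 720×348 artwork avoids the problem entirely.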

Fast-forward a few years to the tail end of the 16-bit era in the early '90s, when Windows 3.1 had just come out. It was barely usable on CGA but looked much better on Hercules. Our host jokingly points out the only downside: the lack of color, which would make Solitaire difficult to play. Earlier, in 1987, Hercules had released the InColor card, which added color to the previously monochrome-only Hercules lineup. However, only six games supported it, and IBM's competing EGA standard outshone it in every way.

That was a trip down memory lane featuring a true OG of the graphics world. Without the bold and creative detour that the Hercules card represented, the PC industry might’ve been a little less daring. Like many a standard of those times, it served as a stepping stone in the right direction, marred by flaws but necessary to progress. Competition is often cited as the key to innovation, and Hercules proved itself a worthy combatant through the lens of history.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

-

call101010: meh , back in these days no one looked for PCs to game on ... ANY console was FAR better than Anything PC ... more over Other PCs like Amiga had 4000 colors and higher resolution and SOUND.

Windows PC's started competing in gaming AFTER Soundblaster card was released AND the VGA Cards . B4 that no one even looked at them for gaming.

bit_user:

> call101010 said: Amiga had 4000 colors and higher resolution and SOUND.

Well, you couldn't match the clarity and sharpness of monochrome. Anything color had a shadow mask. Even worse were the machines which used televisions for color monitor, because composite video had lots of problems with color fringing, dot crawl, and even luma resolution was quite limited.

Amiga did release a high-res monochrome monitor, but that wasn't until 1988. As the article noted Hercules came out in 1982. You really can't compare tech across a gap like that. Those are two different epochs in personal computing.

Evildead_666: Did AI write this ?

Attack of the PETSCII robots.

Either that or there is absolutely no proofreading any more....

Evildead_666:

> call101010 said: meh , back in these days no one looked for PCs to game on ... ANY console was FAR better than Anything PC ... more over Other PCs like Amiga had 4000 colors and higher resolution and SOUND.
>
> Windows PC's started competing in gaming AFTER Soundblaster card was released AND the VGA Cards . B4 that no one even looked at them for gaming.

MCGA was great for gaming, especially if it was all you had.

256 colours in 320x200 :)

call101010:

> bit_user said: Well, you couldn't match the clarity and sharpness of monochrome. Anything color had a shadow mask. Even worse were the machines which used televisions for color monitor, because composite video had lots of problems with color fringing, dot crawl, and even luma resolution was quite limited.
>
> Amiga did release a high-res monochrome monitor, but that wasn't until 1988. As the article noted Hercules came out in 1982. You really can't compare tech across a gap like that. Those are two different epochs in personal computing.

Amiga 1000 was released in 1985 , 640x256 resolution and 12bit colors (4000) had VGA out for monitors , and composite for TV . at that time the PC was stuck with CGA gaming and EGA was a new thing on PCs with 256 colors only...

bit_user:

> call101010 said: Amiga 1000 was released in 1985 , 640x256 resolution and 12bit colors (4000) had VGA out for monitors ,

I get it, but if you want clear text or line graphics, then color is actually counterproductive. When you want to turn on a pixel on a color monitor, it's not just a blob of light, it's fragmented into RGB dots. The shadow mask is there to ensure the red gun only hits the red phosphors, and same goes for green and blue, but it breaks up the continuity of the image, and yet the edges are no sharper because the shadow mask is (usually) much finer than the pixel resolution of the image.

[Image: shadow mask close-up of a cursor]

I have some personal experience with the matter. My dad bought a PC in the mid-80's and went for Hercules, instead of CGA or EGA. I hated it, because obviously I wanted color. He was more interested in CAD, and for that you want sharp line graphics, and he was right.

Later, when I started working, I had a chance to see some old 19" Sun black & white monitors that were run at 1280x1024. They were incredibly clear and sharp. I had started using tiny fonts, so I could fit more on a 1600x1200 screen, but issues like convergence were my bane. These older Sun monitors had clearer text at that resolution than my PC at the time.

Amiga obviously knew this, which is why they later introduced a monochrome monitor of their own, as I mentioned above.

> call101010 said: and composite for TV . at that time the PC was stuck with CGA gaming and EGA was a new thing on PCs with 256 colors only...

EGA was actually 16-colors out of a 64-color palette. I would later get one of those (used), when my mom got the above-mentioned machine. Massive step forward, for someone who valued color and gaming, but still no VGA.

PCs didn't reach 256 colors until VGA came out in 1987, with the IBM PS/2. But, it didn't really go mainstream until a couple years later. I think PS/2 was pretty expensive, and probably not common outside of schools and businesses. My dad later got a 386 with a XGA (Tseng ET4000) that could manage 256 colors at up to 1024x768 on our tiny 13" CRT monitor.

magbarn: I still remember playing maniac mansion on a IBM PC with a Hercules card on the IBM monochrome monitor with the slowest phosphors that you've ever seen.

derwood5555: I remember it well. I was 17 at the time and I worked an after school job in the testing department at Hercules. I tested each board after assembly. Did that for about 6 months before graduating high school in 1984. Hercules was a good company to work for. Kevin and Van were in charge. They had a good product and Van was always coming up with improvements on his own designs.

call101010:

> bit_user said: PCs didn't reach 256 colors until VGA came out in 1987, with the IBM PS/2. But, it didn't really go mainstream until a couple years later. I think PS/2 was pretty expensive, and probably not common outside of schools and businesses. My dad later got a 386 with a XGA (Tseng ET4000) that could manage 256 colors at up to 1024x768 on our tiny 13" CRT monitor.

It puzzles me how backward in technology PC's were compared to their competitors back in the 1980's and still they survived and all other Personal computers disappeared.

I think they owe their success to Microsoft who ignored all other platforms and focused on IBM PC's ...

Sun and Dec Alpha and Amiga and Atari ST , all were 5 years ahead and still they failed to stay.

True Sun and Dec Alphas were expensive , but I dont get it how Atari ST and Amiga failed to eliminate PC's. Maybe because they allowed third party compatible PC ? but again that hurt IBM personal computer department as well .

GeorgeLY: Additionally Hercules had much longer shelf life than other adapters: it had frame buffer at the different address (0xB0000 if I remember correctly) than mainstream cards, which allowed it to be installed alongside them, thus resulting in two monitors setup. Many developers had them for two monitor debugging. I bought one in 1995 for around $100 specifically for that.