Arm's New Cortex-M55 and Ethos-U55 Make Leaps for On-Device AI-Inferencing

The offerings allow IoT devices to do AI inferencing without connecting to the internet.

Arm, which designs embedded processors and CPUs for a wide range of mobile devices, today announced its new Cortex-M55 processor design and Ethos-U55 neural processing unit (NPU). Arm says both deliver more AI processing power than their predecessors.

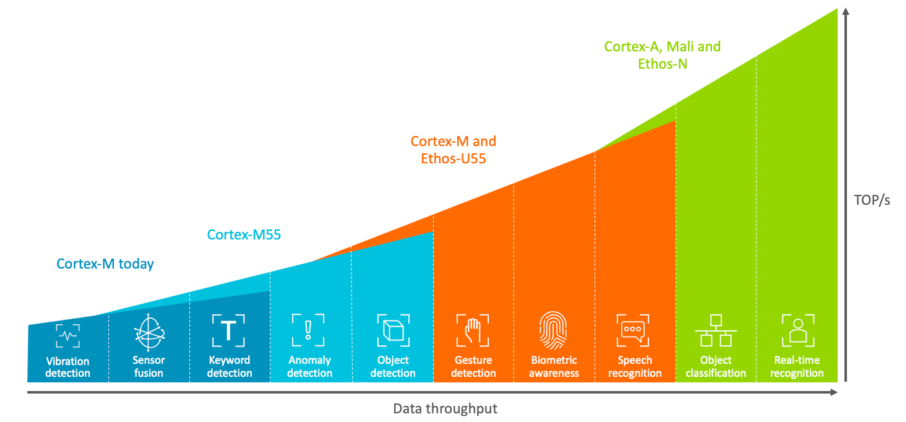

The Cortex-M55 CPU focuses on machine learning performance. Compared with previous Cortex-M generations, it offers five times the digital signal processing (DSP) performance and 15 times the machine learning inferencing performance. This will allow small Internet of Things (IoT) devices to perform their own inferencing without a network connection, something that was previously limited mostly to more powerful desktops or bigger, purpose-built devices.

Paired with a Cortex-M55, the Ethos-U55 NPU offers a further jump in performance that enables more complex tasks, such as gesture detection and speech recognition. Together, the two designs net a 480-fold improvement in machine learning performance over their predecessors, which is the kind of jump needed to make on-device inferencing practical for a variety of AI tasks. The Ethos-U55 is customizable, and because it comes as a complete package, it fits into much smaller, low-power applications.
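To see how the 15-fold and 480-fold figures might relate, here is a rough back-of-the-envelope sketch. It assumes the CPU and NPU gains simply multiply, which the article does not state outright; the function name `implied_npu_factor` is purely illustrative.

```python
def implied_npu_factor(combined_speedup: float, cpu_speedup: float) -> float:
    """Return the extra factor the NPU would need to supply,
    assuming (hypothetically) that the two gains multiply."""
    if cpu_speedup <= 0:
        raise ValueError("cpu_speedup must be positive")
    return combined_speedup / cpu_speedup


# Figures quoted in the article.
CPU_ML_SPEEDUP = 15.0        # Cortex-M55 alone vs. previous Cortex-M generations
COMBINED_ML_SPEEDUP = 480.0  # Cortex-M55 paired with Ethos-U55

npu_factor = implied_npu_factor(COMBINED_ML_SPEEDUP, CPU_ML_SPEEDUP)
print(f"Implied NPU contribution: {npu_factor:.0f}x")
```

Under that assumption, the NPU would account for roughly a 32-fold gain on top of the CPU's 15-fold improvement.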

“Enabling AI everywhere requires device makers and developers to deliver machine learning locally on billions, and ultimately trillions, of devices,” said Dipti Vachani, senior vice president and general manager, Automotive and IoT Line of Business, Arm. “With these additions to our AI platform, no device is left behind as on-device machine learning on the tiniest devices will be the new normal, unleashing the potential of AI securely across a vast range of life-changing applications.”

The Cortex-M series CPUs aren't manufactured by Arm itself. Instead, Arm licenses out the designs, and its partners bake them into their own silicon. This gives the designs a wider reach, and because they are highly customizable, device manufacturers can further tweak the silicon to optimize its performance within their own products.

Developments such as the Cortex-M55 and Ethos-U55 NPU will eventually lead to more small devices that are AI-capable. These designs are set to show up in various IoT devices, mobile phones and more.

One example could be a doorbell that's able to recognize your face or voice locally, without the need to transmit that data to the internet for remote inferencing. This would also address one of the main concerns about today's AI implementations. Without needing to transmit our data to remote servers for inferencing, we can rest knowing that our conversations stay inside our homes' walls.

Niels Broekhuijsen is a Contributing Writer for Tom's Hardware US. He reviews cases, water cooling and PC builds.

bit_user, quoting the article: "One example could be a doorbell that's able to recognize your face or voice locally, without the need to transmit that data to the internet for remote inferencing. This would also address one of the main concerns about today's AI implementations. Without needing to transmit our data to remote servers for inferencing, we can rest knowing that our conversations stay inside our homes' walls."

Oh, they'll still be networked. That's the whole point of smart devices: the doorbell cam recognizes who's at the door and sends a notification to your phone (via the cloud). And, when you enroll new faces for it to recognize, that data will go to the cloud, so that multiple devices can share it (among other reasons).

Pushing inferencing out to the edge is mostly a cost saving, so that device makers don't have to shoulder expensive fees for processing all of the devices' video in the cloud. The benefit to consumers is that you don't need as much bandwidth (although we're told 5G will solve that) and your device can still work when you're in a dead spot or have other connectivity issues.

Although this can be pro-privacy, I don't believe it will be. Device makers have too much to gain by scraping, collecting, mining, and selling people's data. Consumers also benefit from connectivity and from automatically getting updated deep learning models that better fit their device's usage.