Chinese Exascale Supercomputer Prototype Tested with AI Workloads

Chinese researchers test prototype of Phytium-powered supercomputer.

Chinese scientists have started testing a prototype of a locally developed exascale supercomputer with convolutional neural network (CNN) AI workloads. As reported by Next Platform, the Tianhe-3 machine uses Armv8-based Phytium 2000+ (FTP) and Matrix 2000+ (MTP) processors, which were primarily designed for traditional supercomputing with full FP64 precision.

Supercomputing and artificial intelligence are poised to become important competitive advantages for technologically advanced companies and countries in the coming years. Exascale supercomputers will be instrumental for traditional simulations, data analytics, and scientific discovery, whereas AI supercomputers will be used for various pathfinding tasks, such as drug discovery or space exploration, that do not necessarily require FP64 precision. To stay competitive, supercomputing sites will need to support both AI and HPC workloads, which is why modern supercomputers combine CPUs and GPUs for maximum flexibility.

Trailing their American rivals, Chinese developers are exploring multiple approaches to exascale systems, including the 'traditional' CPU + GPU architecture and hybrid processors with vector units. Yet another approach tested by Chinese researchers is the Tianhe-3 machine, which uses a proprietary processor/node architecture built around the Armv8-based Phytium 2000+ (FTP) and the Matrix 2000+ (MTP), reports Next Platform.

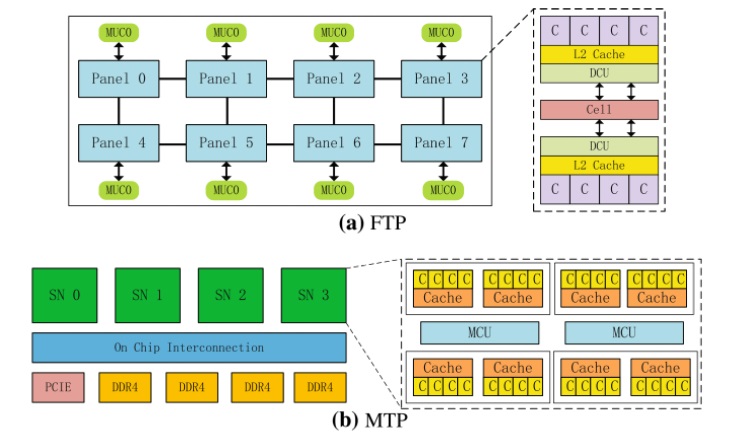

The Phytium-designed FTP has 64 cores distributed over eight eight-core 'panels', each featuring its own memory controller unit (MCU); the chip has 2MB of L2 cache per four cores and 32KB of L1 cache per core. The 64-core FTP operates at 2.20 GHz and delivers 614.4 FP64 GFLOPS. To put this number into context, Fujitsu's 48-core A64FX processor for the Fugaku supercomputer has a theoretical peak performance of 3.3792 FP64 TFLOPS. Meanwhile, the MTP has four 'super nodes', each featuring 32 cores and two MCUs. The report discloses the power consumption of neither the FTP nor the MTP, and it also omits the latter's peak performance.
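The gap between the two chips is easier to judge with a quick back-of-the-envelope calculation. The sketch below uses only the peak FP64 figures and core counts quoted above (the dictionary layout and the `per_core_gflops` helper are illustrative, not from the report): the A64FX offers about 5.5x the FTP's whole-chip peak, and roughly 7.3x its peak per core.

```python
# Peak FP64 figures (GFLOPS) and core counts as quoted in the article.
chips = {
    "Phytium 2000+ (FTP)": {"cores": 64, "peak_gflops": 614.4},
    "Fujitsu A64FX": {"cores": 48, "peak_gflops": 3379.2},  # 3.3792 TFLOPS
}


def per_core_gflops(chip: dict) -> float:
    """Theoretical peak FP64 GFLOPS divided evenly across all cores."""
    return chip["peak_gflops"] / chip["cores"]


ftp = chips["Phytium 2000+ (FTP)"]
a64fx = chips["Fujitsu A64FX"]

chip_ratio = a64fx["peak_gflops"] / ftp["peak_gflops"]      # whole-chip gap
core_ratio = per_core_gflops(a64fx) / per_core_gflops(ftp)  # per-core gap

for name, chip in chips.items():
    print(f"{name}: {per_core_gflops(chip):.1f} GFLOPS per core")
print(f"A64FX / FTP, whole chip: {chip_ratio:.2f}x")
print(f"A64FX / FTP, per core:   {core_ratio:.2f}x")
```

These are theoretical peaks only; sustained performance on real workloads depends on memory bandwidth and software, as the test results show.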

To test the supercomputing architecture, the researchers ran a CNN workload with a single input sample, but found that in this case the node is "weak and the processor performance itself cannot be fully exerted." CNN performance with multiple inputs was considerably better: the researchers report a "huge improvement" in both GEMM (general matrix multiplication) and Conv2D (2D convolution) as the number of input samples increased. Still, while the FTP could approach its peak performance, the MTP could not be fully exploited because of its low memory bandwidth.
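Why more input samples help, and why memory bandwidth matters, can be illustrated with a Roofline-style estimate. The sketch below assumes a layer can be treated as a GEMM whose N dimension equals the number of input samples, and that each matrix crosses the memory bus exactly once; the layer size (1024 x 1024) and the 100 GB/s bandwidth are hypothetical values chosen for illustration, not figures from the report. Only the 614.4 GFLOPS peak comes from the article.

```python
def gemm_arithmetic_intensity(m: int, n: int, k: int, bytes_per_elem: int = 8) -> float:
    """FLOPs per byte for C(m x n) = A(m x k) @ B(k x n) in FP64.

    Assumes each matrix is moved to or from memory exactly once, which is an
    idealized lower bound on memory traffic.
    """
    flops = 2 * m * n * k  # one multiply and one add per inner-product term
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved


def roofline_gflops(intensity: float, peak_gflops: float, bandwidth_gbs: float) -> float:
    """Roofline bound: the lower of the compute roof and the memory roof."""
    return min(peak_gflops, intensity * bandwidth_gbs)


PEAK_GFLOPS = 614.4    # FTP peak FP64 figure quoted in the article
BANDWIDTH_GBS = 100.0  # HYPOTHETICAL memory bandwidth, not from the report

# Hypothetical layer: 1024 outputs x 1024 inputs; n = number of input samples.
for batch in (1, 8, 64, 256):
    ai = gemm_arithmetic_intensity(m=1024, n=batch, k=1024)
    bound = roofline_gflops(ai, PEAK_GFLOPS, BANDWIDTH_GBS)
    print(f"samples={batch:4d}  AI={ai:6.2f} FLOP/byte  bound={bound:7.1f} GFLOPS")
```

With these assumed numbers, a single sample yields about 0.25 FLOP/byte and a bound near 25 GFLOPS, far below the compute roof, while 64 samples push the bound up to the 614.4 GFLOPS peak. A chip with lower memory bandwidth needs a higher arithmetic intensity before it reaches its compute roof, which is consistent with the MTP result described above.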

"We evaluated the training performance of CNN on the Tianhe-3 prototype," the abstract description of the performance evaluation reads. "The performance of image convolution and matrix multiplication on the FTP and MTP was tested to evaluate the single-node performance, and the Allreduce element was tested to evaluate the scalability of the distributed training on the prototype cluster. We also qualitatively analyzed the performance bottlenecks of CNN on the FTP and MTP processors by Roofline model and provided some optimization suggestions for improving the CNN on the Tianhe-3 prototype."
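Allreduce is the collective that sums gradients across nodes during distributed training, so its cost largely determines how well training scales. The report does not say which Allreduce algorithm was tested; the sketch below simulates the widely used ring algorithm purely as an illustration, and the function names and the 4-byte (FP32) gradient size are assumptions.

```python
from typing import List, Tuple


def ring_allreduce(grads: List[List[float]]) -> List[List[float]]:
    """Simulate a sum-Allreduce over p workers arranged in a ring.

    Each worker's vector is split into p chunks. A reduce-scatter phase
    (p - 1 steps) leaves each worker owning one fully summed chunk; an
    allgather phase (p - 1 steps) then circulates those chunks so every
    worker ends up with the complete summed vector.
    """
    p = len(grads)
    n = len(grads[0])
    if any(len(g) != n for g in grads):
        raise ValueError("all workers must hold vectors of the same length")
    bounds: List[Tuple[int, int]] = [(i * n // p, (i + 1) * n // p) for i in range(p)]
    data = [list(g) for g in grads]  # private copy of each worker's buffer

    # Reduce-scatter: at step s, worker r sends chunk (r - s) % p to its
    # right neighbour, which adds it into its own copy of that chunk.
    for s in range(p - 1):
        # Snapshot all messages first so every send in a step uses pre-step data.
        msgs = [(r, (r - s) % p) for r in range(p)]
        payloads = [data[r][bounds[c][0]:bounds[c][1]] for r, c in msgs]
        for (r, c), payload in zip(msgs, payloads):
            lo = bounds[c][0]
            dst = (r + 1) % p
            for i, v in enumerate(payload):
                data[dst][lo + i] += v

    # Now worker r owns the fully reduced chunk (r + 1) % p.
    # Allgather: at step s, worker r forwards chunk (r + 1 - s) % p to its
    # right neighbour, which overwrites its stale copy.
    for s in range(p - 1):
        msgs = [(r, (r + 1 - s) % p) for r in range(p)]
        payloads = [data[r][bounds[c][0]:bounds[c][1]] for r, c in msgs]
        for (r, c), payload in zip(msgs, payloads):
            lo, hi = bounds[c]
            data[(r + 1) % p][lo:hi] = payload
    return data


def ring_bytes_per_worker(n_elems: int, p: int, bytes_per_elem: int = 4) -> float:
    """Data each worker sends in a ring Allreduce (exact when p divides n_elems)."""
    return 2 * (p - 1) * (n_elems / p) * bytes_per_elem


grads = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0], [100.0, 200.0, 300.0, 400.0]]
print(ring_allreduce(grads))  # every worker: [111.0, 222.0, 333.0, 444.0]
```

In this simple bandwidth model, the data each worker sends approaches twice the gradient size as the number of workers grows, so per-node traffic stays nearly flat; the number of communication steps, and therefore the latency cost, still grows with the ring size.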

It is noteworthy that, unlike the upcoming exascale supercomputers developed in the U.S., Tianhe-3 was not optimized for AI workloads, so the very fact that it can run them is significant. Meanwhile, the peak FP64 performance of the Phytium 2000+ is modest compared with Fujitsu's A64FX, so it remains to be seen whether such a CPU is a reasonable choice for exascale systems. Furthermore, with Phytium blacklisted by the U.S. government, it is unclear whether the CPU developer will be able to use a more advanced fabrication process to scale its Arm architecture for supercomputers, gaining performance and lowering power consumption.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.