Cyberpunk 2077 Phantom Liberty Will Be Very CPU Intensive

Phantom Liberty is expected to hit 90% CPU usage on 8-core chips.


Cyberpunk 2077 developer Filip Pierściński has revealed on X (Twitter) that the game's upcoming Phantom Liberty DLC will be extremely demanding on system hardware, particularly CPUs, with native support for 8-core processors. The CPU demands are so high that Pierściński warns gamers to check the condition of their CPU cooling system to suss out any potential problems.

He recommends running an application like Cinebench to make sure the cooling is adequate for keeping CPU temperatures in check under sustained loads. Pierściński says the new expansion will average 90% CPU utilization on an 8-core chip, which basically amounts to a CPU stress test. In a game, cooling will be pushed even harder than in a CPU-only test, because the graphics card also dumps heat into the case interior and raises ambient temperatures.

"Before release CP2077 2.0 and PL please check conditions of your cooling systems in PC. We use all what you have, so workload on CPU 90% on 8 core is expected. To save your time please run Cinebench or similar and check stability of your systems 😉" https://t.co/TWOAkP0ONu (September 11, 2023)

Pierściński doesn't reveal why the new expansion pack is so CPU-demanding. But if previous Cyberpunk 2077 updates are anything to go by, it's probably related to a combination of ray tracing (specifically BVH generation) and all the crowds and other NPCs. The last major graphics update that came to Cyberpunk 2077 was the game's RT Overdrive mode, which drastically improved the game's visual fidelity by replacing all rendering with "path tracing." As you'd expect, RT Overdrive is substantially more demanding on system hardware, and requires high levels of upscaling (4X) plus potentially DLSS 3 Frame Generation to achieve 60 fps or more on RTX 40-series GPUs.

Ray tracing is not only extremely intensive on the GPU, but also CPU intensive, which is easy to forget. Much of the initial setup work for the GPU ray tracing is currently done on the CPU, specifically the creation of the Bounding Volume Hierarchy (BVH) structure that helps to optimize the ray/triangle intersection calculations. The more complex a game world, in terms of geometry, the more CPU intensive the BVH generation can become. Still, we don't know for certain that ray tracing is the primary culprit for the upcoming high CPU demands.
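To make the BVH idea concrete, here is a minimal sketch of a top-down build over axis-aligned bounding boxes. It is an illustration only, not how Cyberpunk 2077 or any GPU driver actually builds its acceleration structures. The names `AABB`, `Node`, `build_bvh`, and `count_nodes` are hypothetical, and the median split along the longest axis is just one simple strategy among many.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AABB:
    """Axis-aligned bounding box given by its min and max corners."""
    lo: Vec3
    hi: Vec3

    def union(self, other: "AABB") -> "AABB":
        # Smallest box that encloses both boxes.
        return AABB(
            tuple(min(a, b) for a, b in zip(self.lo, other.lo)),
            tuple(max(a, b) for a, b in zip(self.hi, other.hi)),
        )

    def centroid(self) -> Vec3:
        return tuple((a + b) / 2 for a, b in zip(self.lo, self.hi))


@dataclass
class Node:
    box: AABB
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    items: Optional[List[int]] = None  # primitive indices, set on leaves only


def build_bvh(boxes: List[AABB], indices: Optional[List[int]] = None,
              leaf_size: int = 2) -> Node:
    """Recursively build a BVH using a median split on the longest axis."""
    if indices is None:
        indices = list(range(len(boxes)))
    if not indices or leaf_size < 1:
        raise ValueError("need at least one box and leaf_size >= 1")

    # Bounding box of everything under this node.
    box = boxes[indices[0]]
    for i in indices[1:]:
        box = box.union(boxes[i])

    if len(indices) <= leaf_size:
        return Node(box, items=list(indices))

    # Sort primitives by centroid along the longest axis, then halve.
    extent = [h - l for l, h in zip(box.lo, box.hi)]
    axis = extent.index(max(extent))
    ordered = sorted(indices, key=lambda i: boxes[i].centroid()[axis])
    mid = len(ordered) // 2
    return Node(box,
                left=build_bvh(boxes, ordered[:mid], leaf_size),
                right=build_bvh(boxes, ordered[mid:], leaf_size))


def count_nodes(node: Optional[Node]) -> int:
    """Total number of nodes in the tree, a rough proxy for build work."""
    if node is None:
        return 0
    return 1 + count_nodes(node.left) + count_nodes(node.right)
```

Every level of this build sorts the primitives beneath it, so the work grows with the amount of geometry in the scene, which is the general reason a more complex game world can mean more CPU time spent on BVH generation.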

The only additional details the developer gave regarding CPU utilization relate to SMT (Simultaneous Multi-Threading, or two threads per core) usage with the new expansion pack. Pierściński said SMT will be supported natively, without any mods, confirming that Phantom Liberty will ship with a patch fixing the notorious CPU thread utilization issues present in the current game and allowing it to use more CPU threads more effectively.

What CPU and Cooler Do You Need For Phantom Liberty?

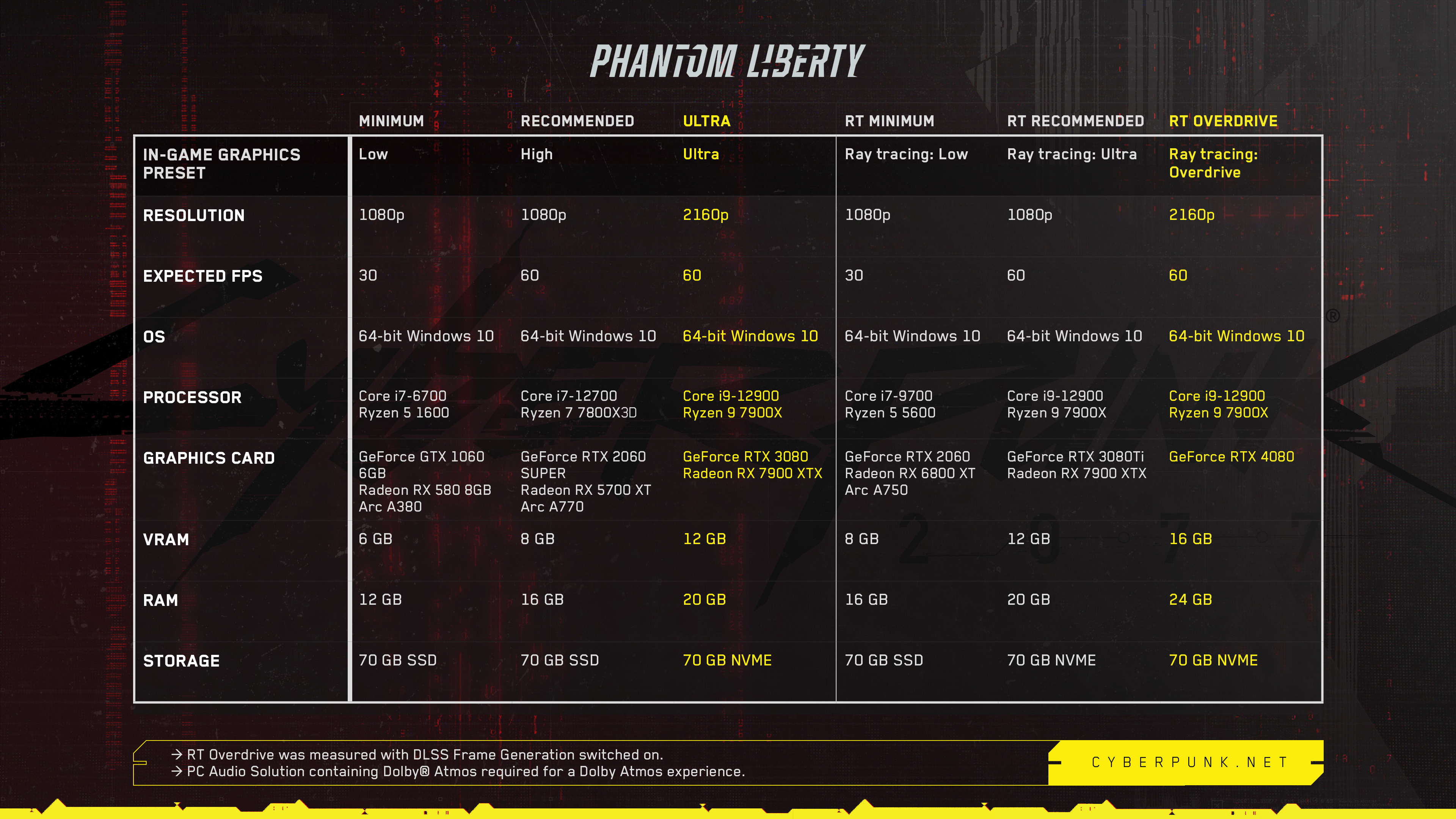

The Cyberpunk 2077: Phantom Liberty system requirements are already available and account for the increased CPU demands. For lower-quality presets without ray tracing, an i7-6700 or Ryzen 5 1600 will supposedly suffice, but for RT Overdrive a Core i9-12900 or Ryzen 9 7900X is recommended. Again, that suggests the 90% utilization of an 8-core CPU involves ray tracing of some form.

CD Projekt Red's system requirements effectively suggest buying one of the best CPUs for gaming, like the Ryzen 9 7950X3D, Ryzen 9 7900X3D, or Ryzen 7 7800X3D. Alternatively, Intel's Core i9-13900K or Core i7-13700K should do nicely. In our previous Cyberpunk 2077 tests, we've found that all five of these chips perform well in the game, with virtually identical performance to one another. They also meet or exceed Phantom Liberty's 8-core "sweet spot."

If you just want to play the game, perhaps without ray tracing, older 4-core and 6-core CPUs should suffice. Your GPU of choice will also play a role, as mainstream parts like an RTX 3060 or RX 6700 XT aren't likely to need as potent a CPU to keep them busy.

As for cooling, our general advice is to try to keep peak CPU temperatures below 90°C, and preferably at 80°C or less, though the most recent chips tend to spike as high as 95°C or more even with some of the best CPU coolers. Older-generation chips like the Core i9-9900K or Ryzen 9 5900X, for example, won't tend to run nearly as hot.

If you want to see how your system currently manages sustained CPU-intensive workloads, fire up Cinebench and set it to loop for an hour or two; we recommend HWiNFO64 for logging and monitoring temperatures. Again, keep in mind that a game will inherently put more stress on your system, as it will heat up your graphics card and not just the CPU. You could also run FurMark as a background task to keep your GPU busy if you're looking for worst-case temperatures, but do keep an eye on temperatures if you do that, as it's definitely possible to push your system too hard with that combination.
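Once a stress run has been logged, the temperature guidance above can be checked with a few lines of code. The sketch below is a rough illustration: `summarize_temps` is a hypothetical helper, and it assumes you have already pulled the CPU temperature readings (in °C) out of your monitoring tool's log into a plain list, since log formats and column names differ between tools and systems.

```python
from typing import Dict, Iterable


def summarize_temps(samples_c: Iterable[float],
                    target_c: float = 80.0,
                    limit_c: float = 90.0) -> Dict[str, float]:
    """Summarize CPU temperature samples from a sustained stress run.

    target_c and limit_c default to the article's rule of thumb:
    preferably 80 C or less, and peaks below 90 C.
    """
    temps = list(samples_c)
    if not temps:
        raise ValueError("need at least one temperature sample")

    over_target = sum(1 for t in temps if t > target_c)
    at_or_over_limit = sum(1 for t in temps if t >= limit_c)

    return {
        "peak_c": max(temps),
        "mean_c": sum(temps) / len(temps),
        # Fraction of samples above the preferred ceiling.
        "share_over_target": over_target / len(temps),
        # Fraction of samples at or above the hard ceiling.
        "share_at_or_over_limit": at_or_over_limit / len(temps),
    }


if __name__ == "__main__":
    # Made-up readings purely for illustration, not measured data.
    print(summarize_temps([70.0, 82.0, 91.0, 79.0]))
```

A peak at or above the limit, or a large share of samples over the target, is a hint that the cooler, fan curve, or case airflow deserves a look before the expansion arrives.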

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

gman68: Not gonna upgrade my CPU or GPU for a DLC. Just turn down the settings. If the gameplay doesn't justify the cost of the DLC, no amount of light-show is going to make a difference to me. Although my system will probably handle it fine with medium/high settings anyway.

Order 66: I have nothing against Cyberpunk but I have never played the game. I also don't really feel like upgrading my 7700x right now.

PEnns: I am beginning to think game developers are in bed with GPU makers planning how to screw.......us peasants.

salgado18: On one side, if most of the CPU usage is for RT, I'll be very disappointed. Why can't game developers push other boundaries like NPC AI by using up on the CPU? I hope that's what they are doing, not just "moar graphs".

In the other side, anyone who uses up 90% of 8 cores can use 50% of 16 cores, or even 90%. If threading is well made, it can saturate anything. Ok, I think there's a point where it won't help much (8 cores give the maximum feature), but I really hope it can scale sideways too. Especially since they ask for current-gen CPUs, then last gen 12 or 16 cores should work nicely.

We need benchmarks! Go as far back as Zen 2 if possible!

colossusrage: Someday a developer is going to create a game that has awesome physics, awesome AI/NPCs, awesome graphics, and it will run well, scaling on various hardware.

thestryker:

Frostbite has historically been extremely good at this (at least in the Battlefield games) where it will scale to whatever you've got. This is why back in the BF4 days AMD CPUs weren't as far behind Intel as they'd get much higher utilization than most games. I would love to see more games that scaled well and I thought the theory GPG used with Supreme Commander was great in that they broke up their game systems and they would spread out across whatever cores were available. It sure at least seems like this sort of thing is barely done anymore.

salgado18 said: On one side, if most of the CPU usage is for RT, I'll be very disappointed. Why can't game developers push other boundaries like NPC AI by using up on the CPU? I hope that's what they are doing, not just "moar graphs".

In the other side, anyone who uses up 90% of 8 cores can use 50% of 16 cores, or even 90%. If threading is well made, it can saturate anything. Ok, I think there's a point where it won't help much (8 cores give the maximum feature), but I really hope it can scale sideways too. Especially since they ask for current-gen CPUs, then last gen 12 or 16 cores should work nicely.

We need benchmarks! Go as far back as Zen 2 if possible!

Can't tell if joke, but Crysis

PEnns said: I am beginning to think game developers are in bed with GPU makers planning how to screw.......us peasants.

NeoMorpheus: I trully expect at least 3 hit pieces every day blaming nvidia and intel for everything wrong with the game, just like the non stop barrage of negative articles about Starfield and AMD.

martinezkier.dacoville: Lol even Cyberpunk 2077 was already cooking my i7-8700 and thats with a 150w tdp tower cooler with fans at 100%.

blacknemesist:

7900XTX beats a 4090 except on 4k and only by a small margin.

NeoMorpheus said: I trully expect at least 3 hit pieces every day blaming nvidia and intel for everything wrong with the game, just like the non stop barrage of negative articles about Starfield and AMD.

Context man, context.