Cyberpunk 2077 CPU Scaling, What Processors Work Best?

Stacking up the chips

Cyberpunk 2077 is the most hotly anticipated game in recent history, but it has proven to be punishing for even the most well-equipped PCs. We set out to test the new game with a broad selection of the best CPUs on the market to determine which processors provide the best performance across several resolutions. You can also see how the individual processors stack up in other games on our CPU Benchmarks Hierarchy page. We've tested AMD's Ryzen 9, 7, 5, and 3 processors across several generations, along with Intel's Core i9, i7, i5, and i3 processors.

This article focuses entirely on CPU performance. If you're looking for a closer look at how the game performs with a range of different GPUs, be sure to check out our in-depth Cyberpunk 2077 performance analysis. Thus far, our CPU testing has used the current 1.04 patch, which brings minor performance enhancements over the original game code. However, CD Projekt Red has announced that it plans to release a new 1.05 patch for PCs soon (consoles already have the patch) with some performance enhancements, and the impact of those changes remains to be seen.

We'll update our testing after the new patch, but apart from the performance of select AMD models, we don't expect huge changes to the CPU benchmarks hierarchy we've created below. We could see slight increases in each processor's overall performance, but we expect that the performance deltas between the chips, and the rankings, will remain similar. Besides, we've already applied the 'fix' for Ryzen processors.

Cyberpunk 2077 has certainly earned a reputation for buggy performance, and one of the most surprising errata revolves around how the game code identifies AMD's Ryzen processors. Resourceful redditors have discovered that the game code significantly harms performance on some AMD processors because it apparently checks to see if your CPU uses Team Red's 2011-era Bulldozer platform and, if not, stops scheduling work on the chip's extra simultaneous multithreading (SMT) threads.
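As a rough illustration of the behavior the redditors describe, the Python sketch below models a thread-count check of this kind. It is not the game's actual code: the function names, the parameters, and the assumption that the fix simply lets every chip use its full logical thread count are all hypothetical. The only hard facts it relies on are the CPUID vendor strings and family numbers (15h for Bulldozer-era parts, 19h for Zen 3).

```python
BULLDOZER_FAMILY = 0x15  # AMD family 15h covers the Bulldozer-era chips


def reported_worker_threads(vendor: str, family: int,
                            physical_cores: int, logical_threads: int) -> int:
    """Model of the reported check (hypothetical, not the game's code)."""
    if vendor == "AuthenticAMD" and family != BULLDOZER_FAMILY:
        # Non-Bulldozer AMD chips fall back to one worker per physical core,
        # so the second SMT thread on each core goes unused.
        return physical_cores
    return logical_threads


def fixed_worker_threads(vendor: str, family: int,
                         physical_cores: int, logical_threads: int) -> int:
    """Assumed effect of the community fix: every chip uses all logical threads."""
    return logical_threads


# Ryzen 5 5600X: Zen 3 (family 19h), 6 cores and 12 threads
print(reported_worker_threads("AuthenticAMD", 0x19, 6, 12))  # 6
print(fixed_worker_threads("AuthenticAMD", 0x19, 6, 12))     # 12
```

Under this model, a six-core Ryzen would run half as many worker threads as it could, which would be consistent with the gains we measured once the fix was applied.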

The enthusiast community discovered an easy-to-apply patch, which you can learn about here, that corrects the issue on select AMD models. CD Projekt Red says that it will also include similar code in the upcoming 1.05 patch. However, the game developer says the patch will only address Ryzen models with six or fewer cores, and that eight-, 12-, and 16-core models are unaffected. Our testing says otherwise: we continue to see performance gains with eight-core Ryzen 3000 and 5000 series processors with the fix applied, so we'll have to see how the upcoming patch addresses the eight-core models. The remainder of the pending changes listed for the 1.05 patch don't appear to have massive implications for the game engine.

In either case, we've included testing both with and without the patch applied for the Ryzen 3000 (Zen 2) and 5000 (Zen 3) series processors with eight or fewer cores, but we found that the patch does little to improve performance with the Ryzen 1000 (Zen) and Ryzen 2000 (Zen+) models. For the older Ryzen models, we've found that the change introduces buggy behavior or performance regressions, so we've excluded those extra tests.

With the preamble out of the way, let's see how the Cyberpunk 2077 CPU performance hierarchy stacks up.

Cyberpunk 2077 CPU Benchmarks Setup

We tested with the highest number of NPCs available and followed the same benchmark methodology used for our Cyberpunk 2077 PC Benchmarks, Settings, and Performance Analysis article.

We begin our test sequence at the entrance to V's apartment building and walk a path down the stairs, across the street to the left, hang a right at the vending machine, then head across the street towards the two policemen on the other side. From there, we proceed to the right across the pavilion, down another set of stairs, and to the building on the far side. We walk this same path a minimum of three times (manually) for each and every data point you see below, provided we don't get hit by a car in the game, or get distracted by Twitter and miss our cue, both of which require extra benchmark runs. Suffice it to say, I've walked this same path in Cyberpunk 2077 so many times that I'm seeing the benchmark sequence in my dreams.
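To make the "minimum of three runs per data point" idea concrete, here is a minimal Python sketch of how several manual runs could be collapsed into one chart value. The function name, the run-to-run spread metric, and the sample frame rates are hypothetical and are not taken from our test logs.

```python
from statistics import mean


def summarize_runs(run_fps: list[float], min_runs: int = 3) -> dict[str, float]:
    """Average several benchmark runs and report the run-to-run spread."""
    if len(run_fps) < min_runs:
        raise ValueError(f"need at least {min_runs} runs, got {len(run_fps)}")
    average = mean(run_fps)
    # Spread: gap between the best and worst run, as a percentage of the average
    spread_pct = (max(run_fps) - min(run_fps)) / average * 100
    return {"average_fps": average, "spread_pct": spread_pct}


# Hypothetical average fps from three walks along the same path
result = summarize_runs([105.0, 107.0, 106.0])
print(result["average_fps"])  # 106.0
```

A large spread is a hint that a run was disturbed (a car collision, for instance) and should be repeated.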

Be aware that performance can, and will, vary in other scenes and locations (up to a 20% performance range), but this scene gives us a good-enough sense of the type of performance you'll see with the various CPUs.

Our test systems easily exceed the recommended minimum system requirements for Cyberpunk 2077. We outfitted our test systems with the Gigabyte GeForce RTX 3090 Eagle to reduce the GPU bottleneck as much as possible. Be aware that you'll see a smaller performance delta with lesser graphics cards. If you're already shackled by a GPU bottleneck, you might not see appreciable gains with the same processors we've tested below.

We strove to remove memory as a potential bottleneck, too, so we equipped our test systems with 32GB of DDR4 memory spread across two dual-rank DIMMs. We set the memory to operate at each respective processor's stock specifications, but be aware that additional memory tuning/overclocking could yield higher performance. Likewise, single-rank memory kits could result in lower performance. As we noted in our recent look at how Cyberpunk 2077 runs with varying memory kits, you can definitely run the game with 8GB of memory, and 16GB should be sufficient, but using two dual-rank DIMMs (or four single-rank DIMMs) can improve performance. If you're not running a top-end GPU, memory capacity probably won't matter much.

You can see a further breakdown of our test system at the end of the article.

Cyberpunk 2077 CPU Scaling RT Ultra Settings Benchmarks

We included the highest-end models of the 1000, 2000, 3000, and 5000 series Ryzen processors. We've also included the Core i9, i7, i5, and i3 models from Intel's 9th- and 10th-generation processors. We'll add testing with HEDT models after we assess performance impacts from the upcoming 1.05 patch.

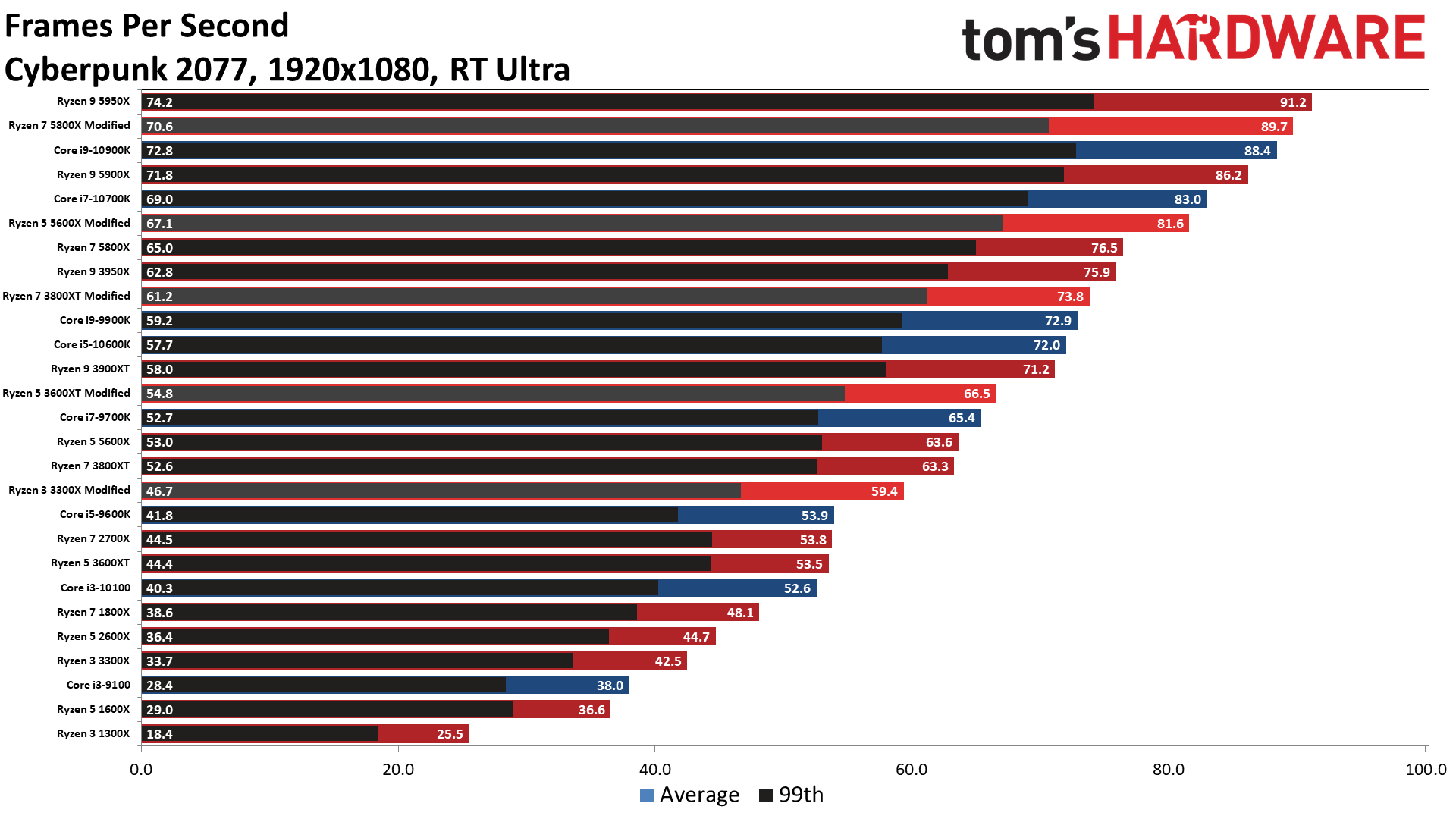

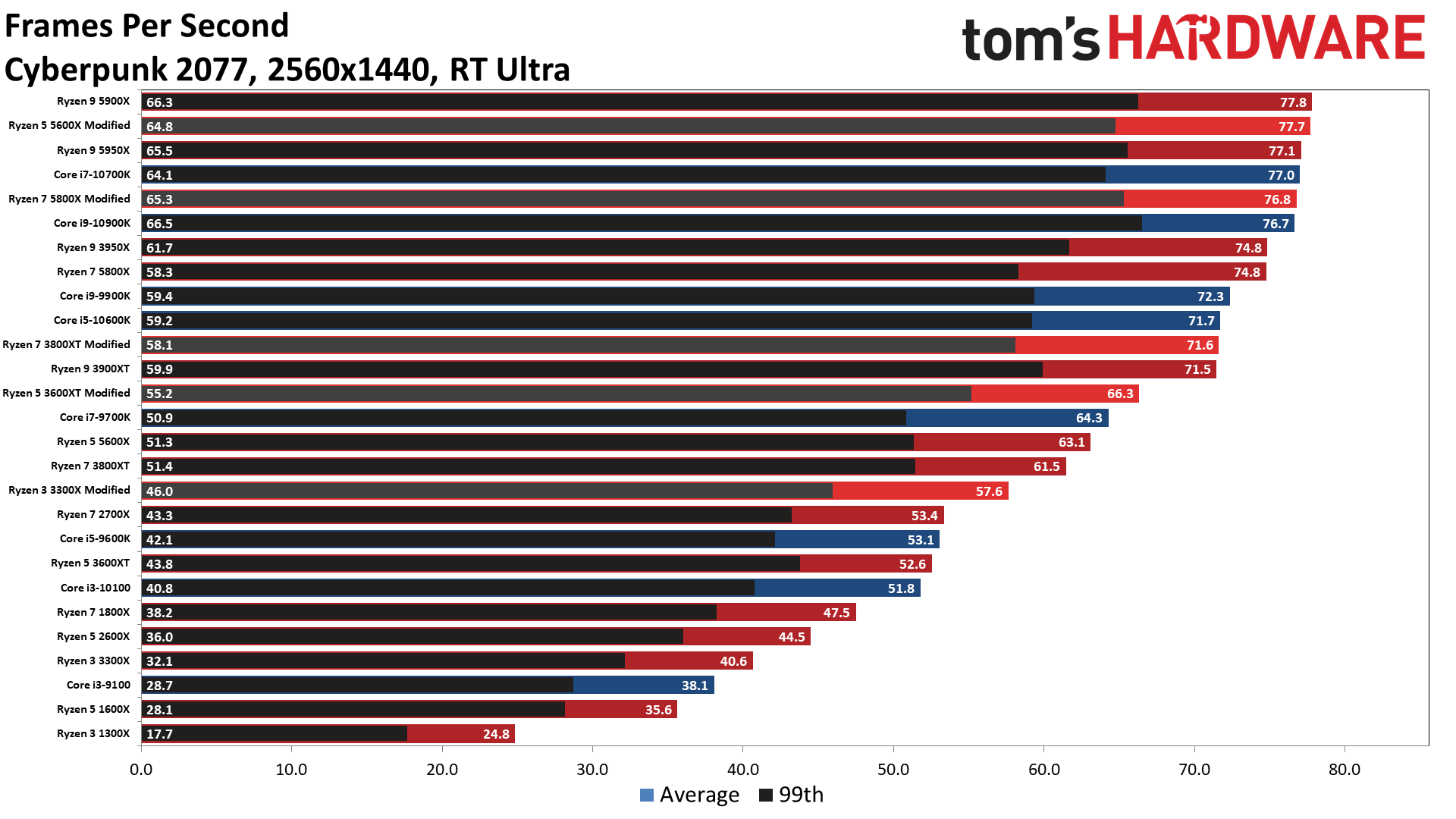

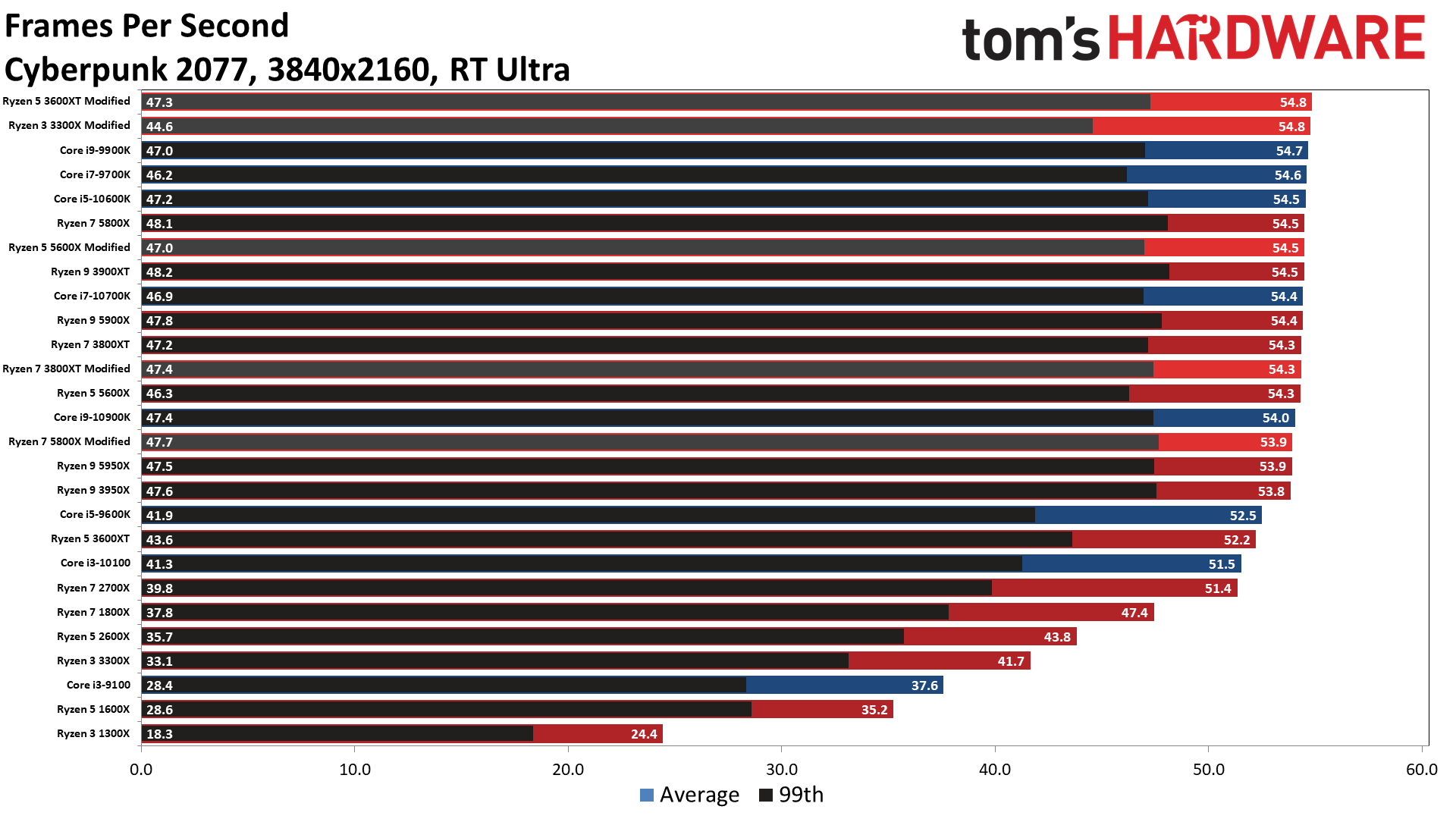

Processors marked in the charts with 'Modified' have the 'Ryzen fix' applied. We tested with three resolutions at various settings, but our first album of results focuses on the RT Ultra preset. This enables DLSS Auto (which uses the Quality mode at 1080p, Balanced mode at 1440p, and Performance mode at 4K), and also engages ray tracing, making it the most demanding preset. Applying the Ryzen fix also yielded big gains for a few of the AMD Ryzen processors at this preset.
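The DLSS Auto mapping described above can be written as a small lookup. In the Python sketch below, the mode-per-resolution table comes from the text, while the per-axis render scale factors (roughly 67%, 58%, and 50%) are the commonly cited DLSS 2 values and are an assumption here, as are the function and variable names.

```python
# Output resolution -> DLSS mode chosen by the Auto setting (per the text above)
DLSS_AUTO_MODE = {
    (1920, 1080): "Quality",
    (2560, 1440): "Balanced",
    (3840, 2160): "Performance",
}

# Assumed per-axis render scale for each mode (not stated in this article)
RENDER_SCALE = {"Quality": 2 / 3, "Balanced": 0.58, "Performance": 0.5}


def internal_resolution(width: int, height: int) -> tuple[str, int, int]:
    """Return the DLSS mode and the approximate resolution the GPU renders at."""
    mode = DLSS_AUTO_MODE[(width, height)]
    scale = RENDER_SCALE[mode]
    return mode, round(width * scale), round(height * scale)


print(internal_resolution(1920, 1080))  # ('Quality', 1280, 720)
print(internal_resolution(3840, 2160))  # ('Performance', 1920, 1080)
```

If those scale factors hold, the GPU renders far fewer pixels than the output resolution suggests, which is worth keeping in mind when reading the RT Ultra charts.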

AMD's Ryzen 9 5950X takes the lead at 1080p RT Ultra, trailed closely by the Ryzen 7 5800X, Ryzen 9 5900X, and Core i9-10900K. You'll notice that these top-end processors are closely matched, and most users would be hard-pressed to notice a difference between these CPUs.

The eight-core, 16-thread Ryzen 7 5800X only makes its way into the upper tier of the chart after applying the fix. The performance improvement is also apparent with the eight-core Ryzen 7 3800XT, casting some doubt on CD Projekt Red's claims that the 'Ryzen bug' doesn't impact AMD's eight-core processors.

Stepping down the chart, we see the Core i7-10700K trailing the chart-topping Ryzen 9 5950X by 9%. We also see a substantial gap between the 9th and 10th gen Intel processors, with the fastest 9th gen processor, the Core i9-9900K, trailing the Core i9-10900K by 17%, and the Core i7-10700K by 12%.
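Percentages like these depend on which chip is used as the baseline. The Python sketch below shows the two common conventions; the frame rates are made-up round numbers chosen for illustration, not values from our charts, and the function names are hypothetical.

```python
def percent_behind(leader_fps: float, chip_fps: float) -> float:
    """How far a chip trails the leader, relative to the leader's frame rate."""
    return (leader_fps - chip_fps) / leader_fps * 100


def percent_ahead(leader_fps: float, chip_fps: float) -> float:
    """How far the leader is ahead, relative to the slower chip's frame rate."""
    return (leader_fps - chip_fps) / chip_fps * 100


# Hypothetical frame rates: leader at 100 fps, trailing chip at 91 fps
print(round(percent_behind(100.0, 91.0), 2))  # 9.0
print(round(percent_ahead(100.0, 91.0), 2))   # 9.89
```

The same pair of results reads as "9% behind" or "almost 10% ahead" depending on the baseline, so it pays to check which one a comparison uses.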

A quick look at the bottom of the chart shows how far AMD has come since its Zen 1 and Zen+ processors. The Ryzen 7 1800X falls behind Intel's current Core i3-10100 despite having twice as many cores and threads, and the Ryzen 7 2700X only matches the Core i5-9600K. The SMT bug still affects those Ryzen CPUs, but using the modified code made the game unstable, so it's not currently a solution.

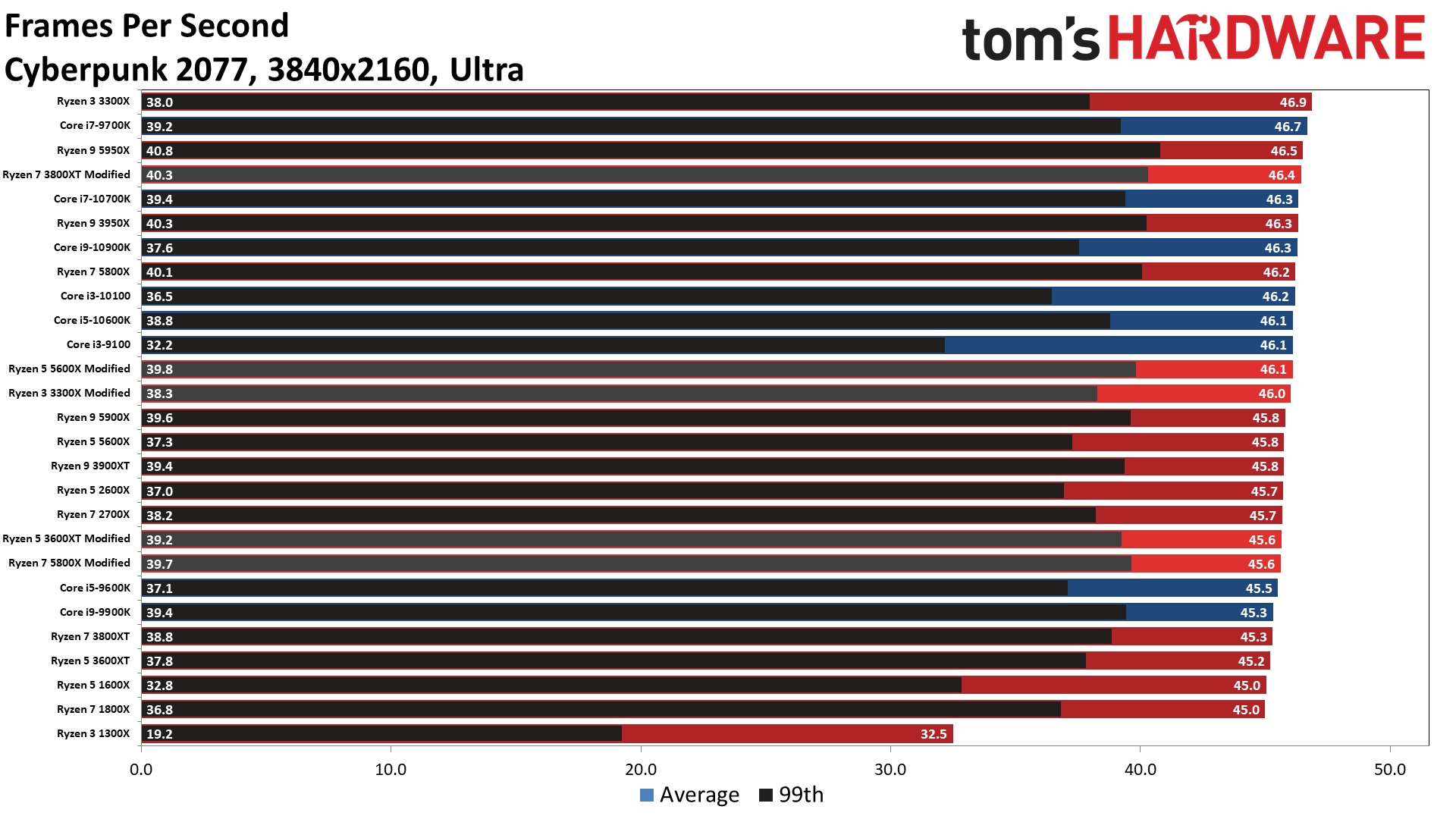

Flipping through the album to the QHD results shows that the deltas between the chips shrink tremendously as we increase resolution. Bear in mind that we're testing with the ridiculously-priced RTX 3090, so the difference between these chips will shrink dramatically with lesser GPUs. The 4K resolution, and the resulting GPU bottleneck, stands as the great equalizer, though, dragging all of the chips (except the Ryzen 3 1300X) into closer competition.
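A simple way to picture why the deltas shrink is to treat the delivered frame rate as the lower of two ceilings: what the CPU can feed and what the GPU can render. The Python sketch below uses that simplified model with invented ceilings; real games do not behave this cleanly, and none of the numbers come from our charts.

```python
def delivered_fps(cpu_limit_fps: float, gpu_limit_fps: float) -> float:
    """Simplified model: the slower of the two components sets the frame rate."""
    return min(cpu_limit_fps, gpu_limit_fps)


def gap_pct(fast_cpu_fps: float, slow_cpu_fps: float, gpu_limit_fps: float) -> float:
    """Percentage gap between two CPUs once the GPU ceiling is applied."""
    fast = delivered_fps(fast_cpu_fps, gpu_limit_fps)
    slow = delivered_fps(slow_cpu_fps, gpu_limit_fps)
    return (fast - slow) / fast * 100


# Hypothetical GPU ceilings that fall as resolution rises
GPU_LIMITS = {"1080p": 120.0, "1440p": 90.0, "4K": 50.0}

for resolution, gpu_limit in GPU_LIMITS.items():
    # Hypothetical CPUs capable of 110 fps and 80 fps when unconstrained
    print(resolution, round(gap_pct(110.0, 80.0, gpu_limit), 1))
# 1080p 27.3
# 1440p 11.1
# 4K 0.0
```

Swapping in a slower graphics card lowers every GPU ceiling, which is why the gaps between processors close even faster on lesser GPUs.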

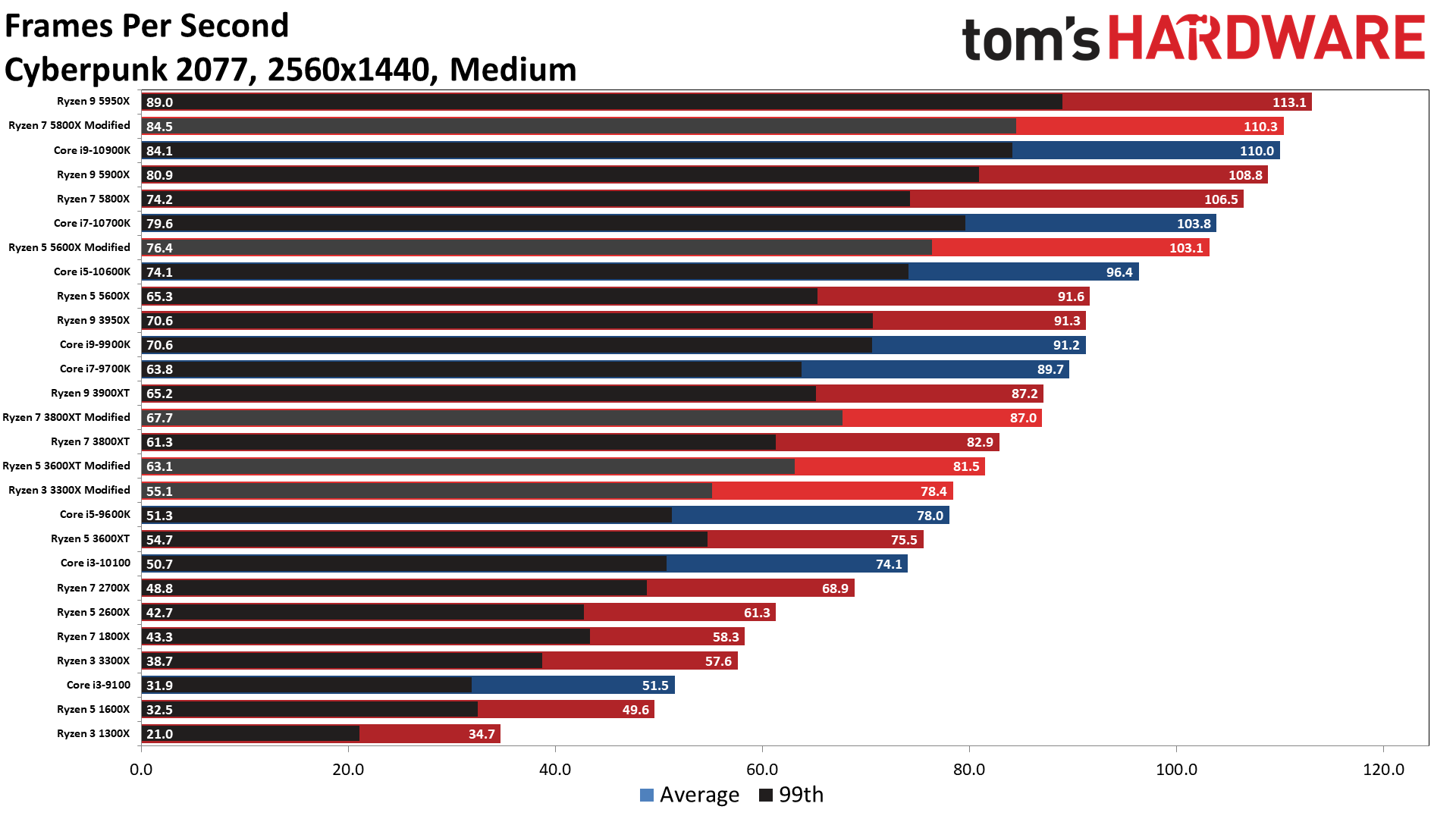

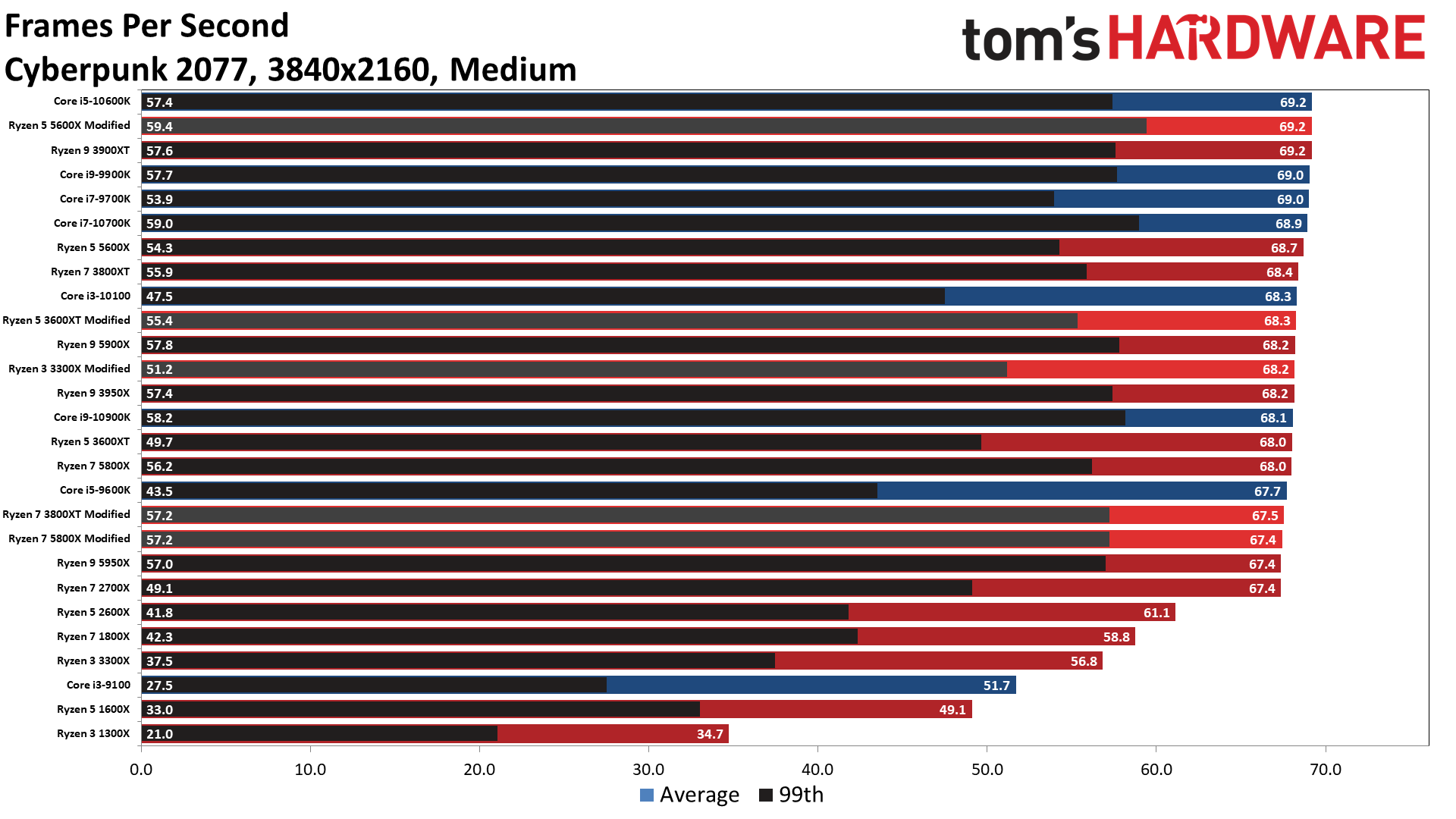

Cyberpunk 2077 CPU Scaling Medium Preset Benchmarks

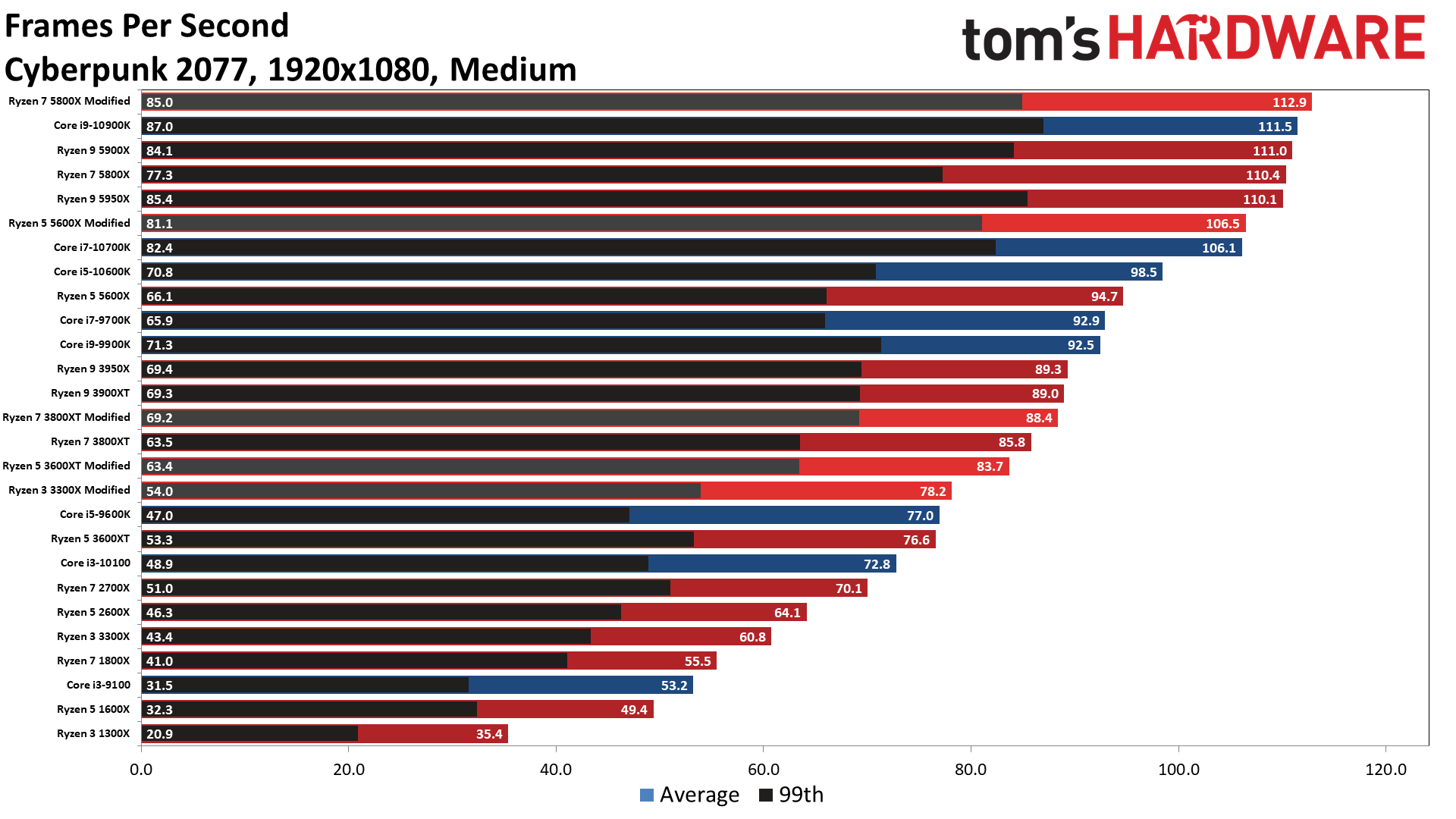

Dropping all the way down to the medium preset, maximum performance is much higher, and CPU bottlenecks continue to play a significant role. This preset features lower fidelity settings than the Ultra preset below, so we see more substantial deltas between the processors.

These standard rasterization tests stress the processor differently than the RT Ultra settings above, and at 1080p, we see the 'patched' Ryzen 7 5800X take a slight lead over the rest of the test pool. Several of the same AMD contenders still appear in the top five, with the Ryzen 9 5900X and Ryzen 9 5950X landing within a few percent of the leader.

AMD's core-heavy Ryzen 3000 models are impressive in this series of tests, but the Ryzen 5 5600X, our go-to recommendation for gamers, holds its own with 106.5 fps, basically matching the more expensive Core i7-10700K. Given the mostly-reasonable pricing for both the 5600X and the 10700K, it's a tough sell to step up to significantly more powerful chips from either camp, at least if you're looking for the best bang for your buck.

On the opposite end of the spectrum, the first-gen Ryzen processors struggle to keep pace in the 1080p tests, and hitches and stuttering were outwardly visible during testing. If you're playing at this low of a resolution, a second-gen or newer Ryzen processor is a better fit.

Again, it's readily apparent that the magnitude of the performance gains relies heavily upon how close the system is to a GPU bottleneck. Performance deltas steadily decline as we increase the resolution; however, unlike with the RT Ultra settings in the previous series of 4K tests, we see a bit more differentiation between the processors at the highest resolution. Anything below a Ryzen 7 2700X will introduce visible stuttering and hitching, and the Ryzen 3 1300X is a very rough experience. (We'd call it unplayable, but some people have lower standards.)

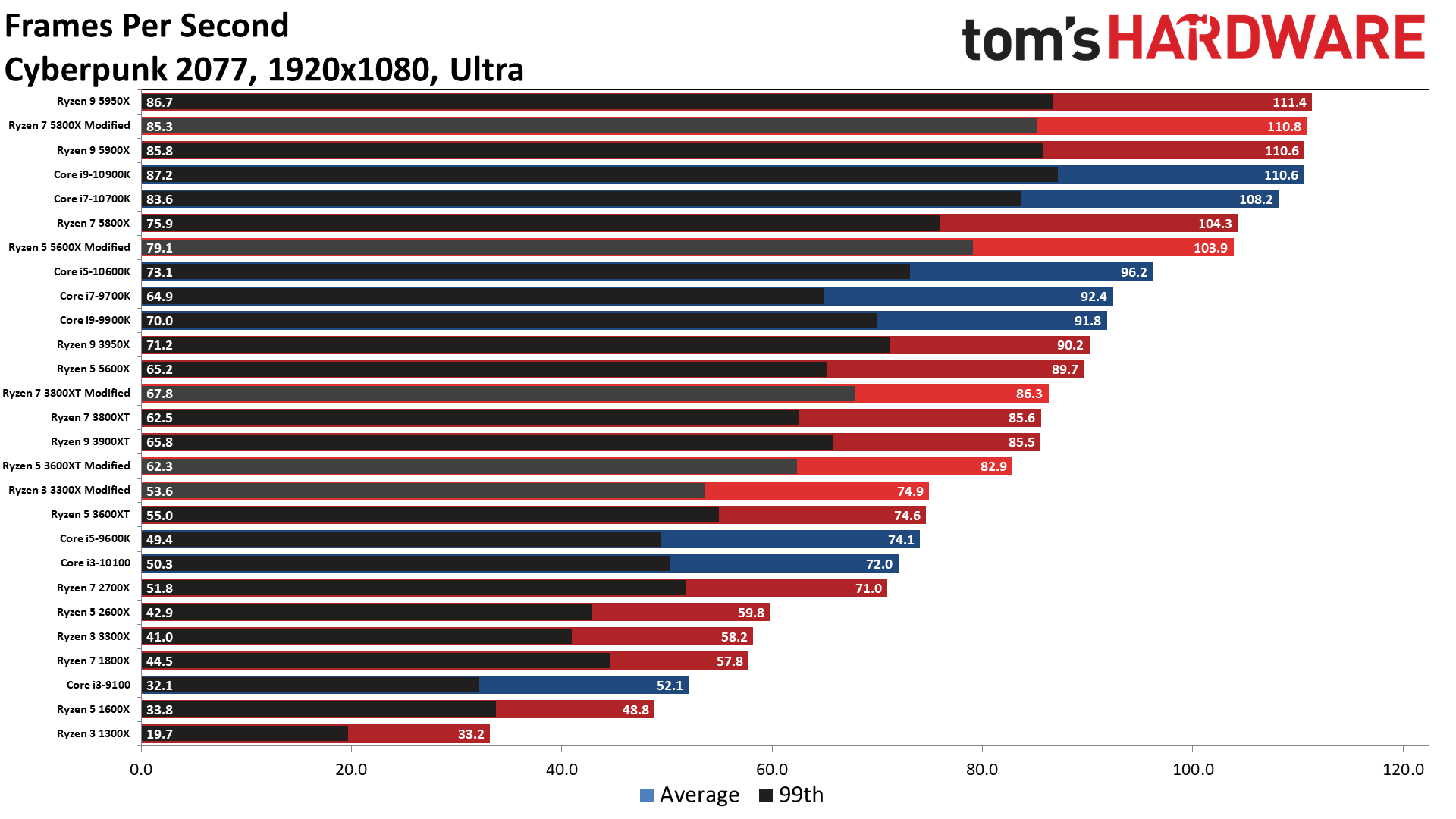

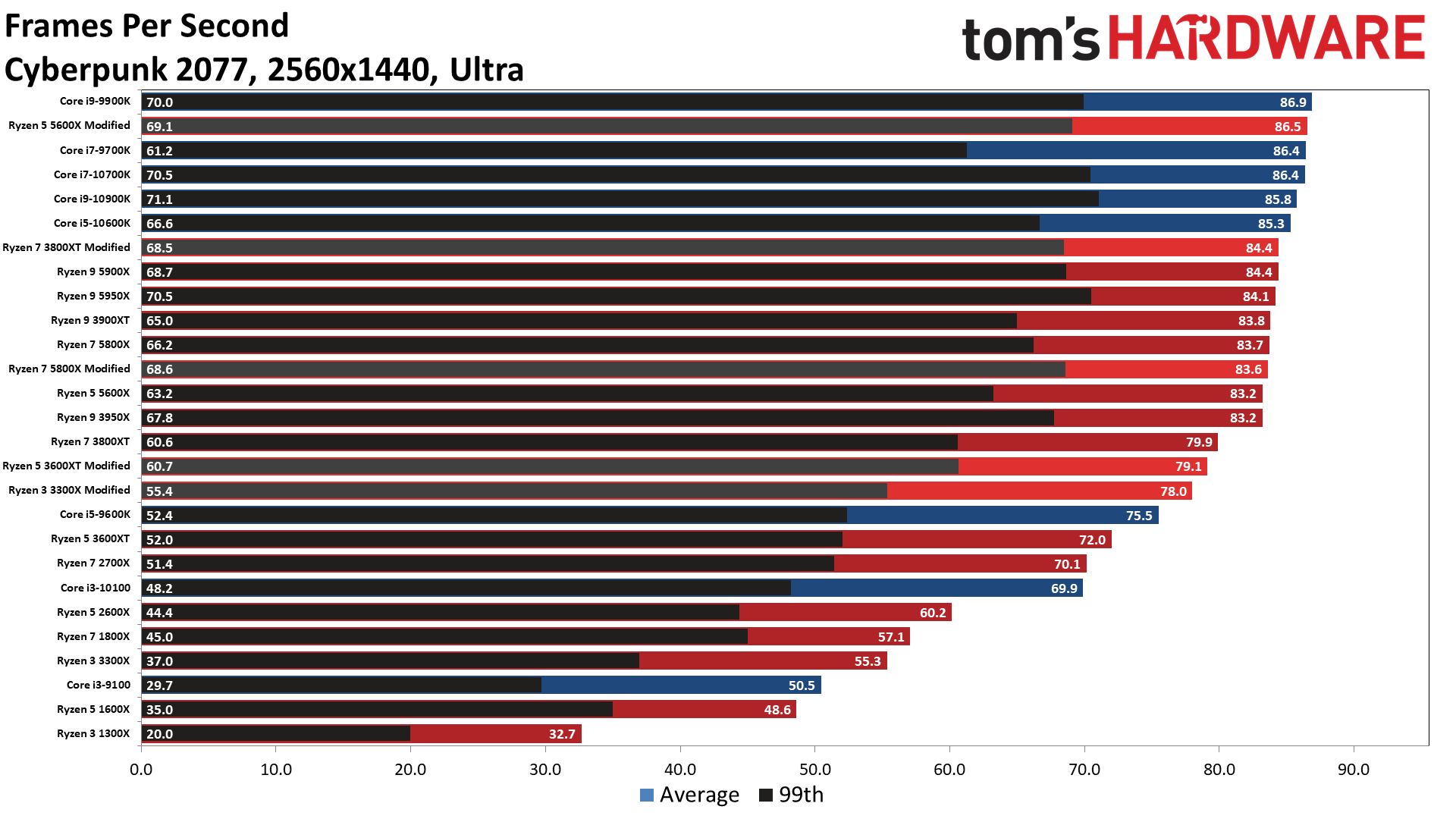

Cyberpunk 2077 CPU Scaling Ultra Preset Benchmarks

Finally, we turn the dial up to the Ultra preset, but without ray tracing active. As expected, the gains again shrink as we increase resolution and push closer to a graphics bottleneck that makes CPU performance less of a factor.

Again, the Ryzen 9 5950X, Ryzen 9 5900X, Ryzen 7 5800X, and Core i9-10900K all push the RTX 3090 to the limits during the 1080p tests, meaning we'll have to wait for faster GPUs to see any meaningful difference between these chips at this resolution and these fidelity settings. We don't see any massive changes to the performance hierarchy with these settings. The chips largely stack up the same as we saw with the 1080p medium preset, albeit with some differences in average framerates.

It's interesting to look at some of the other differences present thanks to the memory configurations we're using for testing. For example, Jarred tested the Core i9-9900K at DDR4-3600 with 16-18-18 timings, and performance was around 14% higher than the i9-9900K running at official Intel settings (DDR4-2666), even though the DDR4-2666 configuration used tighter 14-14-14 timings. AMD's Zen 2 and Zen 3 chips have an advantage in that they officially support up to DDR4-3200, without overclocking.
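The two memory configurations can be compared with a little back-of-the-envelope arithmetic. The Python sketch below uses the standard DDR formulas for CAS latency in nanoseconds and theoretical peak bandwidth; the dual-channel, 64-bit-per-channel layout is an assumption, the function names are hypothetical, and the results are theoretical figures, not measurements.

```python
def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """CAS latency in nanoseconds.

    The memory clock runs at half the data rate, so one clock cycle
    lasts 2000 / data_rate nanoseconds.
    """
    return cas_cycles * 2000 / data_rate_mts


def peak_bandwidth_gbs(data_rate_mts: float, channels: int = 2,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (assumes 64-bit channels)."""
    return data_rate_mts * bytes_per_transfer * channels / 1000


for name, rate, cl in [("DDR4-3600 CL16", 3600, 16), ("DDR4-2666 CL14", 2666, 14)]:
    print(name, round(cas_latency_ns(rate, cl), 2), "ns,",
          round(peak_bandwidth_gbs(rate), 1), "GB/s")
# DDR4-3600 CL16 8.89 ns, 57.6 GB/s
# DDR4-2666 CL14 10.5 ns, 42.7 GB/s
```

On paper, the DDR4-3600 kit has both lower absolute CAS latency and more bandwidth despite its higher cycle counts, which fits the direction of the result above.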

At a bare minimum, many enthusiasts enable the XMP profile in the BIOS (which might be called A-XMP, AMP, DOCP, or some other name on AMD platforms). With better memory, this can definitely improve performance, but it technically voids the warranty.

Cyberpunk 2077 CPU Scaling Thoughts

Cyberpunk 2077 is one of the most demanding games on the market, and it's pretty much impossible to devise one series of tests that can give a definitive single answer for how your CPU will perform in the massive number of possible hardware configurations. As such, you should view these tests as basic indicators of how the processors stack up when paired with the highest-end gaming GPU that money can buy, and then adjust your expectations based on your GPU.

If you have an older chip and need to know how it stacks up compared to the chips in this article, head over to our CPU Benchmarks Hierarchy, which has in-depth performance testing of chips spanning back to...well, seemingly forever. Those performance rankings should give you an idea of where you'll land.

Naturally, the differences between our test subjects will diminish rapidly with lesser graphics cards. Still, if you're determined enough, you could even scrape by with integrated graphics with below-bare-minimum settings. Not that we'd recommend that to anyone, but the overriding point is that with some time and patience, you can adjust the fidelity settings to make do with a slower GPU.

On the flip side of the coin, playing the game with a capable GPU can be a punishing experience for your processor. Today's newest high-end chips, namely the Ryzen 9 and 7 5000 series and 10th-gen Intel i9 and i7 chips, are obviously the best fit if money is no object and you're trying to squeeze out every last frame. However, if you're looking for the best blend of price and performance, mid-range modern CPUs, like Intel's Core i5 and AMD's Ryzen 5, are still the best.

Even the previous-gen Core i5-9600K and Ryzen 5 3600XT held up relatively well during our testing, but those should be considered a bare minimum if you're looking to game with higher-end GPUs.

Be sure to hit our article covering the recommended system specifications for different price points, and our expansive Cyberpunk 2077 testing with a wide range of GPUs to see where your system could slot in based on your hardware configuration.

In the meantime, we're eagerly awaiting the Cyberpunk 2077 1.05 patch to see how much it impacts overall game performance. We're also working on adding a few HEDT models to the tests above, not to mention 7th- and 8th-gen Intel chips, so stay tuned.

| Platform | Hardware |
| --- | --- |
| Intel Socket 1200 (Z490) | Intel 9th- and 10th-Gen processors |
| | MSI MEG Z490 Godlike / MEG Z390 Godlike |
| | 2x 16GB Corsair Dominator DDR4-3600 - Stock: DDR4-2933, DDR4-2666 |
| AMD Socket AM4 (X570) | AMD Ryzen 5000, 3000, 2000 and 1000 Series |
| | MSI MEG X570 Godlike / MSI MEG X470 Godlike |
| | 2x 16GB Corsair Dominator DDR4-3600 - Stock: DDR4-3200, DDR4-2666 |
| All Systems | Gigabyte GeForce RTX 3090 Eagle |
| | 2TB Intel DC4510 SSD |
| | EVGA Supernova 1600 T2, 1600W |
| | Open Benchtable |
| | Windows 10 Pro version 2004 (build 19041.450) |
| Cooling | Corsair H115i |

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

cewhidx: I dont know yet what my average fps is but I can say that the game is running smooth as silk so far with my configuration:

Ryzen 5 3600

EVGA RTX 2080 XC Gaming

Corsair Vengeance DDR4 3200 32 GB

1440p GSync 144hz Monitor

Pretty much maxed out settings with version 1.04, All RTX options enabled with DLSS on balanced.

I'll update after trying 1.05, but so far after 3 hours of playing, Ive not had any bugs / slowdowns or anything.

willburstyle06: Admin said: We test a wide range of both Intel and AMD processors to determine which processors perform best in a variety of resolutions.

Was the 9900K tested at stock 4.7GHz all cores? In general were the CPU’s all tested at stock? I have mine at 5.1GHz and I’m trying to see how it compares to this test

Evill-Santa: What really poor selection of CPU's. You will see a high % of gamers have older CPU's as they get swapped a lot less than GPU's, especially intel's with their more expensive upgrade path. As per the Nov 2020 a bit under 75% of gamers have Intel CPU's yet you have chosen only 2 generations of intel CPU's. (though you have done better with AMD) So it's not really a "wide selection" of nearly 75% of gamers is it?

Looks like you have taken a wide selection of what is easy for you to test, rather than what would be useful for a large volume of your user base. You have provided good feedback for AMD CPUS's (~25% of market) but very shallow feedback on the intel generations.

I get it, AMD is all the rage and it will probably be my next CPU, but this would be a much more useful article if you catered for what would be the more likely scenario of the gamers CPU.

Using CPU performance websites is not really a great way for someone to work out where their CPU sits.

Geef: "At a bare minimum, many enthusiasts enable the XMP profile in the BIOS"

The only enthusiast who doesn't enable it are the <<Removed by moderator>> ones, or the ones who possibly forgot to last time they reset their settings and they smack their head when they realize what they've done.

Conahl: Evill-Santa said: What really poor selection of CPU's. You will see a high % of gamers have older CPU's as they get swapped a lot less than GPU's, especially intel's with their more expensive upgrade path. As per the Nov 2020 a bit under 75% of gamers have Intel CPU's yet you have chosen only 2 generations of intel CPU's. (though you have done better with AMD) So it's not really a "wide selection" of nearly 75% of gamers is it?

Looks like you have taken a wide selection of what is easy for you to test, rather than what would be useful for a large volume of your user base. You have provided good feedback for AMD CPUS's (~25% of market) but very shallow feedback on the intel generations.

I get it, AMD is all the rage and it will probably be my next CPU, but this would be a much more useful article if you catered for what would be the more likely scenario of the gamers CPU.

Using CPU performance websites is not really a great way for someone to work out where their CPU sits.

or, what they tested, is what they had on hand to test, ever consider that ? what is on hand/easy to test vs what they have available to test, could be 2 different things.

Minh Nguyen: the 1.05 patch pretty much does the hex fix, so the default will be the "modified" results

Paul Alcorn: willburstyle06 said: Was the 9900K tested at stock 4.7GHz all cores? In general were the CPU’s all tested at stock? I have mine at 5.1GHz and I’m trying to see how it compares to this test

yes, the processors are all tested in stock configs. Head to our 9900K review to see what that chip looks like overclocked - you can kinda reverse engineer the numbers there to get a decent sense of how it does in gaming with an OC.

Paul Alcorn: Evill-Santa said: What really poor selection of CPU's. You will see a high % of gamers have older CPU's as they get swapped a lot less than GPU's, especially intel's with their more expensive upgrade path. As per the Nov 2020 a bit under 75% of gamers have Intel CPU's yet you have chosen only 2 generations of intel CPU's. (though you have done better with AMD) So it's not really a "wide selection" of nearly 75% of gamers is it?

Looks like you have taken a wide selection of what is easy for you to test, rather than what would be useful for a large volume of your user base. You have provided good feedback for AMD CPUS's (~25% of market) but very shallow feedback on the intel generations.

I get it, AMD is all the rage and it will probably be my next CPU, but this would be a much more useful article if you catered for what would be the more likely scenario of the gamers CPU.

Using CPU performance websites is not really a great way for someone to work out where their CPU sits.

Time is a factor, plus the patch was looming, so I went with what I had. The patch doesn't seem to make any significant changes, so as mentioned in the article, we'll soldier on with 7th and 8th gen Intel next.

We don't suggest that you visit CPU benchmark sites for relative performance comparisons. We suggest that you go to our CPU benchmark hierarchy, which has testing performed with solid and repeatable test methodologies in a static test environment. :)

TimmyP777: Geef said: The only enthusiast who doesn't enable it are the <<Removed by moderator>> ones, or the ones who possibly forgot to last time they reset their settings and they smack their head when they realize what they've done.

XMP has caused a plethora of problems over the last decade. Especially back when it was more an Intel feature being brought to AMD boards.