Cyberpunk 2077 RT Overdrive Path Tracing: Full Path Tracing, Fully Unnecessary

This is why fully ray traced rendering isn't ready for prime time, yet.

In its continuing bid to become the next Crysis, Cyberpunk 2077 recently released the "technology preview" of its RT Overdrive mode. That language is important, because it rightly suggests that most people either can't or shouldn't try out the fully path traced rendering mode for what was already a demanding game. Do you already own one of the best graphics cards? RT Overdrive could leave you longing for an upgrade.

Well, maybe. While the fully path traced version of the game is supposed to make everything look amazing, there's a reason most of the comparisons we've seen show RT Overdrive image quality versus pure rasterization-based rendering. If you're already running RT Ultra settings, the improvements aren't nearly as noticeable — they're still there, but it turns out hybrid rendering does a pretty good job of capturing many of the benefits of ray tracing without having to cast 635 rays per pixel (on average, according to Nvidia).

And let's also be clear about the headline. When we say "fully unnecessary," we're not talking about CD Projekt Red and Nvidia working together to release the full path tracing update. We're saying that if you don't have a powerful graphics card, you don't need to feel left out. It can make the game look better in various ways, but don't let FOMO convince you of the need to upgrade, at least not just yet.

The RT Overdrive settings are a tour de force for Nvidia's RTX hardware, including the beefed-up ray tracing cores, Shader Execution Reordering, Opacity Micro-Maps, and DLSS 3 Frame Generation. Maybe in two more generations of hardware, this is the sort of rendering we'll use in future state-of-the-art games. But that last item in particular, Frame Generation, deserves a bit more investigation.

As I've stated on several occasions, Frame Generation doesn't feel nearly as fast as the inflated numbers in charts might lead you to believe. You can see a full breakdown of five games with DLSS 3 support in our RTX 4070 review, but my take is that a 50% increase in frames to screen via Frame Generation feels more like a 10–20 percent improvement in performance. It looks better to someone watching over your shoulder than it feels to the person playing, though, so maybe it's great for streamers?

But don't be concerned about some of those proprietary technologies limiting access to RT Overdrive mode. For better or worse, you can give path tracing a shot on everything from the original RTX 20-series up through the 40-series, as well as AMD's RX 6000- and 7000-series GPUs and Intel's Arc GPUs. Thankfully, Cyberpunk 2077 also has support for FSR 2.1 and XeSS 1.1 upscaling algorithms, so there's still a chance that non-Nvidia cards will manage playable framerates.

And that's what we set out to determine: Just how well can the various graphics cards run RT Overdrive mode, and how much upscaling will you need to hit playable framerates? (Spoiler: On non-Nvidia cards, the answer is "a lot.")

RT Overdrive Image Quality Enhancements

Let's first start with a look at visual fidelity. Here I've captured the game's benchmark on an RTX 4090 running at 1080p using four different settings. Of course, you don't need an RTX 4090 to run Cyberpunk 2077's RT Overdrive mode. That's the point of this story. Also, you don't need RT Overdrive mode — it doesn't make the story or the gameplay in Cyberpunk 2077 any better (or worse). But, spoiler alert: If you want a snowball's chance in Hades of running the game well while using full path tracing, you're probably going to want an Nvidia RTX 40-series or a high-end 30-series part.

The built-in benchmark is nice because it follows a set path that lasts just over a minute, allowing for reasonably consistent comparisons between the various rendering modes. But it takes place during daylight hours, so once you head outside, the comparisons become less representative of what you might experience while playing the game at night.

Anyway, in the top-left is RT Overdrive running at native 1080p with no upscaling. The top-right has RT Overdrive with DLSS Quality mode and Frame Generation enabled (the "actually useful" mode for most people), while the bottom-left uses the previously maximum quality RT Ultra preset with DLSS Quality upscaling and Frame Generation. Finally, in the bottom right is the Ultra preset (no ray tracing) running at native resolution.

Now, if you watch that video and think, "So, what's all the hubbub about," you're not alone. To be fair, there are areas where the extra RT effects of Overdrive mode are more apparent — the benchmark sequence isn't a great representation of the biggest changes. But you'll find a lot of the game looks quite similar to the previous RT Ultra hybrid rendering mode. If you want an easier way to compare the quality, here's a gallery of three different scenes, using the same settings as in the video.

Gallery (three scenes, each at four settings): RT Overdrive Native; RT Overdrive DLSS Quality plus Frame Generation; RT Ultra DLSS Quality plus Frame Generation; Ultra (no Ray Tracing) Native.

First, it's important to note that many of the "differences" in the above images are just part of the dynamic nature of the game engine. There's swirling smoke and pulsing lights in the bar at the start, for example, and those are never the same between runs. The same goes for particle effects in the outside world, and the various NPC entities are randomly generated at runtime, so changes in clothing also occur.

Mostly, you'll notice in those screenshots that RT Overdrive looks similar to RT Ultra, only with much lower performance — and DLSS Quality mode plus Frame Generation mitigates that quite a bit, at least at 1080p with an RTX 4090. There are some nuances, but you have to search for them, and in normal gameplay there are lots of areas where you won't really notice the difference.

The best-case results for Overdrive looking substantially better are in darker areas with multiple light sources. Outside, in sunlight? Not so much. Sure, having muzzle flashes accurately light up the environment can look pretty cool, but it doesn't fix the numerous other complaints people have with the game. It's the age-old story of better graphics not inherently making for a better game.

The Ultra preset without any ray tracing still looks good as well, though the missing graphics effects become easier to spot — screen space reflections, less accurate lighting and shadows, and that sort of thing. The shadows in the bar scene for example have relatively crisp outlines, which isn't how they would actually look in the real world (due to the numerous light sources), and the puddle on the sidewalk doesn't reflect things that aren't visible on the screen.

Gallery (five scenes, each at three settings): RT Overdrive plus FSR 2.1 Quality mode; RT Ultra plus FSR 2.1 Quality mode; Ultra (no ray tracing) plus FSR 2.1 Quality mode.

This second set of images was all captured on an AMD Radeon RX 6700 XT, certainly no slouch of a graphics card when it comes to regular games and still one of the best graphics card values with a starting price of $369. Here, you can clearly see the changes in the various scenes when comparing RT Overdrive with RT Ultra or regular Ultra mode (all of these images use FSR 2.1 Quality mode, roughly 2.25X upscaling, incidentally). But do the path traced versions always look better? That's more difficult to say.

Some of the screenshots show areas that get lighter thanks to path tracing. Others have areas that get darker. It's all supposed to look more realistic. But sometimes, in a game, realism isn't all that great. Maybe I want to be able to see into the dark corner better, for example, and maybe I prefer having an unrealistic looking NPC where I can still see their face, even if it should be in shadow. Whatever.

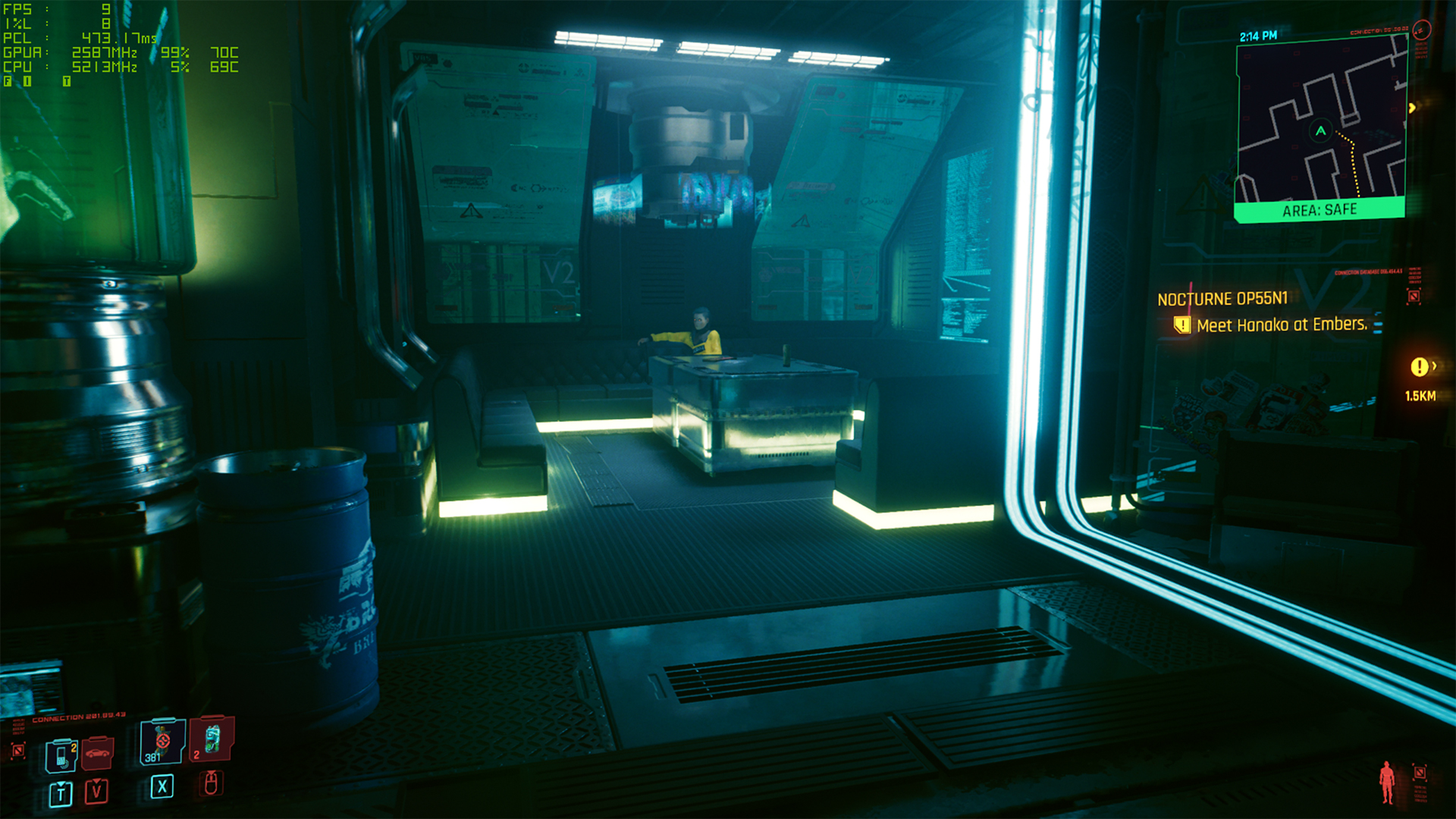

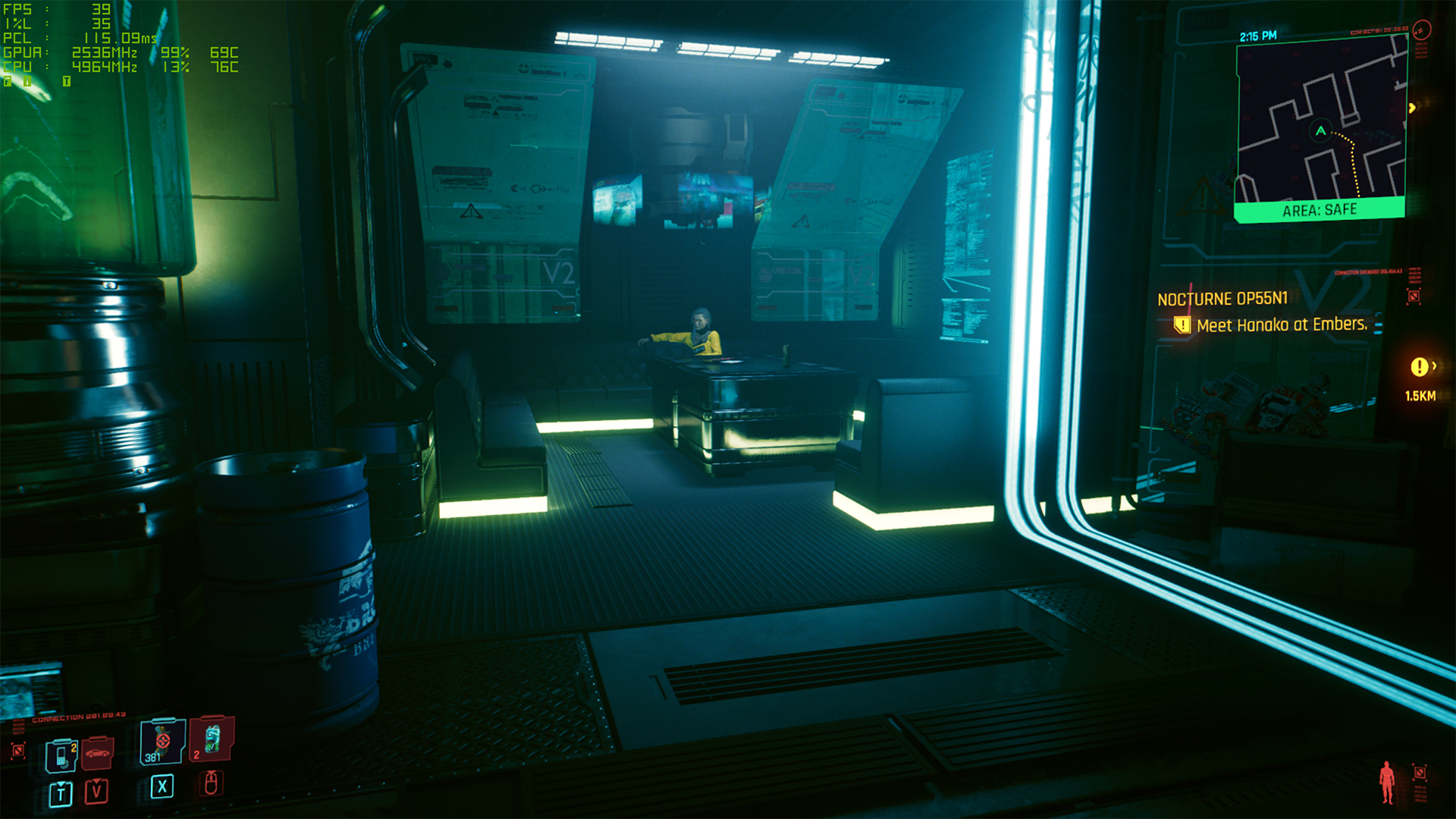

But the bigger issue isn't just whether or not path tracing can look better — let's just go ahead and all agree that it can. It gives you better shadows, better lighting, better reflections, and no glowing people. The issue is whether the costs of full path tracing are worth the performance hit. If you have an RTX 4090, sure, probably. If you have an AMD GPU? Hell no! The 6700 XT manages a reasonably playable 40–60 fps in RT Ultra mode, or 100–120 fps using the Ultra preset. RT Overdrive drops it down into the 9–15 fps range.

Cyberpunk 2077 RT Overdrive is supposed to be a look at the future of gaming, and if so, most gamers are probably fine sticking with hybrid rendering for quite some time. Ray tracing and path tracing do have an advantage in that there's no need to "pre-bake" the lighting: everything from shadows to reflections to ambient occlusion can be calculated in real time. That could potentially mean less work for artists and level designers, but most gamers would likely prefer saving money on the cost of a new graphics card rather than theoretically helping a corporation save on game development costs. And as long as most gamers can't use full path tracing (for example, because it would leave them with single-digit framerates), developers have to support both hybrid and path traced modes. That means path tracing ends up costing more money to develop and support, rather than less.

If you're not feeling wowed by the lack of major improvements in graphics fidelity compared to the existing RT Ultra preset shown here, don't worry. RT Overdrive can look better than even these images show. RT Overdrive's path tracing could also provide you with a good excuse to upgrade your graphics card, because the performance requirements are Mt. Everest steep. Future games may put full path tracing to better use, but that only matters if you can run those games, and for most people that's going to be a stretch. Which brings us to the benchmarks.

Cyberpunk 2077 Overdrive Test Setup

We're using our standard 2023 GPU test PC, with a Core i9-13900K and all the other bells and whistles. For Cyberpunk 2077 RT Overdrive, we're going to include results from a decent collection of Nvidia, AMD, and Intel graphics cards — not everything, but enough to give you a reasonable estimate of where any "missing" cards might land.

We've tested using four different settings: RT Overdrive without any upscaling (native), RT Overdrive with DLSS 2 / FSR 2 / XeSS upscaling in Performance mode (4X upscaling), RT Overdrive with DLSS 2 / FSR 2 upscaling in Ultra Performance mode (9X upscaling), and the previously existing RT Ultra settings with DLSS 2 / FSR 2 / XeSS upscaling in Performance mode. For the RTX 40-series GPUs, we'll also run each of those options with Frame Generation (aka DLSS 3) enabled. It's a lot of benchmarks.

We're also testing at 1920x1080, 2560x1440, and 3840x2160, to provide a comprehensive look at performance. There's a catch, however: We're absolutely not going to test every possible DXR-capable graphics card at every one of those settings. We've probably already run too many "useless" benchmarks, considering the early state of the RT Overdrive release and the abysmal performance you'll get on some GPUs. Native rendering will get down into the single digits pretty quickly — hence the inclusion of Ultra Performance upscaling. The 4K Native results for example are going to be somewhat sparse, and they're mostly to show just how demanding that setting would be if we didn't have upscaling algorithms.

There are now hundreds of games with some form of upscaling support, so expectations should be pretty familiar by now. The difference is that full path tracing means there's a far stronger correlation between pixels rendered and framerates. 1920x1080 means Cyberpunk 2077 has to render just over two million pixels. Performance mode upscaling cuts that number down to around 500K pixels, while Ultra Performance mode means the GPU only has to spit out about 230K pixels. If scaling were perfectly tied to pixels rendered, we'd see a 4X and 9X improvement in framerates for Performance and Ultra Performance upscaling, respectively.
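The pixel arithmetic above is easy to check. The short Python sketch below assumes Performance mode halves each axis and Ultra Performance divides each axis by three (the 4X and 9X factors used throughout this article); real upscalers may round the internal resolution slightly differently.

```python
# Minimal sketch: internal render pixel counts for the upscaling modes.
# Assumption: Performance = 1/2 per axis (4X pixels),
# Ultra Performance = 1/3 per axis (9X pixels).

AXIS_SCALE = {"Native": 1, "Performance": 2, "Ultra Performance": 3}


def internal_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Approximate internal render resolution for a given output size and mode."""
    s = AXIS_SCALE[mode]
    return width // s, height // s


def pixels(width: int, height: int) -> int:
    return width * height


if __name__ == "__main__":
    native = pixels(1920, 1080)
    for mode in AXIS_SCALE:
        w, h = internal_resolution(1920, 1080, mode)
        p = pixels(w, h)
        # "Ideal" speedup assumes framerate scales perfectly with pixels rendered.
        print(f"{mode:>17}: {w}x{h} = {p:,} pixels, ideal speedup {native / p:.0f}X")
```

In practice the measured gains fall short of the ideal figures, because the upscaling pass itself costs time and not all per-frame work scales with resolution.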

Nvidia says RT Overdrive mode on average performs 635 RT calculations per pixel rendered, so running at 640x360 (Ultra Performance mode with 1080p) will be a lot easier, particularly on the less RT-capable GPUs. And with that out of the way, let's get to the benchmarks.
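To put Nvidia's figure in perspective, here's a back-of-the-envelope ray budget. It assumes the quoted average of 635 rays (RT calculations) per pixel applies uniformly to every frame, which is a simplification; the 30 fps target is just an illustrative number, not a measured result.

```python
# Back-of-the-envelope ray budget.
# Assumption: Nvidia's quoted average of 635 rays per rendered pixel
# applies uniformly to every pixel of every frame.
RAYS_PER_PIXEL = 635


def rays_per_frame(width: int, height: int, rays_per_pixel: int = RAYS_PER_PIXEL) -> int:
    return width * height * rays_per_pixel


def rays_per_second(width: int, height: int, fps: int,
                    rays_per_pixel: int = RAYS_PER_PIXEL) -> int:
    return rays_per_frame(width, height, rays_per_pixel) * fps


native = rays_per_frame(1920, 1080)    # native 1080p
ultra_perf = rays_per_frame(640, 360)  # 1080p output with Ultra Performance upscaling
print(f"{native:,} vs {ultra_perf:,} rays per frame ({native / ultra_perf:.0f}X fewer)")
print(f"{rays_per_second(1920, 1080, 30) / 1e9:.1f} billion rays/s at native 1080p, 30 fps")
```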

Cyberpunk 2077 RT Overdrive: Native Performance

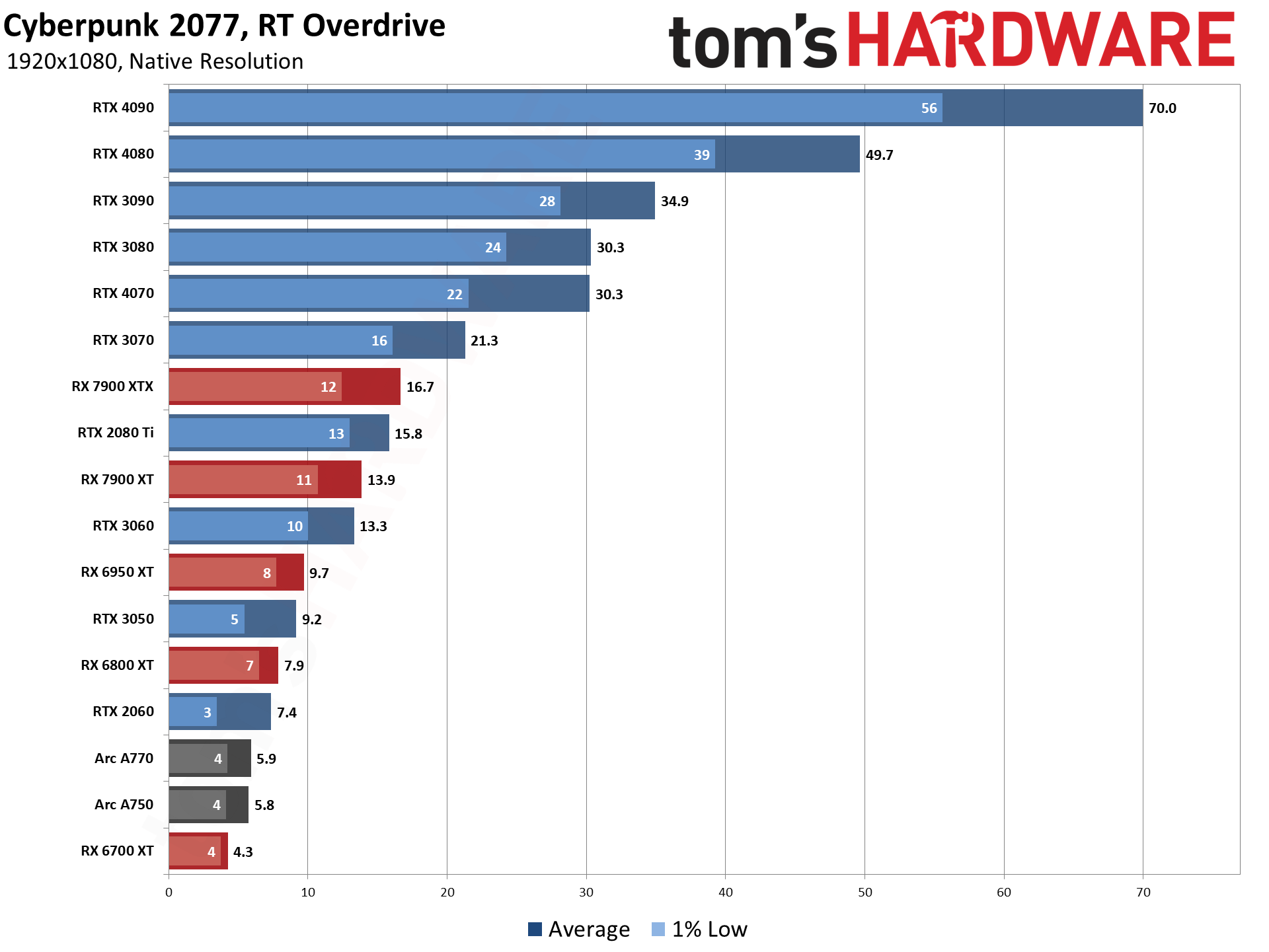

Disclaimer: This is most assuredly not the way most people will want to play around with RT Overdrive. Still, it's an interesting look at theoretical worst-case ray tracing performance. We've tested all of the cards for the 1080p results, and then we tested progressively fewer cards at 1440p and 4K. Frame Generation (without upscaling) is also an option that we've tested on the RTX 40-series parts.

While we might hope for some measurement of pure ray tracing performance, keep in mind that this is a single game engine, running code that was almost certainly optimized primarily for Nvidia GPUs, so it's definitely not an agnostic look at the ray tracing hardware capabilities. We'll have more to say on that in the "Pure RT" section.

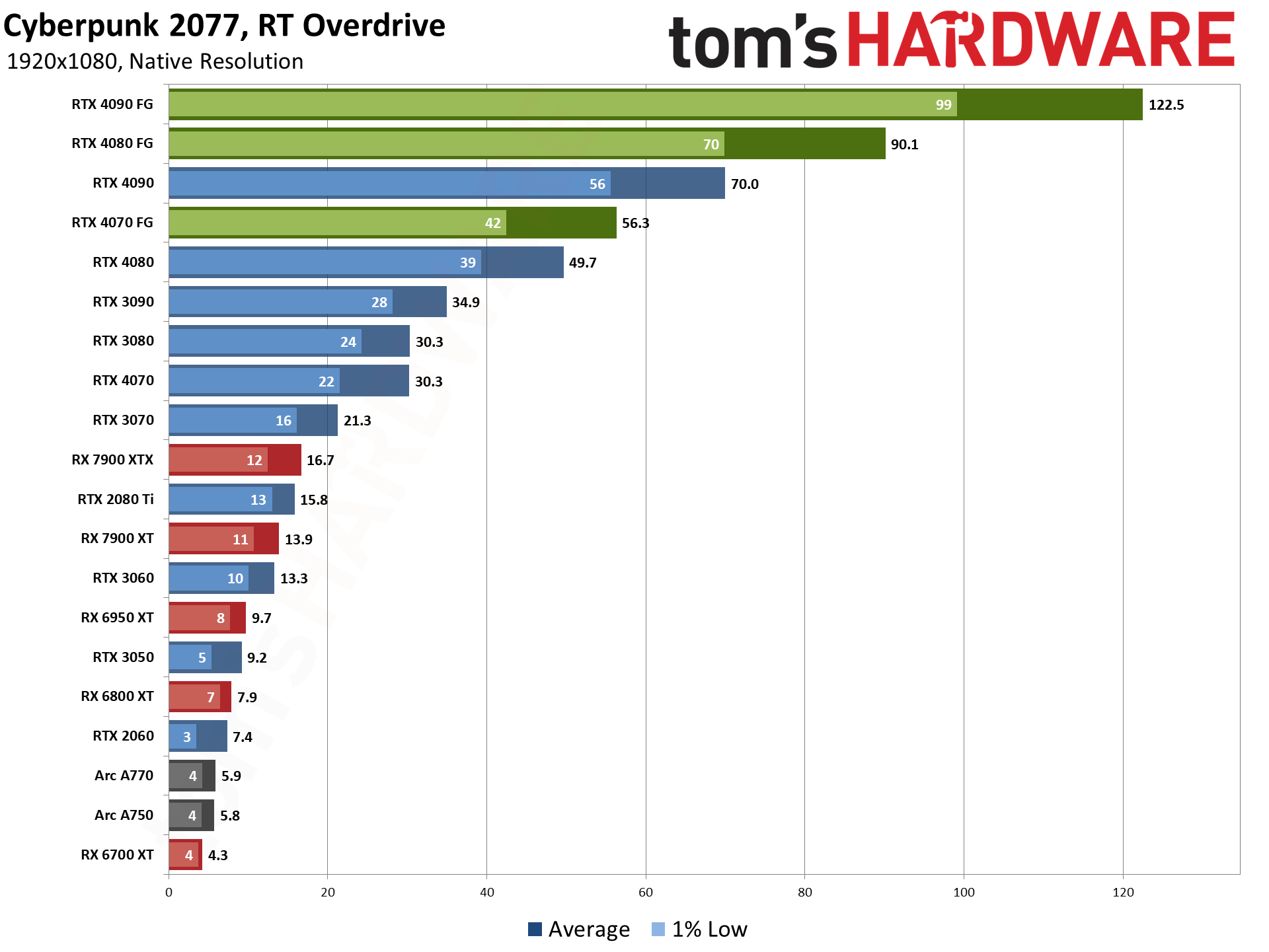

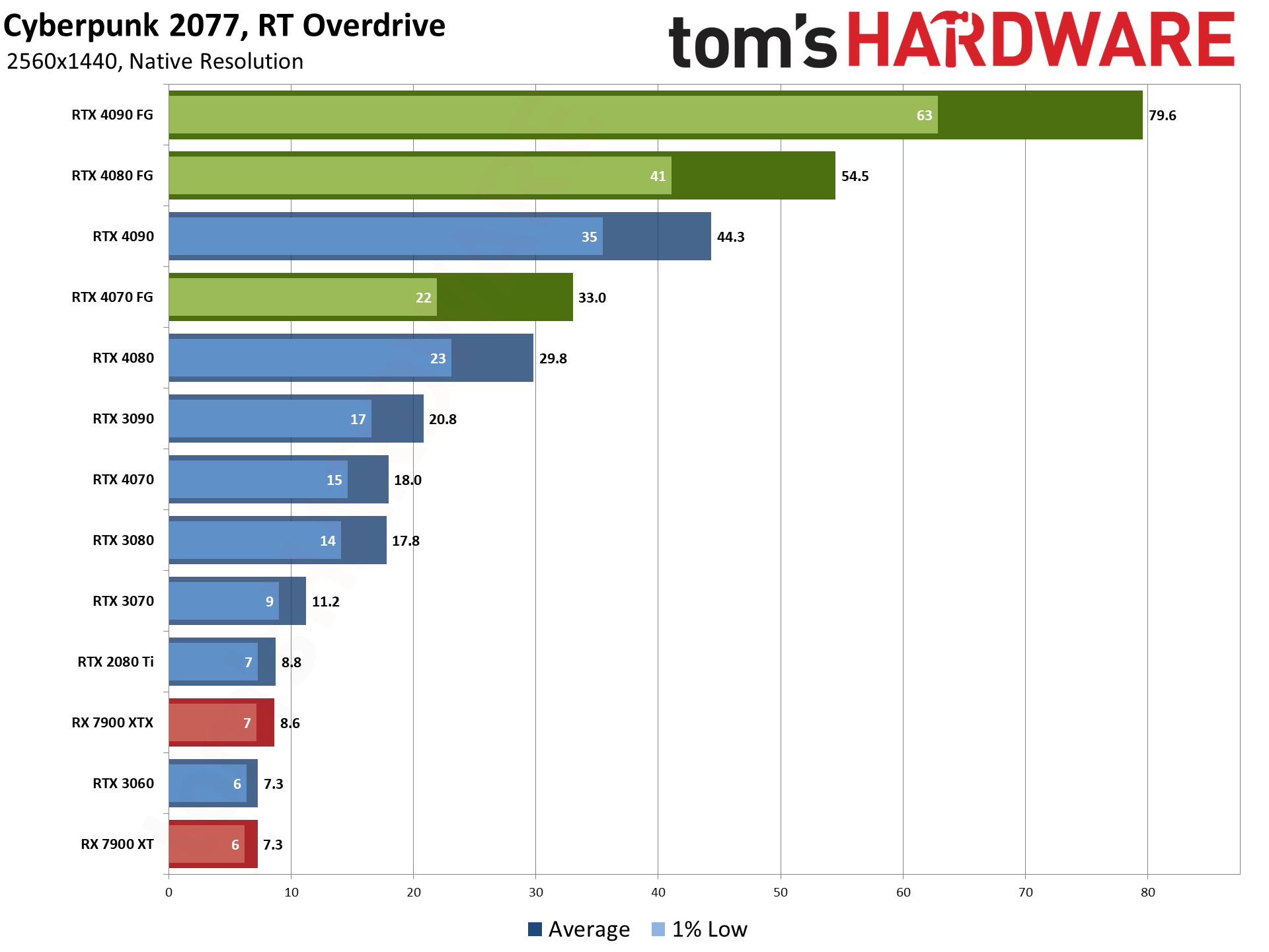

First, the good news: All of the RTX 40-series cards can break 30 fps at native 1080p. Sure, only the RTX 4090 can break 60 fps without help, but turning on Frame Generation boosts performance into the 50 fps and higher range on all of the cards. Nvidia's previous generation RTX 30-series parts are a bit less impressive, with the RTX 3080 just barely hitting 30 fps average while the 3090 gets a little bit of breathing room with a 35 fps result.

AMD and Intel GPUs on the other hand prove completely incapable of handling even 1080p native with full path tracing. The RX 7900 XTX can't even reach 20 fps, though it does just barely manage to beat Nvidia's first generation RTX 2080 Ti. The Arc A750 and A770, along with RX 6950 XT, RTX 2060, and other slower cards all fall into the single digits.

But why stop there? Looking at 1440p native performance, not even the RTX 4080 can break 30 fps without some form of AI enhancement. The RTX 4090 still manages a reasonably playable 44 fps, but nothing else will suffice. Even with Frame Generation, the RTX 4070 just barely squeaks past 30 fps, though the 4080 now reaches 55 fps and the 4090 sits at a comfortable 80 fps.

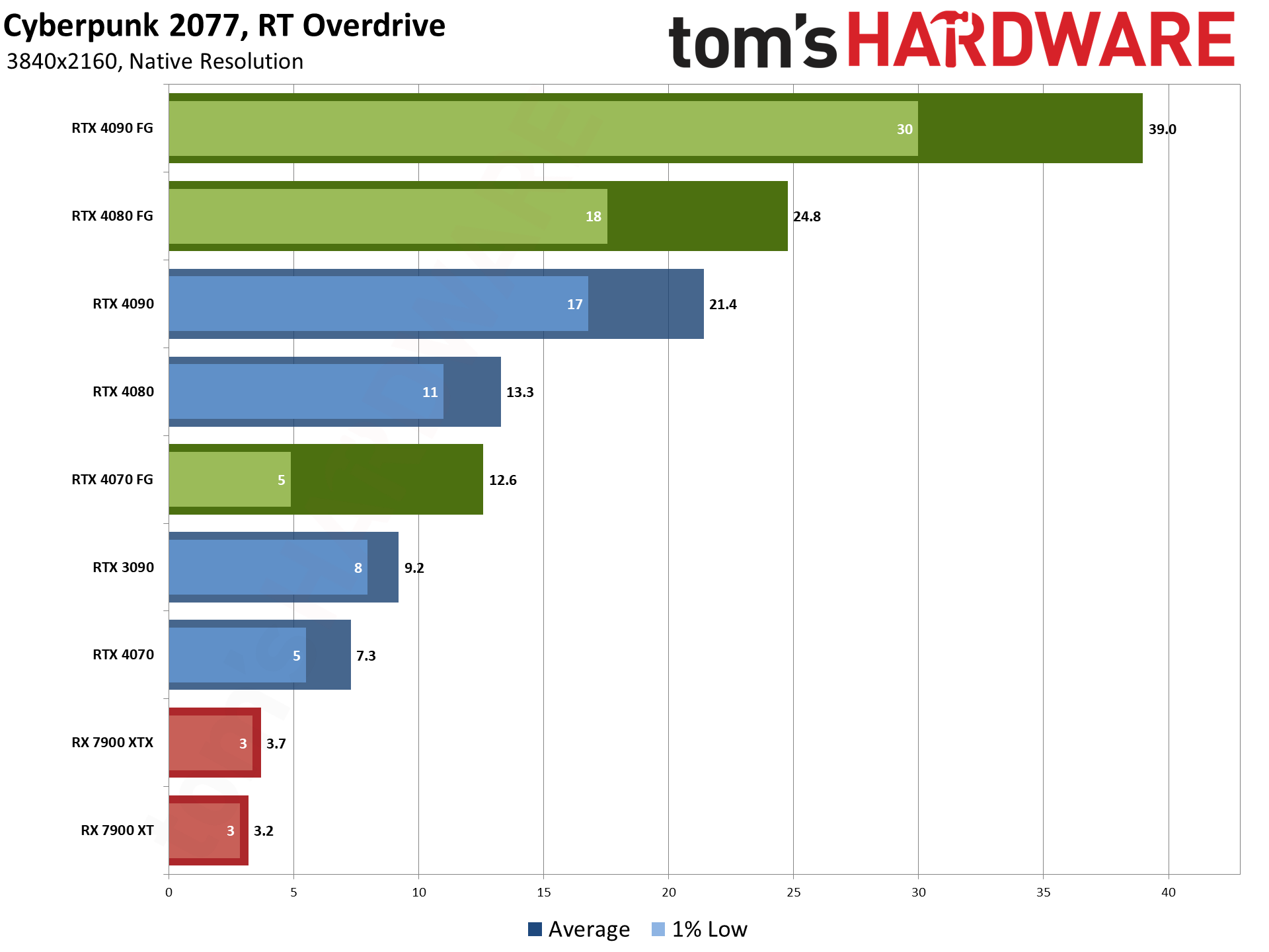

Finally, at 4K native, even the RTX 4090 manages just 21.4 fps. Frame Generation will push that up to 39 fps, an 82% improvement, but obviously more help is going to be needed.

While we're on the subject of native performance, though, notice a few things. First, performance scales almost perfectly with the number of pixels being rendered. The 4080 and 4090 deliver slightly better than linear scaling, but most of the remaining GPUs match up with the 78% and 125% increase in pixels when going from 1080p to 1440p, and from 1440p to 4K. Let's take two examples.

The RX 7900 XTX got 16.7 fps at 1080p and 8.6 fps at 1440p, slightly worse than the 9.4 fps that perfectly linear scaling with pixels rendered (a 1.78X decrease) would predict. From 1440p to 4K, it dropped to 3.7 fps (3.8 fps would have been a 2.25X reduction). Nvidia's RTX 3090 went from 34.9 fps to 20.8 fps, a 1.68X reduction, and moving to 4K dropped it to 9.2 fps, a 2.26X decrease. That bodes well for upscaling techniques, as Performance mode cuts the number of pixels that need to be path traced to one-fourth.
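The linear-scaling check above can be reproduced with a few lines of Python, using only the RX 7900 XTX and RTX 3090 numbers quoted in this article. It's a sketch that treats "perfect" scaling as framerate dividing by the pixel-count ratio; it says nothing about why a given GPU deviates from that line.

```python
# Compare measured native framerates with perfect pixel-linear scaling.
RES = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}

# Native RT Overdrive results quoted in the article (fps).
measured = {
    "RX 7900 XTX": {"1080p": 16.7, "1440p": 8.6, "4K": 3.7},
    "RTX 3090": {"1080p": 34.9, "1440p": 20.8, "4K": 9.2},
}


def pixel_ratio(lo: str, hi: str) -> float:
    """How many times more pixels resolution `hi` has than resolution `lo`."""
    (wl, hl), (wh, hh) = RES[lo], RES[hi]
    return (wh * hh) / (wl * hl)


def scaling_report(fps: dict) -> list:
    rows = []
    for lo, hi in [("1080p", "1440p"), ("1440p", "4K")]:
        ratio = pixel_ratio(lo, hi)
        predicted = fps[lo] / ratio    # fps if performance tracked pixels exactly
        actual_drop = fps[lo] / fps[hi]
        rows.append((lo, hi, ratio, predicted, fps[hi], actual_drop))
    return rows


for gpu, fps in measured.items():
    for lo, hi, ratio, predicted, actual, drop in scaling_report(fps):
        print(f"{gpu} {lo}->{hi}: pixels x{ratio:.2f}, linear predicts "
              f"{predicted:.1f} fps, measured {actual} fps ({drop:.2f}X drop)")
```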

The other thing we want to note is that Frame Generation only gets Nvidia so far. The RTX 4070 for example got 33 fps at 1440p with FG enabled, but it still feels and plays like ~19 fps. The same goes for 4K on the 4090: 39 fps "performance" that feels like 21 fps. Also, Frame Generation totally broke down on the RTX 4070 at 4K. While the average increased to 12.6 fps from 7.3 fps, there were severe rendering errors. Our best guess is that the OFA (Optical Flow Accelerator) just isn't cut out to interpolate between frames when there's too much change happening. Or maybe it's just a bug, but either way 13 fps isn't going to be usable.
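Frame Generation's chart numbers and its feel can be separated with a little arithmetic. This minimal sketch assumes, as described above, that responsiveness tracks the base rendered framerate rather than frames to screen, and it ignores the extra latency Frame Generation itself adds; the inputs are the native results quoted in this article.

```python
# Sketch: Frame Generation uplift versus the framerate that samples input.
# Assumption: perceived responsiveness follows the base (rendered) framerate;
# the additional latency introduced by Frame Generation is ignored.


def fg_summary(base_fps: float, fg_fps: float) -> dict:
    return {
        "uplift_pct": (fg_fps / base_fps - 1) * 100,
        "presented_ms": 1000 / fg_fps,   # interval between frames shown on screen
        "rendered_ms": 1000 / base_fps,  # interval between frames that react to input
    }


# Native rendering results quoted in the article: (without FG, with FG).
cases = {
    "RTX 4090 4K": (21.4, 39.0),
    "RTX 4070 4K": (7.3, 12.6),
}

for name, (base, fg) in cases.items():
    s = fg_summary(base, fg)
    print(f"{name}: +{s['uplift_pct']:.0f}% frames to screen, "
          f"{s['presented_ms']:.1f} ms shown vs {s['rendered_ms']:.1f} ms rendered")
```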


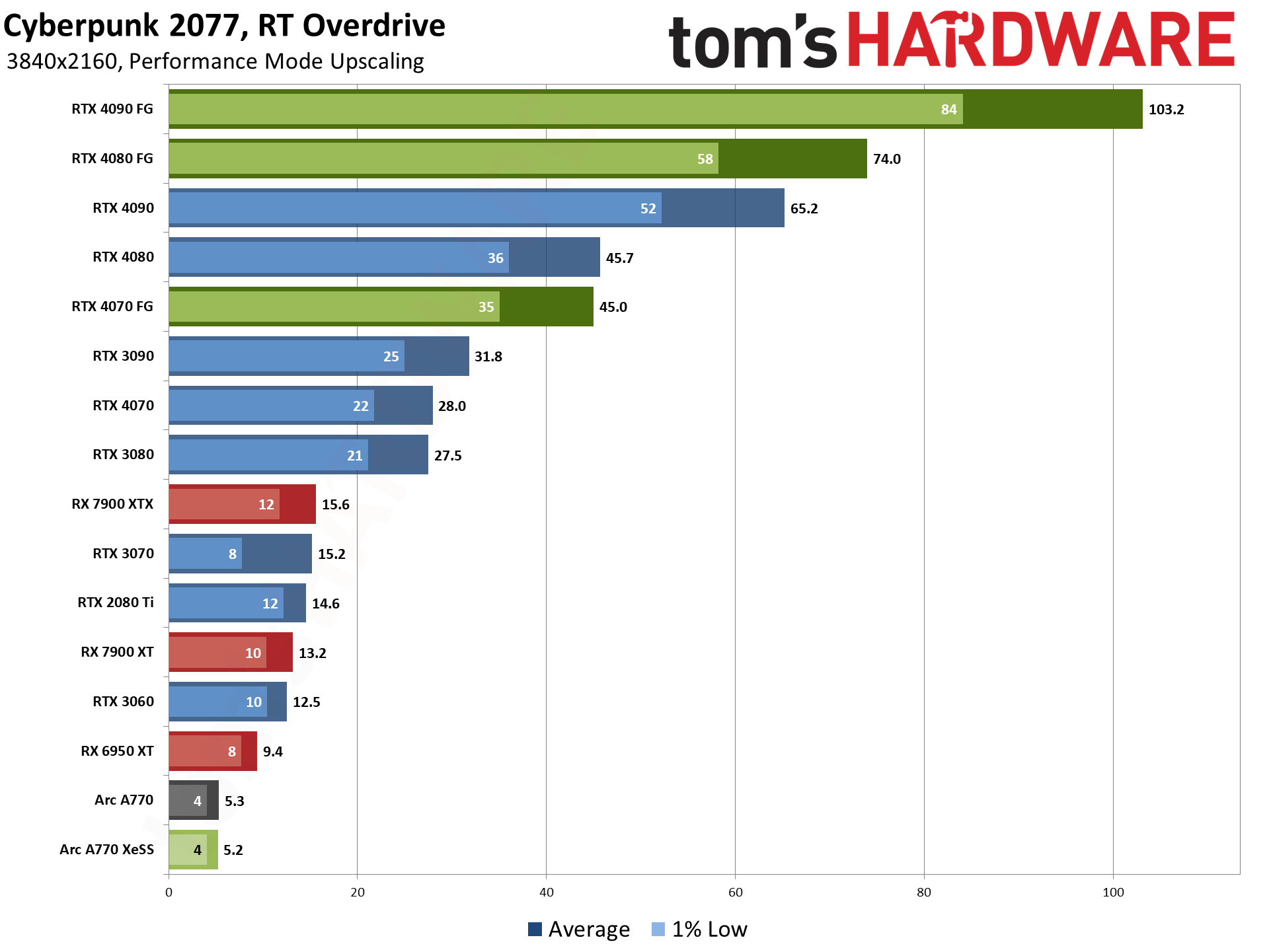

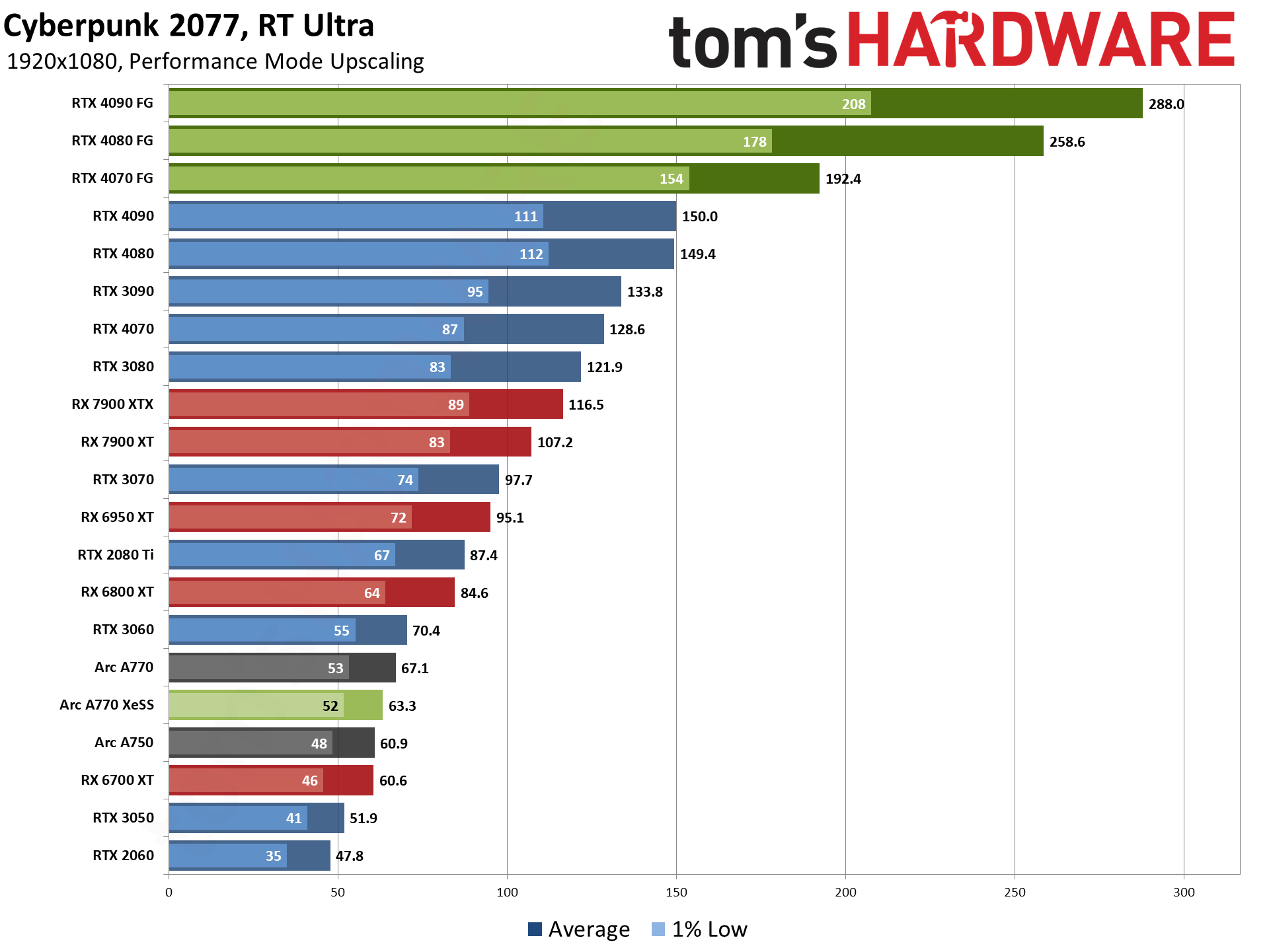

Cyberpunk 2077 RT Overdrive: Performance Upscaling

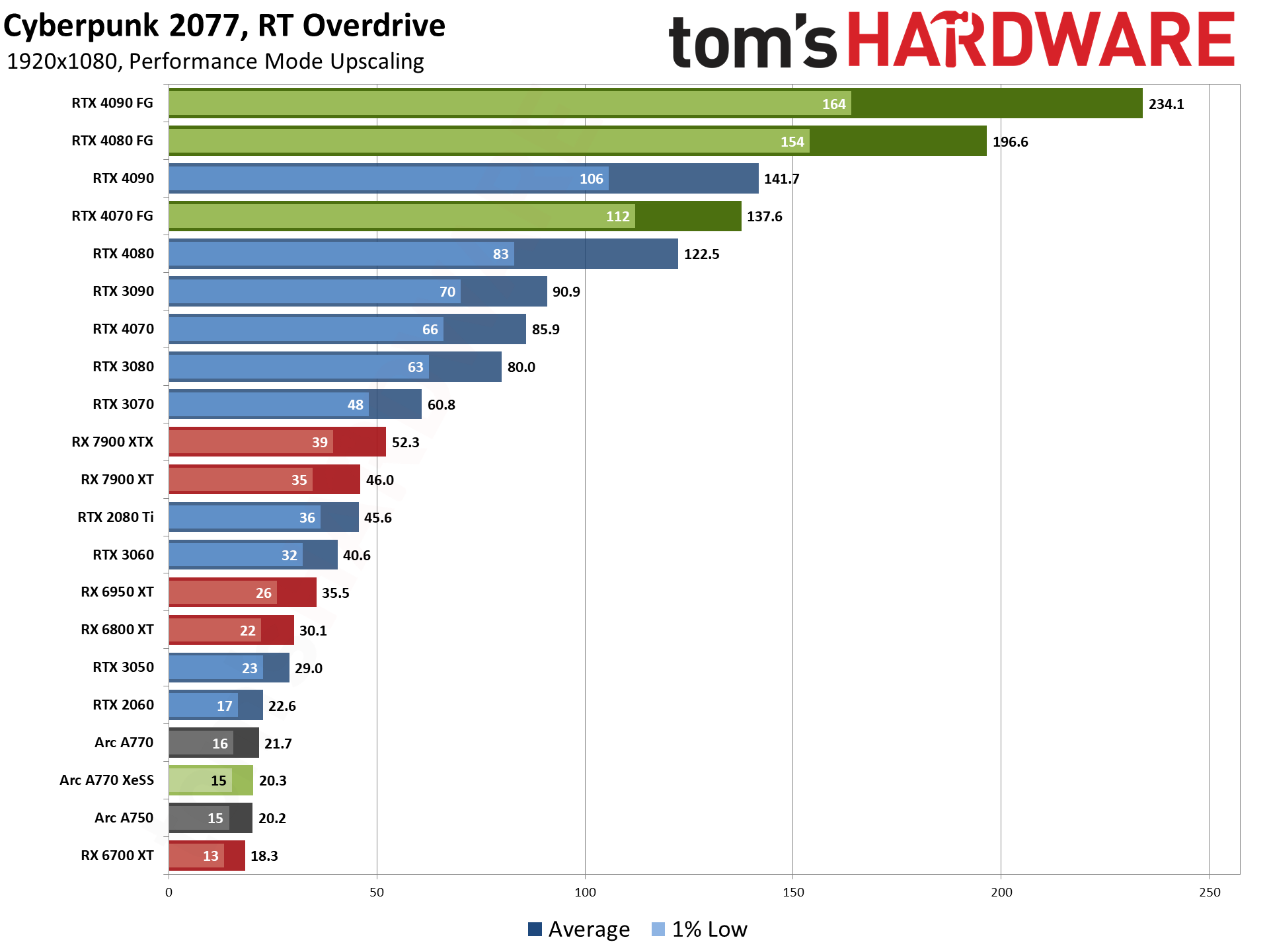

Going from native to Performance mode upscaling is a big jump. 4K with Performance upscaling renders internally at 1920x1080, for example, so it should perform roughly the same as native 1080p (minus a bit due to the upscaling computations). That's not enough to push everything into the "playable" range, even at 1080p, but it's a start.

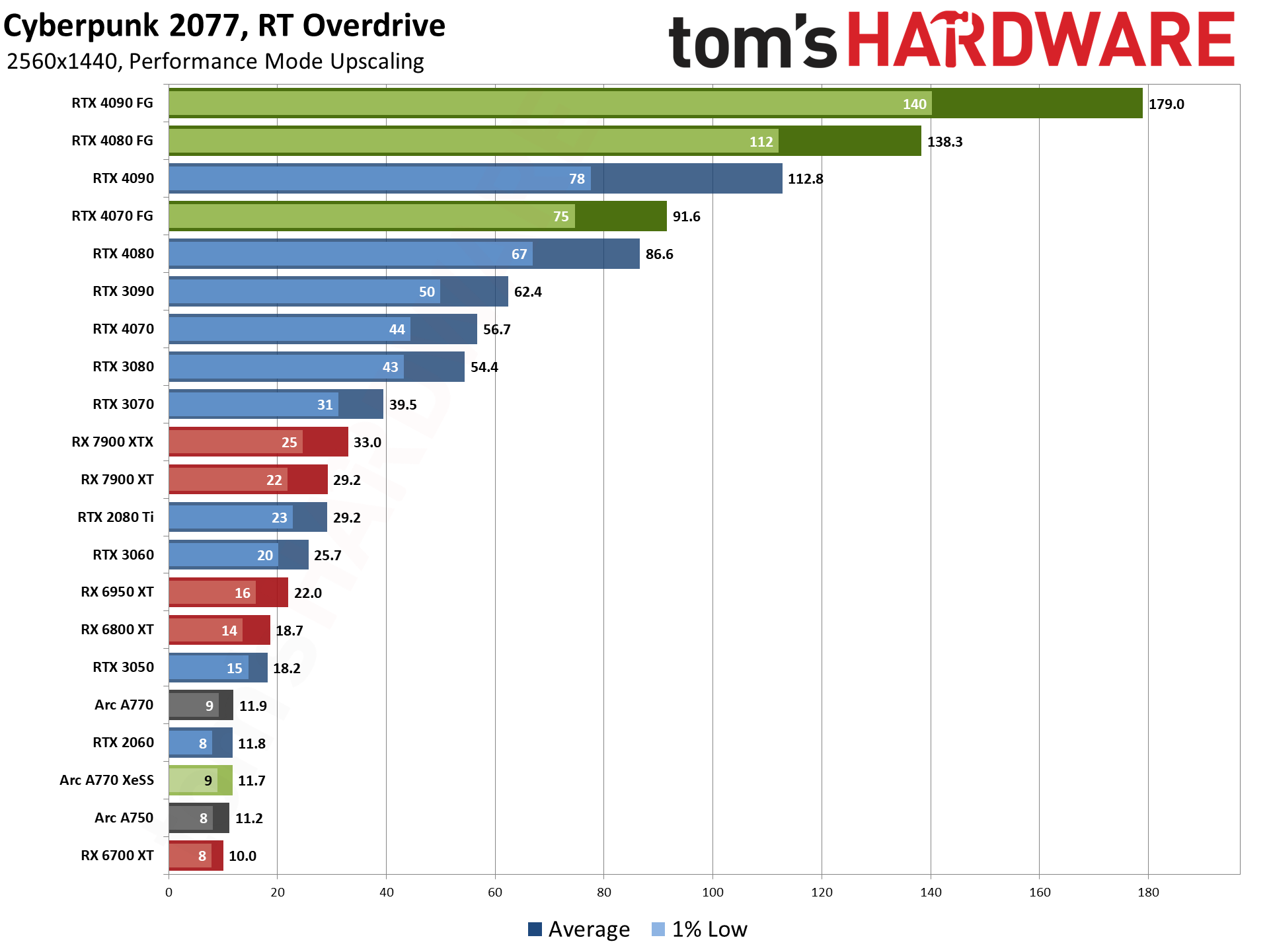

Obviously, Nvidia's 40-series parts with Frame Generation enabled look amazing if you're only seeing this chart. Again, those huge gains don't necessarily correlate directly with the feel of the game, but given we're seeing base performance (without FG) of 86 fps on the RTX 4070, you probably won't notice the slight increase in latency, and if you have a 144Hz or 240Hz monitor, you might even notice the slight benefit in higher frames to screen.

Without Frame Generation, the RTX 4090 now hits 142 fps, which is getting close to the CPU limit. The RTX 3090 and 3080 now easily break 60 fps, even on 1% lows, while the RTX 3070 just barely edges past the 60 fps mark. Note also that the RTX 4070, which was tied with the 3080 on average fps and slightly slower on 1% lows, now holds a slight performance advantage. That's likely thanks to the superior ray tracing hardware and tensor cores in the Ada Lovelace architecture.

AMD's RX 6800 XT and up are now technically playable at 30 fps or more, though really it's only the 7900 XT/XTX that truly reach acceptable levels of performance. Even an RTX 3060 comes out ahead of the RX 6950 XT, while the rather weak RTX 3050 nearly matches the RX 6800 XT. But there are plenty of GPUs that still need more help, even for 1080p gaming with path tracing.

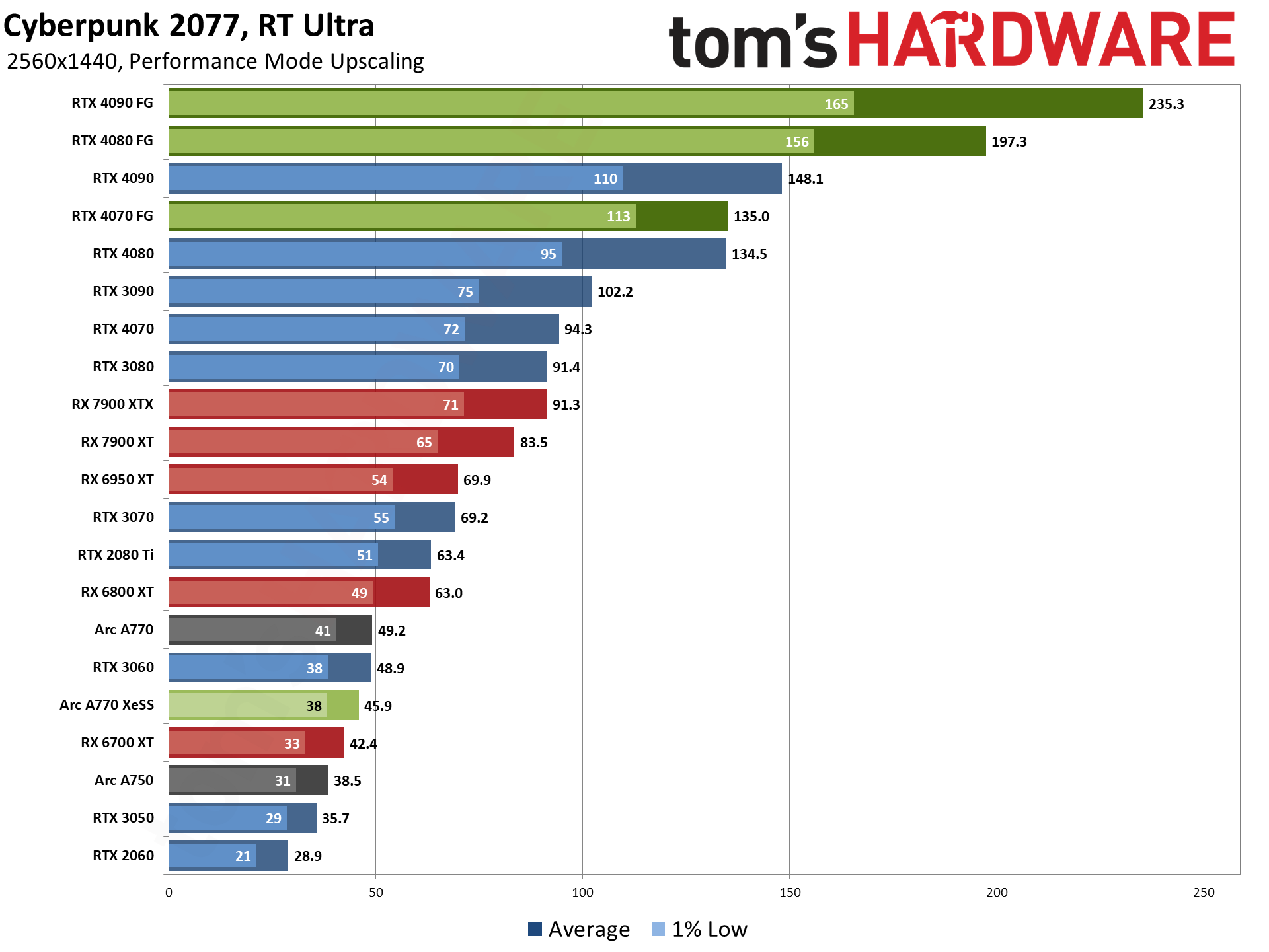

Before we go there, let's quickly check the 1440p and 4K results. Again, 40-series with DLSS 3 Frame Generation enabled all reach very good performance levels — you can definitely play Cyberpunk 2077 with RT Overdrive settings at 1440p, provided you turn on upscaling and Frame Generation. The previous generation RTX 3090 does barely manage to break 60 fps, and again the RTX 4070 (without FG) comes in just a bit ahead of the RTX 3080. That's likely due to the SER and OMM features supported on Ada, which the RTX 30-series lacks.

AMD's RX 7900 XTX is the only non-Nvidia card to break 30 fps, while Intel's best solution can't even break into the teens. But let's quickly note that FSR2 provided better performance on the Arc A770 than XeSS 1.1. That's an interesting result, but it's tempered by the fact that XeSS looked better than FSR2: 4X upscaling without AI (meaning FSR2's algorithm) results in more noticeable "sparkling" on the edges of objects. Not that it matters too much, since even Performance upscaling at 1080p wasn't playable on Arc GPUs.

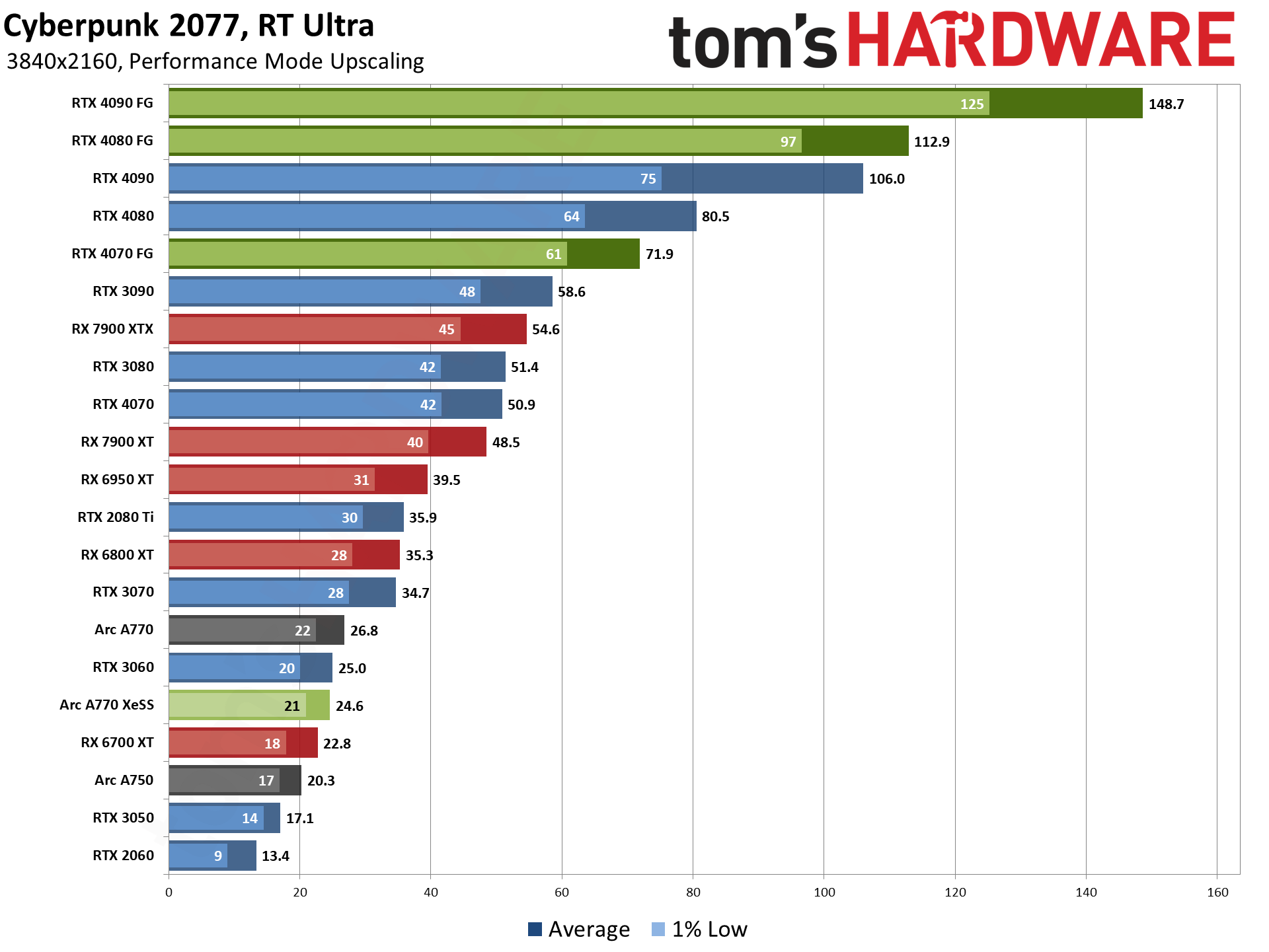

And finally, at 4K with Performance upscaling, the RTX 4090 still averages 65 fps without Frame Generation — about 7% slower than the native 1080p result, if you're keeping track. FG boosts that by 58%, while the RTX 4080 sees a slightly higher 62% uplift and the RTX 4070 gains 61%. Basically, the OFA hardware is the same on all of the 40-series parts, so the potential gains at a given resolution are generally in the same ballpark. As for previous generation GPUs, the RTX 3090 lands at 32 fps, technically still playable but not a great result.

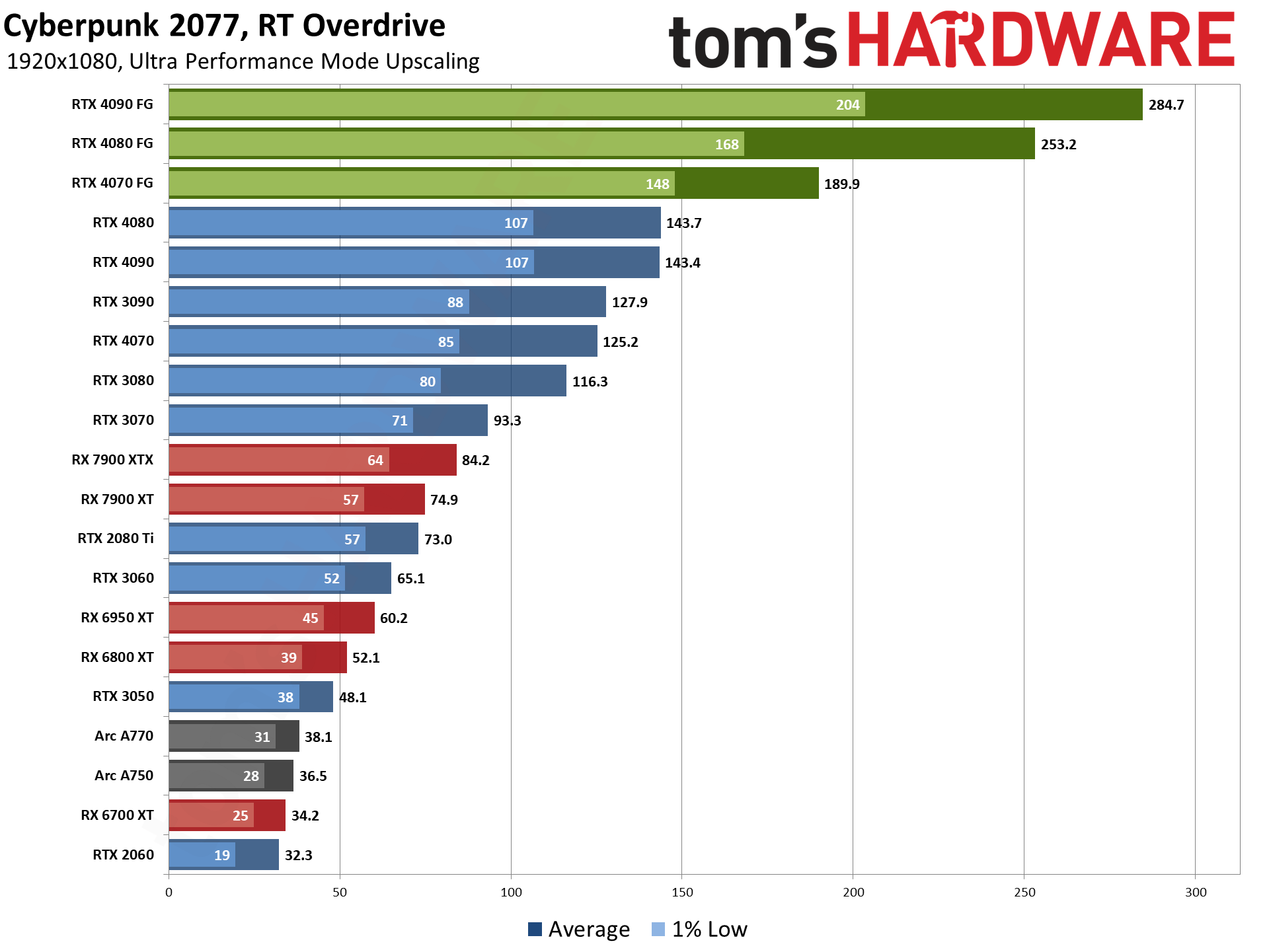

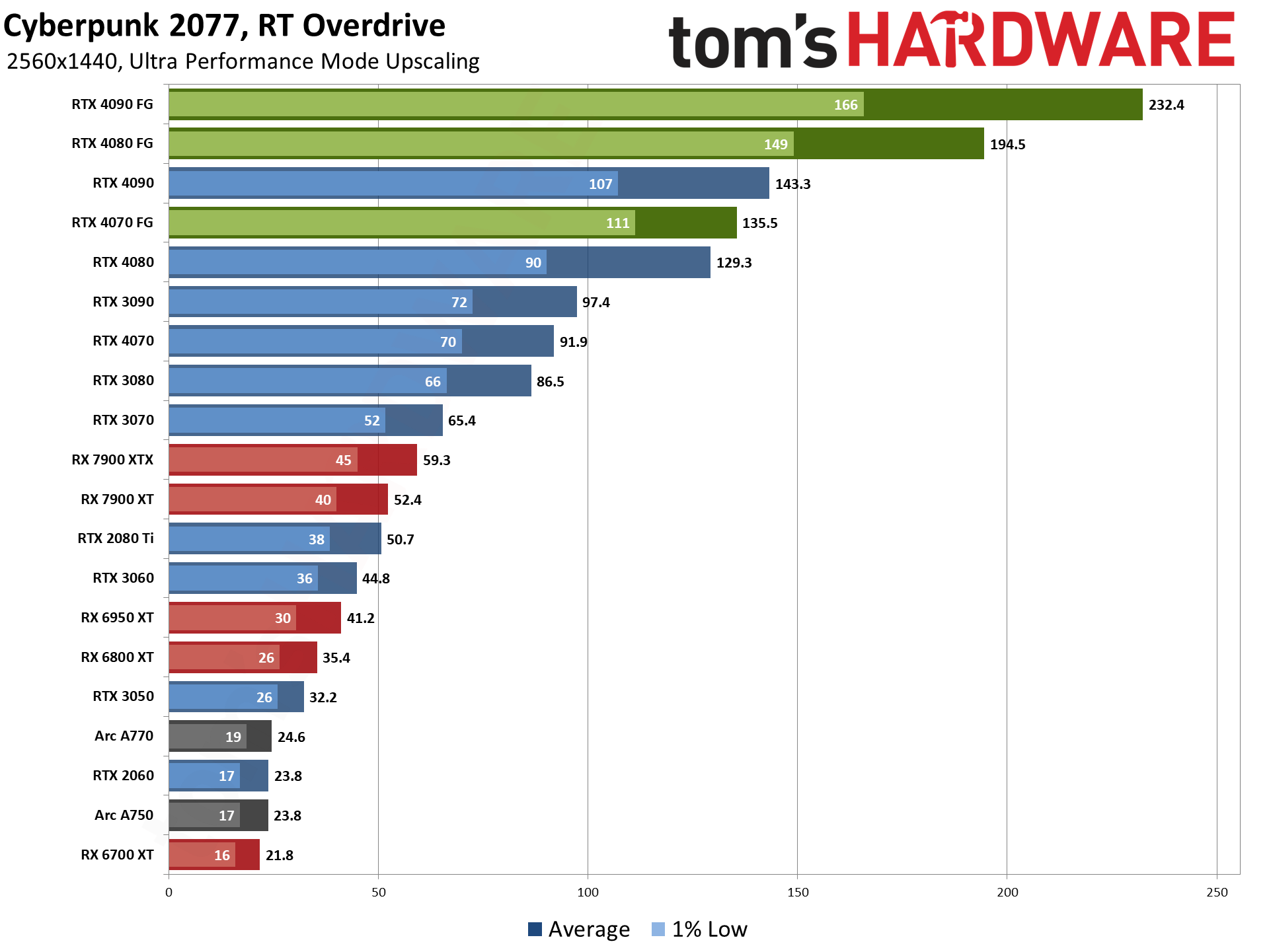

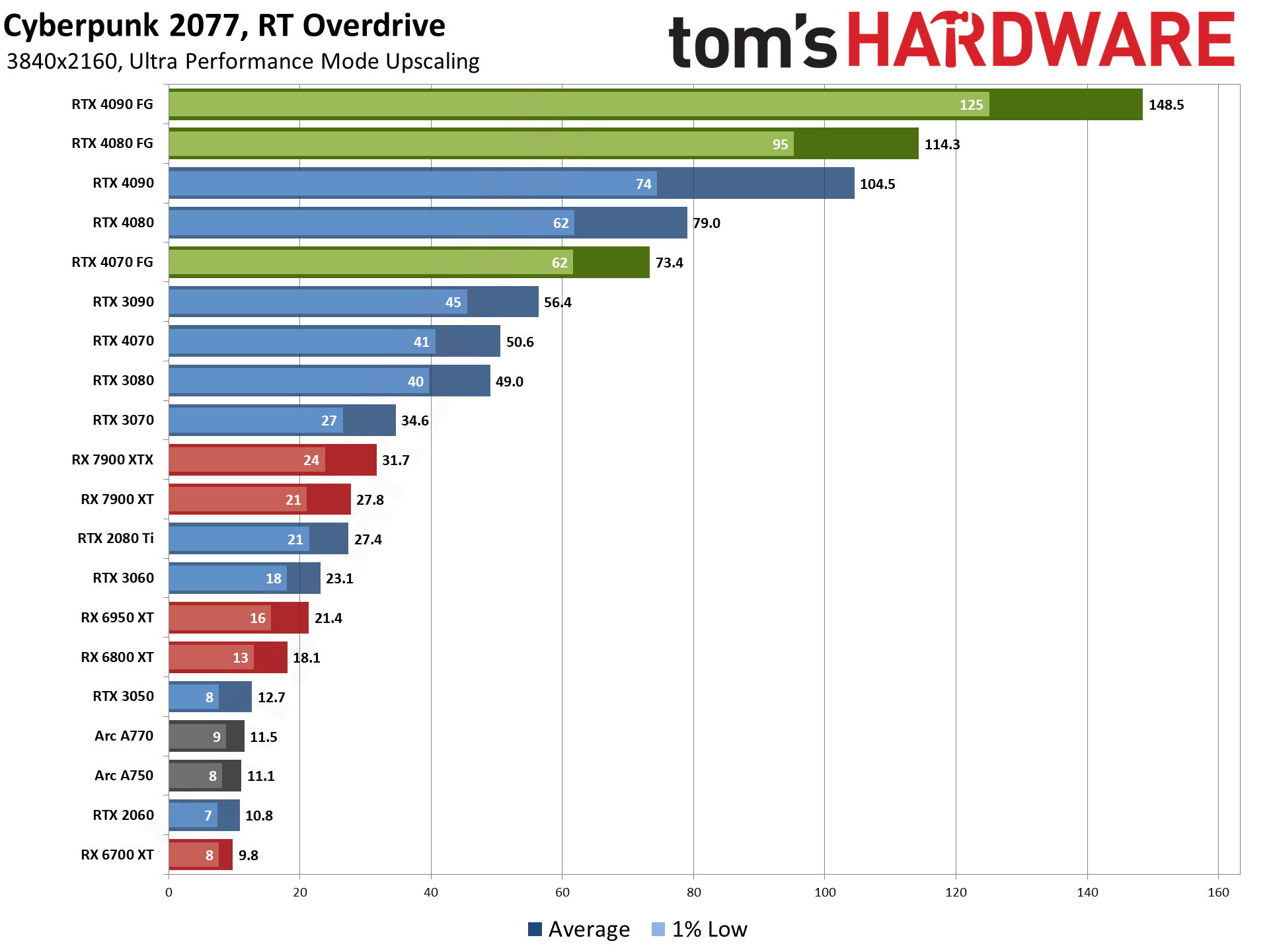

Cyberpunk 2077 RT Overdrive: Ultra Performance Upscaling

It's almost silly to talk about Ultra Performance upscaling modes (9X upscaling factor) as being something you'd have to use, but here we are. Originally intended "for 8K," many of the non-Nvidia GPUs (and even some of the slower Nvidia models) simply don't have the muscle to fully ray trace / path trace even 1920x1080 pixels. Chop that down to 640x360 on the other hand and suddenly we're in business.

Image fidelity is not great with this level of upscaling, but at least it's a foot in the door if you want to see for yourself how Cyberpunk 2077 looks in RT Overdrive mode. It's also worth noting that FSR2 doesn't seem to look as good with this upscaling mode as DLSS. That's not particularly shocking, as tripling the horizontal and vertical resolutions of the source content will always prove challenging, especially if you want to do that in a very short amount of time (i.e. at 60 fps).

First, notice that the RTX 4080 and 4090 are basically maxed out for 1080p performance, but interestingly the 4090 still has higher Frame Generation performance. That suggests the OFA isn't the only thing involved with Frame Generation, and that the 4090's extra shader cores (or tensor cores) still help.

With the base resolution now set to 640x360 (before 9X upscaling), everything we tested manages to hit 30 fps, though that's not saying much for the RTX 2060, RX 6700 XT, and Arc GPUs. Nvidia's slowest RTX card is definitely held back by only having 6GB of VRAM, as in other games it generally beats the RTX 3050. Then again, there are some architectural updates that favor the 3050, and we might be seeing those here: the 3070 and 2080 Ti typically perform at similar levels, but here the 3070 leads by 28%.

AMD's RX 6700 XT meanwhile represents about the lowest AMD GPU we'd even consider using with complex ray tracing turned on; the RX 6650 XT and below still support the feature, but it's not of much practical use (and the same goes for the Intel Arc A380). Also notice that Intel's two Arc GPUs outperform the 6700 XT in this particular workload, another indication of Intel's better RT support. We suspect Intel will be able to further improve performance in this mode as well, maybe not to RTX 3060 levels but probably at least enough to place ahead of the RTX 3050.

Moving up to 2560x1440, with a base resolution of 853x480, performance ends up being pretty similar to what we saw at 1080p with Performance mode upscaling (i.e. from 960x540). Most of the GPUs are slightly faster at 1440p with Ultra Performance upscaling, and the RTX 3050 now clears the 30 fps mark, but otherwise the standings remain the same.

Finally, 3840x2160 with Ultra Performance mode (upscaled from 1280x720) also looks nearly the same as 1440p with Performance mode (also upscaled from 1280x720). You'll still need an RTX 3070 or RX 7900 XTX to break the 30 fps barrier in either case, but upscaling to the higher output resolution ends up being a bit more demanding.
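Those equivalences fall out of the internal render resolutions. This sketch, assuming the same per-axis factors of 2 (Performance) and 3 (Ultra Performance) used elsewhere in this article, groups each output resolution and mode by the resolution that actually gets path traced.

```python
from collections import defaultdict

OUTPUTS = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}
# Assumed per-axis scale factors: Performance = 2 (4X pixels), Ultra Performance = 3 (9X).
MODES = {"Native": 1, "Performance": 2, "Ultra Performance": 3}


def internal(width: int, height: int, scale: int) -> tuple[int, int]:
    # Integer division reproduces the 853x480 base resolution for 1440p Ultra Performance.
    return width // scale, height // scale


# Map each internal (path traced) resolution to every output/mode combination using it.
groups = defaultdict(list)
for out_name, (w, h) in OUTPUTS.items():
    for mode, scale in MODES.items():
        groups[internal(w, h, scale)].append(f"{out_name} {mode}")

# Print from the cheapest internal resolution to the most expensive.
for (w, h), configs in sorted(groups.items(), key=lambda kv: kv[0][0] * kv[0][1]):
    print(f"{w}x{h} ({w * h:,} px): {', '.join(configs)}")
```

Matching internal resolutions explain why the framerates line up; the remaining gap comes from the cost of upscaling to a larger output.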

"Pure" Ray Tracing Performance

We noted that Cyberpunk 2077's Overdrive setting is our first look at how demanding future games might become when they implement "pure" ray tracing engines. This isn't the first game to utilize what Nvidia calls path tracing, but the previous attempts have all been far less complex environments. Quake II RTX is the oldest, a game that first launched in 1997. Minecraft RTX came next in the path tracing releases, and while the original game launched in 2009, it was intentionally less demanding on the graphics side of the equation (prior to ray tracing). Even Portal RTX represents a game that originally launched in 2007.

So making the jump to a game engine like Cyberpunk 2077, which came out in 2020 and was already one of the more demanding games around, represents a major step forward. And the graphics still don't look massively different, but it proves that we now have hardware (RTX 4080 and 4090 in particular) that can legitimately provide a good gaming experience via full "path tracing" in 2023.

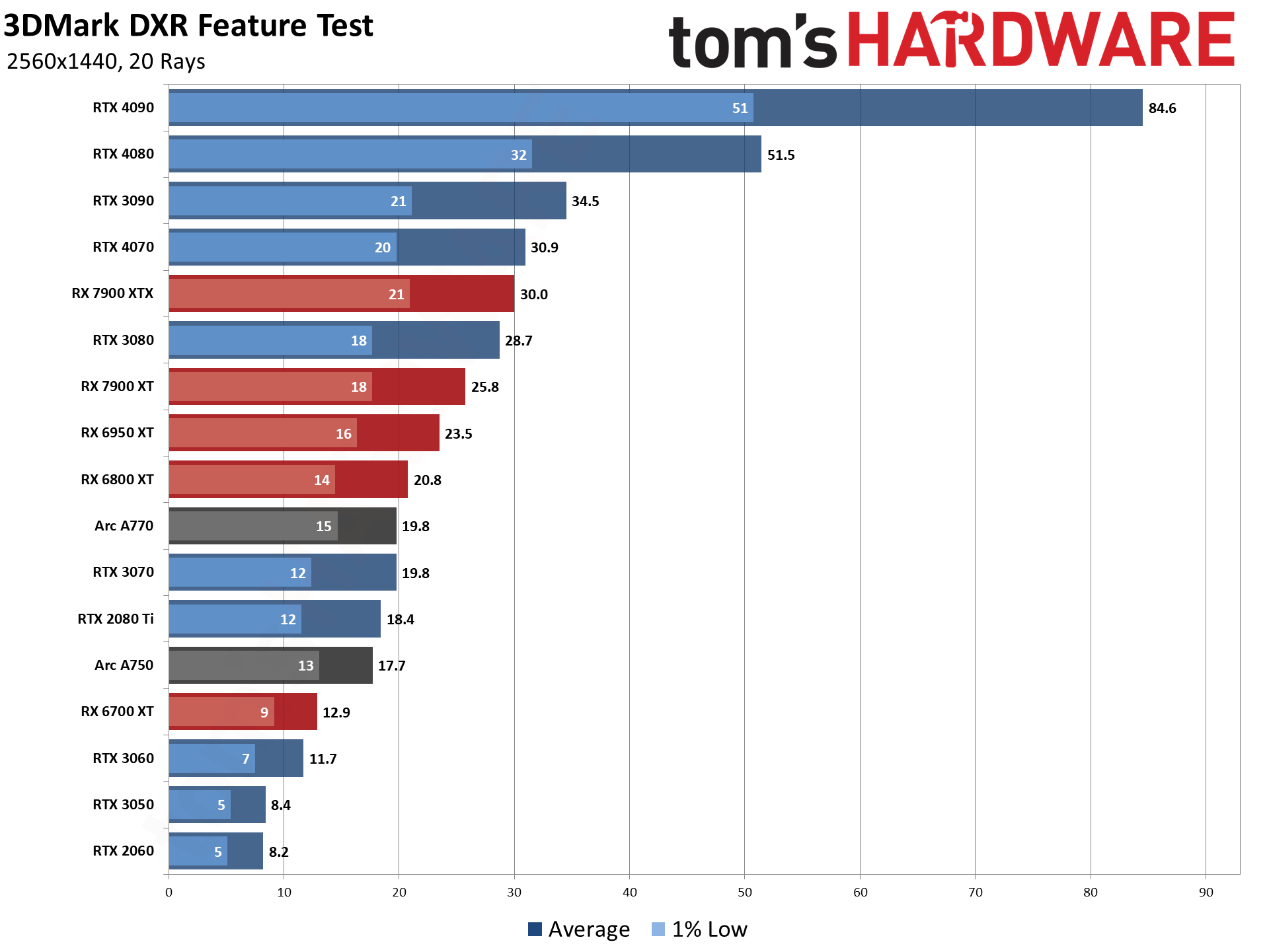

But we also have at least one reasonable synthetic benchmark that provides a slightly different look at what full ray tracing performance might look like from the various GPUs. 3DMark Port Royal has a separate DXR Feature Test that runs at 2560x1440 and implements full ray tracing in real time, with 20 rays per pixel as the maximum setting. Here's how Cyberpunk 2077 Overdrive performance at native 1080p looks compared with the DXR Feature Test, on the same collection of GPUs.

If we're talking about hardware specific optimizations, we have to think that 3DMark is going to be far more agnostic than Cyberpunk 2077. Also, we've dropped the Frame Generation results from the charts, since we're interested in native full ray tracing performance. Still, there are a lot of differences between these two charts.

First, the gap between the 4090 and 4080 becomes quite a bit larger with the 3DMark DXR Feature Test. Curiously, raw fps on a lot of the Nvidia cards ends up being pretty close in the two benchmarks, but Cyberpunk 2077 has the RTX 4090 as one major exception, topping out at 70 fps compared with 85 fps in 3DMark. That's a 21% difference, where the other RTX cards range from almost no difference at all (1% on the 3090) to at most 11% (the 2060, which probably has issues with 6GB VRAM in Cyberpunk 2077).

But the differences for non-Nvidia hardware are quite a bit more dramatic. In the 3DMark test, AMD's RX 7900 XTX performs 80% better than it does in Cyberpunk 2077. Similarly large gaps appear on other AMD GPUs: 7900 XT is 86% faster in 3DMark, the 6950 XT is 142% faster, 6800 XT is 163% faster, and the 6700 XT is three times as fast. On the Intel Arc GPUs, the A770 16GB is 236% faster in 3DMark, while the A750 is 'only' 205% faster.
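Percentages like "236% faster" are easy to misread, so here's a tiny helper that converts between "X% faster" and "N times as fast." The 85 and 70 fps inputs are the RTX 4090 results above; the other percentages are the ones quoted for AMD and Intel, with "three times as fast" treated as 200% faster.

```python
def pct_faster(a_fps: float, b_fps: float) -> float:
    """How much faster result a is than result b, in percent."""
    return (a_fps / b_fps - 1) * 100


def multiplier(pct: float) -> float:
    """Convert an 'X% faster' figure into an 'N times as fast' ratio."""
    return 1 + pct / 100


print(f"RTX 4090: 3DMark is {pct_faster(85, 70):.0f}% faster than Cyberpunk 2077")
for gpu, pct in [("RX 7900 XTX", 80), ("RX 6700 XT", 200), ("Arc A770 16GB", 236)]:
    print(f"{gpu}: {pct}% faster = {multiplier(pct):.2f}X as fast")
```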

Now, that doesn't necessarily mean that Cyberpunk 2077 was intentionally optimized for Nvidia's hardware... but that's the most likely explanation for most of the difference. Driver optimizations (or rather, the lack thereof) for Cyberpunk's Overdrive mode are another possibility, but regardless, there are plenty of reasons to take the current AMD and Intel results in this particular game with a healthy dose of skepticism. Granted, 3DMark isn't an actual game, and the way it renders means we're not looking at a pure apples-to-apples comparison. Still, its results should be relatively indicative of what we can expect from a properly optimized engine and drivers.

Cyberpunk 2077 RT Ultra: Performance Upscaling

Finally, just to provide a more realistic view of real-world gaming performance, here are results using Cyberpunk 2077's previous RT Ultra preset that doesn't implement full path tracing. We've run this test many times over the years, but unlike in our usual GPU benchmarks, here we're enabling Performance mode upscaling on everything.

If you've previously played Cyberpunk 2077 at maxed out settings with upscaling and managed to get far more playable results than what we're showing with RT Overdrive mode, that's because RT Ultra mode is far less taxing on the ray tracing hardware units.

Intel's Arc GPUs run about 3X faster in RT Ultra mode at 1080p, and up to 4X faster at 1440p. AMD's RX 7900 cards are over twice as fast at 1080p, 3X faster at 1440p, and almost 4X faster at 4K. The same mostly applies with the RX 6000-series GPUs we've tested.

It might not look as nice, but anyone not using a high-end Nvidia card will practically be forced to opt for RT Ultra settings rather than path tracing at this point in time.

Cyberpunk 2077 Closing Thoughts

For what should be obvious reasons (see the "Pure RT" section), we're not convinced these performance results are fully representative of non-Nvidia GPUs. That's fine in some ways, as Nvidia likely helped out a lot with the programming side of things, not to mention this is labeled as a "Technology Preview." Still, previews can be used for marketing purposes, and this one shows once more that it's absolutely possible to wildly skew a game engine to favor one architecture over others.

Even disregarding the performance questions, I really do wish that the visual difference between RT Overdrive and RT Ultra modes was more noticeable. It looks better in many ways, but a lot of the time it just looks different, at least to me. Perhaps I'm too acclimated to the way "unnatural" rendering techniques look and I'm just not bothered by them that much. What's very noticeable is that path tracing can cut framerates by 50–75 percent. If you're not using an Nvidia GPU, the path tracing technology preview is more of a curiosity than anything you should seriously consider using.

Looking forward, though, if we can already get a full path tracing engine implemented in Cyberpunk 2077, certainly other games could try this as well. I'd love to see a developer try to create a game from the ground up with the goal of using full ray tracing, with no hybrid rendering support, and then hear some thoughts on how much that did or didn't help with the creation of art assets, level design, and such.

The bottom line is that we're still a solid generation or two away from full ray tracing being viable for even half of PC gamers. Yes, the RTX 4090 can chew through Cyberpunk 2077 in RT Overdrive mode and provide pretty impressive performance even at 4K, provided you enable 4X upscaling and DLSS 3 Frame Generation. But the latest Steam Hardware Survey puts the share of gamers with an RTX 4090 at just a quarter of a percent.

In fact, if you sum up all of the RTX 40-series results, it's still less than 1% of gamers. (That's using the February 2023 results, as the March results look a bit mangled.) Take that a step further; at best, 44% of Steam users have a card with any ray tracing support. Drop everything below the RTX 2080 and RTX 3060 (because such cards are "too slow" for DXR), and only about a quarter of gamers have at least reasonably fast ray tracing support.

But we're clearly improving in performance and features, and as questionable as Frame Generation can be in some situations, such technologies aren't going away. That goes double if you're looking at fully ray traced games. Consider that the RTX 3060 performs about the same as an RTX 2070 Super and project that forward. Once we have "RTX 5060" GPUs performing at around RTX 3080 levels and selling for (hopefully / we're dreaming) $350, full ray tracing implementations could truly reach the tipping point.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


edzieba: It would be worth having a look at Digital Foundry's coverage: https://www.youtube.com/watch?v=I-ORt8313Og

Once you know what to look for - particularly when it comes to global bounce-light and reflections of lit objects - the differences between the full rasterised GI in RT Psycho vs. the raytraced GI in RT Overdrive become much more clear. e.g. the splitscreen at 06:40 where the raster GI solution results in a main scene looking pretty ok (though with no bounce-lighting on any surface not directly lit by the scene light) but with its reflection being completely lit in the wrong colour. That's going to be a general theme with raster vs. RT GI - if the raster GI probe is different from the as-lit scene (e.g. you have a high temperature global light to match a sunny sky but the local environment is lit by a low-temp point light) then RT GI will produce a correct output, but raster GI will end up completely wrong.

InvalidError Looks like Intel has a lot of Cyberpunk RT Overdrive work to do in its drivers. The A750 should at the very least be wiping the floor with the RTX 3050.

Endymio "...most gamers would likely prefer saving money on the cost of a new graphics card rather than helping a corporation save on game development costs..."

Despite Jarred's subtle anti-capitalist slam, the fact remains that consumers ultimately bear the costs of game development, not corporations. I also question the logic of saying ray tracing isn't necessary because of its impact on "muh framez". The average Hollywood CGI film is vastly more realistic than any game, even though it runs at a mere 30fps. Why? Better physics; better ray tracing. If you're already capable of generating more than 60-75 fps, the additional horsepower is better used for improved rendering, not more frames (I exclude the potential, but unlikely, case of an advantage for professional eSports gamers).

wr3zzz Competition will not let ray tracing be just a way to save on development costs. Time spent on pre-baked lighting scales with complexity. Without having to worry about the lighting not looking right, competition will drive developers to reallocate resources to adding detail to the game. Perhaps we could finally see a big leap in physics. I am guessing realistic destructible stuff will be a lot easier to add into games if ray tracing can take care of lighting all that stuff flying and falling, instead of relying on artists. More varied weather will finally look good without adding a lot of cost, too. Also, as we add more vertices to characters, ray tracing is almost a must for realistic faces to look good.

Gahl1k The built-in benchmark does a poor job of showing the difference between hybrid rendering and Path Tracing. You can easily notice the difference at the beginning of the game, especially when you get Dexter's quest (i.e., get in his car and talk to him about the bot quest). It looks like night and day.

In addition to that, Cyberpunk's Overdrive mode utilises four of Nvidia's algorithms; that's why it runs better on their cards. Your stance on taking this game's performance on other cards with a dose of scepticism is correct.

blacknemesist This feels like a mumbo-jumbo of misinformation put together by manipulation of data and data selection to guide the reader towards the conclusion the author wants to put out, all the while completely missing the point of WHY this mode is even available in an already pretty demanding game. Guess anything that is not pure raster is not to exist for some people.

Giroro Ray tracing continues to be a solution looking for a problem. It's a waste of silicon that is not at all worth the relentless RTX-era price hikes.

Doesn't Nvidia own Digital Foundry?

If not outright, then through their massive ad-buys and exclusive access that built DF and are needed to keep DF afloat? Maybe something has changed lately, but they definitely used to be at the bottom of the classic YouTube trap: Shilling hard to try and win ad money, affiliate commissions, and "exclusive access".

Don't get me wrong, they are important pioneers and mouthpieces for the direct-to-customer influencer-marketing industry. It's very powerful to be able to make an ad that people are more likely to believe than a NASA scientist... but I trust DF's opinion about Nvidia in the same way I would trust the Home Shopping Network, or George Foreman's opinions about tabletop electric grills.

Endymio said: ...the fact remains that consumers ultimately bear the costs of game development, not corporations.

Consumer is a dirty word. It's dehumanizing. I'm tired of being treated like a disposable consumer.

I would much rather be a customer in a mutually beneficial relationship with a provider of goods and services.

mhmarefat

blacknemesist said: Guess anything that is not pure raster is not to exist for some people.

Then how else can 4090 owner lord himself over lowly console gamer? Because console gamer is already reaching dangerously close (120 FPS)! Can you imagine the DREAD of having to be on par with other human beings?!

High FPS is the right of only a few and it belongs to them alone.

JarredWaltonGPU Multiple people here are missing the point. I'm primarily focused on performance here, and I discussed that at length. You're all getting hung up on the "RT Overdrive doesn't radically alter the way the game looks" point. Yes, it absolutely changes the lighting, it's more complex, etc. But it doesn't make the game feel completely different, other than the fact that it can bring most GPUs to their knees.

Digital Foundry spent way more effort on hyping up the image quality enhancements. Kudos to them. But for every part where they show RT Ultra or even RT Psycho (which generally doesn't look that different from RT Ultra) versus RT Overdrive, there are ten comparisons between Max Rasterization and RT Overdrive. That's because those differences are far more noticeable.

There will be rooms lit up by a light source that look far more "correct" with Overdrive than with RT Ultra or rasterization. It's still the same game underneath, however, so we're putting a bit of lipstick on a pig. Unless you love Cyberpunk, in which case maybe it's a fox. Whatever. If I hadn't already finished the game, sure, I'd turn on Overdrive and play it with DLSS upscaling and frame generation on a 4090. But I don't have a compelling need to go back and replay the game.

The guns still feel the same. The randomly generated people and cars that go nowhere are all the same. The quests are the same. But the lighting and shadows are different! Assuming you have a GPU capable of playing the game.

nimbulan It might be unnecessary, but it's the best kind of unnecessary: a fully playable tech demo in a full-fledged AAA game. And better yet, you can even reach a decent performance level with a last-gen GPU. Personally, I'm quite excited that this sort of new tech is no longer relegated to canned benchmarks for the better part of a decade before we actually get to play games that use it. I remember running a subsurface scattering benchmark on a 7800 GT!

Digital Foundry exists to analyze game graphics, especially cutting-edge graphics technology. Just because Nvidia has been the company pushing graphics technology the hardest doesn't mean that DF is "shilling" or "owned" by Nvidia; it's just the company providing the most relevant content for their channel.

Giroro said: Doesn't Nvidia own Digital Foundry?

If not outright, then through their massive ad-buys and exclusive access that built DF and are needed to keep DF afloat? Maybe something has changed lately, but they definitely used to be at the bottom of the classic YouTube trap: Shilling hard to try and win ad money, affiliate commissions, and "exclusive access".