Immersion Brings TouchSense Force To Unity Engine With Haptic Lab

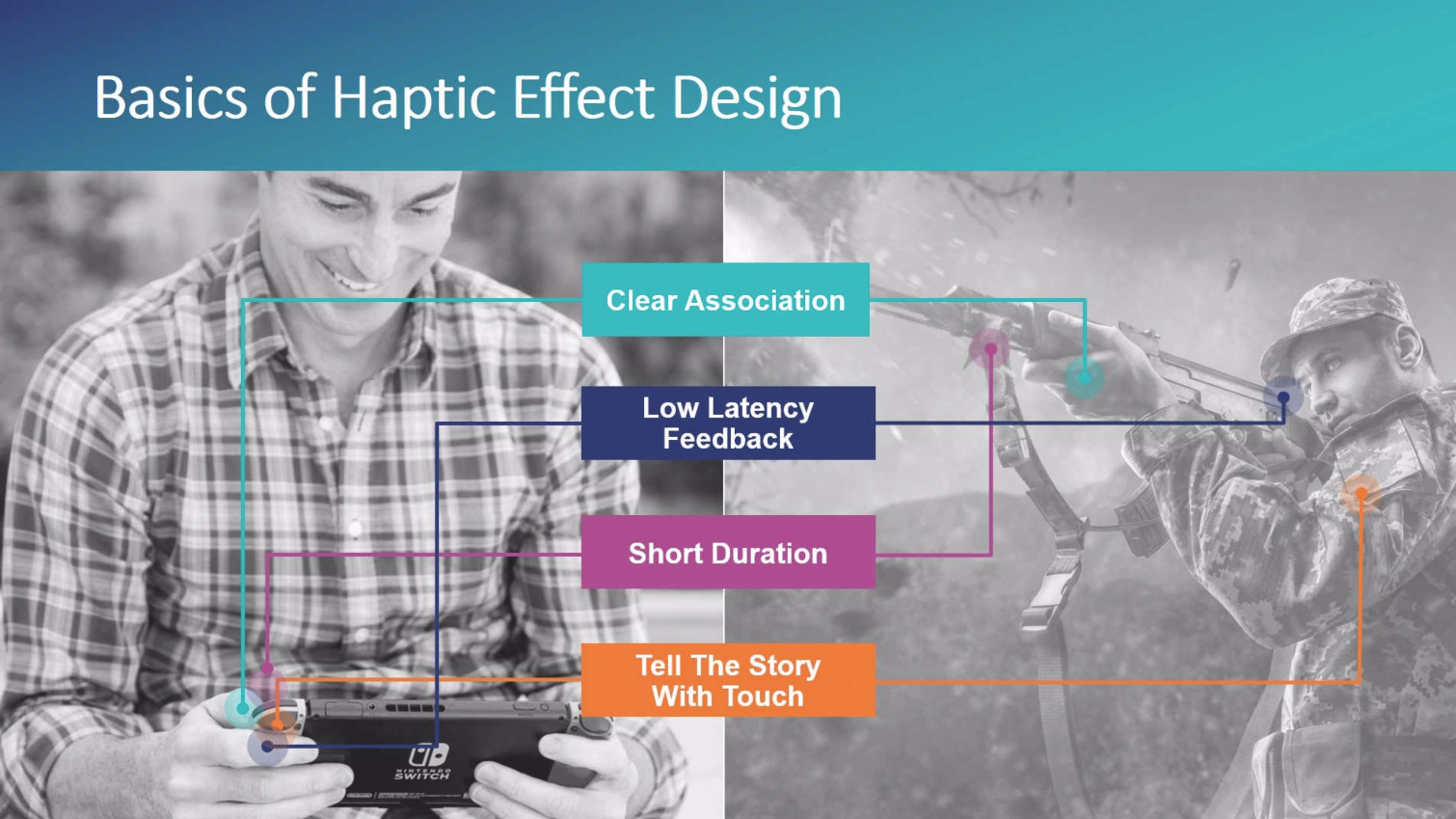

In February, Immersion revealed a haptic profile editing tool called TouchSense Force that enables developers to create complex force feedback profiles for a variety of game input devices without the need for complex code.

TouchSense Force offers a visual editor that resembles the sort of software you would expect an audio engineer or a video editor to use. Immersion said TouchSense Force could reduce the time it takes to calibrate a haptic response from multiple hours to a matter of minutes. It also offers the ability to make real-time changes to the vibration intensity of the linear resonant actuators. Without the visual editor, adjustments to haptic profiles require changes to the code that must be made by a developer. With the visual editor, animators can create haptic responses while they fine-tune their animations.

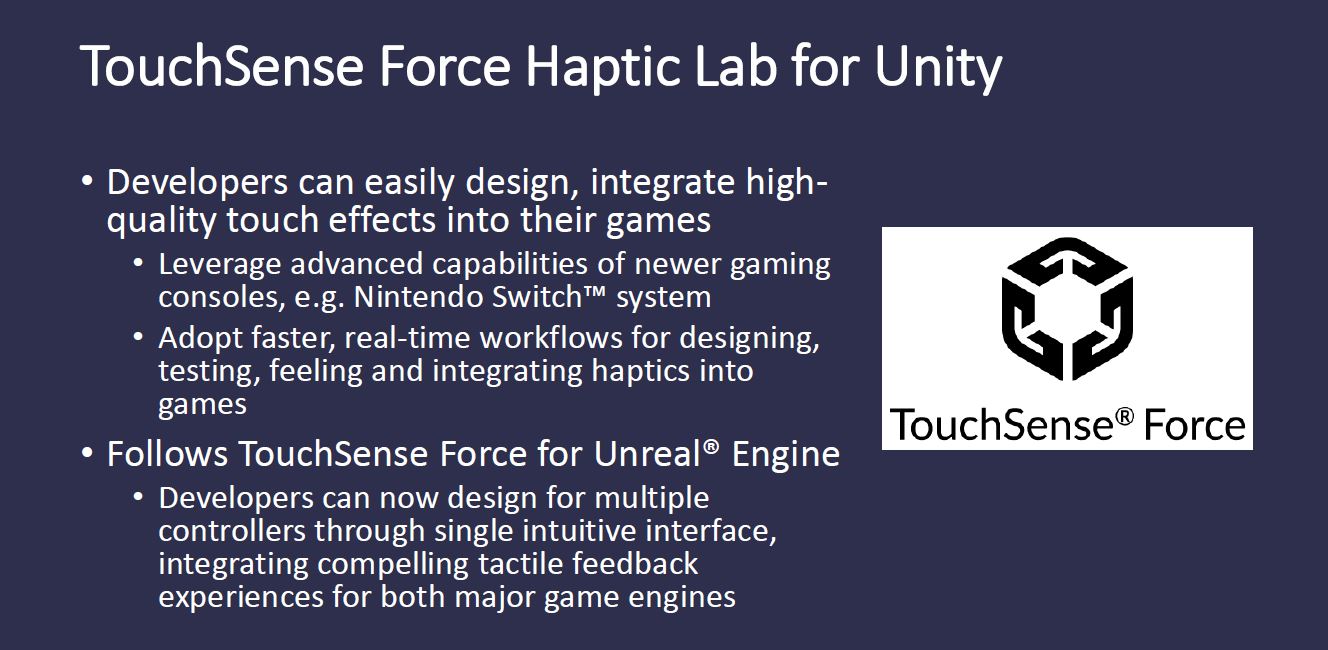

Immersion released TouchSense Force earlier this year, but it was available only to developers who use Epic’s Unreal Engine. Now, Unity developers have access to a visual editor for TouchSense Force technology called Haptic Lab.

“Since more games are made with Unity than with any other game technology, expanding our TouchSense Force solution to be compatible with Unity adds a tremendous opportunity for those developing games on this industry-leading platform,” said Chris Ullrich, Vice President of User Experience and Analytics at Immersion. “Developers can make their games much more engaging through the power of touch, including those for the wildly popular Nintendo Switch system.”

TouchSense Force for Unreal supports a handful of controllers, including the HTC Vive wands and the Nintendo Switch Joy-Cons. However, Unity developers are limited to Nintendo Switch support, for now. Matt Tullis, Immersion’s Director of Gaming & VR, said that other platforms would be supported in the future, but the initial release of the Unity editor plugin is for Nintendo developers.

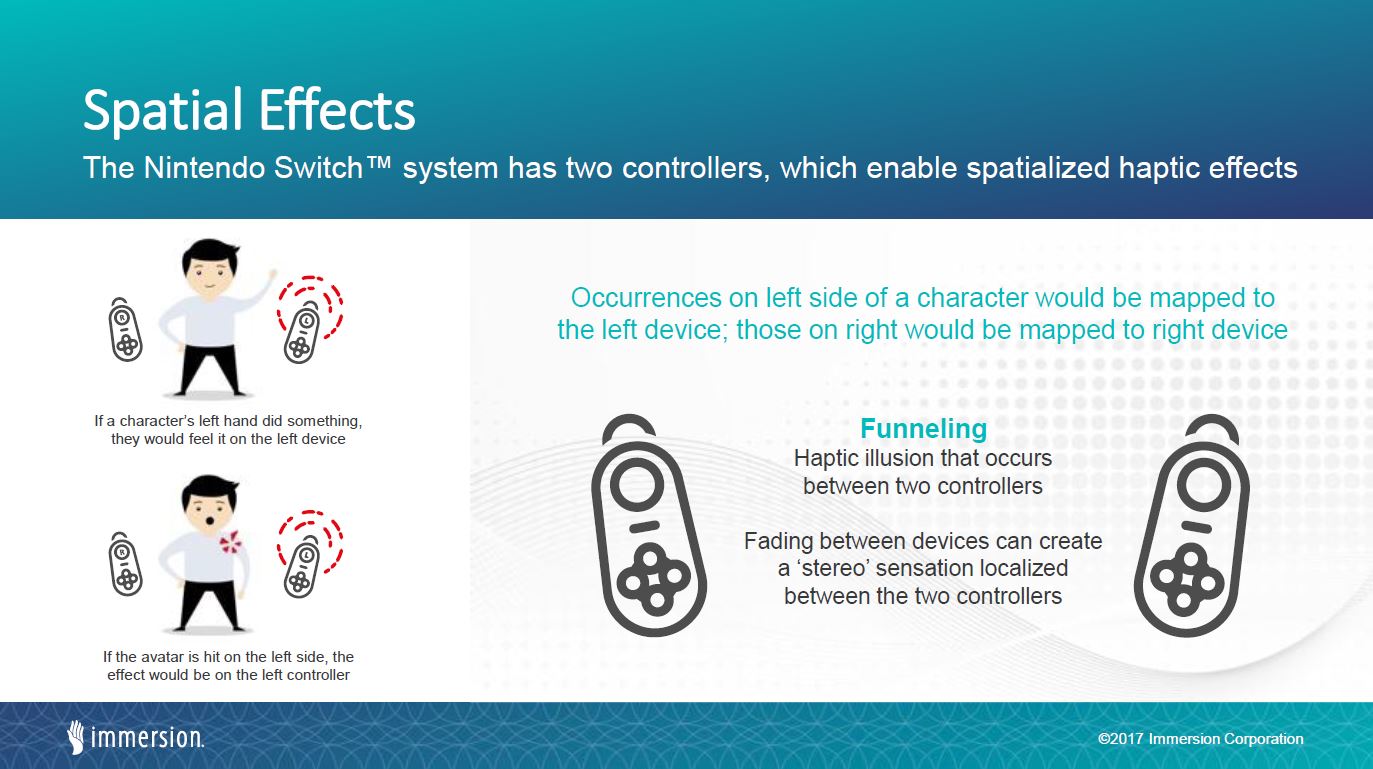

“One of the interesting things about the Nintendo Switch is that you have a controller in your left hand and a controller in your right hand now,” said Tullis. “From a haptics perspective that adds a lot of capabilities. You can have someone shoot a gun in their right hand and feel it there, or shoot from your left hand and feel it there. When you bump something on the right, you can feel it, versus when you bump something from the left. But also, you can do things by using the controllers in concert to create effects so that people feel as if something were between the controllers.”

Immersion is offering live demos of TouchSense Force for Unity at the Unity Unite conference this week, where the company is showing off some of the effects possible with TouchSense Force. Immersion created a spaceship demo for the Nintendo Switch that demonstrates how developers can use haptic feedback to make in-game actions feel convincing.


Immersion’s spaceship demo includes a boosting function, which simulates the feeling of g-forces from accelerating. Immersion also created a “space brake” function, which feels like the pulse from an ABS braking system in a car. The demo also features a comparison between a simple and a complex barrel roll action. The simple action features a left-to-right, right-to-left panning motion to help simulate the feeling of a rolling ship. The complex roll profile simulates rotational g-forces to inform the player of the active motion in a more immersive fashion.
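To make the simple roll's panning idea concrete, here is a minimal sketch of how a left-to-right, right-to-left sweep across two controllers could be computed. It is not Immersion's implementation or the TouchSense Force API, neither of which is described here. The function names `pan_gains` and `roll_sweep` are hypothetical, and the equal-power pan curve is borrowed from audio mixing as an assumption.

```python
import math


def pan_gains(position: float) -> tuple[float, float]:
    """Return (left, right) gains for a pan position.

    position runs from -1.0 (fully left controller) to 1.0 (fully right).
    Uses an equal-power curve (an assumption taken from audio panning),
    so the combined energy stays constant as the effect moves.
    """
    position = max(-1.0, min(1.0, position))
    angle = (position + 1.0) * math.pi / 4.0  # maps [-1, 1] to [0, pi/2]
    return math.cos(angle), math.sin(angle)


def roll_sweep(steps: int, intensity: float = 1.0) -> list[tuple[float, float]]:
    """Build per-frame (left, right) amplitudes for one simple roll.

    The sweep pans left to right in `steps` frames, then back right to left,
    without repeating the turnaround frame.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    forward = [-1.0 + 2.0 * i / (steps - 1) for i in range(steps)]
    positions = forward + forward[::-1][1:]
    frames = []
    for p in positions:
        left, right = pan_gains(p)
        frames.append((intensity * left, intensity * right))
    return frames


if __name__ == "__main__":
    # Hypothetical usage: print amplitudes that a game loop would send
    # to the left and right controller actuators each frame.
    for left, right in roll_sweep(5):
        print(f"left={left:.2f} right={right:.2f}")
```

In a real game the amplitudes would be handed to whatever haptics interface the platform provides. The sketch only shows how a single effect can be made to travel between two hands.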

Immersion is offering the Haptic Lab visual editor to a handful of select developers. Interested developers can sign up for access at Immersion’s website.

Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.