Intel Demos 8-Core, 528-Thread PIUMA Chip with 1 TB/s Silicon Photonics

7nm chip has 66 threads per core and pushes 1 TB/s of optical I/O.

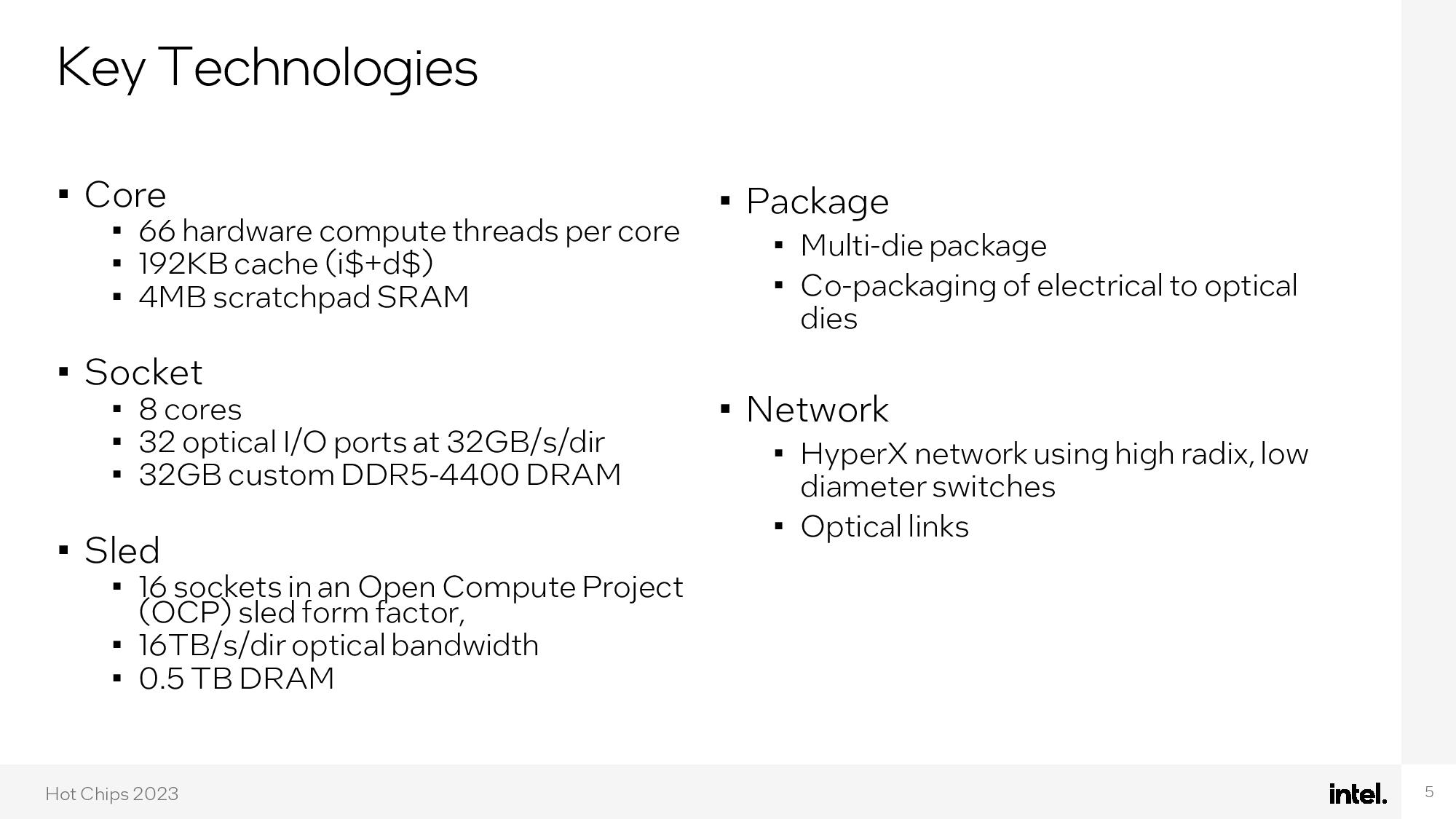

Intel unveiled its first direct mesh-to-mesh photonic fabric at the Hot Chips 2023 conference, highlighting its progress toward optical chip-to-chip interconnects, a technology also championed by the likes of Nvidia and Ayar Labs. However, the eight-core, 528-thread chip that Intel used for the demonstration stole the spotlight thanks to its unusual architecture, which pairs 66 threads per core with up to 1TB/s of optical data throughput. The chip consumes only 75W, with ~60% of that power going to the optical interconnects, and the design could eventually enable systems with two million cores to be directly connected with under 400ns latency.

Intel's PIUMA (Programmable Integrated Unified Memory Architecture) chip is part of the DARPA HIVE program that focuses on improving performance in petabyte-scale graph analytics work to unlock a 1000X improvement in performance-per-watt in hyper-sparse workloads. Ayar Labs worked with Intel on the optical subsystem.

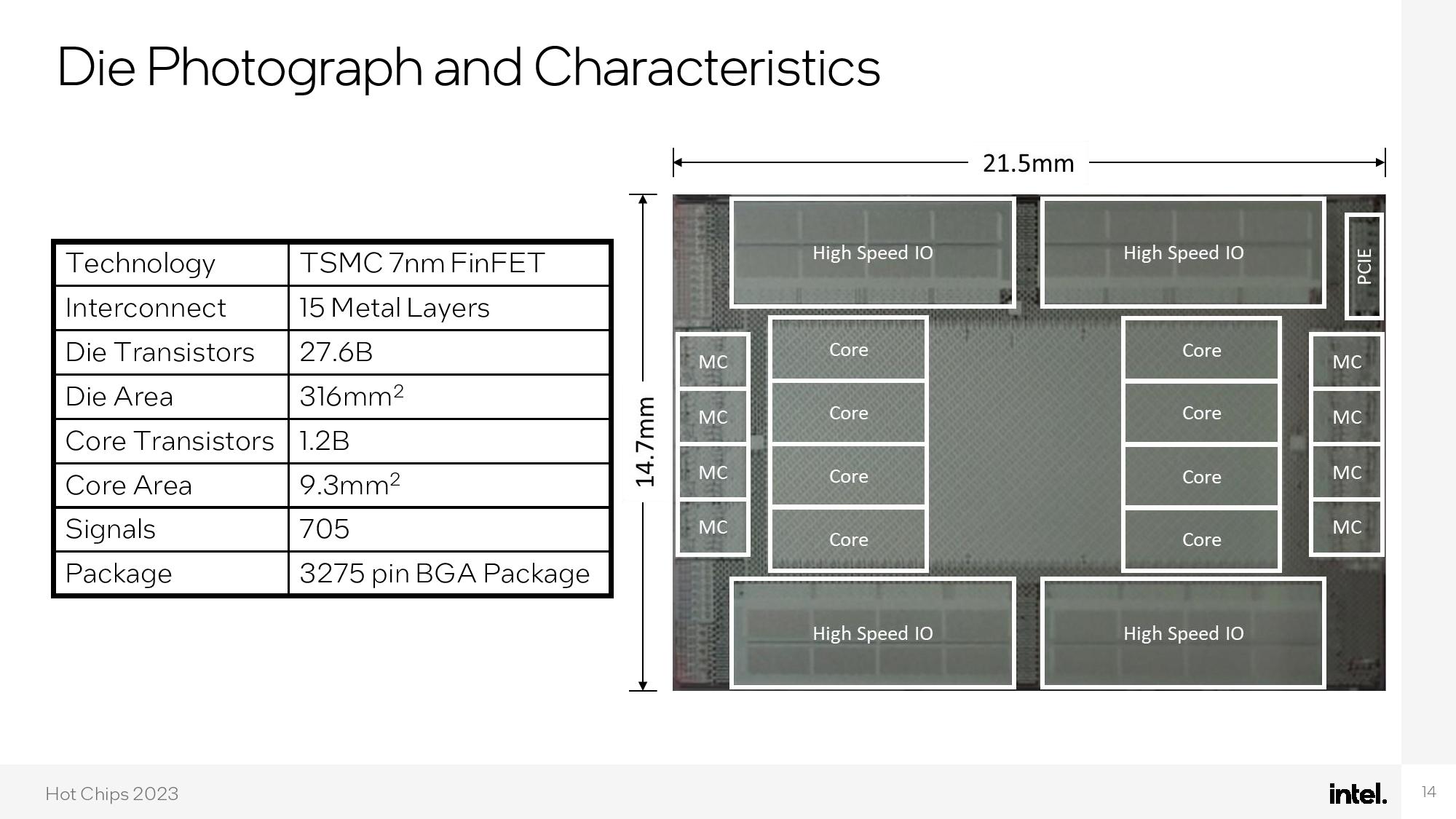

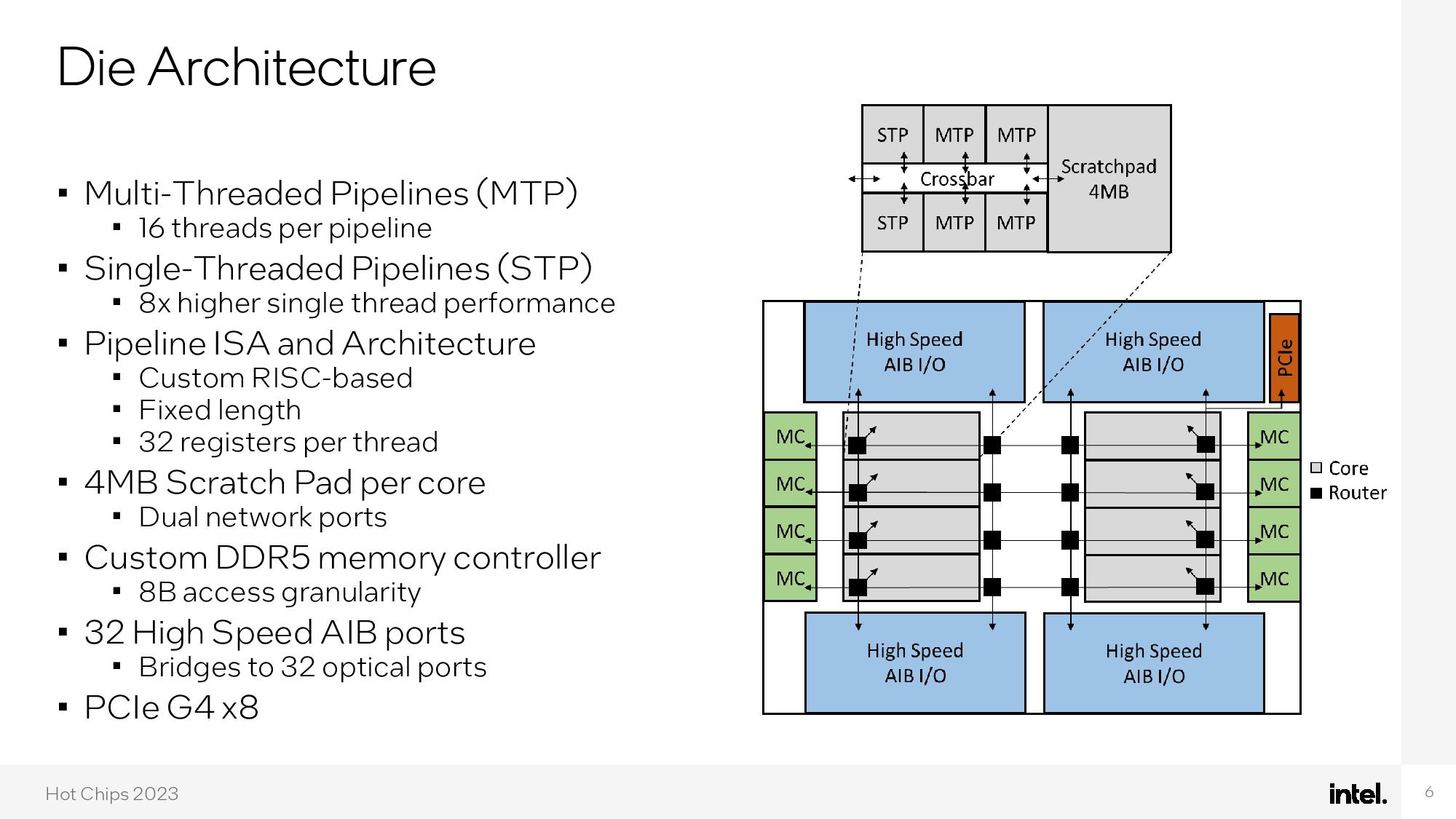

Surprisingly for an x86-centric company like Intel, the test chip uses a custom RISC architecture streamlined for graph analytics workloads, delivering an 8X improvement in single-threaded performance. The chip is also fabbed on TSMC's 7nm process rather than on one of Intel's own nodes.

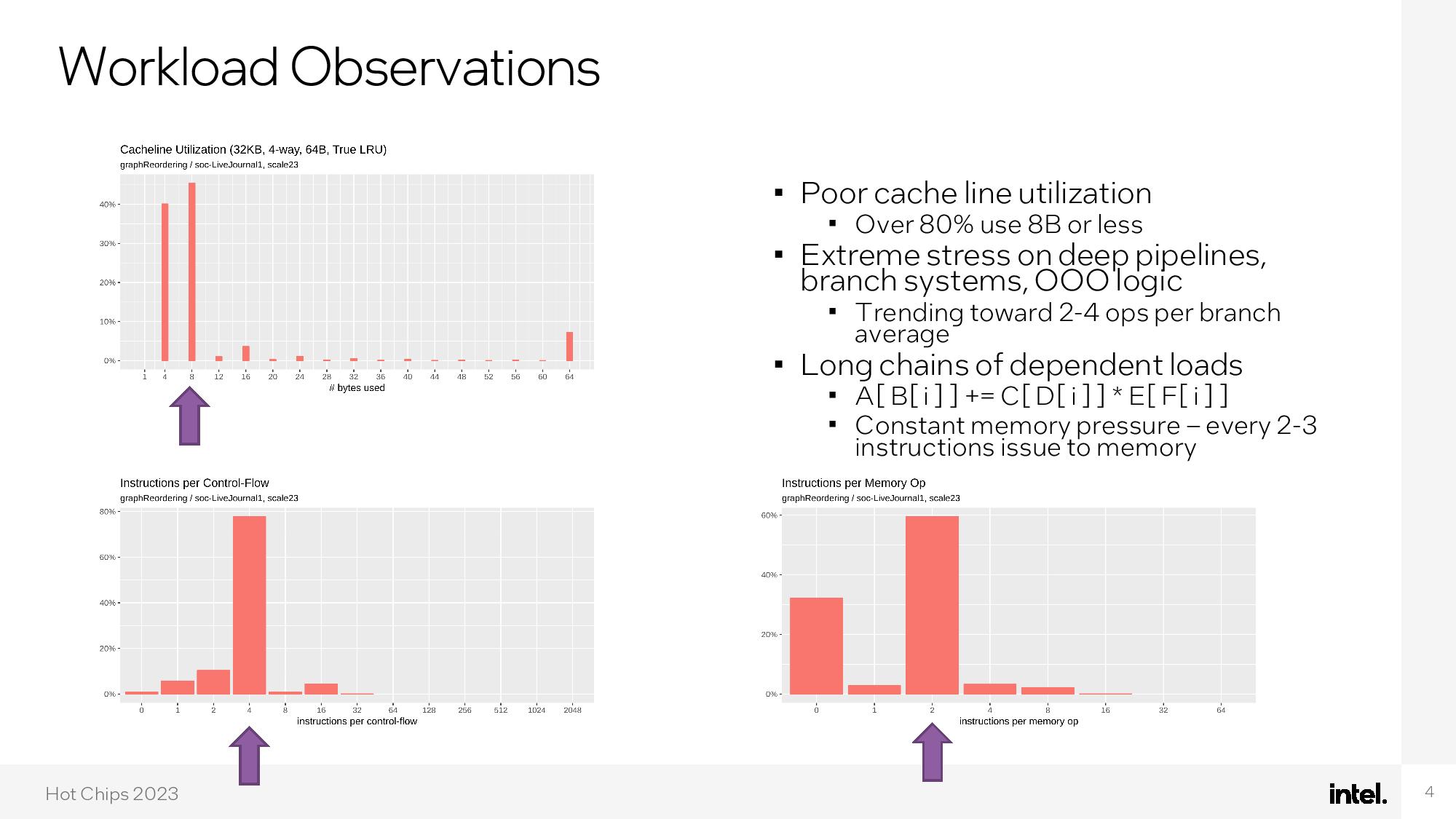

After characterizing the target workloads, Intel concluded that it needed an architecture built to cope with the extreme stress these workloads place on the memory subsystem, deep pipelines, branch predictors, and out-of-order logic.

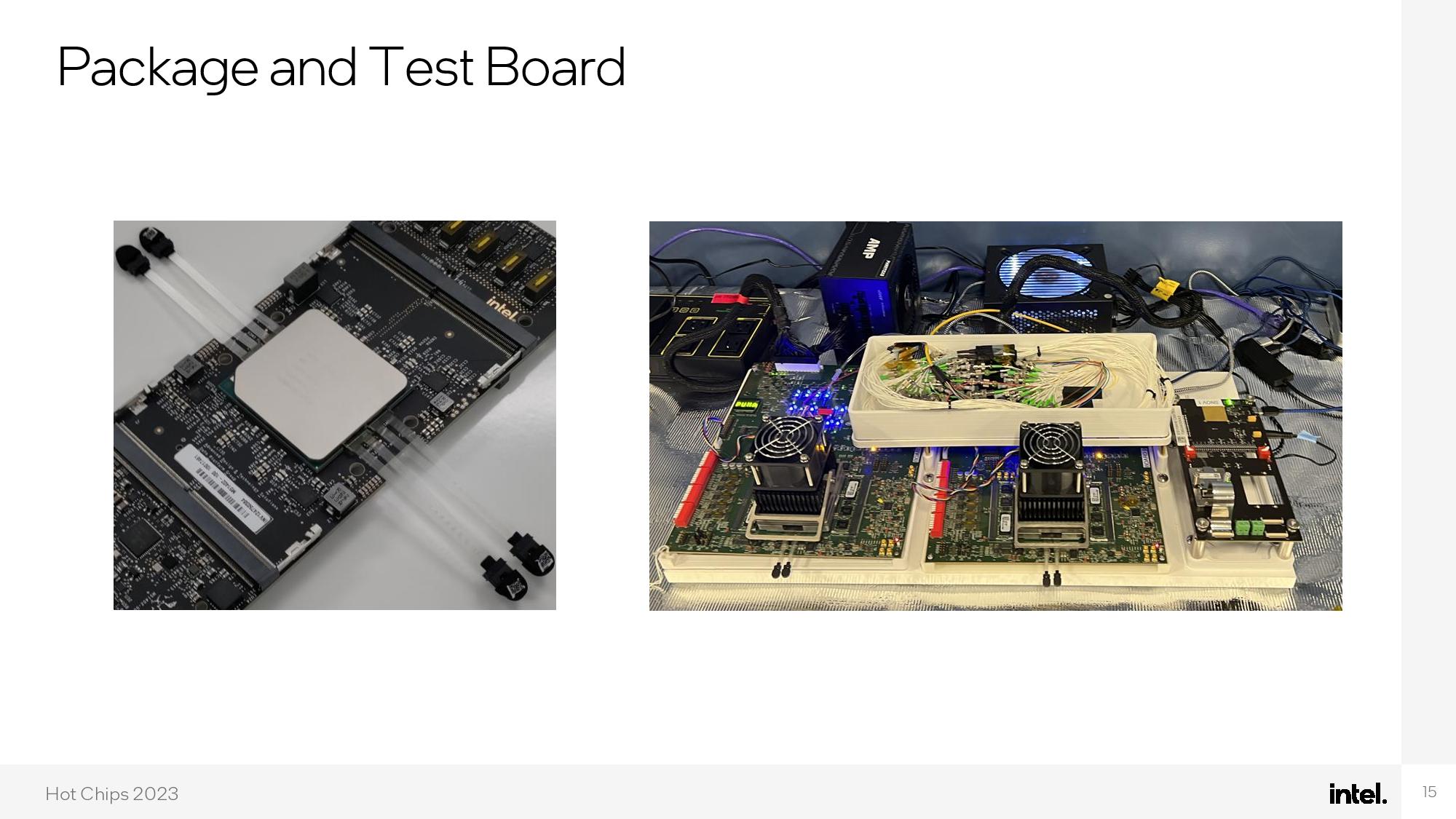

Intel's custom core employs extreme parallelism to the tune of 66 hardware threads for each of the eight cores, large L1 instruction and data caches, and 4MB of scratchpad SRAM per core. The eight-core chip features 32 optical I/O ports that operate at 32 GB/s/dir apiece, totaling 1TB/s of bandwidth. The chips drop into an eight-socket OCP server sled, offering up to 16 TB/s of total optical throughput for the system, and each chip is fed by 32GB of custom DDR5-4000 DRAM.
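
The headline figures follow from simple multiplication. The short Python sketch below recomputes them from the numbers quoted above. The constant names are illustrative, and treating the sled's 16 TB/s as the sum of both directions is an assumption made here to reconcile the per-port and per-sled figures, not something the presentation is confirmed to state.

```python
# Figures quoted in the article.
CORES_PER_CHIP = 8
THREADS_PER_CORE = 66
OPTICAL_PORTS = 32
GB_PER_S_PER_PORT_PER_DIR = 32
SOCKETS_PER_SLED = 8

# 8 cores x 66 threads = 528 hardware threads per chip.
threads_per_chip = CORES_PER_CHIP * THREADS_PER_CORE

# 32 ports x 32 GB/s = 1024 GB/s (about 1 TB/s) per direction per chip.
chip_gb_s_per_dir = OPTICAL_PORTS * GB_PER_S_PER_PORT_PER_DIR

# Eight sockets give 8192 GB/s per direction per sled.
sled_gb_s_per_dir = SOCKETS_PER_SLED * chip_gb_s_per_dir

# ASSUMPTION: the sled-level total counts both directions.
sled_gb_s_both_dirs = 2 * sled_gb_s_per_dir

print(f"threads per chip:        {threads_per_chip}")
print(f"chip optical I/O (GB/s): {chip_gb_s_per_dir} per direction")
print(f"sled optical I/O (GB/s): {sled_gb_s_both_dirs} both directions")
```

Under that assumption the sled total comes to 16,384 GB/s, which matches the roughly 16 TB/s quoted for the system.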

Intel fabbed the chip on TSMC's 7nm process with 27.6 billion transistors spanning a 316mm^2 die. The eight cores, which consume 1.2 billion transistors, run down the center of the die, flanked by eight custom memory controllers with an 8-byte access granularity. Communication routers populate the 'empty' center of the chip.
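
For a rough sense of scale, the die figures can be turned into an average transistor density and a core share. This is a back-of-the-envelope sketch in Python: it assumes the 1.2 billion transistors cover all eight cores combined (the wording above is ambiguous on that point), and the variable names are illustrative.

```python
# Figures quoted in the article.
TOTAL_TRANSISTORS = 27.6e9
DIE_AREA_MM2 = 316
# ASSUMPTION: 1.2 billion is the combined count for all eight cores.
CORE_TRANSISTORS = 1.2e9

# Average density across the whole die, in millions of transistors per mm^2.
density_mtr_per_mm2 = TOTAL_TRANSISTORS / DIE_AREA_MM2 / 1e6

# Fraction of the transistor budget spent on the cores themselves.
core_share_pct = CORE_TRANSISTORS / TOTAL_TRANSISTORS * 100

print(f"average density: {density_mtr_per_mm2:.1f} MTr/mm^2")
print(f"core share:      {core_share_pct:.1f}% of transistors")
```

If that reading is right, the cores account for only a few percent of the transistor budget, with the rest going to SRAM, memory controllers, routers, and I/O.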

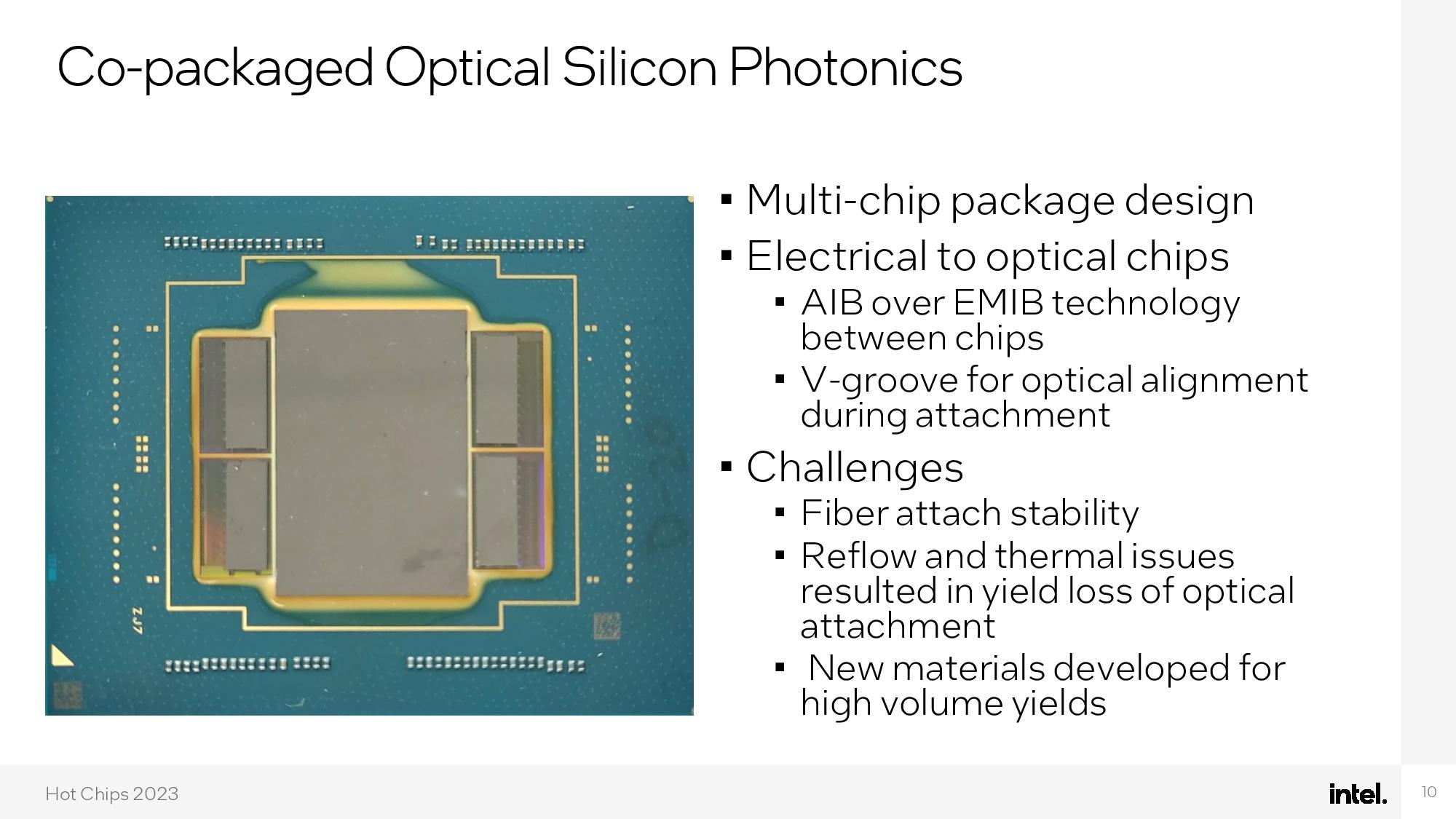

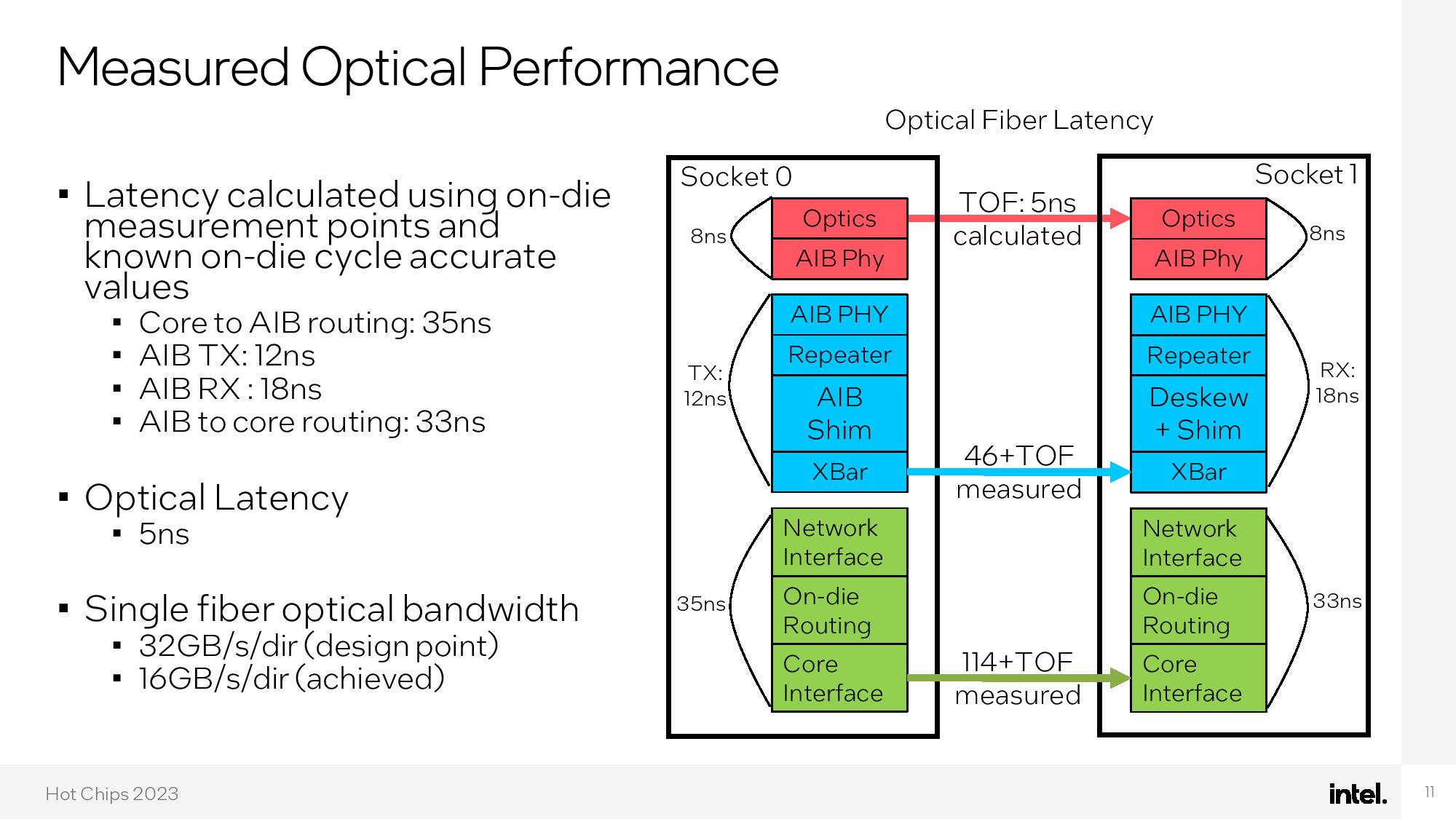

The chip also features four high-speed eight-channel optical I/O chiplets, two each at the top and bottom of the die, that bridge the internal electrical signals to external optical interconnects. These units are connected via Intel's EMIB packaging and use the AIB protocol. The chip also has a PCIe 4.0 x8 connection to communicate with the host system.

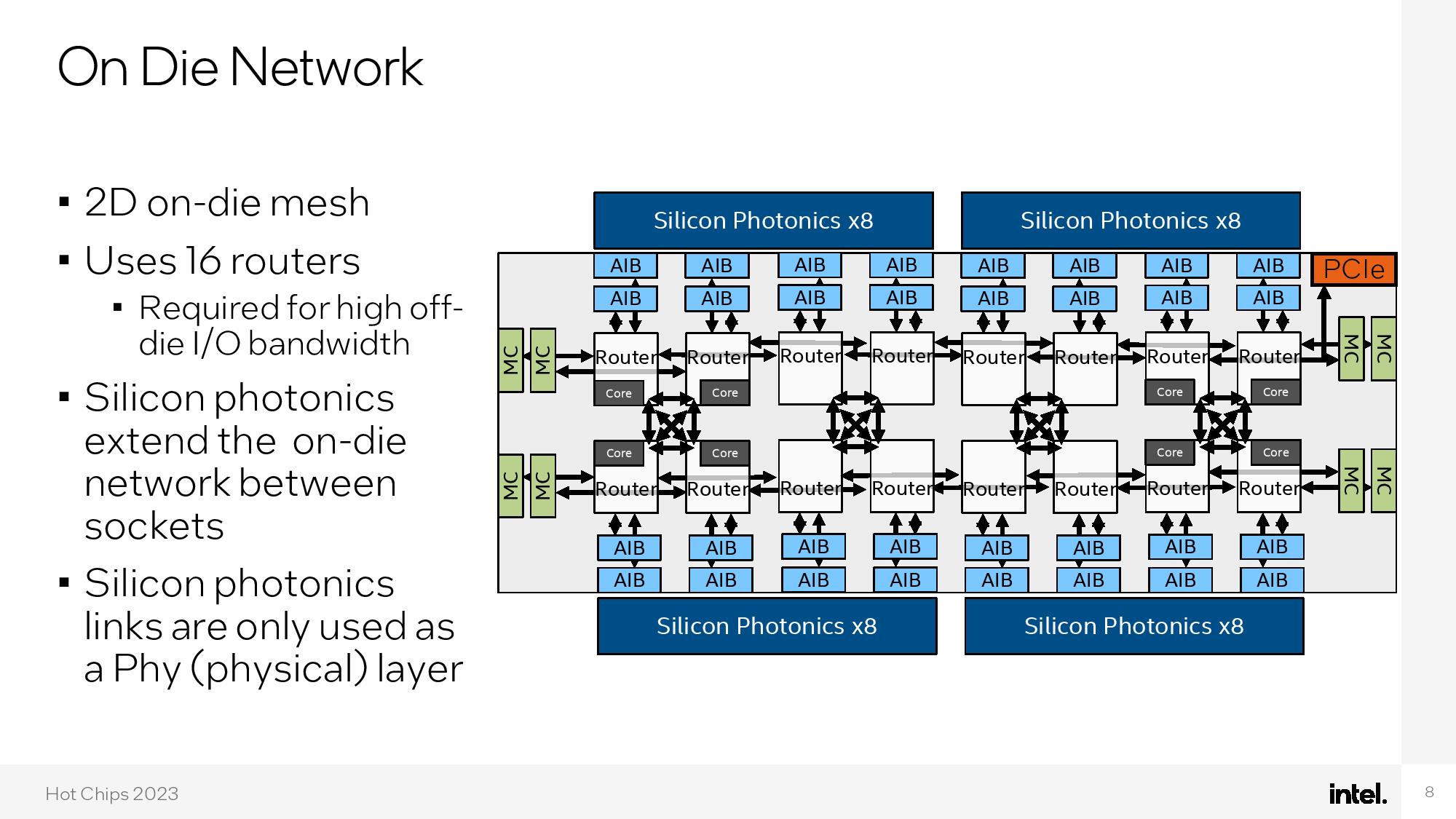

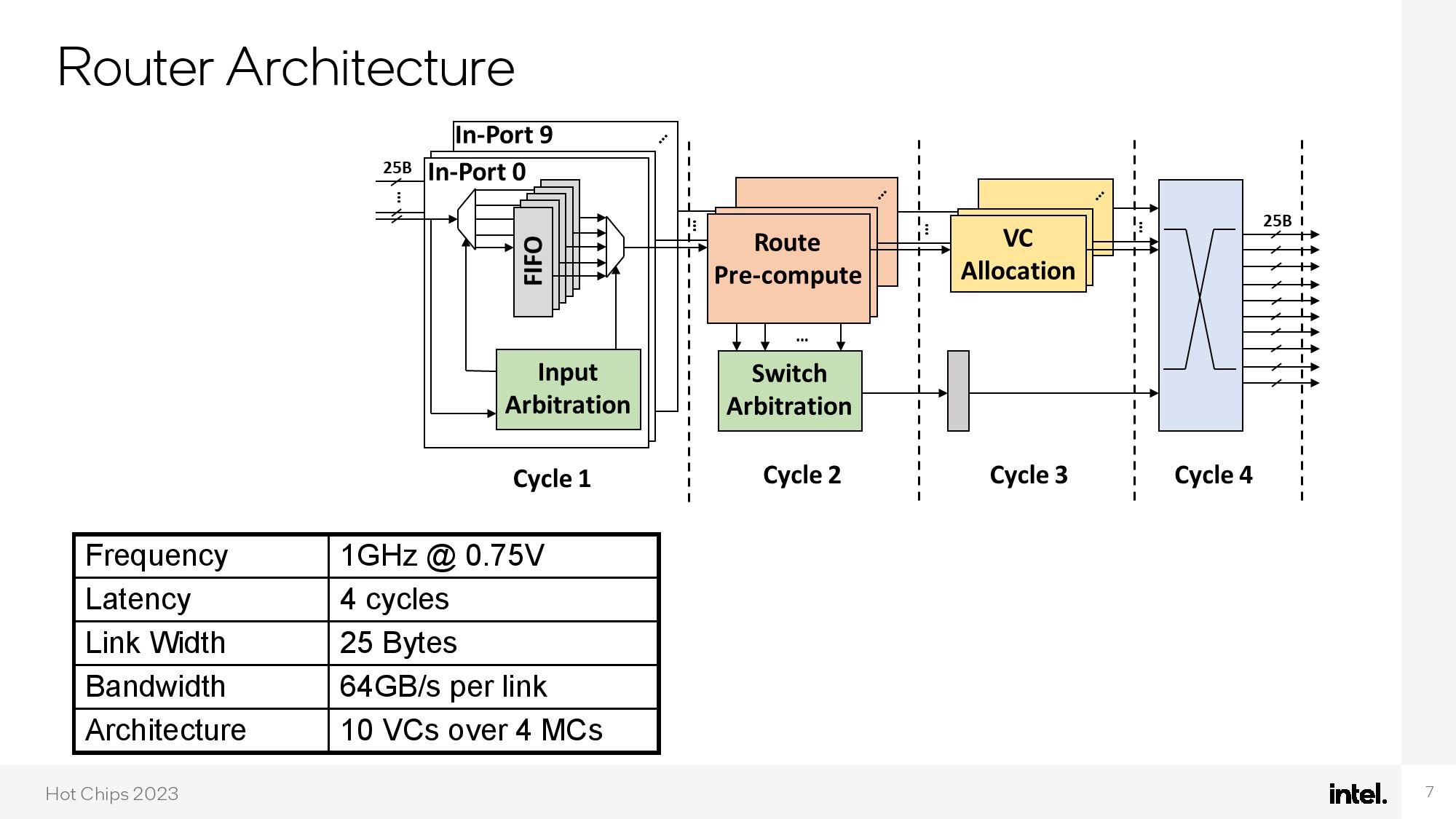

Moving the incredible amount of data generated by 528 threads around the die requires an optimized interconnect, so Intel designed a 2D on-die mesh with 16 routers to shuffle data between the cores, memory controllers, and silicon photonics interconnects (eight routers are integrated into the CPU cores, while six routers are dedicated entirely to just data movement).

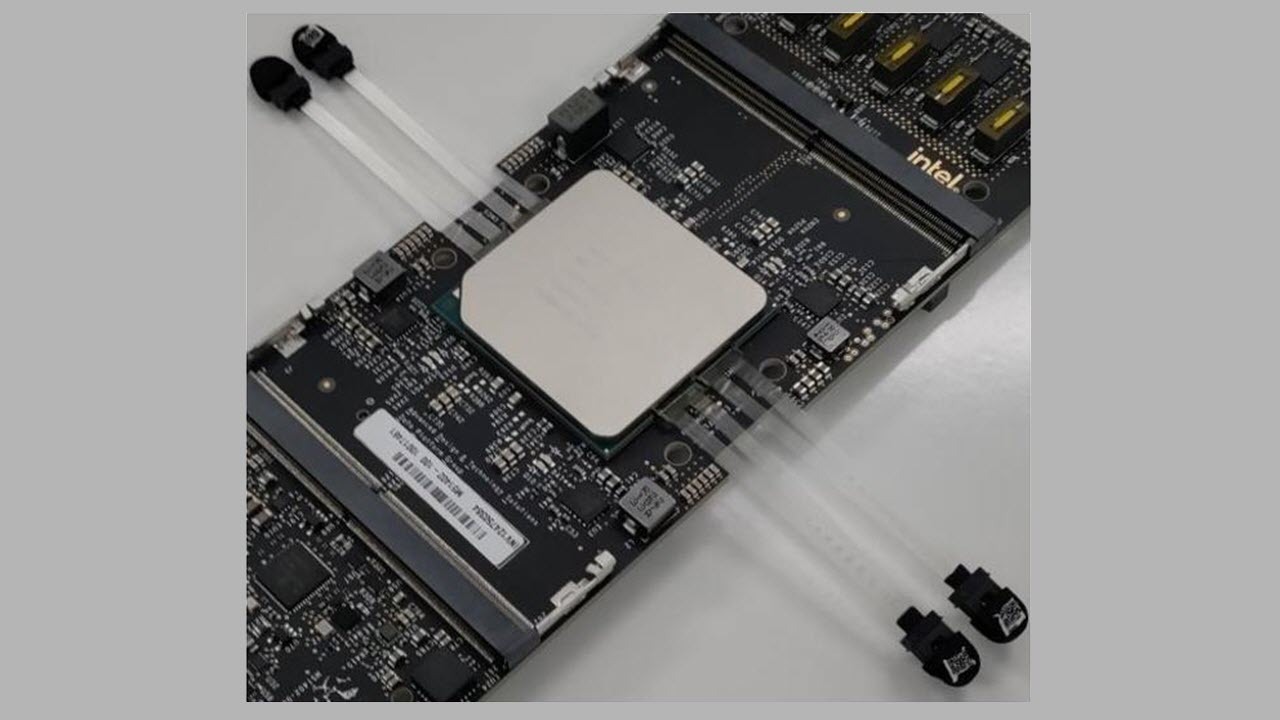

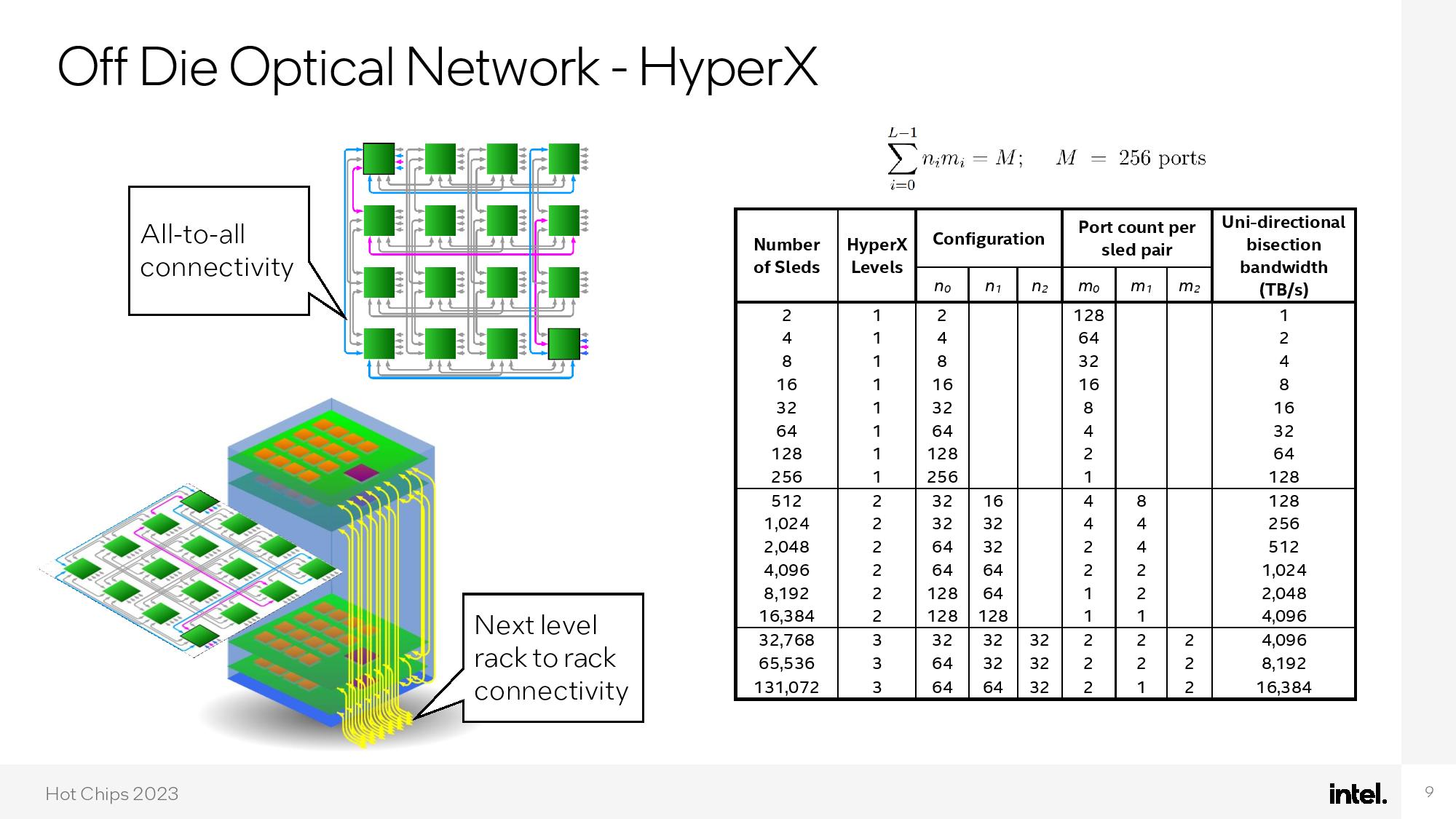

As the images above show, the photonics connectors are integrated into the chip package and hang off the sides of the chip for external links to other chips. The chip connects to an external 'HyperX' optical network that provides all-to-all connections for the individual processing cores. This network allows up to two million cores to be directly connected all-to-all with under 400ns latency.
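
The article does not give the dimensions of Intel's HyperX network, so the following Python sketch only illustrates the general idea. It assumes the usual description of a HyperX topology, in which nodes sit on a multi-dimensional grid and each node links directly to every other node that differs from it in exactly one coordinate. The `SHAPE` value and the function names are hypothetical.

```python
from itertools import product


def hyperx_neighbors(node, shape):
    """Return every node that differs from `node` in exactly one coordinate."""
    neighbors = []
    for dim, size in enumerate(shape):
        for value in range(size):
            if value != node[dim]:
                neighbors.append(node[:dim] + (value,) + node[dim + 1:])
    return neighbors


def hyperx_hops(a, b):
    """Minimum hop count: one hop per coordinate in which the nodes differ."""
    return sum(x != y for x, y in zip(a, b))


# HYPOTHETICAL shape, chosen only to keep the example small.
SHAPE = (4, 4, 4)
nodes = list(product(*(range(size) for size in SHAPE)))

links_per_node = len(hyperx_neighbors(nodes[0], SHAPE))
worst_case_hops = max(hyperx_hops(nodes[0], n) for n in nodes)

# Chip count implied by the article's figures: two million cores, eight per chip.
chips_for_two_million_cores = 2_000_000 // 8

print(f"nodes: {len(nodes)}, links per node: {links_per_node}")
print(f"worst-case hops: {worst_case_hops}")
print(f"chips for two million cores: {chips_for_two_million_cores}")
```

In this toy layout the worst-case hop count equals the number of dimensions, not the number of nodes, which is the property that keeps latency low as such a network grows.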

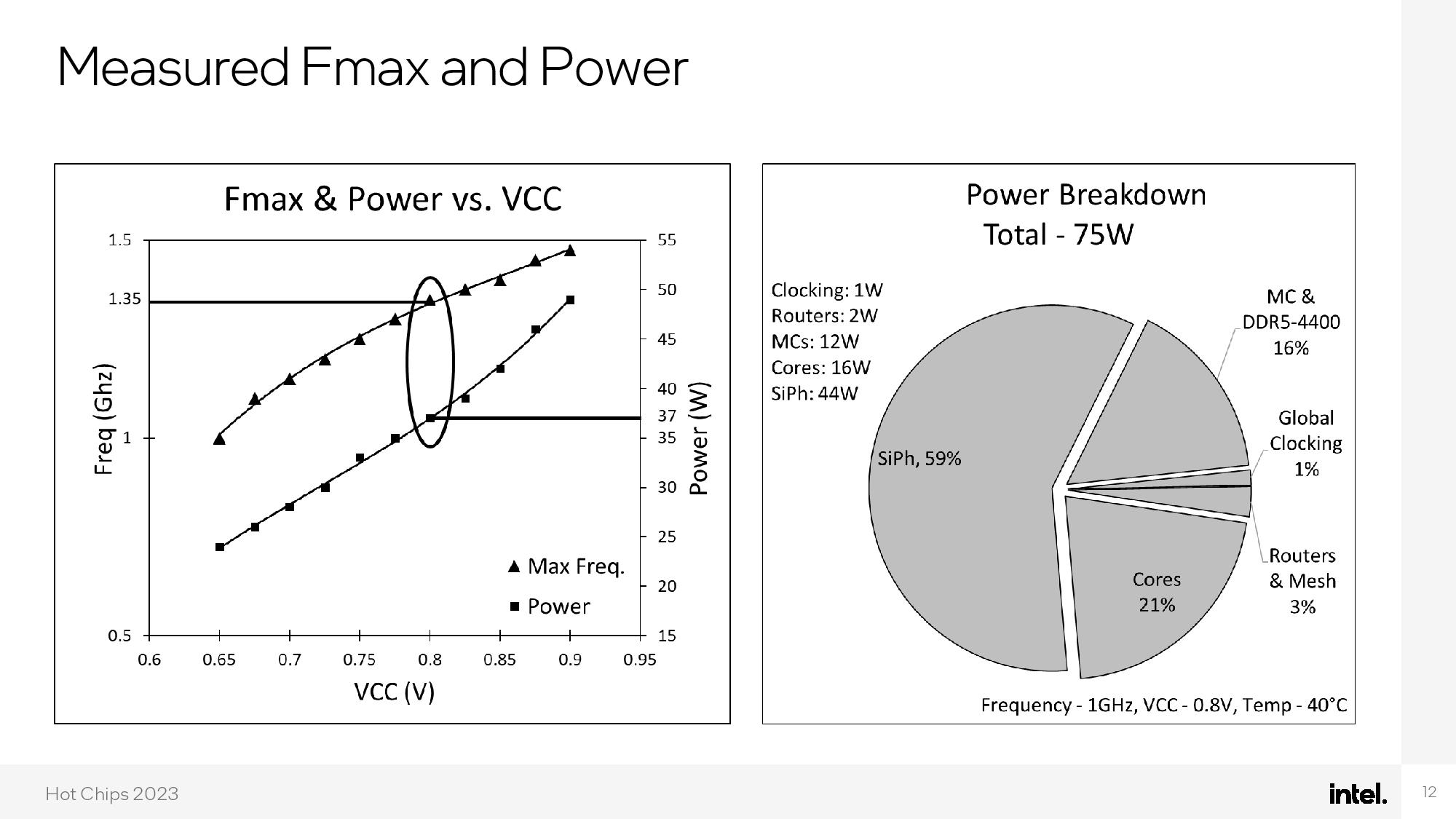

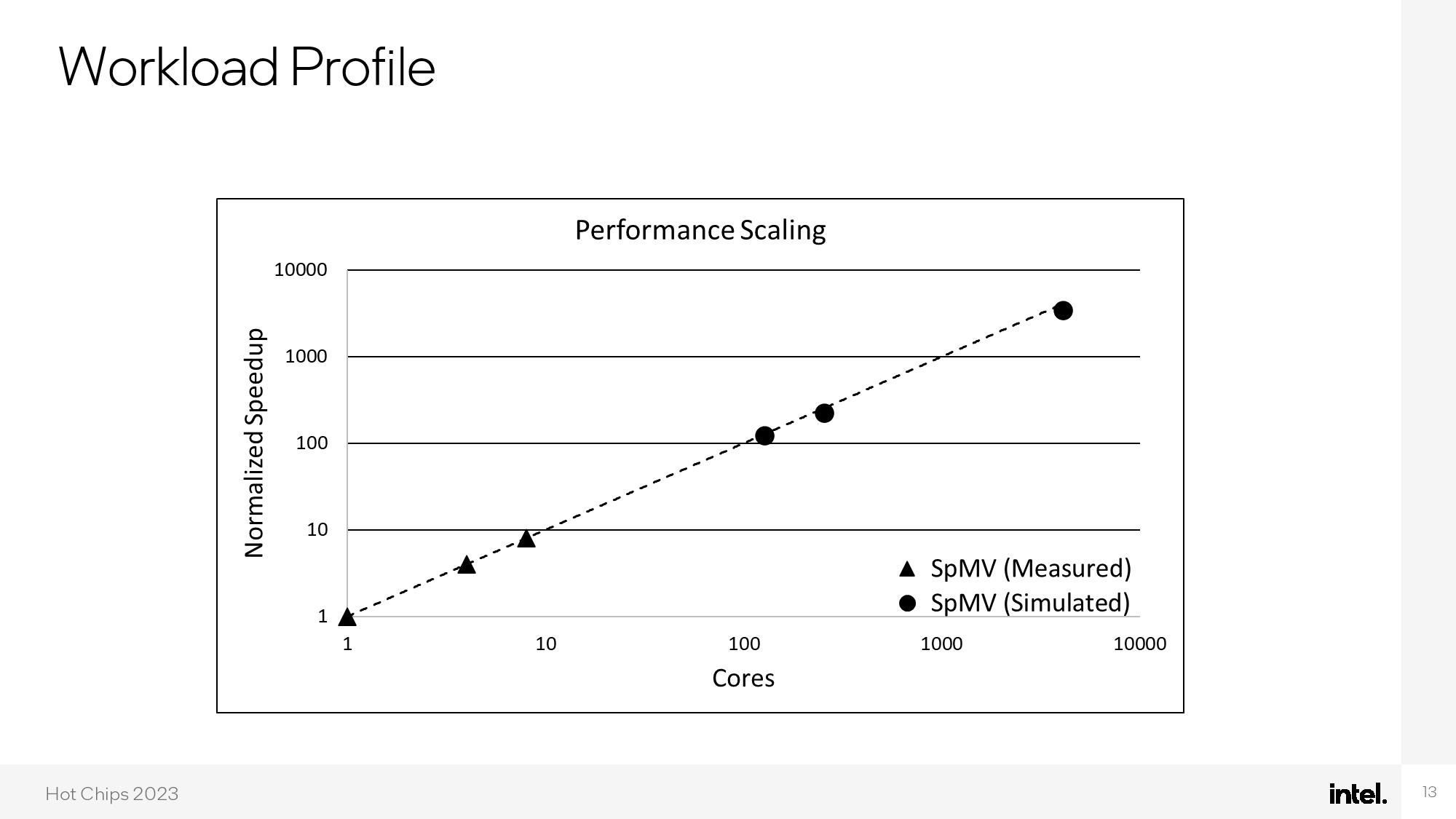

The end result is impressive: the chip consumes a mere 75W, with 59% of that budget dedicated to silicon photonics and 21% dedicated to the cores. Intel claims the enhanced performance of the optical network allows for nearly perfect linear performance scaling from one to 1000 cores.
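
Converted to watts, that split looks as follows. This is a minimal Python sketch using only the percentages quoted above; the "other" bucket is simply the remainder, which the article does not itemize, and the names are illustrative.

```python
# Figures quoted in the article.
CHIP_POWER_W = 75
PHOTONICS_PCT = 59
CORES_PCT = 21

# Everything not attributed to photonics or cores (not itemized in the article).
other_pct = 100 - PHOTONICS_PCT - CORES_PCT

photonics_w = CHIP_POWER_W * PHOTONICS_PCT / 100
cores_w = CHIP_POWER_W * CORES_PCT / 100
other_w = CHIP_POWER_W * other_pct / 100

print(f"silicon photonics: {photonics_w:.2f} W")
print(f"cores:             {cores_w:.2f} W")
print(f"everything else:   {other_w:.2f} W")
```

That works out to about 44W for the optical I/O and under 16W for all eight cores combined.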

The promise of optical interconnects has fueled a growing body of research as the industry looks to future data transport methods that offer superior bandwidth, latency, and power consumption characteristics compared to traditional chip-to-chip communication techniques. While mainstream deployments of optical chip interconnects remain on the horizon, specialized implementations, like those championed by Intel, Nvidia, and Ayar Labs, are nearing readiness for large-scale deployment.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

Kamen Rider Blade

What I'm more surprised by is that Intel didn't do this on their own internal fabs.

Why did they need to use TSMC for this?

Also, a "Custom RISC" architecture vs a derivative off of x86, hmmm.

InvalidError

> Kamen Rider Blade said: Also, a "Custom RISC" architecture vs a derivative off of x86, hmmm.

If you are going to build an application-specific (graph analysis) CPU with 66 hardware threads per core, you likely don't want a complex instruction decoder bottlenecking the whole thing and adding a handful of cycles latency penalty to every branch prediction miss and every hardware thread switch.

Kind of a similar story to CPU vs GPU cores.

66 threads is an odd number to settle on. Must have barely missed mandatory performance targets with 64.

NeoMorpheus

> Kamen Rider Blade said: Also, a "Custom RISC" architecture vs a derivative off of x86, hmmm.

And you can bet that the number one "feature" would be the IP not accessible to AMD, hence they finally found a way to kill their x86 license agreement.

Itanium lives once more!

Eximo

Intel's best process nodes are busy churning out Intel x86 chips for the consumer computer market or doing development directly related to it. Makes no sense to interrupt their production to make a demo chip. They probably also have some spare TSMC fab time allotted.

dehjomz

So what OS/software can this custom chip run? Also, is Intel signaling the end of x86 and the dawn of some new RISC-based architecture?

Kamen Rider Blade

> InvalidError said: If you are going to build an application-specific (graph analysis) CPU with 66 hardware threads per core, you likely don't want a complex instruction decoder bottlenecking the whole thing and adding a handful of cycles latency penalty to every branch prediction miss and every hardware thread switch.
>
> Kind of a similar story to CPU vs GPU cores.
>
> 66 threads is an odd number to settle on. Must have barely missed mandatory performance targets with 64.

They couldn't have killed 2x threads per core and added in 2x more cores for 10x core?

Kamen Rider Blade

> NeoMorpheus said: And you can bet that the number one "feature" would be the IP not accessible to AMD, hence they finally found a way to kill their x86 license agreement.
>
> Itanium lives once more!

May the "Itanium 2" sink like the original "Itanic!"

JamesJones44

> dehjomz said: So what OS/software can this custom chip run? Also, is Intel signaling the end of x86 and the dawn of some new RISC-based architecture?

There are plenty of Linux variants out there that can run on RISC-V and other RISC variants. My guess would be a fork of one of those variants with updated kernels to handle the custom instructions. I also wouldn't be surprised if Intel's "custom"-RISC instructions were forked from another source.

InvalidError

> dehjomz said: So what OS/software can this custom chip run? Also, is Intel signaling the end of x86 and the dawn of some new RISC-based architecture?

Since it is basically a tech-demo for graph analytics and on-package photonics, it may very well be running some sort of bare-bones micro-kernel just to coordinate compute and IO scheduling across nodes and with the host system/OS.

The way I read it, these things are fundamentally meshed memory controllers with distributed compute capabilities, not stand-alone devices.

NeoMorpheus

> Kamen Rider Blade said: May the "Itanium 2" sink like the original "Itanic!"

Fingers crossed that it takes Intel with it! :-)