Intel's GPU-Less CPUs Hit Their Stride

It's usually hard to convince people to buy an inferior product that costs the same as its superior counterpart. But when the inferior option is the only one people can easily buy, it suddenly becomes a lot easier, as Pepsi discovered when restaurants started to offer its sodas instead of Coca-Cola's. Intel learned that same lesson with its F-series processors, which have reportedly become increasingly popular among system builders since their debut.

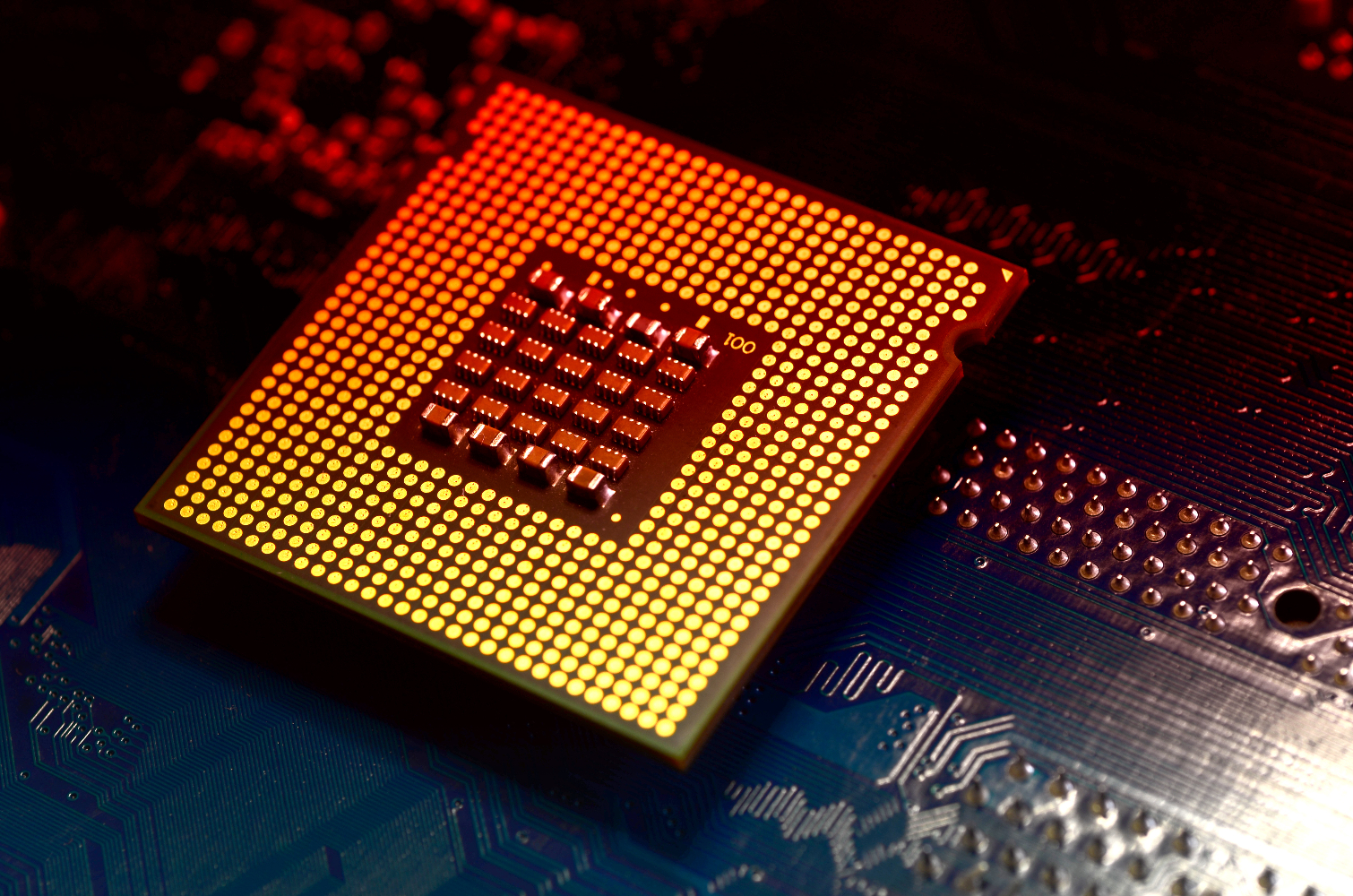

Intel announced the F-series line in January as a way to mitigate the effects of its processor shortage. The CPUs were nearly identical to their predecessors: they were based on the same Coffee Lake microarchitecture; built using the same 14nm process; and boasted the same core counts, TDPs, and frequencies. Even the prices were the same. The only difference was that Intel disabled the integrated GPUs on its F-series models.

As we said at the start, that seemed like a hard sell, but it was actually pretty clever on Intel's part. The company was able to increase supplies during its CPU shortage without having to compromise on price, introduce completely new products, or rush processors built using the 10nm process to market. At least people who didn't need integrated graphics (which includes many enthusiasts) could upgrade their systems despite Intel's supply chain woes.

That gambit paid off. CRN reported on Friday that, according to "a top Intel channel executive," the F-series line "now accounts for more than 10 percent of total desktop CPUs sold to system builders through the company's U.S. authorized distributors." Much of the line's success likely stemmed from its expansion to Pentium processors and its availability during the CPU shortage. But it was also said to have resulted from incentives from Intel.

CRN reported that "the company's partner incentives for the F-series processors include points promotions for Intel Technology Provider partners, software and game bundles, graphics card bundles and other incentives offered through Intel's authorized distributors." Graphics card bundles were said to be particularly appealing to enthusiasts. Intel benefited, too, because the discount was taken out of the price of the graphics card instead of the CPU.

Intel U.S. channel chief Jason Kimrey told CRN that the F-series line wouldn't disappear after the CPU shortage eases up, which is expected to happen sometime around the third quarter. "We are committed to this strategy moving forward," he said. "This particular product has a long life cycle ahead of it, and it's one that we're committed to because we actually see the benefit of doing it, and our customers have responded well to it."

Not that it would have mattered if customers didn't respond well, of course, because many would rather buy an Intel product they don't like than use an AMD processor. Such are the benefits of having an effective monopoly. Intel could make objectively inferior processors, sell them for the same price as before, and then convince its partners to bear the costs of any discounts simply because of its hold on the market.


Nathaniel Mott is a freelance news and features writer for Tom's Hardware US, covering breaking news, security, and the silliest aspects of the tech industry.


nneo:

Imagine when your gpu died, then u need to wait new gpu arrives to use your pc :rolleyes:

TerryLaze:

nneo said: Imagine when your gpu died, then u need to wait new gpu arrives to use your pc :rolleyes:

Imagine you saved $50 and have a drawer full of old cards.

Gam3r01:

nneo said: Imagine when your gpu died, then u need to wait new gpu arrives to use your pc :rolleyes:

Most people dont plan on their graphics cards dying on them.

By this logic, why dont you blame any other components that prevent a system from running without them?