GPU frequency overclocking world record broken using integrated Intel graphics — Arrow Lake outpaces discrete GPUs in clock speed competition

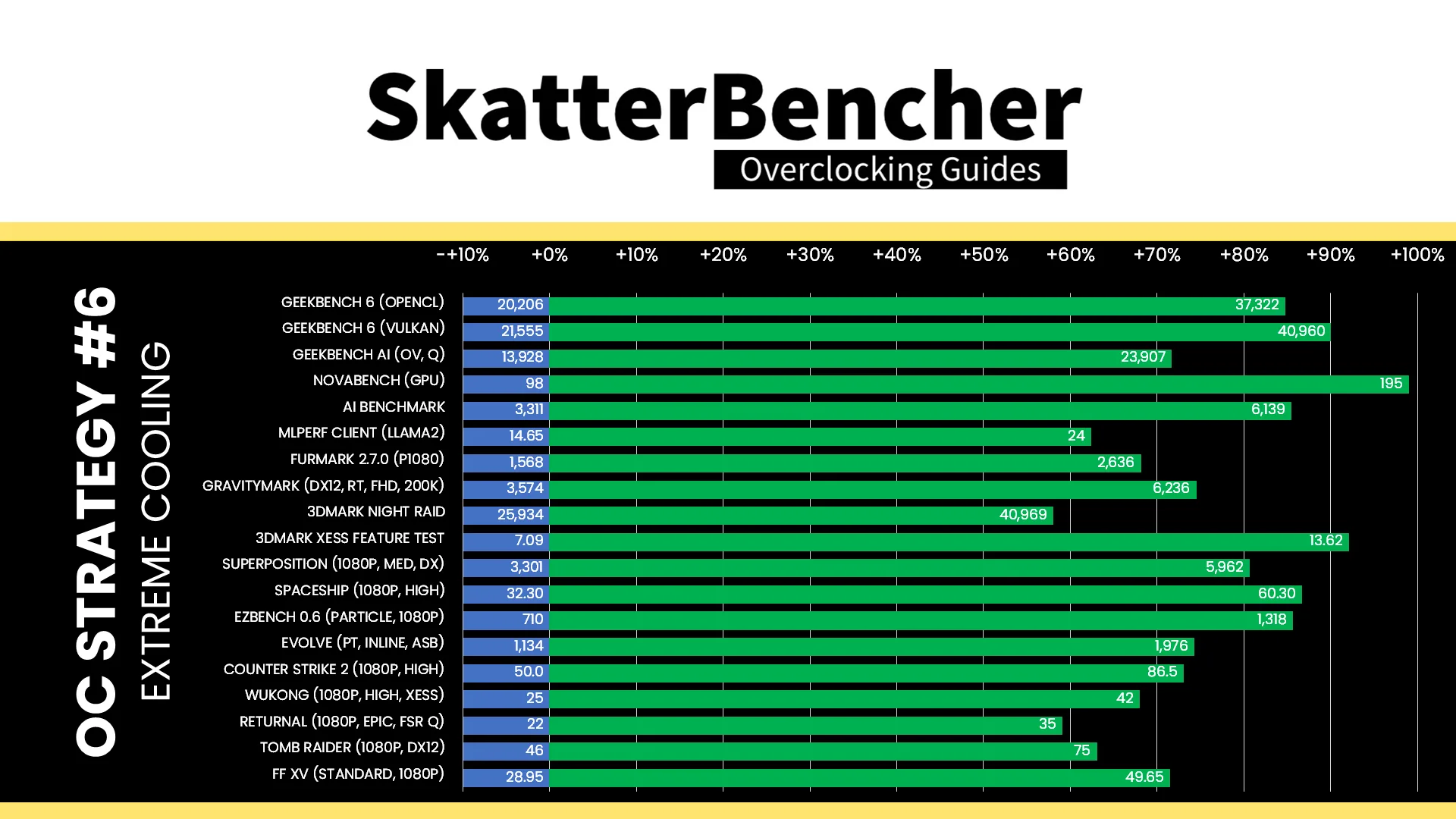

Delivers up to 2x the performance of stock settings.

At Computex, extreme overclocker SkatterBencher pulled off a shocking feat, shattering the GPU frequency world record, not with a discrete powerhouse like the RTX 5090, or even the RX 9070 XT for that matter, but with an integrated GPU from Intel. That's right! The overclocker pushed the built-in graphics on his Core Ultra 9 285K to an astonishing 4.25 GHz, with the voltage maintained at 1.7V and a freezing temperature of -170 degrees Celsius.

The GPU overclocking database maintained by SkatterBencher is dominated by discrete GPUs like the RTX 4090 and RX 6900 XT, and that's exactly what you would expect. Splave has previously uncovered that Arrow Lake boasts substantial overclocking potential and headroom, even though performance in general tasks is considered middling. In the run-up to these record attempts, SkatterBencher achieved a respectable 3.1 GHz at 1.3V; however, the primary instinct for any enthusiast overclocker is "How fast can I push this thing?" and that's precisely what he did.

The clock for the built-in graphics on Arrow Lake is derived from half the SoC reference clock. The reference clock defaults to 100 MHz, which gives a 50 MHz base. That base is multiplied by the GT ratio, typically 40x, for an operating frequency of 2 GHz, or a theoretical maximum of 4.25 GHz at 85x. SkatterBencher discovered that Arrow Lake's integrated GPU scales better with temperature than with voltage: at 1.3V, the GPU clocks at 3.1 GHz at 30 degrees Celsius, increasing to 3.6 GHz at -150 degrees Celsius.
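The clock arithmetic described here can be checked with a short Python sketch. It only restates the formula from the paragraph above (half the reference clock times the GT ratio); the function name and its parameters are illustrative, not part of any Intel or vendor tool.

```python
def igpu_clock_mhz(gt_ratio: int, ref_clock_mhz: float = 100.0) -> float:
    """Return the iGPU clock in MHz: (reference clock / 2) * GT ratio.

    Hypothetical helper for illustration; the formula is taken from the
    article's description, not from Intel documentation.
    """
    return (ref_clock_mhz / 2) * gt_ratio


# Default 40x ratio and the 85x ratio cited for the record run.
for ratio in (40, 85):
    print(f"{ratio}x -> {igpu_clock_mhz(ratio) / 1000:.2f} GHz")
```

With the default 100 MHz reference clock, this prints 2.00 GHz for the 40x ratio and 4.25 GHz for the 85x ratio, matching the figures in the article.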

The roadmap was set: the overclocker aimed for 1.6-1.7V alongside a frigid -170 degrees Celsius to breach the 4 GHz barrier. Throwing LN2 at the chip isn't all that simple, however. As Splave previously discovered, the system seldom boots if the SoC Tile hits -100 degrees Celsius or lower. Even so, with the help of Asus's in-house overclocker Shamino and the Asus ROG Z890 Apex motherboard, SkatterBencher achieved a record-breaking frequency of 4.25 GHz, as reported in GPU-Z.

The next goal was to gauge performance across a suite of benchmarks and games. For a more consistent and stable experience, the GPU was overclocked to 3.9 GHz at 1.6V and -160 degrees Celsius, coupled with DDR5-8600 RAM. Overclocking the Intel GPU yielded substantial performance improvements. In Novabench, it delivered double the stock performance. Gaming benchmarks showed a jump from 50 FPS to 86 FPS in Counter-Strike 2, and a boost from 25 FPS to 42 FPS in Black Myth: Wukong.
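To put the gaming numbers next to the clock increase, here is a rough Python sketch. It assumes the stock result was taken at the typical 2 GHz iGPU clock (the article does not state the stock clock for these runs), and the gains cannot be attributed to the GPU clock alone, since the overclocked run also used DDR5-8600 memory. The variable and function names are illustrative.

```python
# FPS figures quoted in the article: (stock, overclocked).
results = {
    "Counter-Strike 2": (50, 86),
    "Black Myth: Wukong": (25, 42),
}

# Assumption: stock runs at the typical 2 GHz (40x); the OC run used 3.9 GHz.
STOCK_GHZ, OC_GHZ = 2.0, 3.9
clock_ratio = OC_GHZ / STOCK_GHZ


def speedup(stock_fps: float, oc_fps: float) -> float:
    """Return the overclocked frame rate as a multiple of stock."""
    return oc_fps / stock_fps


for game, (stock_fps, oc_fps) in results.items():
    print(f"{game}: {speedup(stock_fps, oc_fps):.2f}x FPS "
          f"for a {clock_ratio:.2f}x clock")
```

Under these assumptions, both games gain roughly 1.7x in frame rate for a clock increase of about 1.95x, so the scaling is strong but not quite linear.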

Beyond 4 GHz, the performance gains were negligible, likely due to Intel's die-to-die interconnects. Increasing the reference clock from 100 MHz to 110 MHz offered some improvements, but they were not substantial. Still, this is a spectacular exhibition of what an integrated GPU can achieve. It is not practical for the average consumer, however, since you'd need a continuous supply of LN2, and voltages beyond 1.5V can drastically shorten a processor's lifespan.

Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

SyCoREAPER

All public recognition and means nothing. Not achievable for virtually anyone. Not practical and long term even with liquid nitrogen, the CPU/APU will fry itself from the voltage.

Interesting and my congratulations to them on achieving it but holistically pointless at the same time.

Konomi

SyCoREAPER said: All public recognition and means nothing. Not achievable for virtually anyone. Not practical and long term even with liquid nitrogen, the CPU/APU will fry itself from the voltage. Interesting and my congratulations to them on achieving it but holistically pointless at the same time.

Of course it is generally pointless, and those who participate in extreme overclocking know that. They do it for enjoyment. Bit like drag racing with cars - highly expensive, not practical for most people given in most circumstances you're not even going to be travelling at the sort of speeds the cars would hit, but people do it anyway. Besides, if people didn't have a natural curiosity to push things to their limits to see what would happen, things would be relatively boring and there'd be no innovation.

artk2219

SyCoREAPER said: All public recognition and means nothing. Not achievable for virtually anyone. Not practical and long term even with liquid nitrogen, the CPU/APU will fry itself from the voltage. Interesting and my congratulations to them on achieving it but holistically pointless at the same time.

Its interesting, really what im most surprised by is the performance scaling, almost double the performance for double the clocks. You would think that makes sense, but generally most architectures cant achieve this. They eventually reach a point of limited returns far below the increase in clock speed, cache size becomes a problem, cache speed becomes a problem, the error rate goes up and the resulting work output goes down. None of those were a problem here, and it shows that architecture can take some serious clocks, honestly that was pretty interesting.

SyCoREAPER

Konomi said: Of course it is generally pointless, and those who participate in extreme overclocking know that. They do it for enjoyment. Bit like drag racing with cars - highly expensive, not practical for most people given in most circumstances you're not even going to be travelling at the sort of speeds the cars would hit, but people do it anyway. Besides, if people didn't have a natural curiosity to push things to their limits to see what would happen, things would be relatively boring and there'd be no innovation.

I don't dismiss the work and achievement, it is impressive that an iGPU can outperform a highend dedicated GPU. Konomi made a pretty good comparison with drag racing.

artk2219 said: Its interesting, really what im most surprised by is the performance scaling, almost double the performance for double the clocks. You would think that makes sense, but generally most architectures cant achieve this. They eventually reach a point of limited returns far below the increase in clock speed, cache size becomes a problem, cache speed becomes a problem, the error rate goes up and the resulting work output goes down. None of those were a problem here, and it shows that architecture can take some serious clocks, honestly that was pretty interesting.

The way I see it, it's like putting a drag race record into a consumer (or back in in the day) StreetRacing mag. It's interesting but not directly relevant. Same here, it's the equivalent to dragracing and we are the streetracer mag community. It's interesting but not relevant to us.

Again, it's interesting and impressive but is the equivalent to the news mentioning a new cancer treatment we'll never see in real life or hear of again.

What would have made it more interesting would be some actual tests that every day people do, gaming, CAD, benchmarks, etc... to each their own though.

greenreaper

artk2219 said: Its interesting, really what im most surprised by is the performance scaling, almost double the performance for double the clocks. You would think that makes sense, but generally most architectures cant achieve this.

Arguably just suggests a bigger iGPU would be better to start with. I saw the same kind of scaling with the Ryzen 7600 iGPU in some games.