Alexa, Google Assistant, Siri Vulnerable to Laser Beam Hacking

Facebook Portal and other devices using MEMS microphones are also affected.

Security researchers from the University of Michigan and the University of Electro-Communications (Tokyo) have invented a new technique called “Light Commands” that can take over voice assistants, including Amazon’s Alexa, Google Assistant, Facebook Portal and Apple’s Siri, through the use of a laser beam.

What Are Light Commands?

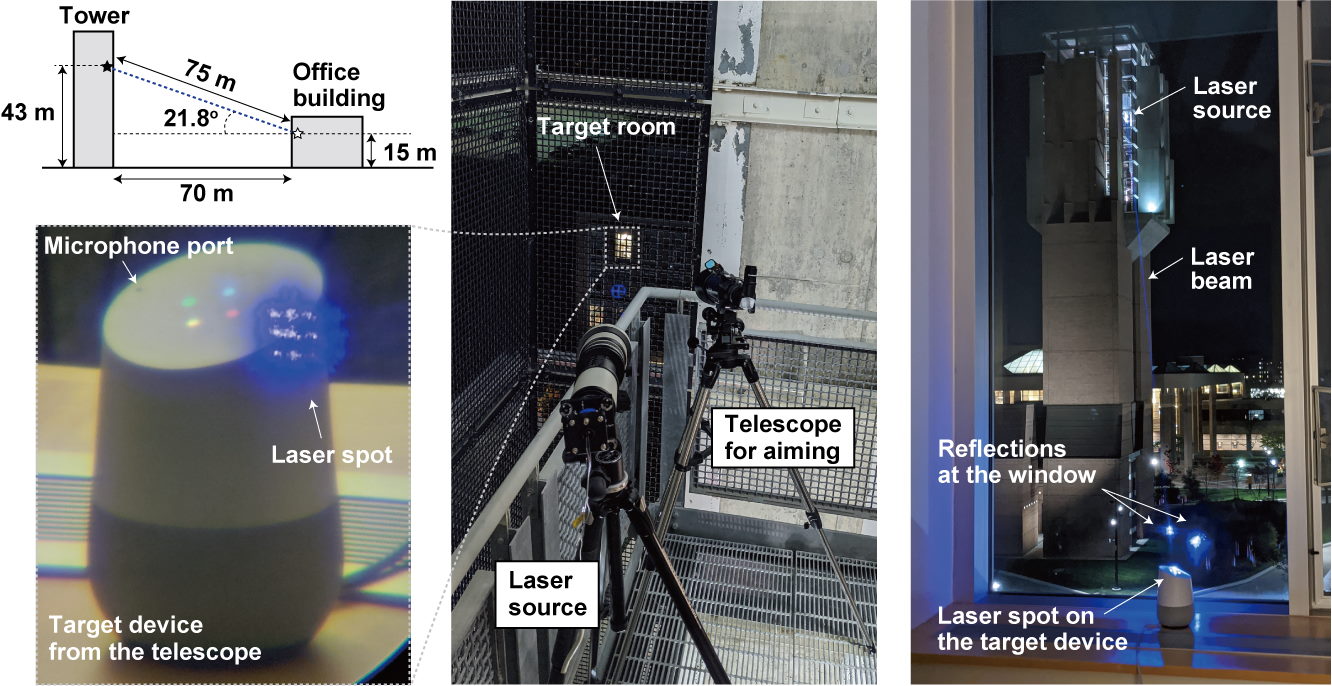

According to the researchers’ website, Light Commands is a vulnerability in Micro-Electro-Mechanical Systems (MEMS) microphones that allows attackers to remotely inject inaudible commands into popular voice assistants. The researchers were able to use this vulnerability to deliver an inaudible, invisible command via a laser beam targeted at the voice assistants.

The technique requires the laser to hit the target device’s microphone port, which gets significantly harder as the distance grows. However, the researchers noted that attackers could use binoculars or a telescope to help aim the beam.

The longest distance they tested was 110 meters (360.9 feet), which was the length of the longest hallway they could find at the time. But the researchers noted that Light Commands could easily travel much longer distances.

In addition to voice assistants, the researchers said the attack works across large distances against other devices that use MEMS microphones and can be hit by a laser beam, including smart speakers, tablets and smartphones.

What these researchers have demonstrated is that security attacks are getting increasingly advanced and that companies need to consider not just software attacks but also physical attacks against their devices. Many companies haven't even caught up on properly securing their devices' software yet, so it remains to be seen what they'll do about their devices' physical security.

Potential Mitigations

According to the researchers, voice recognition reportedly can’t protect users from this flaw, even if they switch it on (it is off by default), because it can be easily bypassed with text-to-speech technologies that imitate the owner’s voice.

The researchers proposed a few mitigations that could work better against this sort of attack.

One requires the user to authenticate with each new command, such as by answering a random question (something akin to a voice-based version of a CAPTCHA test).
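To make the idea concrete, here is a minimal Python sketch of a per-command challenge. It is not the researchers' implementation: the function names `new_challenge` and `verify_reply` are hypothetical, the arithmetic question is just one possible kind of random question, and the sketch assumes the spoken reply has already been transcribed to text by the assistant.

```python
import hmac
import secrets


def new_challenge() -> tuple[str, str]:
    """Return a (question, expected_answer) pair for a single command.

    Hypothetical helper: a random sum stands in for the "random question".
    """
    a = secrets.randbelow(9) + 1  # random integer in 1..9
    b = secrets.randbelow(9) + 1
    return f"What is {a} plus {b}?", str(a + b)


def verify_reply(expected: str, transcribed_reply: str) -> bool:
    """Check the transcribed reply against the expected answer.

    Assumes speech-to-text has already produced `transcribed_reply`.
    """
    reply = transcribed_reply.strip().encode("utf-8")
    return hmac.compare_digest(expected.encode("utf-8"), reply)


# Usage: ask the question aloud, then run the command only if the reply matches.
question, expected = new_challenge()
print(question)
print(verify_reply(expected, expected))
```

Because the question changes with every command, a pre-recorded or pre-modulated reply would not match the next challenge.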

Another requires manufacturers to increase the number of microphones on the device that are needed to receive a command. The laser beam can only target one microphone at a time, so requiring two mics to hear each command would, in theory, prevent this attack.
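The two-microphone check could look something like the following Python sketch. It is only an illustration under stated assumptions: the names `rms` and `accept_command`, the energy threshold of 0.05 and the use of simple RMS energy are all hypothetical choices, not details from the researchers or from any vendor's firmware.

```python
import math
from typing import Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square energy of one microphone channel."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def accept_command(
    channels: Sequence[Sequence[float]],
    threshold: float = 0.05,  # assumed energy floor, would need tuning per device
    min_active: int = 2,      # how many mics must hear the command
) -> bool:
    """Accept a command only if enough microphones registered it."""
    active = sum(1 for ch in channels if rms(ch) >= threshold)
    return active >= min_active


# A spoken command reaches both mics; a laser aimed at one port reaches only one.
tone = [0.5 * math.sin(2 * math.pi * i / 16) for i in range(160)]
silence = [0.0] * 160
print(accept_command([tone, tone]))     # both channels carry signal
print(accept_command([tone, silence]))  # only one channel carries signal
```

A real device would likely compare the channels more carefully than a single energy threshold allows, but the sketch shows the basic gate: a signal present on only one microphone is rejected.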

The final mitigation recommended is to add a physical barrier above the microphone's diaphragm to prevent light from hitting the microphone directly.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.