Nvidia Frame Generation Tech Plays Nice With AMD FSR 2.0, Intel XeSS

Nvidia says this is a feature, not a bug.

According to a report by Igor's Lab, Nvidia's DLSS 3.0 frame generation works with other upscaling algorithms besides DLSS. In testing, Igor could run Nvidia's frame generation technology with AMD's FSR 2.1 and Intel's XeSS temporal upscalers in Spider-Man Remastered. This gives us a good idea of how AMD's future FSR 3.0 update might work, and of how XeSS might behave if Intel adds frame generation to it sometime later.

DLSS 3.0 is Nvidia's latest version of Deep Learning Super Sampling. Counterintuitively, version 3.0 does not enhance the super sampling portion of the tech but instead adds AI frame generation, allowing supported Nvidia GPUs to vastly increase frame rates compared to rendering at native resolution.

The technology works by inserting a single AI-generated frame between two actual 3D-rendered frames when the tech runs on a supported game. Unfortunately, a nasty side-effect of this type of frame generation is increased input lag, the delay before mouse and keyboard inputs are registered on screen. As a result, Nvidia requires its Reflex technology to be added to all DLSS 3.0-supported games to reduce the input lag to playable levels.
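The interleaving described above can be sketched in a few lines of Python. This is only an illustration of the frame ordering, not Nvidia's algorithm: the function name `interleave_generated` and the `gen(...)` labels are hypothetical placeholders standing in for the AI-generated frames.

```python
def interleave_generated(rendered):
    """Return the display order with one generated frame between each rendered pair.

    `rendered` is a list of labels for conventionally rendered frames.
    The "gen(a,b)" strings are placeholders, not real interpolated images.
    """
    if not rendered:
        return []
    sequence = [rendered[0]]
    for prev_frame, next_frame in zip(rendered, rendered[1:]):
        # The in-between frame depends on both of its neighbours.
        sequence.append(f"gen({prev_frame},{next_frame})")
        sequence.append(next_frame)
    return sequence


frames = ["R0", "R1", "R2", "R3"]
sequence = interleave_generated(frames)
print(sequence)
print(len(frames), "rendered ->", len(sequence), "displayed")
```

In this sketch, each in-between frame needs both of its neighbours, so it cannot be shown until the later rendered frame already exists. That ordering is one plausible way to picture where the added input lag comes from.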

One cool feature of DLSS 3.0 is that Nvidia separated the frame generation portion of the technology from the actual DLSS upscaling tech. The two features can therefore work independently, allowing the frame generator to be used at native resolution or with other upscalers. That is how Igor was able to conduct his tests, and he confirmed that Nvidia intended for DLSS 3.0 to work this way, so it's not a bug.

Benchmarks

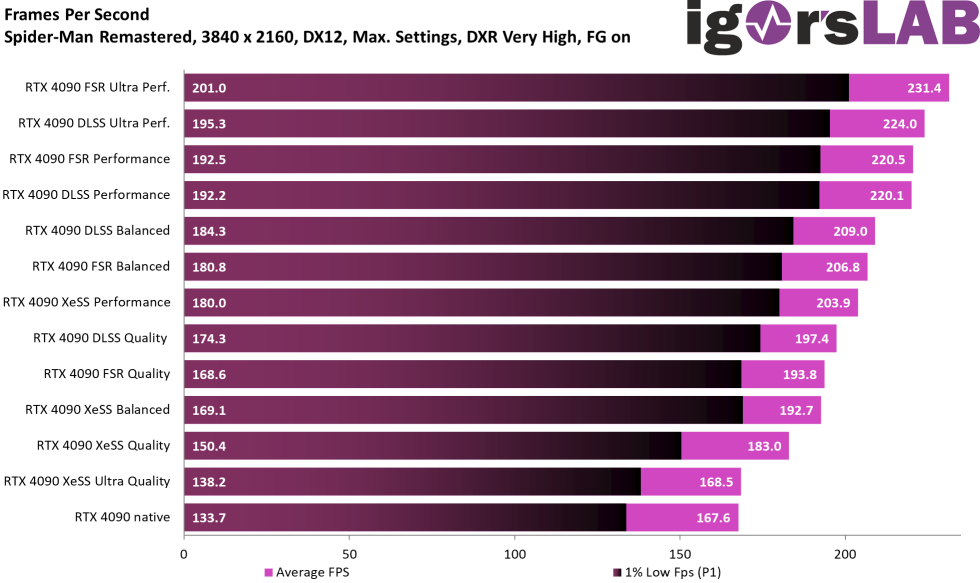

Igor tested DLSS 3.0's frame generation with Nvidia's actual DLSS upscaler, FSR 2.1, and XeSS in Spider-Man Remastered, operating on a GeForce RTX 4090 GPU with all the upscaling modes.

With frame generation enabled, the 4090 at its native resolution achieved an average FPS of 167.6. The closest and most demanding upscaling mode is Intel's XeSS Ultra Quality mode, sitting at just one frame above native at 168.5 FPS.

A bit higher is XeSS Quality mode at 183 FPS, followed by XeSS Balanced at 192.7 FPS. Then Nvidia's DLSS Quality and FSR Quality sit above the XeSS Balanced mode at 197.4 FPS and 193.8 FPS, respectively. Next is XeSS Performance mode at 203.9 FPS, FSR Balanced at 206.8 FPS, and DLSS Balanced at 209.0 FPS.


Finally, we have the FSR and DLSS Performance and Ultra Performance modes sitting at the top of the performance chart, since Intel lacks an Ultra Performance mode in its XeSS upscaler. DLSS Performance and FSR Performance sit right against each other at 220.1 FPS and 220.5 FPS, respectively. DLSS Ultra Performance is the second-fastest mode at 224.0 FPS, and FSR Ultra Performance takes the lead at 231.4 FPS.

Overall, this means that DLSS and FSR 2.1 are nearly identical in terms of performance, with frame rate margins that won't even be noticeable while gaming. XeSS, on the other hand, appears to be a bit more demanding, with its faster modes being an entire tier slower than FSR and DLSS's slightly higher-quality modes.
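To put the gaps above in relative terms, the following minimal sketch computes each mode's uplift over the native result with frame generation enabled. The FPS values are the ones quoted in this article; the variable and function names are our own.

```python
# Average FPS with frame generation enabled, as quoted in the article.
NATIVE_FG_FPS = 167.6
results = {
    "XeSS Ultra Quality": 168.5,
    "XeSS Quality": 183.0,
    "XeSS Balanced": 192.7,
    "FSR Quality": 193.8,
    "DLSS Quality": 197.4,
    "XeSS Performance": 203.9,
    "FSR Balanced": 206.8,
    "DLSS Balanced": 209.0,
    "DLSS Performance": 220.1,
    "FSR Performance": 220.5,
    "DLSS Ultra Performance": 224.0,
    "FSR Ultra Performance": 231.4,
}


def uplift_percent(fps, baseline=NATIVE_FG_FPS):
    """Percentage gain of `fps` over `baseline`."""
    return (fps / baseline - 1.0) * 100.0


uplift = {mode: uplift_percent(fps) for mode, fps in results.items()}

# Print the modes from smallest to largest gain over native.
for mode, pct in sorted(uplift.items(), key=lambda item: item[1]):
    print(f"{mode:<24} {results[mode]:6.1f} FPS  {pct:+5.1f}%")
```

On these numbers, XeSS Ultra Quality gains well under 1% over native, while FSR Ultra Performance gains roughly 38%.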

Then, of course, you have XeSS Ultra Quality, which is by far the slowest, beating out the native resolution by just a single FPS. But this can still be beneficial, since XeSS might have better anti-aliasing than the game's built-in TAA, SMAA, or other AA options.

Frame Generation Vs. Native Frame Rendering

Compared to frame generation disabled, all upscaling modes dwarf what the RTX 4090 can do with native frame rendering. Here we see an obvious CPU bottleneck, with the game capping out at 133 to 135 FPS on almost all presets, except native resolution, FSR Quality, and Ultra Quality, which sit between 125 FPS and 130 FPS.

But even between these three slower modes, the frame rate comparisons are so close they can be considered within the margin of error.
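As a rough check on how large that gap is, this sketch divides two of the frame-generation results quoted earlier by the CPU-limited ranges given above. Only ranges are reported for the runs without frame generation, so the output is a range too. The helper name `speedup_range` is our own.

```python
def speedup_range(fg_fps, base_low, base_high):
    """Return (min, max) speedup of a frame-generation result over a baseline FPS range."""
    return fg_fps / base_high, fg_fps / base_low


# Native resolution: 167.6 FPS with frame generation vs. 125-130 FPS without.
native = speedup_range(167.6, 125.0, 130.0)

# FSR Ultra Performance: 231.4 FPS with frame generation vs. the 133-135 FPS cap.
fastest = speedup_range(231.4, 133.0, 135.0)

print(f"Native:                {native[0]:.2f}x to {native[1]:.2f}x")
print(f"FSR Ultra Performance: {fastest[0]:.2f}x to {fastest[1]:.2f}x")
```

On these figures, frame generation lifts the displayed frame rate by about 1.3x at native resolution and about 1.7x in the fastest mode.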

Image Comparisons and Conclusion

Unfortunately, Igor's image quality comparisons only comprise static scenes with no motion. As a result, we can't see how Nvidia's frame generation behaves in motion with FSR 2.1 and XeSS.

Overall, frame generation works very well with FSR 2.1 and XeSS, not just DLSS. In addition, it can provide games with alternative upscaling options, which might look better than DLSS, depending on the upscaler implementation. In Spider-Man Remastered's current state, though, DLSS is still a great option, with FSR 2.1 being the only alternative that can match DLSS in performance.

Speaking of FSR, Igor's testing provides us with a proof of concept of how FSR 3.0 will look when AMD releases it later in 2023. From the tests shown, Nvidia's frame generation works great with FSR 2.1 from the performance side.

Now all that's left to see is how FSR 3.0 will handle its version of frame generation, which we haven't seen yet. We don't know how AMD will be designing its frame generation version, but this will make or break FSR 3.0. We're hoping the tech can be used on any GPU like AMD has done with FSR up until now, but we don't know yet. It'll depend on the hardware requirements of FSR 3.0's motion vector solution and whether or not it'll require a hardware-only solution or a software solution that can work on standard GPU cores.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


setx

There is nothing nice about DLSS 3.0. While DLSS 2 feeds you somewhat fake pixels, with 3.0 half of what you see is completely fake.

What's the point of slightly higher fps when you lose almost half of true frames with actual information and get increased input lag? Reviewers should stop misdirecting people with those fps graphs that aren't actually apples-to-apples comparison.

blacknemesist

ripbeefbone said: Buddy everything you're looking at in a videogame is fake

Sshhhh, don't tell him, you're going to break his perception of reality, he will never mentally recover /s

Xajel

blacknemesist said: Sshhhh, don't tell him, you're going to break his perception of reality, he will never mentally recover /s

He chose this, he took the blue pill.

RichardtST

So much going on here.... So much just plain wrong...

<begin rant>

Interesting read on Nvidia Reflex "technology". Simply put, it removes most of the poor design crap in the pipelines between the CPU and the display. Would be nicer if it was all streamlined to begin with, but it is what it is, at least for now. Personally, I would expect that when I hand a generated frame to the driver, it is the next thing to go out to the display. I generate the current frame as I am viewing the previous. Anything less is simply a garbage design that needs to be fixed. No excuses.

Don't even get me started on the polling of keyboard and mice. This is absolutely ridiculous. Should have been fixed back in the 80s. Probably was. Sporadic inputs like keyboard and mice should be interrupt-driven, not polled. Again, hideous design decision that should have been corrected a long time ago.

Big news: artificially high frame rates (like AI generated frames!) and most ultra high-resolution options are simply to make things pretty. They are known to cause lag. Serious gamers turn them all off. They use low resolution for the very fact that the data gets to the viewable screen significantly faster. Less lag = more wins. Unless you are playing something that doesn't require quick reaction times, then of course, making pretty is nice.

And last but not least. No one, even the experts, can tell the difference between real ray tracing and faked ray tracing. No one needs it. Please make it go away and use the freed-up hardware space for something else.

No, wait... one more... Bring back blowers! These stupid GPUs are getting too big and there is no way to get the heat out of the case without installing a dozen case fans and having open screens on all six sides. It's silly at this point. Give me a blower so I can cram my GPU into a nice small desktop case without taking up half my desk. Surely someone can devise a nice quiet blower fan.

<end rant>

Chung Leong

This tech is basically using AI to perform what the human brain is perfectly capable of doing naturally. Generations have watched movies at 24 FPS, never complaining of choppiness. Our brain without our conscious effort would correctly infers what our eyes aren't seeing. So why this need for very high frame rate in games? Have people become retarded? Has technological advancement deprived the present generation of mental exercises such that their brains failed to properly develop?