We Tested Intel's XeSS in Shadow of the Tomb Raider on Multiple GPUs

Benefits Pascal and later, RDNA 2 and Arc — most of the time


Shadow of the Tomb Raider just received a new update featuring support for Intel's new XeSS technology, making it one of the first games on the planet to publicly offer XeSS. If you own the game, you can try XeSS right now for yourself, and it works on many GPUs, including Nvidia's and AMD's best graphics cards. Or does it?

This is where things can get a bit confusing with XeSS. It was created by Intel for the Arc GPUs, more or less as a direct competitor to DLSS. However, XeSS is supposed to have a fallback mode where it runs using DP4a instructions: four-element, 8-bit integer vector dot product instructions. What the hell does that mean, and which graphics cards support the feature? That's a bit more difficult to say.
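To make that definition concrete, here is a minimal Python model of what a single signed DP4a operation computes: two 32-bit registers each hold four signed 8-bit values, the matching lanes are multiplied, and the four products are added to a 32-bit accumulator. It is a behavioral sketch only. The helper names (`pack_int8x4`, `unpack_int8x4`, `wrap_int32`, `dp4a`) are made up for illustration, and on real hardware this is one instruction (CUDA exposes it on Pascal and later as the `__dp4a` intrinsic), not a Python loop.

```python
def pack_int8x4(values):
    """Pack four signed 8-bit integers into one 32-bit word (lane 0 = lowest byte)."""
    assert len(values) == 4 and all(-128 <= v <= 127 for v in values)
    word = 0
    for lane, v in enumerate(values):
        word |= (v & 0xFF) << (8 * lane)  # store the two's-complement byte for this lane
    return word


def unpack_int8x4(word):
    """Split a 32-bit word back into four signed 8-bit lanes."""
    lanes = []
    for lane in range(4):
        byte = (word >> (8 * lane)) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)  # sign-extend the byte
    return lanes


def wrap_int32(x):
    """Wrap to the signed 32-bit range (assumes two's-complement overflow behavior)."""
    return ((x + 2**31) % 2**32) - 2**31


def dp4a(a, b, c):
    """Signed DP4a: c + a0*b0 + a1*b1 + a2*b2 + a3*b3, using 8-bit lanes of a and b."""
    products = (x * y for x, y in zip(unpack_int8x4(a), unpack_int8x4(b)))
    return wrap_int32(c + sum(products))


a = pack_int8x4([1, 2, 3, 4])
b = pack_int8x4([5, 6, 7, 8])
print(dp4a(a, b, 10))  # 10 + 5 + 12 + 21 + 32 = 80
```

Packing four values per register is the point: one instruction performs four multiplies and four adds, which is why DP4a-capable GPUs can run XeSS's 8-bit network math far more cheaply than cards that must do it one lane at a time.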

Officially, we know all Nvidia GPUs since the Pascal architecture (GTX 10-series) have supported DP4a. Intel says its Gen11 Graphics (Ice Lake) and later support DP4a, and of course Arc GPUs support Xe Matrix Extensions (XMX), the faster alternative to DP4a. What about AMD? In theory, Vega 20, Navi 12 (we're not certain about this one), and later GPUs support it.

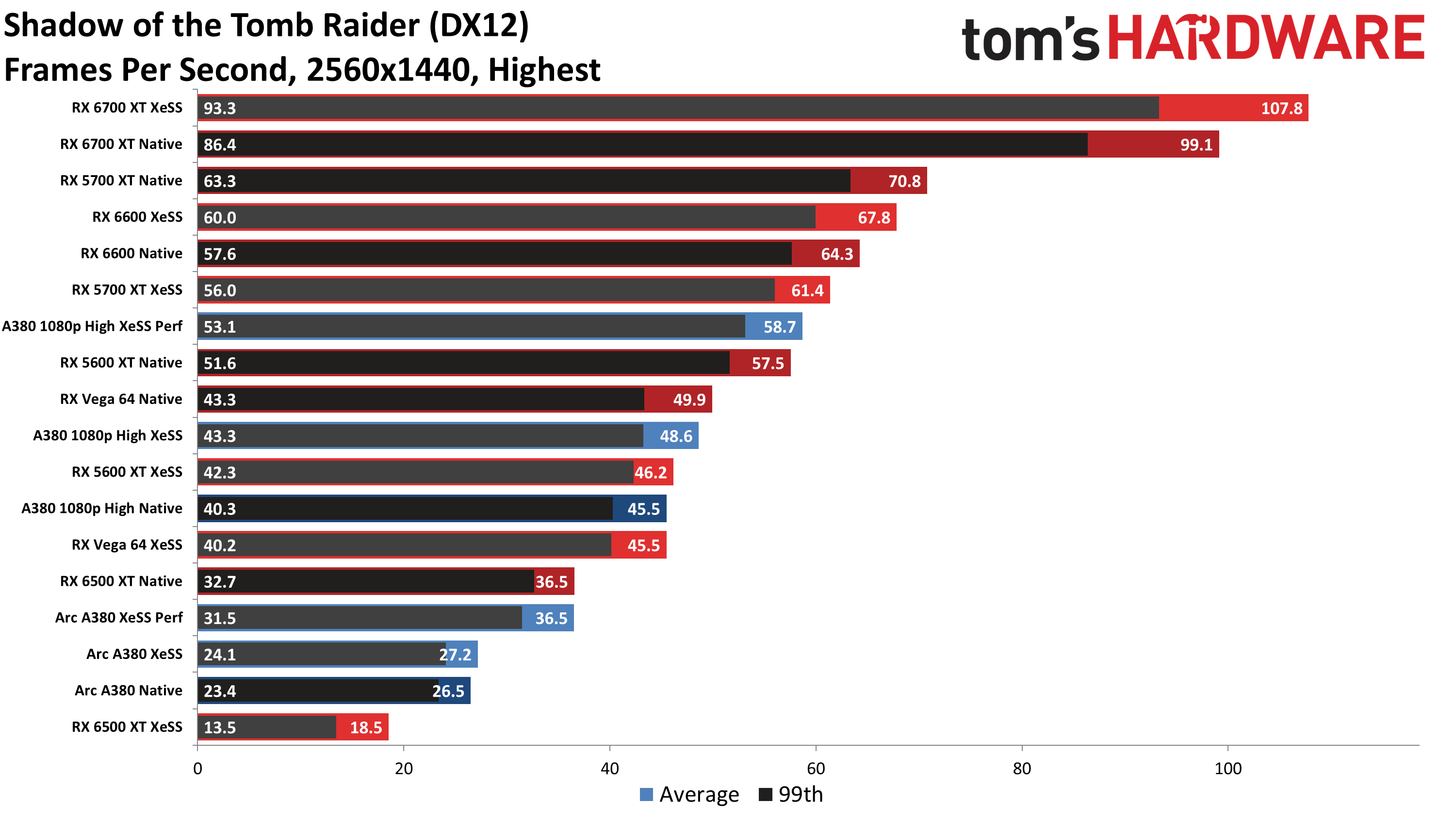

Here's where things really get odd, because we did some quick benchmarking of Shadow of the Tomb Raider at 1440p and the Highest preset (without ray-traced shadows, so that we could run the same test on all GPUs). Guess which GPUs allowed us to enable XeSS: all of them. But the benchmark results are telling, as XeSS didn't benefit quite a few of the GPUs and in some cases reduced performance.

What's going on? It looks like DP4a (8-bit integers) is being emulated via 24-bit integers on architectures that don't natively support it. Emulation generally reduces performance quite a bit, and so it would make sense that cards like the RX Vega 64 ran slower. The AMD Navi 10 (RX 5600 XT and RX 5700 XT) GPUs also lost performance with XeSS, and if the Navi 12 information is correct, only the Radeon Pro 5600M (used in some MacBooks and laptops) fully supports DP4a from the RDNA generation.
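Since the 24-bit emulation path is our inference rather than something Intel has documented here, the following Python sketch is purely hypothetical. It shows what producing one DP4a result looks like without the dedicated instruction: each 8-bit lane must be extracted and sign-extended, multiplied using a 24-bit multiply (the `mul24` function is an illustrative stand-in; an 8-bit by 8-bit product is at most 16,384 in magnitude, so 24 bits is plenty), and then added to the accumulator. The operation tally is our own simplification, counting each extract, multiply, and add as one step, not a measurement of any real shader compiler.

```python
def sign_extend8(byte):
    """Interpret an 8-bit value (0-255) as a signed two's-complement integer."""
    return byte - 256 if byte & 0x80 else byte


def mul24(x, y):
    """Hypothetical stand-in for a 24-bit integer multiply instruction."""
    limit = 1 << 23
    assert -limit <= x < limit and -limit <= y < limit, "operand exceeds 24 bits"
    return x * y


def emulated_dp4a(a, b, c):
    """Compute the DP4a result lane by lane, without a DP4a instruction.

    Returns (result, ops), where ops is a rough tally that counts each lane
    extract, multiply, and add as one operation (an illustrative assumption).
    """
    acc, ops = c, 0
    for lane in range(4):
        shift = 8 * lane
        xa = sign_extend8((a >> shift) & 0xFF)  # extract + sign-extend lane of a
        xb = sign_extend8((b >> shift) & 0xFF)  # extract + sign-extend lane of b
        acc += mul24(xa, xb)                    # 24-bit multiply, then accumulate
        ops += 4                                # 2 extracts + 1 multiply + 1 add
    return acc, ops


# Lanes of a are 1, 2, 3, 4 and lanes of b are 5, 6, 7, 8 (lowest byte first).
print(emulated_dp4a(0x04030201, 0x08070605, 10))  # (80, 16)
```

In this simplified count, one native instruction turns into 16 operations, which helps explain how the savings from rendering fewer pixels can disappear on cards without native DP4a.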

What about newer cards? Results were better, sort of, with most RX 6000-series cards benefiting; not by a lot, but something is better than nothing. There were also some oddities with certain cards, most notably every GPU we tested that had 6GB of VRAM. We may have been hitting some form of memory bottleneck, but this is a relatively old game and 6GB ought to have been sufficient.

Keep in mind that XeSS is a brand-new AI upscaling algorithm that aims to compete with the likes of Nvidia DLSS 2 and AMD FSR 2. As this is the first game we've been able to test with XeSS, there may be some early bugs that are still being worked out. As with DLSS and FSR, there are multiple upscaling modes: Ultra Quality, Quality, Balanced and Performance. Ultra Quality aims to provide an Nvidia DLAA-like approach, with a focus on image quality over frame rate, while the other three modes prioritize frame rate over image quality.
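For a rough sense of how much rendering work each mode saves, the Python sketch below computes the internal render resolution for a 2560x1440 output. The per-axis scale factors (1.3x, 1.5x, 1.7x and 2.0x) are an assumption based on Intel's XeSS 1.0 documentation at launch; later SDK releases changed them, so treat the output as illustrative.

```python
# Assumed XeSS 1.0 per-axis upscaling factors; later SDK versions use different values.
XESS_SCALE = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}


def render_resolution(out_w, out_h, mode):
    """Internal resolution rendered before XeSS upscales to out_w x out_h."""
    scale = XESS_SCALE[mode]
    return round(out_w / scale), round(out_h / scale)


def pixel_fraction(mode):
    """Share of native pixels rendered; the scale applies to both axes."""
    return 1 / XESS_SCALE[mode] ** 2


for mode in XESS_SCALE:
    w, h = render_resolution(2560, 1440, mode)
    print(f"{mode:>13}: {w}x{h} ({pixel_fraction(mode):.0%} of native pixels)")
```

Because the factor applies to both width and height, a 2.0x Performance mode renders 1280x720, or a quarter of the pixels of native 1440p.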

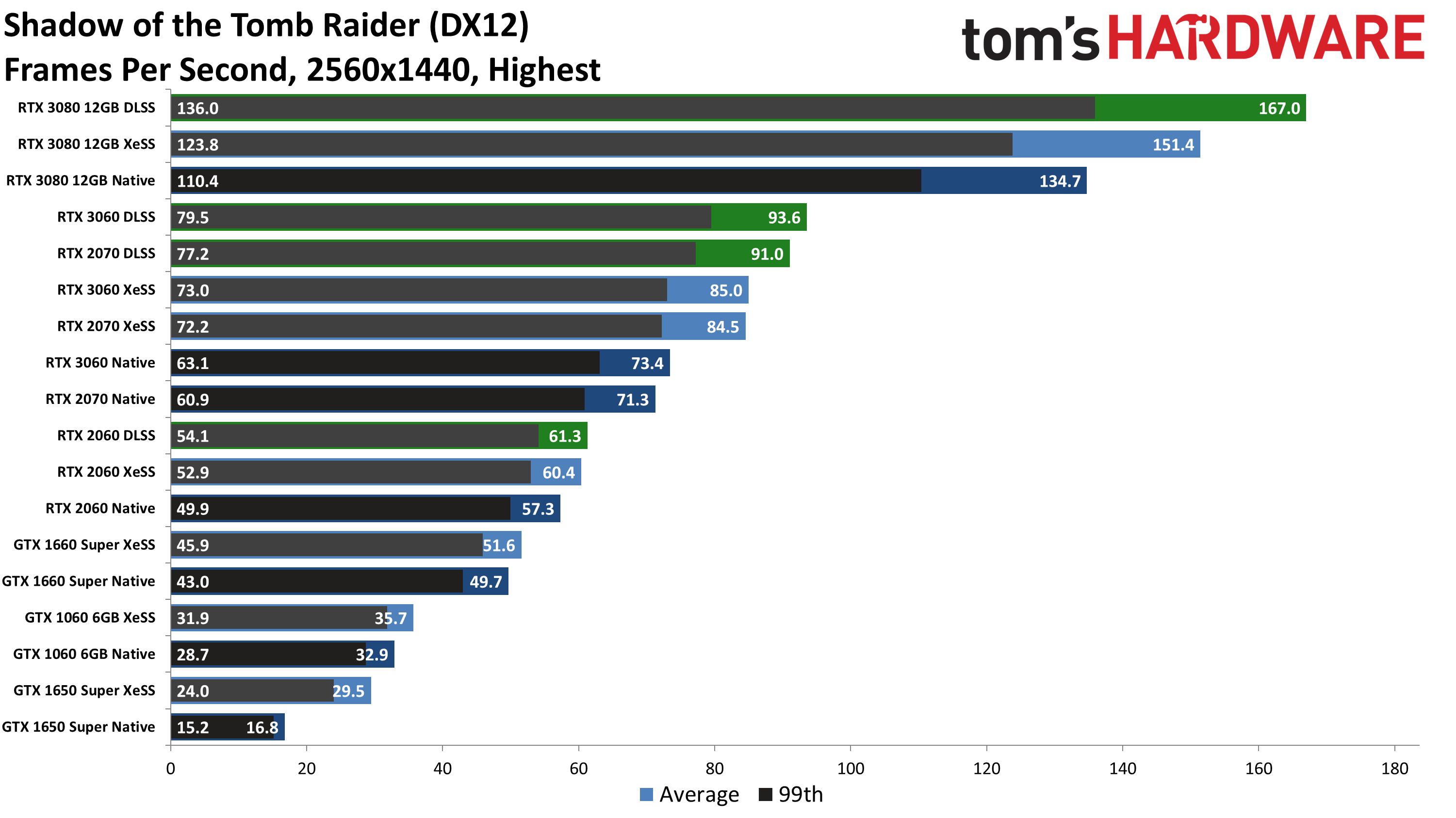

Anyway, let's get to the benchmark results. We tested native, XeSS Quality, and DLSS Quality (where available). We also tested a few other options on select cards, but those results aren't shown in the charts. We didn't test every possible GPU by any stretch, but we did include at least one card from quite a few different generations of hardware.

For the most part, XeSS ran quite smoothly in its current state, with overall image quality on par with the game's DLSS 2 implementation. There were only a few areas where we saw image quality issues, like pixelation on grated fences and occasional pixelation in Lara's hair. We'll dive deeper into XeSS image quality comparisons at a later date, when more games support it.

The performance benefit of running XeSS varied quite a lot. It's no surprise that XeSS was effectively useless on cards without native DP4a support. We tested several AMD cards that showed negative performance deltas: Vega 64, 5600 XT, 5700 XT, and even the RX 6500 XT. XeSS in Performance mode on the 5700 XT basically matched native performance, despite rendering only 1/4 as many pixels before upscaling.

Cards that showed improved performance with XeSS also showed some oddities. GPUs with less than 8GB VRAM barely improved at all — and that applies to DLSS on the RTX 2060 as well. Everything from the GTX 1060 6GB to the RTX 2060 6GB improved incrementally, by 4% to 8% at most. That doesn't make much sense, but perhaps something in the way Shadow of the Tomb Raider implements DLSS and XeSS is to blame. Intel's own Arc A380, another 6GB card, didn't benefit much either at 1440p Highest settings, improving by just 3%. So we tested it at 1080p High just to rule out memory capacity as a bottleneck. That showed a 7% improvement, still barely worth mentioning.

Low-spec GPUs like the 4GB GTX 1650 Super appeared to benefit mostly because the card ran out of VRAM at native 1440p. However, the RX 6500 XT, which also has 4GB of VRAM, showed the opposite: it ran much better at native. Bug, underpowered hardware, lack of VRAM? We're not entirely sure, and it's too early to make any definitive statements.

But let's talk about the cards that did benefit. Nvidia's RTX 2070 8GB, RTX 3060 12GB, and RTX 3080 12GB showed performance increases ranging from 12% to 18% with XeSS in Quality mode, and Performance mode helped even more on the couple of cards we checked. Still, DLSS on those same cards improved performance by 24% to 28% in Quality mode. AMD's RX 6600 8GB and RX 6700 XT 12GB also showed improvements of 6% and 9%, respectively, so if you have an RDNA 2 card with 8GB or more VRAM, it looks like you should see a net gain... just not a big one. It's too bad Shadow of the Tomb Raider didn't also add FSR 2 support with the XeSS update, so that we could see another alternative to DLSS and XeSS.

Put simply, Shadow of the Tomb Raider hasn't been the best of launches for a new technology. XeSS didn't help much on the cards that 'properly' supported it, and even DLSS had odd performance results on cards that didn't have at least 8GB VRAM. The two are probably related. But now that the XeSS SDK has been released to the public, we could see quite a few more games adopt the tech, and we'll be keeping an eye on how they perform. Death Stranding, for example, just released its XeSS patch today (9/28/2022), and more games are certainly incoming.

Other bugs we encountered included at least one GPU (Aaron's RTX 2060 Super) where XeSS became completely unusable if the game was started without XeSS and XeSS was then enabled in-game. That didn't happen on the other GPUs we checked, though we didn't check every card we tested.

Bottom line: XeSS is now available, and Shadow of the Tomb Raider is the first public release to get it. You can give it a shot on whatever GPU you might be running, but you'll be most likely to benefit if you have an Nvidia Pascal GPU or later, an AMD RX 6000 card, or an Intel Arc GPU. You'll also probably want 8GB or more VRAM or you may be limited to single digit percentage gains.

Nvidia RTX cards are still better off with DLSS, but GTX owners might appreciate the feature. Still, having choice isn't a bad thing, and hopefully XeSS adoption, performance, and compatibility will continue to improve.

If you do give Shadow of the Tomb Raider with XeSS a try, post your GPU and results in our comments. (We tested with our standard Core i9-12900K GPU test PC.) If you want to compare your results with ours, note that we used only the first sequence of the built-in benchmark, capturing frametimes for 39 seconds using OCAT (FrameView and other utilities should also be fine).
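If you'd like to turn your own capture into comparable numbers, a minimal Python sketch follows. It assumes a PresentMon-style CSV with one row per frame and a `MsBetweenPresents` column holding frametimes in milliseconds, which is the format OCAT writes; other tools may name the column differently, so check your file's header row. The function names are our own, and the 99th-percentile figure is one common way to summarize stutter, not necessarily the exact method behind our charts.

```python
import csv
import statistics

# Assumption: PresentMon-style capture (as written by OCAT) with per-frame times in ms.
FRAMETIME_COLUMN = "MsBetweenPresents"


def load_frametimes(path):
    """Read per-frame times (ms) from a capture CSV."""
    with open(path, newline="") as f:
        return [float(row[FRAMETIME_COLUMN]) for row in csv.DictReader(f)]


def summarize(frametimes_ms):
    """Return (average fps, 99th-percentile fps) for one benchmark pass."""
    # Average fps = frames rendered / total seconds elapsed.
    avg_fps = 1000 * len(frametimes_ms) / sum(frametimes_ms)
    # 99th-percentile frametime (only 1% of frames are slower), expressed as fps.
    p99_ms = statistics.quantiles(frametimes_ms, n=100)[98]
    return avg_fps, 1000 / p99_ms


def percent_change(native_fps, upscaled_fps):
    """Relative gain (positive) or loss (negative) versus native rendering."""
    return 100 * (upscaled_fps - native_fps) / native_fps
```

For example, summarizing a native capture and an XeSS Quality capture (say, hypothetical files `native.csv` and `xess_quality.csv` loaded with `load_frametimes`) and passing the two averages to `percent_change` yields the kind of percentage uplift discussed above.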


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


oofdragon Madness.. they should just all agree on a open source standard. The way things are we may In a while open the graphics settings and have dozens of these XGD DlLs ExS FPa weird nomenclatures crawling at us. I vote for a open source world, mind you with one single console that can run Mario, sonic, master chief, crash, etc. I mean seriously, how did we get here? These bitches better team up and give us ray traced Zelda

JarredWaltonGPU

oofdragon said: Madness.. they should just all agree on a open source standard. The way things are we may In a while open the graphics settings and have dozens of these XGD DlLs ExS FPa weird nomenclatures crawling at us. I vote for a open source world, mind you with one single console that can run Mario, sonic, master chief, crash, etc. I mean seriously, how did we get here? These bitches better team up and give us ray traced Zelda

jkflipflop98 That comic is the first thing that popped into my head as well.

Microsoft will end the bickering as usual by putting upscaling in the directX package.

JarredWaltonGPU

jkflipflop98 said: That comic is the first thing that popped into my head as well.

Microsoft will end the bickering as usual by putting upscaling in the directX package.

I would like to see this happen. I don't think Nvidia will give up on DLSS promotion any time soon, but if we got a Microsoft DirectX Upscaling API that would:

Use Nvidia Tensor cores

Use Intel XMX cores

Support any future AMD tensor alternative

Fall back to shader-based upscaling (FSR2.1?) otherwise

That would be pretty darn sweet. And it could even use the API on the Xbox consoles.

renz496

oofdragon said: Madness.. they should just all agree on a open source standard. The way things are we may In a while open the graphics settings and have dozens of these XGD DlLs ExS FPa weird nomenclatures crawling at us. I vote for a open source world, mind you with one single console that can run Mario, sonic, master chief, crash, etc. I mean seriously, how did we get here? These bitches better team up and give us ray traced Zelda

There are times an open source standard is the good solution, and there are times it ends up being a bad one. The good one? Like what happened with VRR. The bad one: look what happened with OpenGL and OpenCL. Then we also have what happened with multi-GPU support. It is supported natively in DX12. Some people expected that making the feature a standard inside a major 3D API like DirectX would expand multi-GPU development and adoption to places SLI and CF could not reach before. In the end, game developers just went "haha" about it. For AI upscaling like DLSS and XeSS, if MS releases a standard that can be used by every hardware vendor, I can already see some of the hurdles it is going to face.

JarredWaltonGPU You know what would be cool? If someone could figure out a way to hack DLSS to make the Nvidia Tensor core calculations run on Intel's XMX cores, or use the GPU's FP16 capability if neither Tensor nor XMX cores are available. I suspect the latter would be more difficult and would run terribly slowly, but on a theoretical level, hacking the DLL to make it use XMX should be possible. Just imagine how angry that would make Nvidia!