SMI Eye Tracking In A Vive HMD, Hands-On

After an announcement earlier this week, we were anxious to get some hands-on time with a Vive headset that had SMI’s eye-tracking technology on board. At GDC, we met with the company in Valve’s showcase area and got a chance to see what the companies built together.

Embedded, Not Bolted On

It’s important to understand how SMI’s eye trackers have been implemented in the Vive. They haven’t been bolted on or attached in some other kludgy way; they’re embedded inside the headset, just as Tobii’s EyeChip has been.

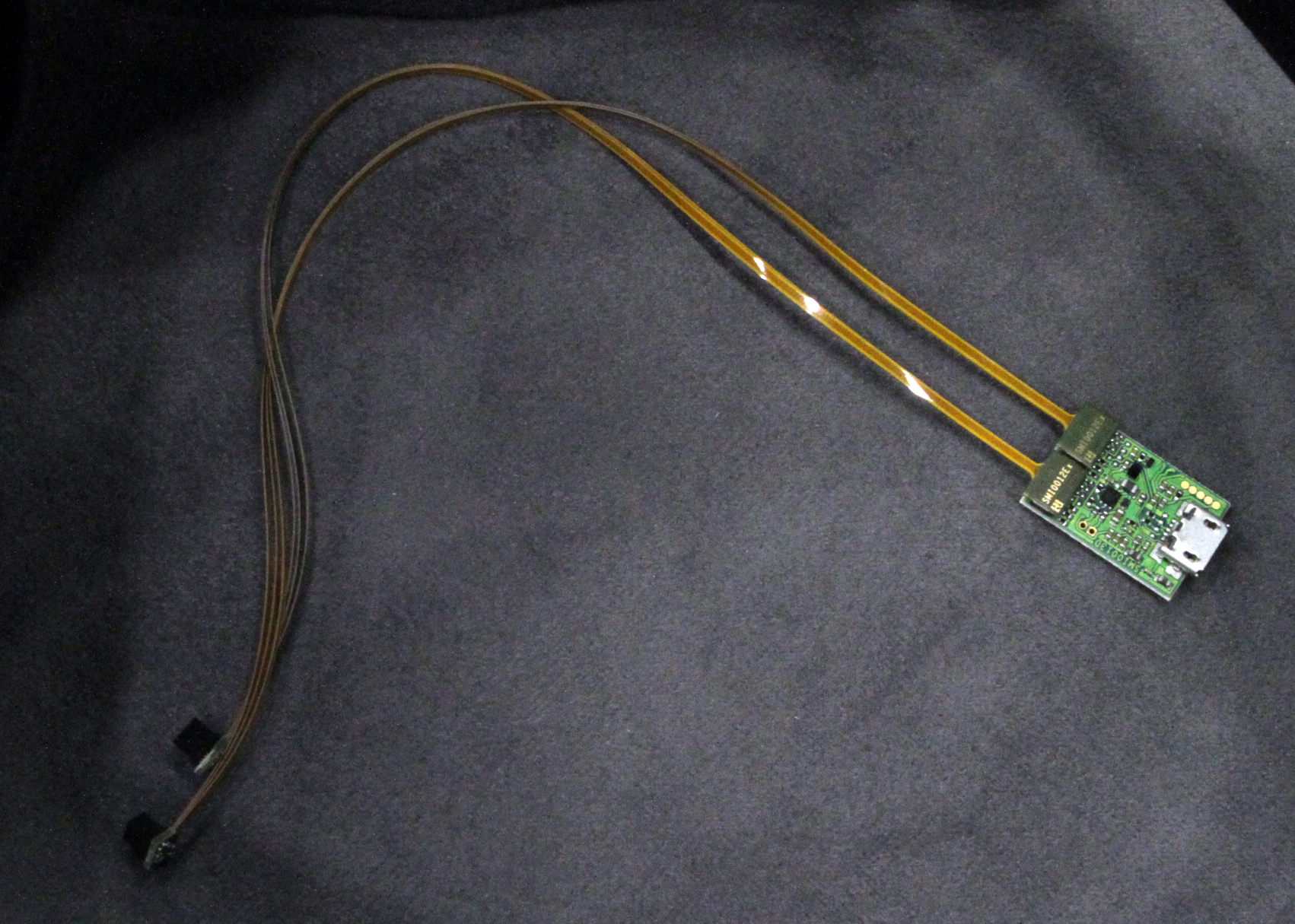

Further, we were frankly astonished to learn that the SMI hardware inside is exactly the same thing we saw a year ago at Mobile World Congress. It’s still that little PCB and two skinny wires with tiny cameras at the ends, it still offers a 250Hz tracking rate, and it still costs under $10 to implement.

We were not permitted to take photographs of the inside of the HMD, but we can confirm that the lenses were ringed with illuminators, which eye trackers need so they can get a clear look into your eyes.

We saw similar illuminators on the Vive HMD that had Tobii’s eye tracking, but we can’t confirm at this time whether SMI’s version used the same illuminators or merely similar ones.

Our educated guess is that they’re the same. It would make sense for Valve or HTC to build one illuminator ring and leave it at that. It’s really just about lighting and cameras: the lights illuminate the subject (in this case, your eyeballs) so the cameras can take better shots. Once the subject is well lit, it doesn’t matter much which camera is taking the picture--in this case, either SMI’s or Tobii’s.

Since we first published this article, SMI has confirmed that it made its own LED illuminator ring. A representative from SMI further noted that there are only so many ways to build such a ring, so even if Tobii and SMI made different illuminator rings, they're probably nearly identical.


Calibration

I should note that although calibrating SMI’s eye tracking is designed to be quick and easy, I had some issues getting it to “take.” All you have to do is follow a floating dot with your eyes for about five seconds. However, after my first calibration, the pink dot that tells you where your eyes are looking was jumping all over the place. We tried it a couple more times to no avail.

The problem, it turned out, was my glasses--specifically, when they fogged up. In VR, glasses-wearing is a constant issue for those of us with crummy vision. They really don’t fit well in any HMD, but they fit well enough most of the time. It’s kind of uncomfortable, but the alternative is that you have to either switch to contacts or just accept that (depending on your prescription) parts of the VR experience will be a little bit blurry.

But they also fog up sometimes.

I generally just have to pick an inconvenience and roll with it. Apparently, foggy glasses confuse SMI’s eye tracker. I pulled off the headset, removed my glasses, put the headset back on, ran the calibration, and voilà, it worked.

Input And Social Experiences

Once I donned the SMI-equipped Vive, I was taken through several demos. They were designed to demonstrate how you can use eye tracking as a selection tool, how it can enhance social interactions in VR, and how it can enable foveated rendering.

In the first demo, I was put into a virtual room with a couple of lamps and a desk littered with parts for a CPU cooler. I was tasked with assembling the CPU cooler, which was easier than it sounds. The parts were comically large, and the “assembly” was really just a matter of picking each part up in turn and making them touch one another, similar to the video game mechanics we’re all accustomed to seeing. But what was cool about the demo is that I just looked at each part to highlight it, which made it more intuitive to reach out and grab the item in question.

There were some Easter eggs in the room, too. I could look at one of the lamps and click a button on the controller to turn it on or off. This is a delightful and wonderfully intuitive method of input. You look at something, it gets “marked” or “highlighted” because the eye tracker knows what you’re looking at, and then you interact with it--whether that’s a button click, a locomotion command, or what have you.
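The look-then-click interaction can be sketched in a few lines. This is a minimal Python illustration under stated assumptions, not SMI's or Valve's code: it assumes the tracker supplies a gaze origin and direction in world space, treats each object as a single center point, and uses a hypothetical 3-degree tolerance to absorb tracker error. Whichever object sits closest to the gaze ray, within that tolerance, is the one that gets highlighted and that a controller click would act on.

```python
import math


def angle_between(u, v):
    """Angle in degrees between two 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0:
        raise ValueError("zero-length vector")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def pick_gazed_object(eye_pos, gaze_dir, objects, max_angle_deg=3.0):
    """Return the name of the object closest to the gaze ray, or None.

    objects: dict mapping a (hypothetical) object name to its center (x, y, z).
    max_angle_deg: hypothetical tolerance for tracker error, in degrees.
    """
    best_name, best_angle = None, max_angle_deg
    for name, center in objects.items():
        to_obj = tuple(c - e for c, e in zip(center, eye_pos))
        if not any(to_obj):
            continue  # object sits exactly at the eye; no meaningful direction
        angle = angle_between(gaze_dir, to_obj)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name


if __name__ == "__main__":
    objects = {"lamp": (2.0, 0.0, 5.0), "heatsink": (0.0, -0.5, 1.0)}
    eye = (0.0, 0.0, 0.0)
    print(pick_gazed_object(eye, (0.4, 0.0, 1.0), objects))  # → lamp
    print(pick_gazed_object(eye, (0.0, 0.0, 1.0), objects))  # → None
```

Picking by angle rather than by exact ray hit is a common choice because gaze estimates are never perfectly precise; a real engine would more likely test against object bounds.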

While in that particular demo, two other people joined me. We were all represented as cartoonish avatars, standing around the desk. However, the combination of eye tracking, head tracking, and hand tracking (via the controllers) gave each of us a distinct, recognizable presence.

It was also a reminder of how powerful VR can be for putting people who are not physically close to one another into the same virtual space. One of the two people who joined me was physically just a few feet away; the other was in Seattle. There were zero clues as to which person was where--no lag, no degraded image, nothing.

We were able to make virtual eye contact because of the SMI technology. It’s hard to overstate how important that is within social VR; it’s one thing to use an avatar to get a sense of another person’s hand and head movements, but it’s quite another when they can make eye contact.

Think about all your real social interactions: Eye contact tells you a great deal. Some people make no eye contact, some people make too much, some people are a little shifty with their eyes, some people are looking over your shoulder for someone better to talk to, and so on. With eye tracking in a headset and a social VR setting, you get all of that.

I saw even more of that in the next demo, which put me into a room with the same two people, where we sat around a table to play cards. In this environment, you could stand or sit; I was escorted to a virtual chair, and someone behind me in the real world gave me a physical chair to sit down on. (That process was a little weird, to be honest.)

Once seated, they showed me how you can blink, raise your eyebrows, cross your eyes, and more; the avatars reflected all of those actions.
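Animating an avatar's eyes from the tracker largely comes down to turning each eye's gaze vector into rotation angles for that eye. Here is a minimal Python sketch, assuming (hypothetically) a head-relative frame with +x right, +y up, and +z forward, and a made-up 35-degree clamp so a glitchy sample can't spin the avatar's eyes backward. Because each eye is converted separately, crossing your eyes shows up naturally as the two eyes rotating toward each other. Blinks and eyebrow raises would need additional signals that this sketch doesn't model, and we don't know how SMI's demo actually drove its avatars.

```python
import math

MAX_EYE_ROTATION_DEG = 35.0  # hypothetical clamp, not a value from SMI


def _clamp(angle, limit):
    """Limit an angle to the range [-limit, limit]."""
    return max(-limit, min(limit, angle))


def gaze_to_eye_angles(gaze_dir, limit=MAX_EYE_ROTATION_DEG):
    """Convert a head-relative gaze direction (x right, y up, z forward)
    into clamped (yaw, pitch) angles in degrees for one avatar eye."""
    x, y, z = gaze_dir
    yaw = math.degrees(math.atan2(x, z))                   # left/right
    pitch = math.degrees(math.atan2(y, math.hypot(x, z)))  # up/down
    return _clamp(yaw, limit), _clamp(pitch, limit)


if __name__ == "__main__":
    # Crossed eyes: the left eye turns right (+x), the right eye turns left (-x).
    left_eye = gaze_to_eye_angles((0.3, 0.0, 1.0))
    right_eye = gaze_to_eye_angles((-0.3, 0.0, 1.0))
    print(left_eye, right_eye)
```

Head tracking supplies the head's orientation separately; the avatar's final eye direction is the head rotation combined with these per-eye angles.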

Foveated Rendering

In the final demo, I stood in a dark room and was greeted by a grid of floating cubes that extended infinitely in all directions. The cubes were translucent and rainbow-colored. Someone manning the demo toggled foveated rendering on and off. I tried to trick the system by focusing on what was happening in my peripheral vision. Foveated rendering draws only the area you’re looking at in full detail and skimps on everything in your periphery, so the edges should have been the giveaway.

But I couldn’t sneak a peek, because, well, that’s exactly how eye tracking works: wherever my eyes went, the fully rendered region followed. Conclusion: SMI’s foveated rendering within the Vive works as advertised, at least in this demo.
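To make that concrete, foveated rendering chooses a render quality for each part of the image based on its angular distance (eccentricity) from where you're looking. Below is a minimal Python sketch with hypothetical tiers (full resolution within 5 degrees of the gaze point, half within 15, a quarter beyond) and a flat small-angle approximation for eccentricity; SMI and Valve didn't share their actual parameters. The usage lines show why peeking fails: the moment your gaze lands on the periphery, that region becomes the full-resolution one. At the 250Hz rate mentioned earlier, the gaze estimate refreshes every 4 ms.

```python
import math

# Hypothetical tiers: (max eccentricity in degrees, fraction of full resolution).
FOVEATION_TIERS = [(5.0, 1.0), (15.0, 0.5), (math.inf, 0.25)]


def eccentricity_deg(gaze_deg, point_deg):
    """Angular distance between the gaze point and a screen location, both as
    (horizontal, vertical) angles in degrees (small-angle approximation)."""
    return math.hypot(point_deg[0] - gaze_deg[0], point_deg[1] - gaze_deg[1])


def resolution_scale(gaze_deg, point_deg, tiers=FOVEATION_TIERS):
    """Return the fraction of full resolution used to render point_deg."""
    ecc = eccentricity_deg(gaze_deg, point_deg)
    for max_ecc, scale in tiers:
        if ecc <= max_ecc:
            return scale
    return tiers[-1][1]


if __name__ == "__main__":
    periphery = (30.0, 0.0)
    print(resolution_scale((0.0, 0.0), periphery))   # → 0.25 (looking straight ahead)
    print(resolution_scale((30.0, 0.0), periphery))  # → 1.0 (looking right at it)
```

Real implementations typically vary shading rate or resolution per screen tile rather than per point, but the eccentricity-to-quality lookup works the same way.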

SMI has come a long way in a year. When we first met the company and its technology, it was at an event at Mobile World Congress. They had a small table in a big ballroom where other smaller outfits that didn’t have a booth presence in the main convention halls gathered to show their wares to media.

At GDC, SMI was in Valve’s exclusive showcase area showing off the tech in a specially modified Vive. We don’t know when we’ll see a shipping headset with SMI’s eye trackers, but it will probably be toward the end of this year.

Update, 3/3/17, 4:28pm PT: Added a note about the illuminators after hearing back from SMI.

Update, 3/4/17, 8:35am PT: An earlier version of this article misstated the calibration issue we had. SMI reached out to clarify that the glasses were not the issue--it was the fact that they became foggy.

Seth Colaner previously served as News Director at Tom's Hardware. He covered technology news, focusing on keyboards, virtual reality, and wearables.

-

NuiUser Anyone done any calculations on the dangers of having this many (how many?) IR LEDs that close to the eye? I know Tobii lists on their website that "it's safe" (with no proof).

I'm not talking about cancer, but damage to the eye from intensity - this is tricky: it isn't about Thermal (IR), but about regular absorption by the molecules in the Rods/Cones (eg, standard IR vibrations). There are studies from years ago saying that IR from cameras at a distance of ~0.5m (your webcam) are safe, but this is right at your eye! Even if pulsed (which it presumably is), it doesn't seem safe! Again, the difference is the fall-off of power with distance - a single strong LED far from the eye will be safe, but how many are they using here, and at what (time averaged) power?

WhyAreYou Awesome articles, I feel like VR might be dangerous to the eyes, but definitely something to use as like an occasional gaming treat, don't wanna get dizzy :)

JeffKang (replying to NuiUser):

Tobii went from the EyeX (Backlight Assisted Near Infrared: NIR 850nm + red light (650nm)) to the 4C (Near Infrared (NIR 850nm) only).

I don't know what that means.

I think that you're supposed to pulse the infrared to keep it safe.

That's why I'm looking forward to MIT and U. Georgia's GazeCapture eyetracking.

They use crowd-sourcing, deep-learning, and ordinary cameras.

There's a GazeCapture app where you can submit data so that their eye-tracking can keep improving its accuracy.

Arne42 "The problem, it turned out, was my glasses--specifically, when they fogged up."

Try heating your lenses with a hairdryer before putting on the HMD. The basic idea is to get the temperature of the lenses higher than that of the surrounding environment. Of course, this adds another cumbersome step to putting on the headset, one that HMD manufacturers could solve.

We have a chilly lab and this "was" constantly an issue.

scolaner (replying to Arne42):

Good tip, thanks! An easier solution would be for me to wear contacts, but... I don't wanna!

scolaner (replying to JeffKang):

That's quite interesting. I wonder if this will lead to new developments in optics and displays...