Supermicro 1023US-TR4 Review: Powerful Performance in a Slim 1U Package

Firing up AMD EPYC and Intel Xeon platforms

Supermicro's 1023US-TR4 is a slim 1U dual-socket server designed for high-density compute environments in high-end cloud computing, virtualization, and enterprise applications. With support for AMD's EPYC 7001 and 7002 processors, the server can host up to two 64-core EPYC Rome processors, cramming 128 cores and 256 threads into one slim chassis.

We're on the cusp of Intel's Ice Lake and AMD's EPYC Milan launches, which promise to reignite the fierce competition between the long-time x86 rivals. In preparation for the new launches, we've been working on a new set of benchmarks for our server testing, and that's given us a pretty good look at the state of the server market as it stands today.

We used the Supermicro 1023US-TR4 server for EPYC Rome testing, and we'll focus on examining the platform in this article. Naturally, we'll add in Ice Lake and EPYC Milan testing as soon as those chips are available. In the meantime, here's a look at some of our new benchmarks and the current state of the data center CPU performance hierarchy in several hotly-contested price ranges.

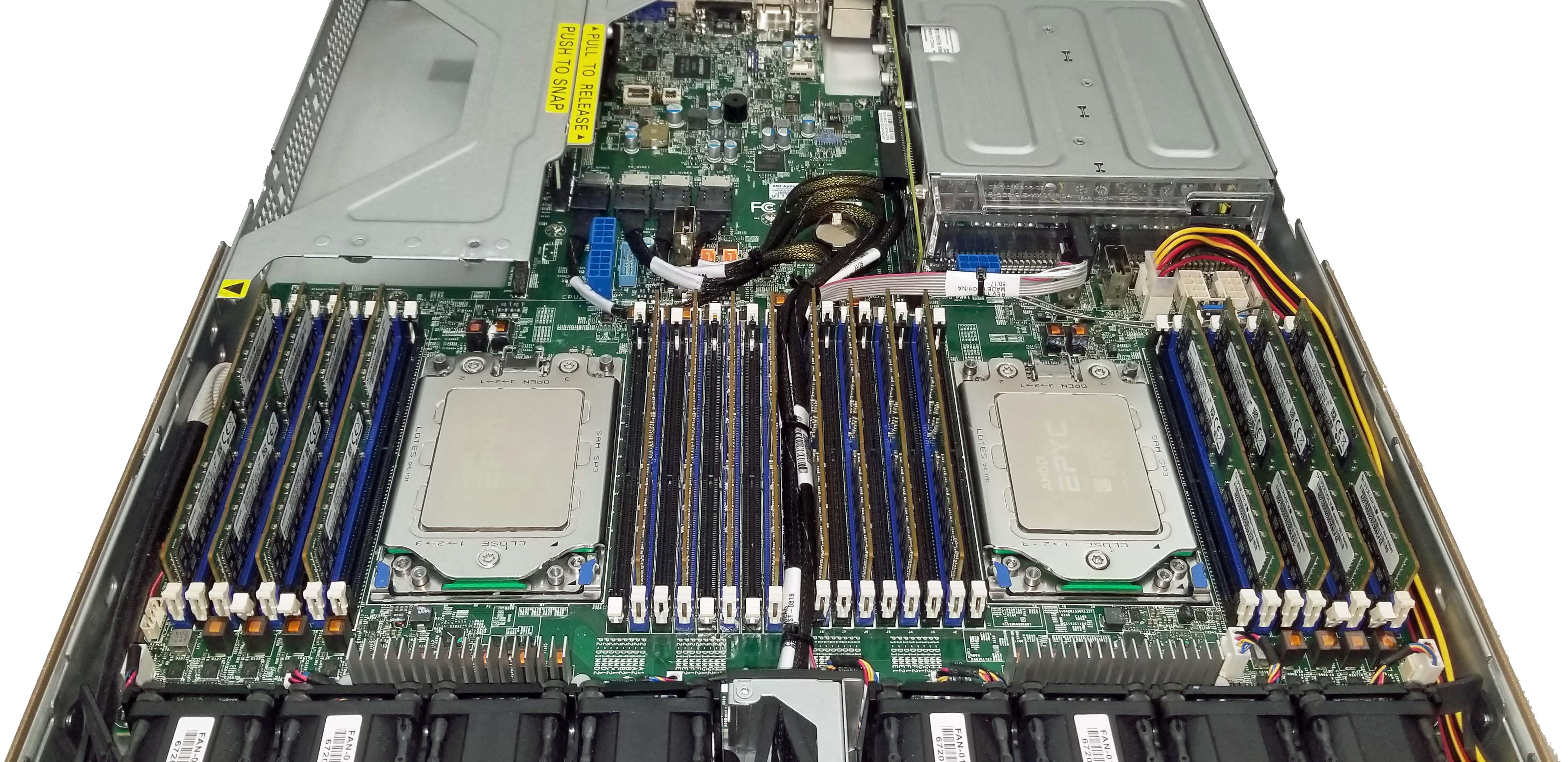

Inside the Supermicro 1023US-TR4 Server

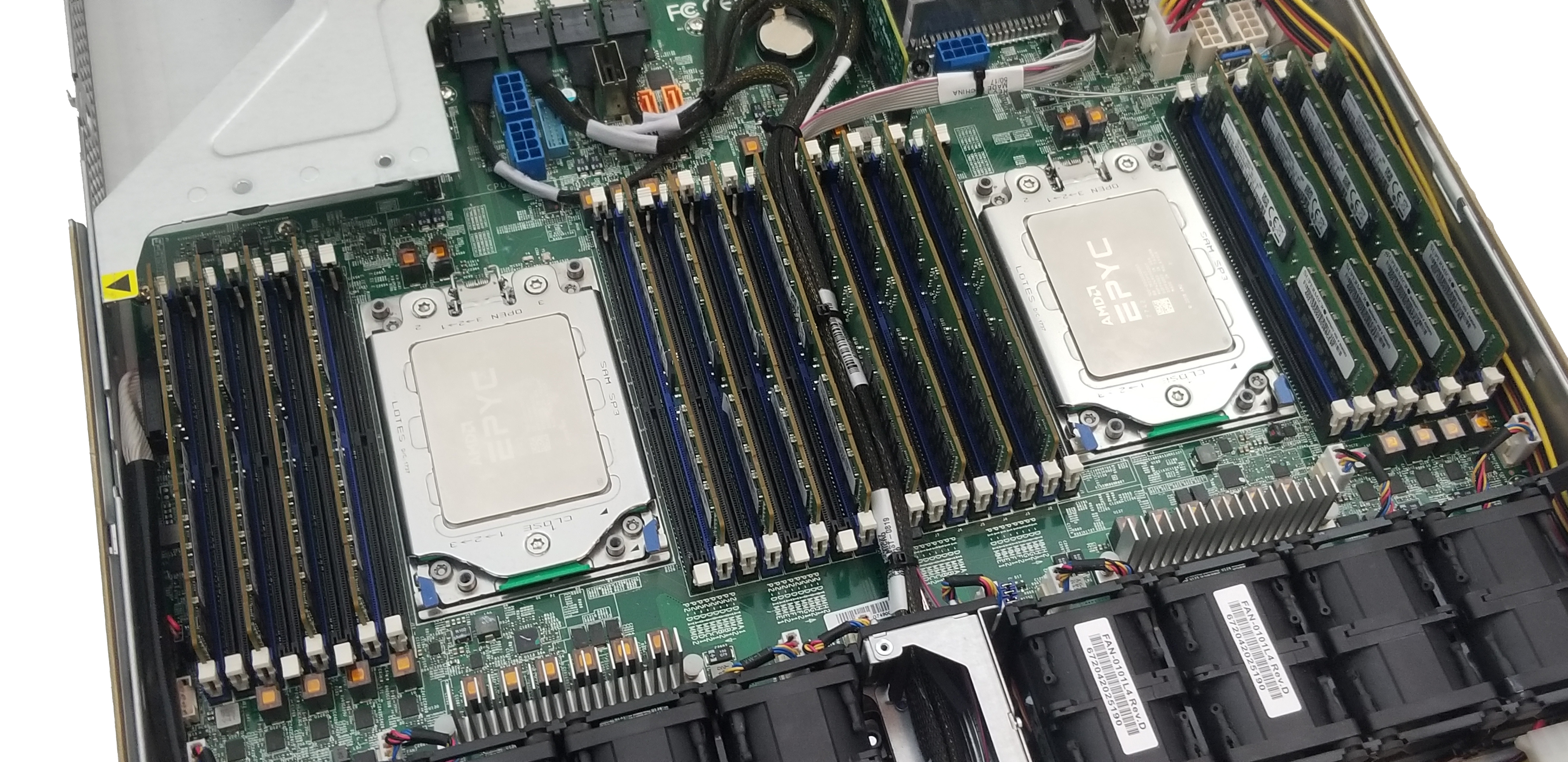

The Supermicro 1023US-TR4 server comes in the slim 1U form factor. And despite its slim stature, it can host an incredible amount of compute horsepower under the hood. The server supports AMD's EPYC 7001 and 7002 series chips, with the latter series topping out at 64 cores apiece, which translates to 128 cores and 256 threads spread across the dual sockets.
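The headline core and thread counts follow directly from the socket count, the 64-core ceiling of the EPYC 7002 series, and two-way simultaneous multithreading (SMT). A minimal sketch of that arithmetic, assuming SMT is enabled; the function name is illustrative only:

```python
from typing import Tuple

def core_thread_totals(sockets: int, cores_per_socket: int, threads_per_core: int = 2) -> Tuple[int, int]:
    """Return (total physical cores, total hardware threads) for a multi-socket server."""
    cores = sockets * cores_per_socket
    return cores, cores * threads_per_core

# Two SP3 sockets, each holding a 64-core EPYC 7002 chip with two threads per core (SMT).
cores, threads = core_thread_totals(sockets=2, cores_per_socket=64)
print(f"{cores} cores / {threads} threads")  # 128 cores / 256 threads
```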

Support for the 7002 series chips requires a 2.x board revision, and the server can accommodate CPU cTDPs up to 280W. That means it can handle the beefiest of EPYC chips, which is currently the 64-core EPYC 7H12 with a 280W TDP.

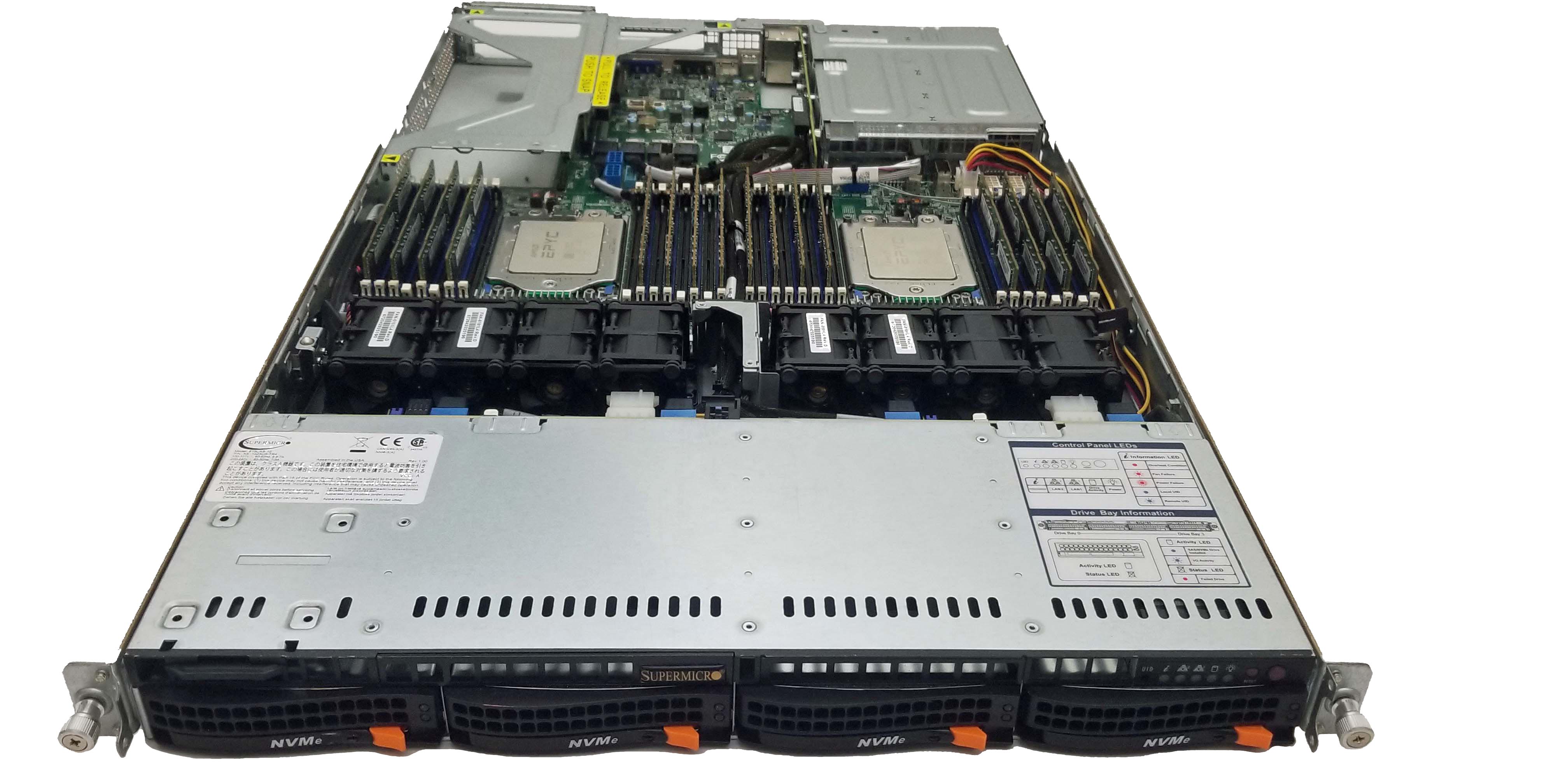

The server has a tool-less rail mounting system that eases installation into server racks, and the CSE-819UTS-R1K02P-T chassis measures 1.7 x 17.2 x 29 inches, ensuring broad compatibility with standard 19-inch server racks.

The front panel comes with the standard indicator lights: a unit identification (UID) light that helps with locating the server in a rack, plus drive activity, power, and status lights (the status light indicates fan failures or system overheating), and two LAN activity LEDs. Power and reset buttons sit at the upper right of the front panel.


By default, the system comes with four tool-less 3.5-inch hot-swap SATA 3 drive bays, but you can configure the server to accept four NVMe drives on the front panel and an additional two M.2 drives internally. You can also add an optional SAS card to enable support for SAS storage devices. The front of the system also houses a slide-out service/asset tag identifier card at the upper left.

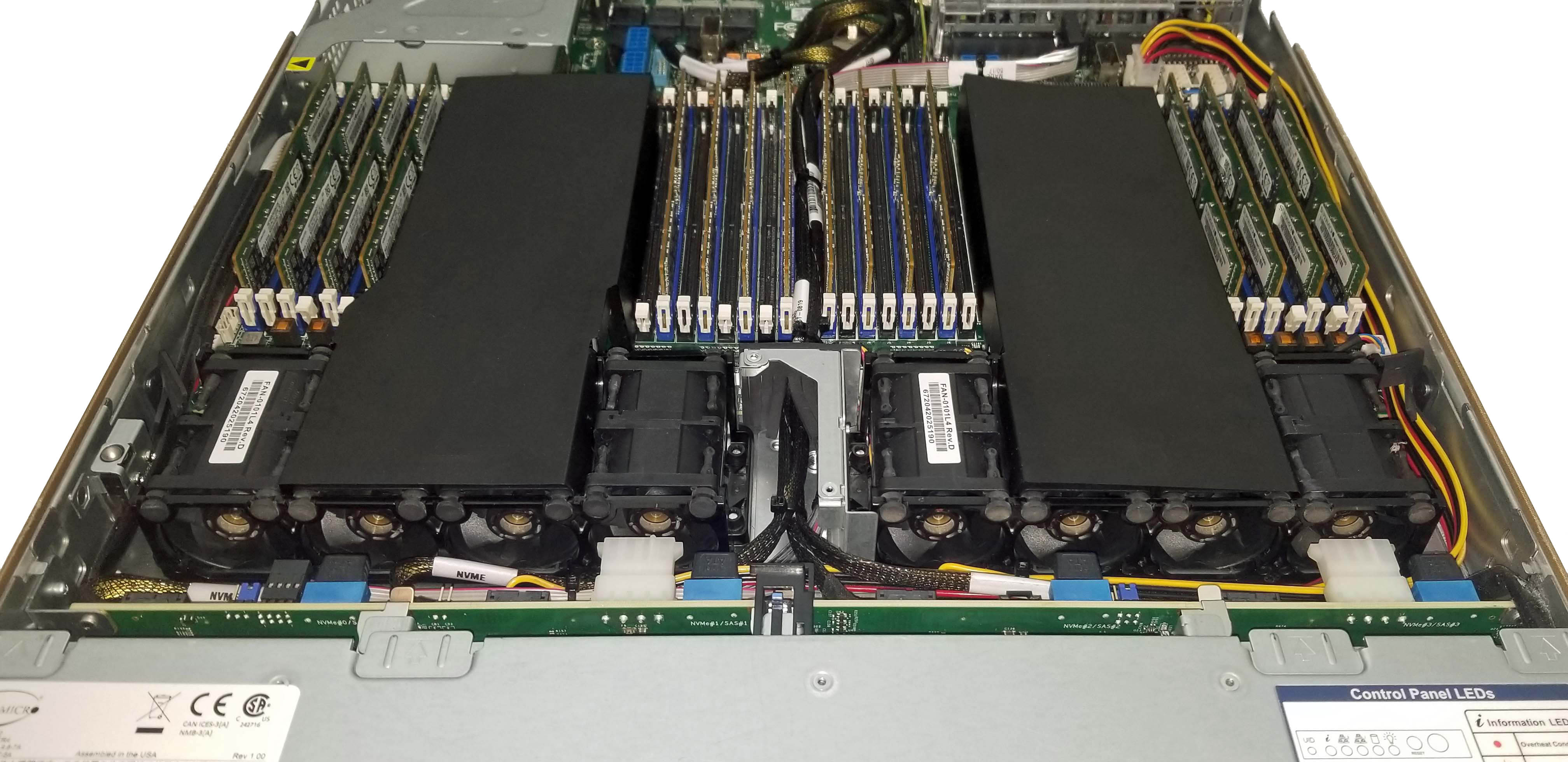

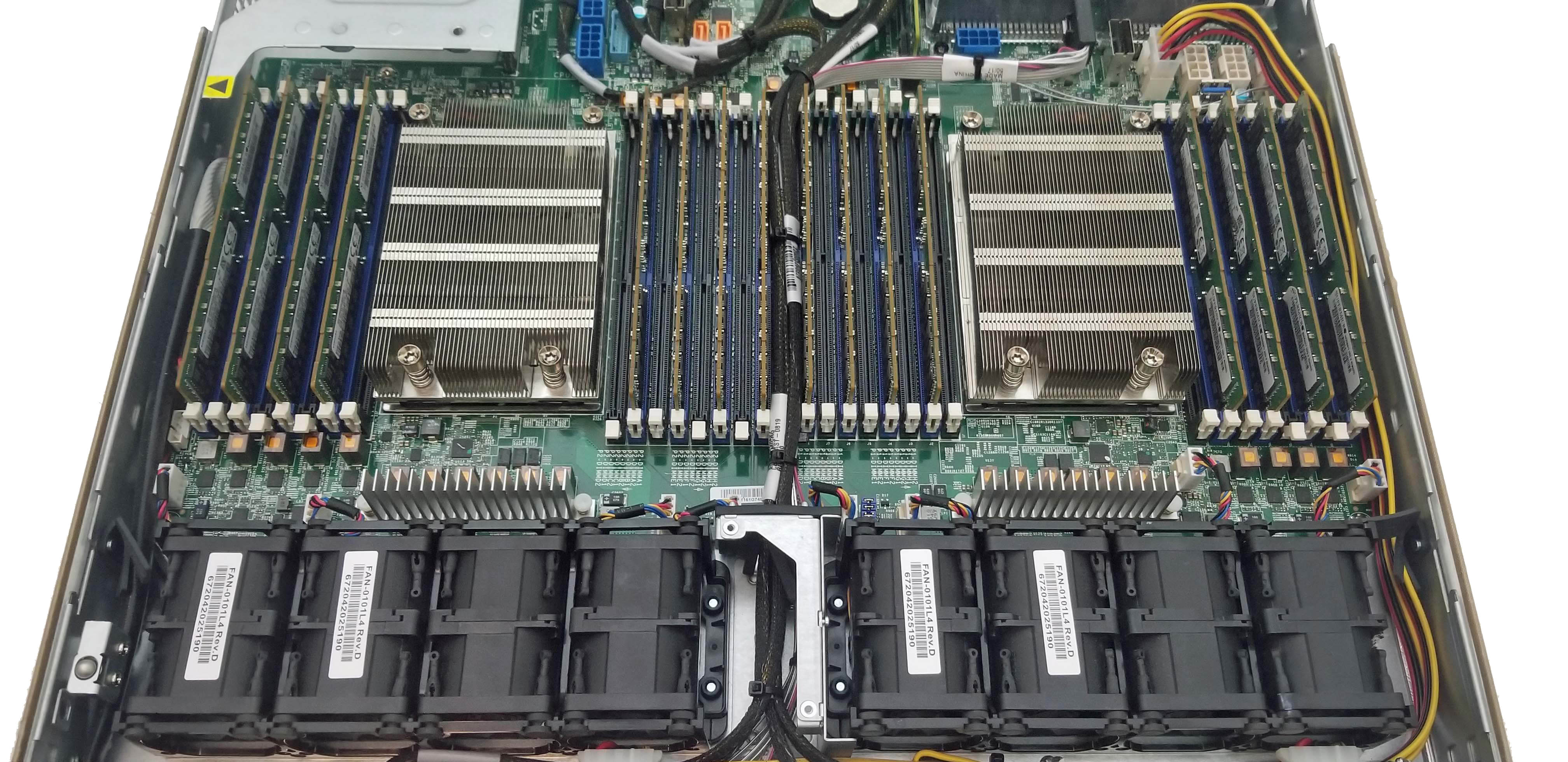

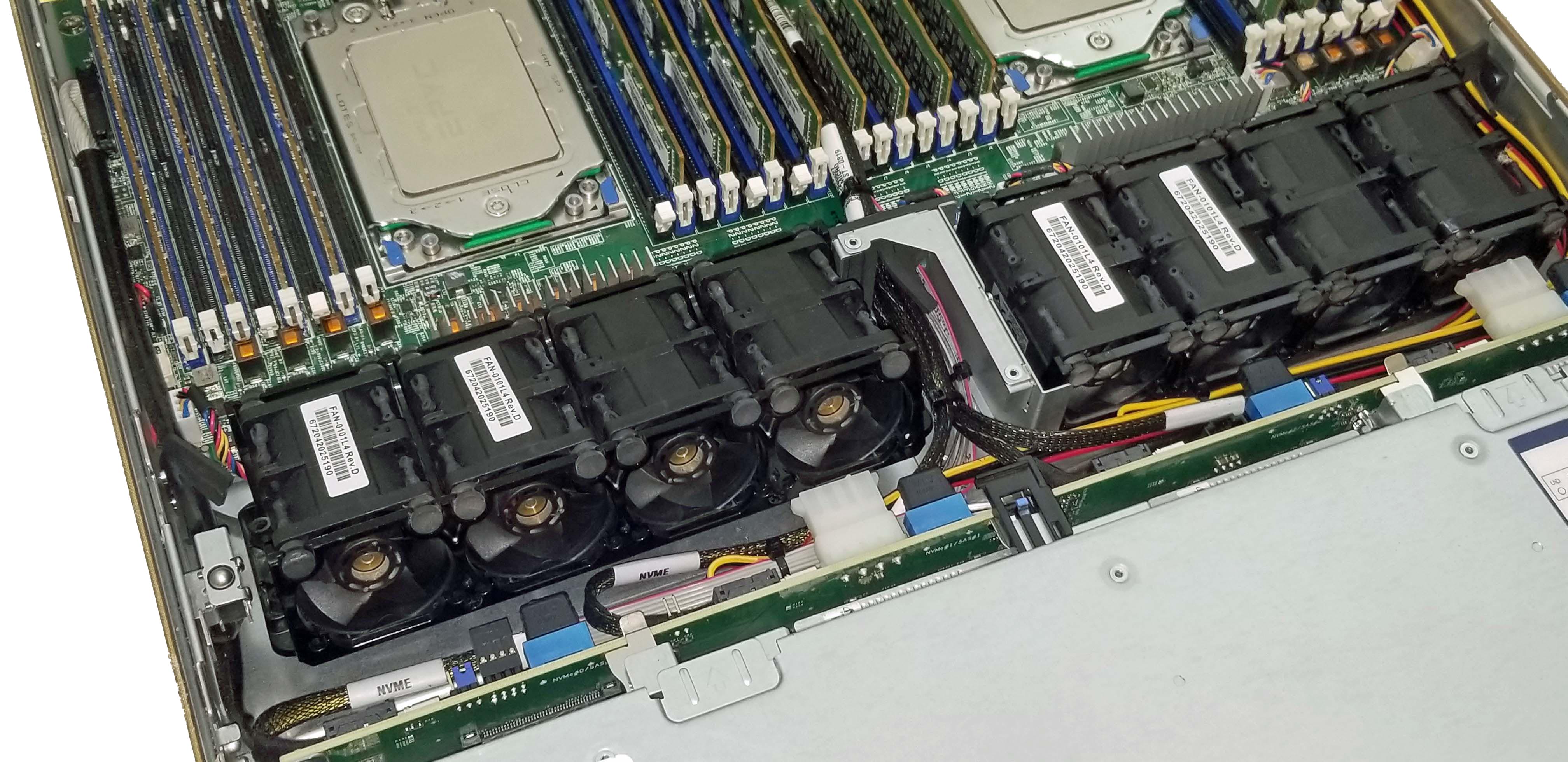

Popping the top off the chassis reveals two shrouds that direct air from the two rows of hot-swappable fans. A total of eight fan housings feed air to the system, and each housing includes two counter-rotating 4cm fans for maximum static pressure and reduced vibration. As expected with servers intended for 24/7 operation, the system can continue to function in the event of a fan failure. However, the remainder of the fans will automatically run at full speed if the system detects a failure. Naturally, these fans are loud, but that's not a concern for a server environment.

Two fan housings are assigned to cool each CPU, and a simple black plastic shroud directs air to the heatsinks underneath. Dual SP3 sockets house both processors, and they're covered by standard heatsinks that are optimized for linear airflow.

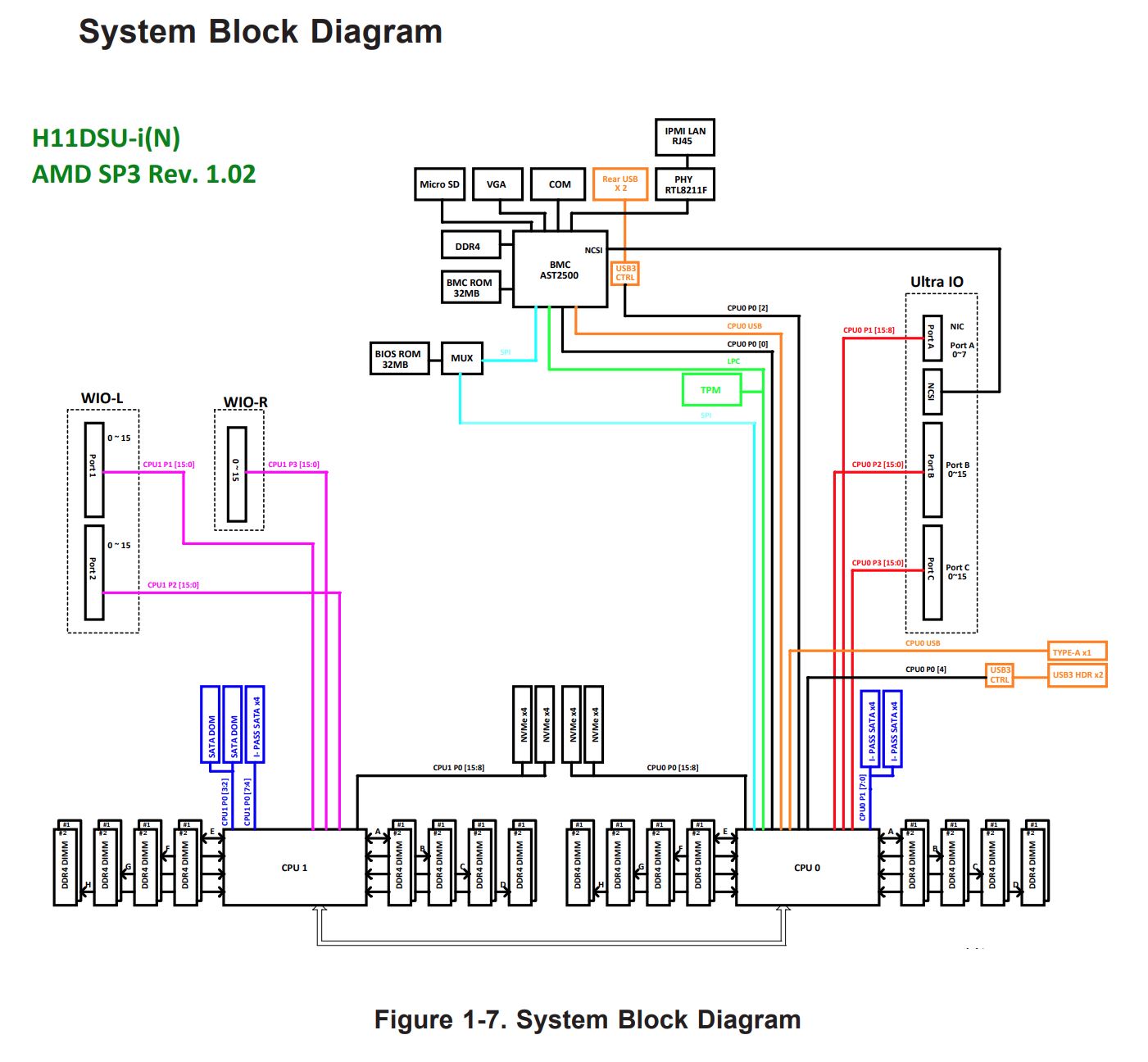

Sixteen memory slots flank each processor, for a total of 32 slots that support up to 4TB of registered ECC DDR4-2666 with EPYC 7001 processors, or an incredible 8TB of ECC DDR4-3200 memory (via 256GB DIMMs) with the 7002 models, easily outstripping the memory capacity available with competing Intel platforms.
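The capacity ceilings are simply slot count times DIMM size. A quick check, assuming all 32 slots are populated with identical modules; note that the 128GB module size for the EPYC 7001 ceiling is inferred from 4TB / 32 slots rather than stated in the spec:

```python
SOCKETS = 2
SLOTS_PER_SOCKET = 16

def max_capacity_tb(dimm_gb: int, slots: int = SOCKETS * SLOTS_PER_SOCKET) -> float:
    """Capacity with every slot filled, in TB (1 TB = 1024 GB, as DIMM sizes are quoted)."""
    return slots * dimm_gb / 1024

print(max_capacity_tb(128))  # 4.0 -> the EPYC 7001 ceiling implies 128GB DIMMs
print(max_capacity_tb(256))  # 8.0 -> the EPYC 7002 ceiling with 256GB DIMMs
```

The same function with `slots=16` and 32GB modules gives the 0.5TB (512GB) configuration used for testing.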

We tested the EPYC processors with 16x 32GB DDR4-3200 Samsung modules for a total memory capacity of 512GB. In contrast, we loaded down the Xeon comparison platform with 12x 32GB SK hynix DDR4-2933 modules, for a total capacity of 384GB of memory.

The H11DSU-iN motherboard's expansion slots consist of two full-height 9.5-inch PCIe 3.0 slots and one low-profile PCIe 3.0 x8 slot, all mounted on riser cards. An additional internal PCIe 3.0 x8 slot is also available, but this slot only accepts proprietary Supermicro RAID cards. All told, the system exposes a total of 64 lanes (16 via NVMe storage devices) to the user.
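One way the 64 user-facing lanes could add up is sketched below. The widths of the two full-height slots are not given above, so x16 each is an assumption (it happens to reconcile with the 64-lane total), and the four NVMe bays are assumed to be x4 each. The per-lane figure uses PCIe 3.0's 8 GT/s signaling with 128b/130b encoding:

```python
# Hypothetical breakdown: the full-height slots' widths are assumed, not quoted.
LANE_BUDGET = {
    "full-height slot 1 (assumed x16)": 16,
    "full-height slot 2 (assumed x16)": 16,
    "low-profile slot (x8)": 8,
    "internal RAID slot (x8)": 8,
    "four NVMe bays (assumed x4 each)": 4 * 4,
}

# PCIe 3.0: 8 GT/s per lane with 128b/130b encoding, roughly 0.985 GB/s per direction.
PCIE3_GBS_PER_LANE = 8 * (128 / 130) / 8

total_lanes = sum(LANE_BUDGET.values())
print(total_lanes)                                 # 64
print(round(total_lanes * PCIE3_GBS_PER_LANE, 1))  # 63.0 (GB/s per direction, theoretical)
```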

As one would imagine, Supermicro has other server offerings that expose more of EPYC's available 128 lanes to the user and also come with the faster PCIe 4.0 interface.

The rear I/O panel includes four gigabit RJ45 LAN ports powered by an Intel i350-AM4 controller, along with a dedicated IPMI port for management. Here we find the only USB ports on the machine, two USB 3.0 ports, along with a COM port and a VGA port.

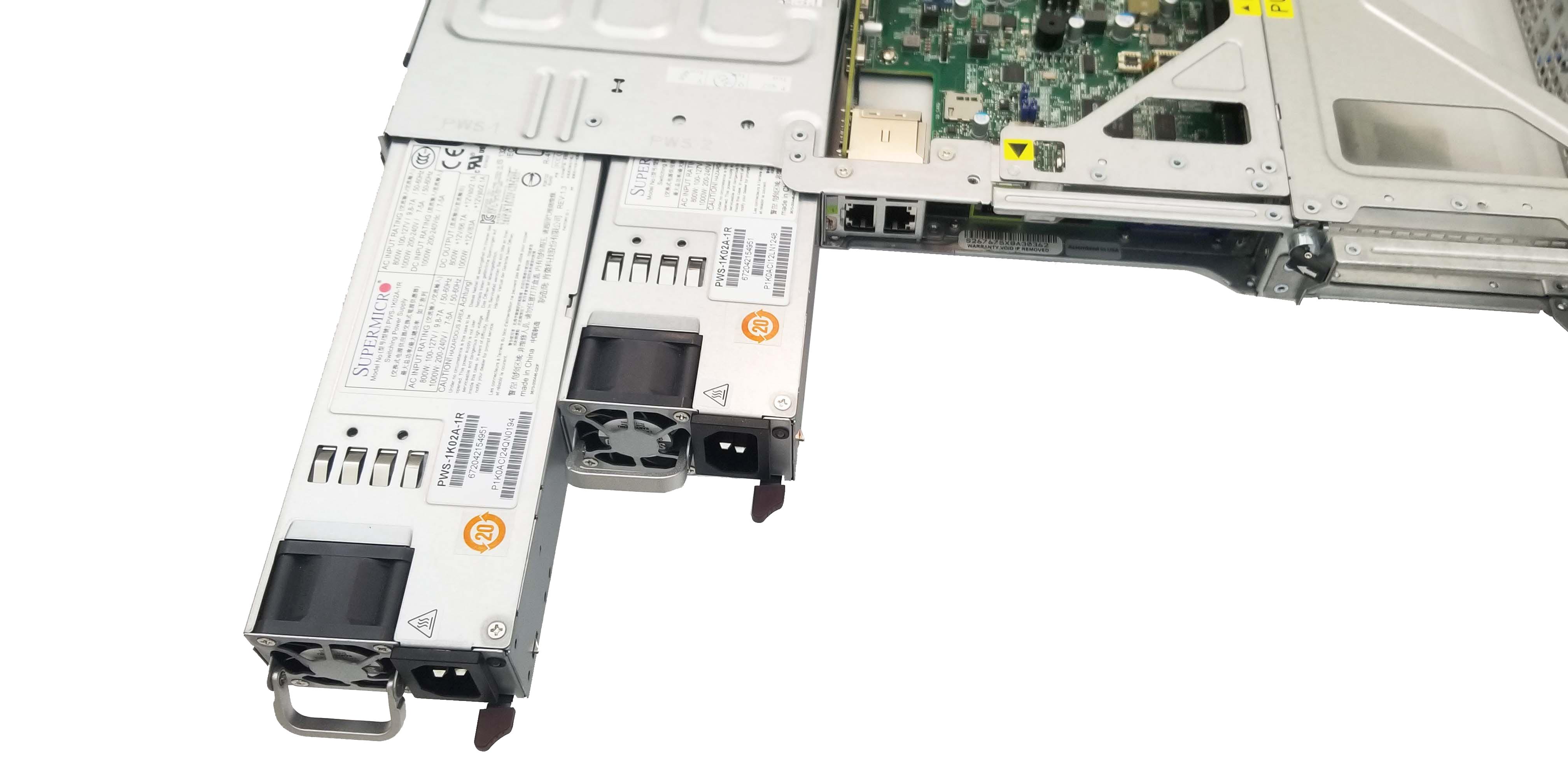

Two 1000W Titanium-Level (96%+) redundant power supplies provide power to the server, with automatic failover in the event of a failure, as well as hot-swappability for easy servicing.
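With redundant supplies, the budget that matters is a single 1000W unit, because the surviving supply must carry the whole system after a failover. A rough sketch, assuming two 280W-cTDP chips (the EPYC 7H12 ceiling mentioned earlier) and treating TDP as actual power draw, which is a simplification:

```python
PSU_RATING_W = 1000   # one supply must carry the whole load after a failover
CPU_TDP_W = 280       # highest supported cTDP (EPYC 7H12)
SOCKETS = 2
EFFICIENCY = 0.96     # Titanium-level figure; real efficiency varies with load

cpu_load_w = SOCKETS * CPU_TDP_W           # 560 W at full TDP
headroom_w = PSU_RATING_W - cpu_load_w     # 440 W left for fans, DIMMs, drives, cards
cpu_wall_draw_w = cpu_load_w / EFFICIENCY  # roughly 583 W pulled from the outlet

print(cpu_load_w, headroom_w, round(cpu_wall_draw_w))  # 560 440 583
```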

The BIOS is easy to access and use, while the IPMI web interface provides a wealth of monitoring capabilities and easy remote management that matches the type of functionality available with Xeon platforms. Among many options, you can update the BIOS, use the KVM-over-LAN remote console, monitor power consumption, access health event logs, monitor and adjust fan speeds, and monitor the CPU, DIMM, and chipset temperatures and voltages. Supermicro's remote management suite is polished and easy to use, which stands in contrast to other platforms we've tested.
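The same kinds of data the web interface exposes (temperatures, fan speeds, event logs, power readings) are typically also reachable over standard IPMI 2.0 with the open-source `ipmitool` CLI. The sketch below only assembles command lines; the BMC address and credentials are hypothetical, and `dcmi power reading` works only if the BMC implements DCMI, which we did not verify on this system:

```python
import subprocess
from typing import List

def ipmi_cmd(host: str, user: str, password: str, *args: str) -> List[str]:
    """Build an ipmitool argv for a BMC reached over IPMI 2.0 (lanplus interface)."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-P", password, *args]

# Hypothetical BMC address and credentials.
BMC = ("10.0.0.50", "ADMIN", "changeme")

QUERIES = {
    "temperatures": ("sdr", "type", "Temperature"),  # CPU, DIMM, and board sensors
    "fans": ("sdr", "type", "Fan"),
    "event log": ("sel", "list"),                    # system event (health) log
    "power": ("dcmi", "power", "reading"),           # requires DCMI support on the BMC
}

def run_query(name: str) -> str:
    """Run one query against the BMC and return ipmitool's text output."""
    argv = ipmi_cmd(*BMC, *QUERIES[name])
    return subprocess.run(argv, capture_output=True, text=True, check=True).stdout
```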

Test Setup

| Processor | Cores / Threads | 1K Unit Price | Base / Boost (GHz) | L3 Cache (MB) | TDP (W) |
|---|---|---|---|---|---|
| AMD EPYC 7742 | 64 / 128 | $6,950 | 2.25 / 3.4 | 256 | 225 |
| Intel Xeon Platinum 8280 | 28 / 56 | $10,009 | 2.7 / 4.0 | 38.5 | 205 |
| Intel Xeon Gold 6258R | 28 / 56 | $3,651 | 2.7 / 4.0 | 38.5 | 205 |
| AMD EPYC 7F72 | 24 / 48 | $2,450 | 3.2 / ~3.7 | 192 | 240 |
| Intel Xeon Gold 5220R | 24 / 48 | $1,555 | 2.2 / 4.0 | 35.75 | 150 |
| AMD EPYC 7F52 | 16 / 32 | $3,100 | 3.5 / ~3.9 | 256 | 240 |
| Intel Xeon Gold 6226R | 16 / 32 | $1,300 | 2.9 / 3.9 | 22 | 150 |
| Intel Xeon Gold 5218 | 16 / 32 | $1,280 | 2.3 / 3.9 | 22 | 125 |
| AMD EPYC 7F32 | 8 / 16 | $2,100 | 3.7 / ~3.9 | 128 | 180 |
| Intel Xeon Gold 6250 | 8 / 16 | $3,400 | 3.9 / 4.5 | 35.75 | 185 |

Here we can see the selection of processors we've tested for this review, though we use the Xeon Platinum 8280 as a stand-in for the less expensive Xeon Gold 6258R. These two chips are identical and provide the same level of performance; the difference boils down to the more expensive 8280's support for quad-socket servers, while the Xeon Gold 6258R tops out at dual-socket support.
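Because the table lists both core counts and 1K-unit prices, a price-per-core calculation puts the pricing gaps in perspective, including the roughly 2.7x premium the 8280 carries over the otherwise identical 6258R. This sketch just divides list price by cores for each chip in the table; it ignores clocks, cache, platform cost, and measured performance, so treat it as a rough lens rather than a value metric:

```python
# (cores, 1K-unit price in USD), copied from the processor table
CHIPS = {
    "EPYC 7742": (64, 6950),
    "Xeon Platinum 8280": (28, 10009),
    "Xeon Gold 6258R": (28, 3651),
    "EPYC 7F72": (24, 2450),
    "Xeon Gold 5220R": (24, 1555),
    "EPYC 7F52": (16, 3100),
    "Xeon Gold 6226R": (16, 1300),
    "Xeon Gold 5218": (16, 1280),
    "EPYC 7F32": (8, 2100),
    "Xeon Gold 6250": (8, 3400),
}

def price_per_core(name: str) -> float:
    """List price divided by physical core count, in USD per core."""
    cores, price = CHIPS[name]
    return price / cores

for name in sorted(CHIPS, key=price_per_core):
    print(f"{name:20s} ${price_per_core(name):7.2f}/core")

premium = CHIPS["Xeon Platinum 8280"][1] / CHIPS["Xeon Gold 6258R"][1]
print(f"8280 vs 6258R price ratio: {premium:.2f}x")
```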

| Server | Memory | Tested Processors |
|---|---|---|
| Supermicro AS-1023US-TR4 | 16x 32GB Samsung ECC DDR4-3200 | EPYC 7742, 7F72, 7F52, 7F32 |
| Dell/EMC PowerEdge R460 | 12x 32GB SK hynix DDR4-2933 | Intel Xeon 8280, 6258R, 5220R, 6226R, 6250 |

To assess performance with a range of different potential configurations, we used the Supermicro 1023US-TR4 server with four different EPYC Rome configurations. We outfitted this server with 16x 32GB Samsung ECC DDR4-3200 memory modules, ensuring that both chips had all eight memory channels populated.

We used a Dell/EMC PowerEdge R460 server to test the Xeon processors in our test group, giving us a good sense of performance with competing Intel systems. We equipped this server with 12x 32GB SK hynix DDR4-2933 modules, again ensuring that each Xeon chip's six memory channels were populated. These configurations give the AMD-powered platform a memory capacity advantage, but that is an unavoidable side effect of each platform's capabilities. As such, bear in mind that the memory capacity disparity may impact the results below.
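The two memory configurations can be checked with a little arithmetic: DIMMs per channel confirms full, balanced population, and channel count times transfer rate times the 8-byte DDR4 channel width gives the theoretical peak bandwidth per socket. These peaks are derived figures, not measurements, and sustained bandwidth is always lower; the class name is illustrative:

```python
from dataclasses import dataclass

@dataclass
class MemConfig:
    dimms: int
    dimm_gb: int
    sockets: int
    channels_per_socket: int
    mts: int  # DDR4 data rate in MT/s

    def capacity_gb(self) -> int:
        return self.dimms * self.dimm_gb

    def dimms_per_channel(self) -> float:
        return self.dimms / (self.sockets * self.channels_per_socket)

    def peak_gbs_per_socket(self) -> float:
        # Each DDR4 channel is 64 bits (8 bytes) wide; MT/s * 8 B / 1000 = GB/s.
        return self.channels_per_socket * self.mts * 8 / 1000

epyc = MemConfig(dimms=16, dimm_gb=32, sockets=2, channels_per_socket=8, mts=3200)
xeon = MemConfig(dimms=12, dimm_gb=32, sockets=2, channels_per_socket=6, mts=2933)

for label, cfg in (("EPYC", epyc), ("Xeon", xeon)):
    print(label, cfg.capacity_gb(), "GB,", cfg.dimms_per_channel(), "DIMM/channel,",
          round(cfg.peak_gbs_per_socket(), 1), "GB/s peak per socket")
```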

We used the Phoronix Test Suite for testing. This automated test suite simplifies running complex benchmarks in the Linux environment. Phoronix maintains the suite, which installs all needed dependencies, and its test library includes 450 benchmarks and 100 test suites (and counting). Phoronix also maintains openbenchmarking.org, an online repository for uploading test results into a centralized database. We used Ubuntu 20.04 LTS and the default Phoronix test configurations with the GCC compiler for all tests below. We also tested both platforms with all available security mitigations.
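For readers who want to reproduce a run, the suite is driven from its `phoronix-test-suite` command: `benchmark` runs a test interactively, and `batch-benchmark` runs unattended using answers saved earlier with `batch-setup`. A minimal wrapper sketch; the profile names `pts/build-linux-kernel` and `pts/build-llvm` are assumptions based on OpenBenchmarking.org naming, not a record of our exact invocations:

```python
import shutil
import subprocess
from typing import List

def pts_argv(test_profile: str, batch: bool = False) -> List[str]:
    """Build the Phoronix Test Suite command line for one test profile."""
    subcommand = "batch-benchmark" if batch else "benchmark"
    return ["phoronix-test-suite", subcommand, test_profile]

def run_pts(test_profile: str, batch: bool = False) -> int:
    """Run one profile and return the suite's exit code."""
    if shutil.which("phoronix-test-suite") is None:
        raise FileNotFoundError("phoronix-test-suite is not on PATH")
    return subprocess.run(pts_argv(test_profile, batch)).returncode

# Assumed profile names for the kernel and LLVM compile tests.
for profile in ("pts/build-linux-kernel", "pts/build-llvm"):
    print(" ".join(pts_argv(profile, batch=True)))
```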

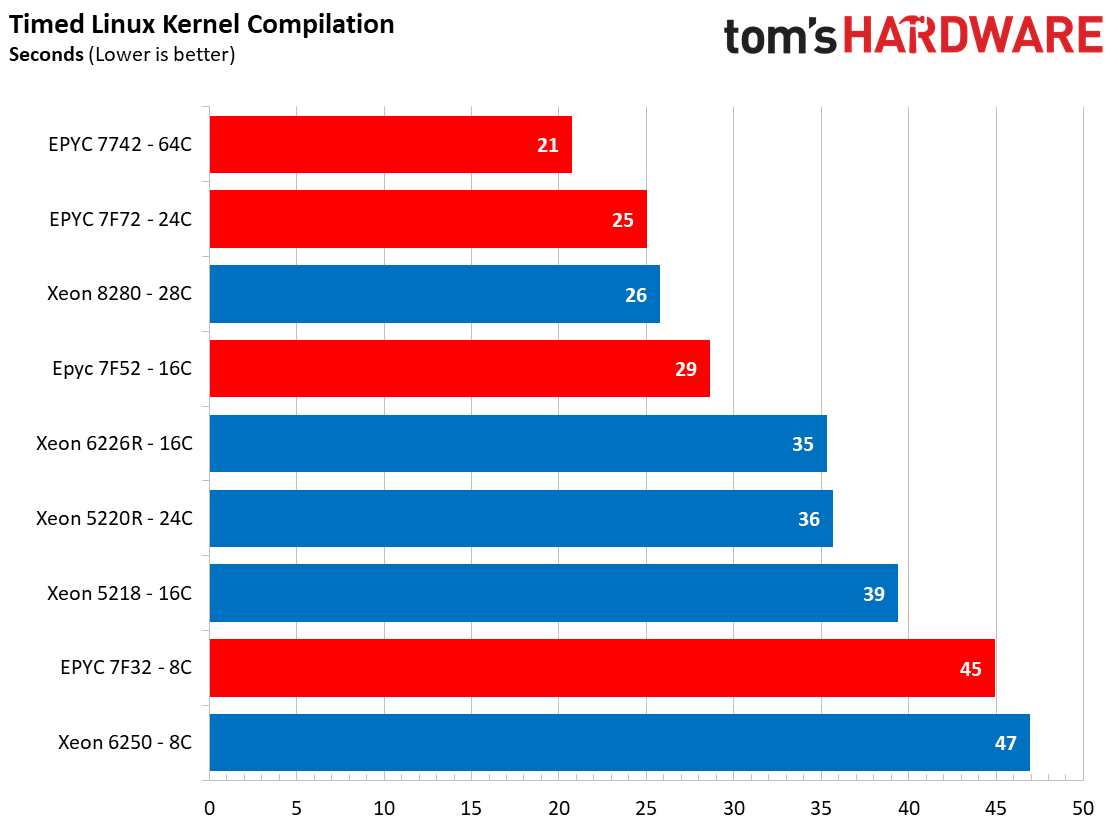

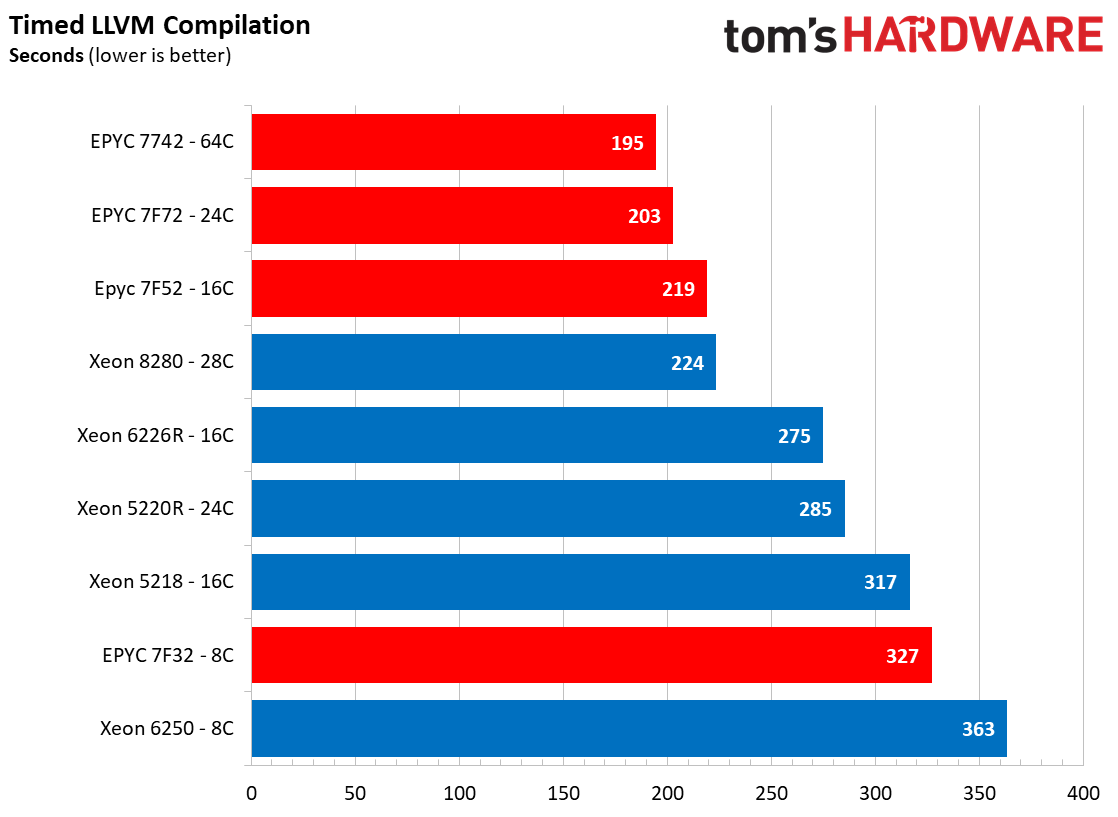

Linux Kernel and LLVM Compilation Benchmarks

We used the 1023US-TR4 for testing with all of the EPYC processors in the chart, and here we see the expected scaling in the timed Linux kernel compile test with the AMD EPYC processors taking the lead over the Xeon chips at any given core count. The dual EPYC 7742 processors complete the benchmark, which builds the Linux kernel at default settings, in 21 seconds. The dual 24-core EPYC 7F72 configuration is impressive in its own right — it chewed through the test in 25 seconds, edging past the dual-processor Xeon 8280 platform.
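The two kernel compile times quoted above show how far from linear the scaling is. Dividing the speedup by the ratio of core counts gives a rough scaling efficiency; this is not a controlled experiment, since the 7F72 runs at much higher clocks and a kernel build also has serial phases, but it shows why the 24-core part stays so close:

```python
from typing import Tuple

def scaling(t_big: float, cores_big: int, t_small: float, cores_small: int) -> Tuple[float, float, float]:
    """Return (speedup, core-count ratio, scaling efficiency) for two timed runs."""
    speedup = t_small / t_big          # lower time is better, so small-config time on top
    core_ratio = cores_big / cores_small
    return speedup, core_ratio, speedup / core_ratio

# Dual EPYC 7742 (128 cores): 21 s; dual EPYC 7F72 (48 cores): 25 s
speedup, core_ratio, efficiency = scaling(21, 128, 25, 48)
print(f"{speedup:.2f}x faster with {core_ratio:.2f}x the cores ({efficiency:.0%} scaling efficiency)")
# 1.19x faster with 2.67x the cores (45% scaling efficiency)
```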

AMD's EPYC delivers even stronger performance in the timed LLVM compilation benchmark, where the dual 24-core 7F72s beat the dual 28-core 8280s. Performance scaling is somewhat muted between the flagship 64-core 7742 and the 24-core 7F72, largely due to the latter's much higher base and boost frequencies. That impressive performance comes at the cost of a 240W TDP rating, but the Supermicro server handles the increased thermal output easily.

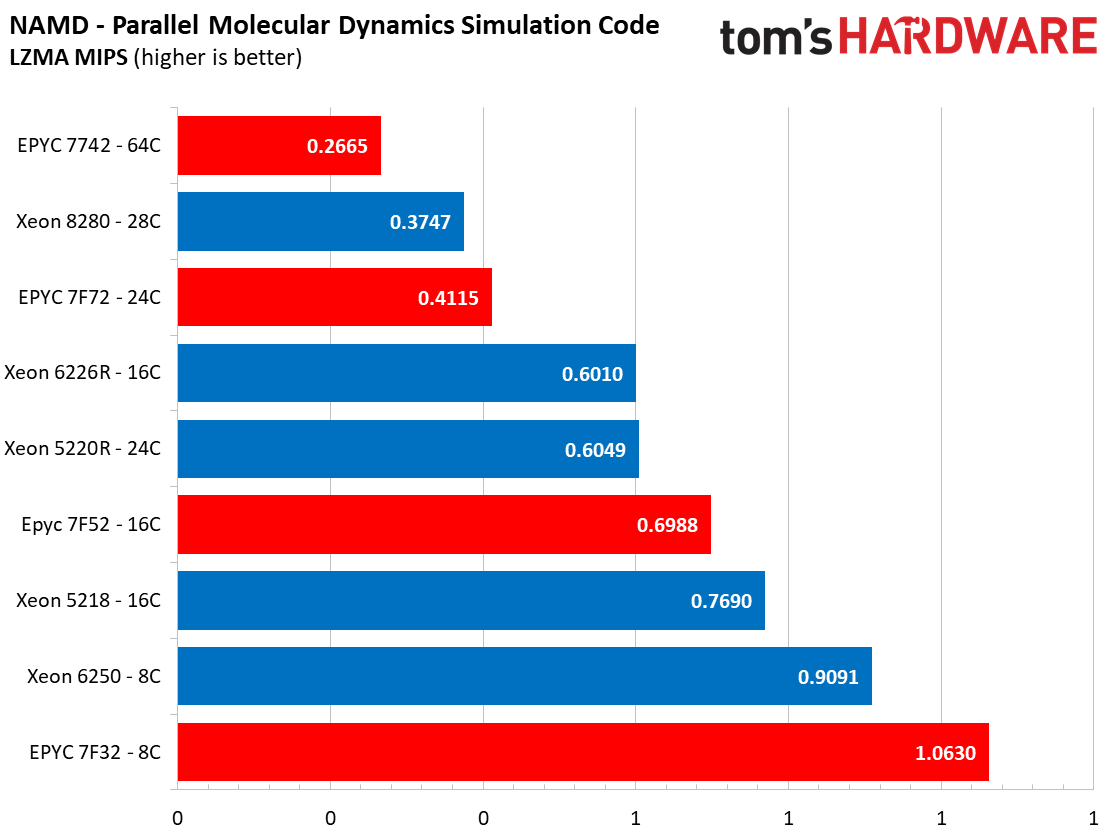

Molecular Dynamics and Parallel Compute Benchmarks

NAMD is a parallel molecular dynamics code designed to scale well with additional compute resources; it scales up to 500,000 cores and is one of the premier benchmarks used to quantify performance with simulation code. The EPYC processors are obviously well-suited for these types of highly-parallelized workloads due to their prodigious core counts, with the dual 7742 configuration completing the workload 28% faster than the dual Xeon 8280 setup.

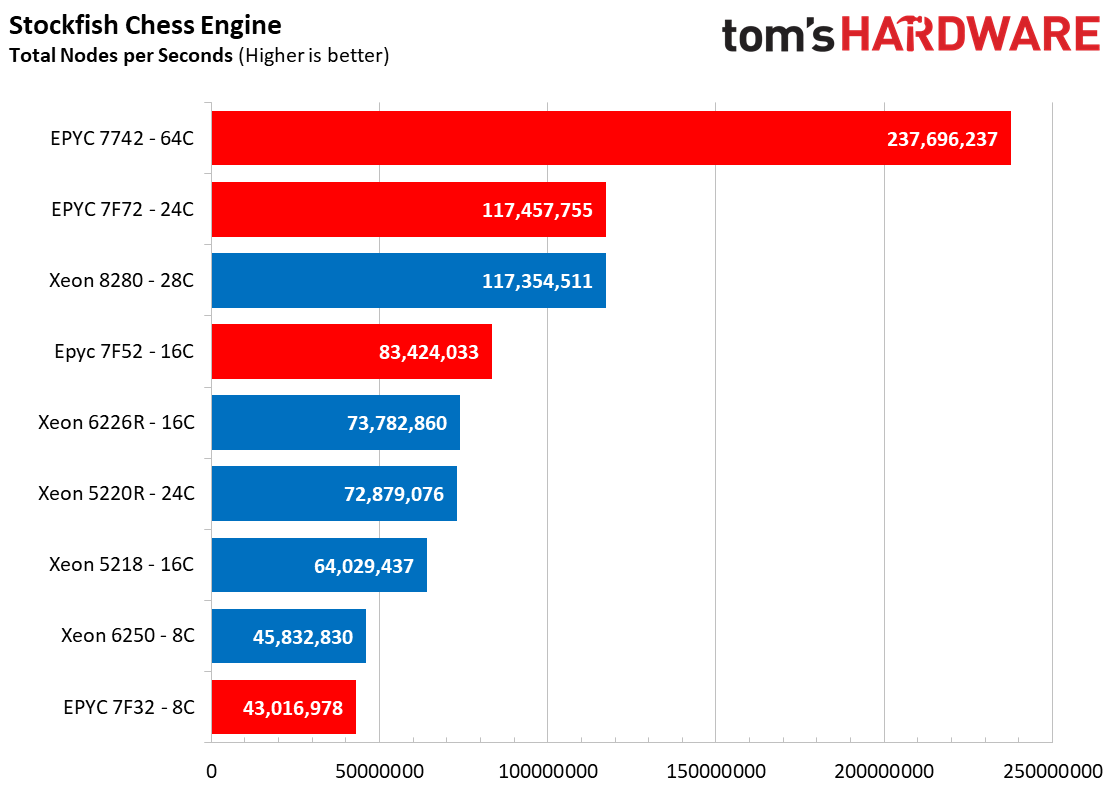

Stockfish is a chess engine designed for the utmost in scalability across increased core counts; it can scale up to 512 threads. This massively parallel code scales well with EPYC's leading core counts, but the dual 24-core 7F72s effectively tying the dual 28-core Xeon 8280s shows that the benchmark also responds well to the EPYC processors in general. The dual 16-core 7F52 configuration also beat out both of the 16-core Intel comparables. Intel does pull off a win, though, as the eight-core 6250s beat the 7F32s.

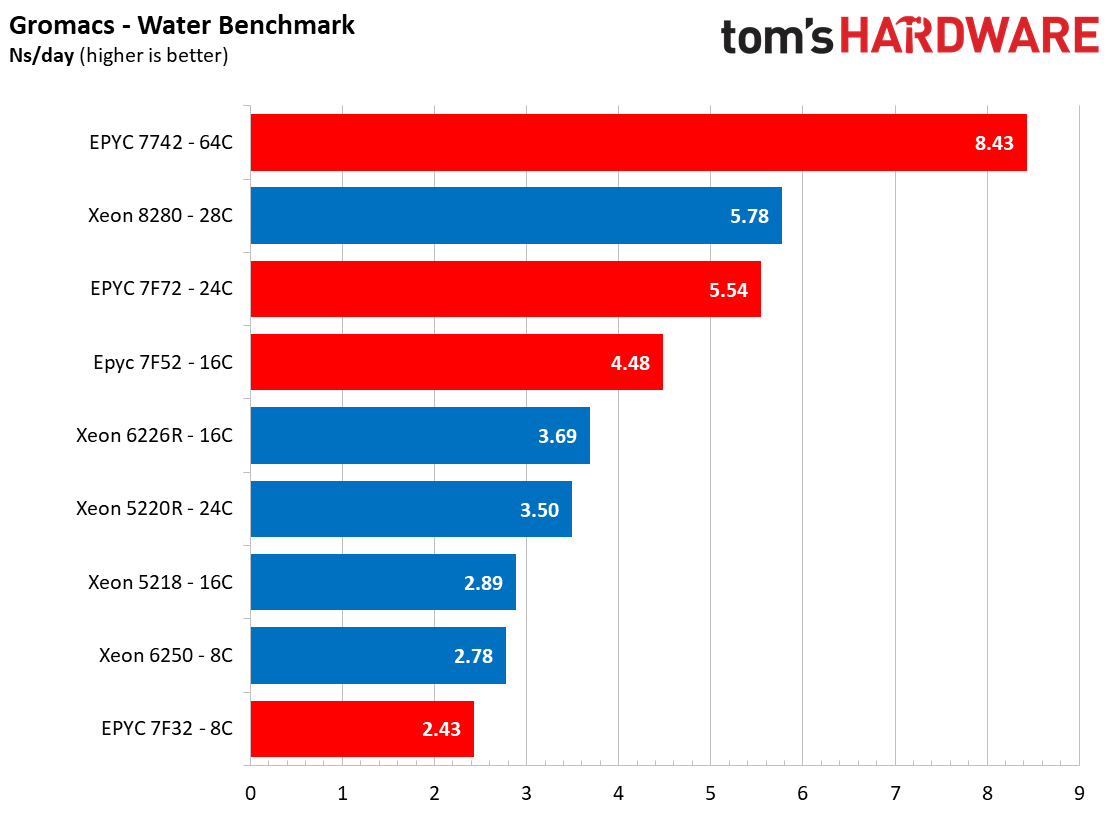

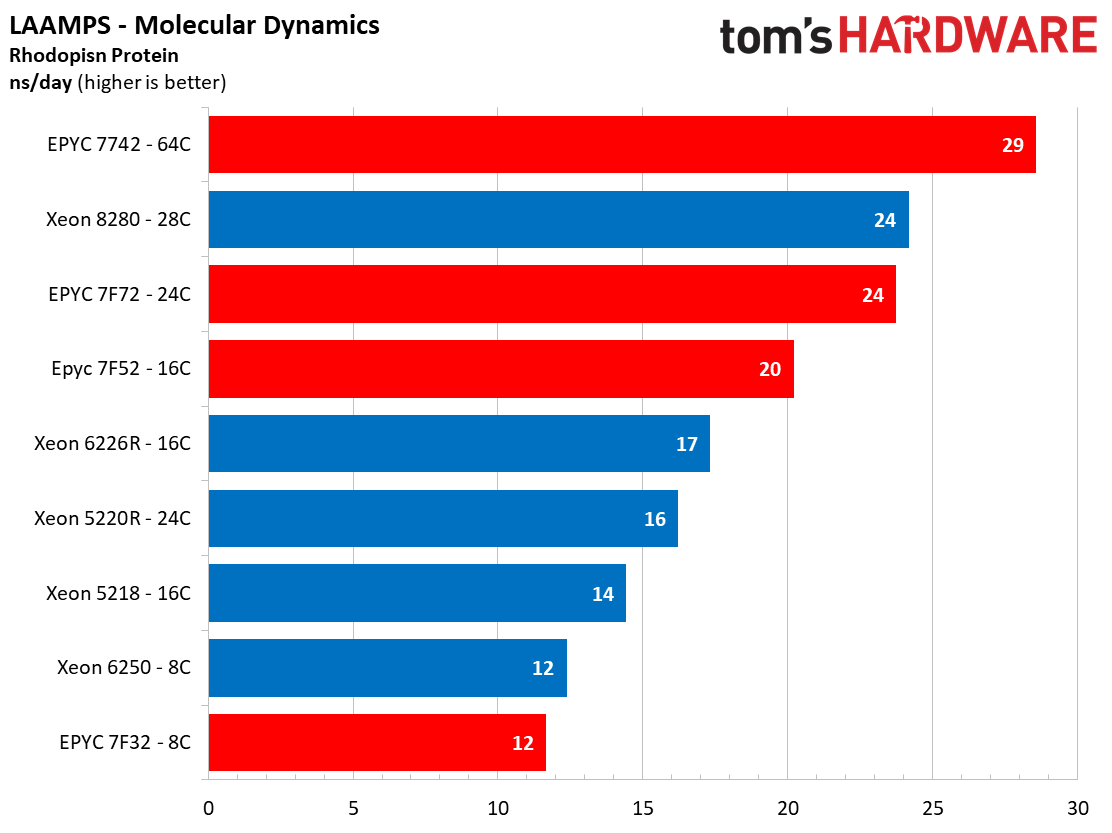

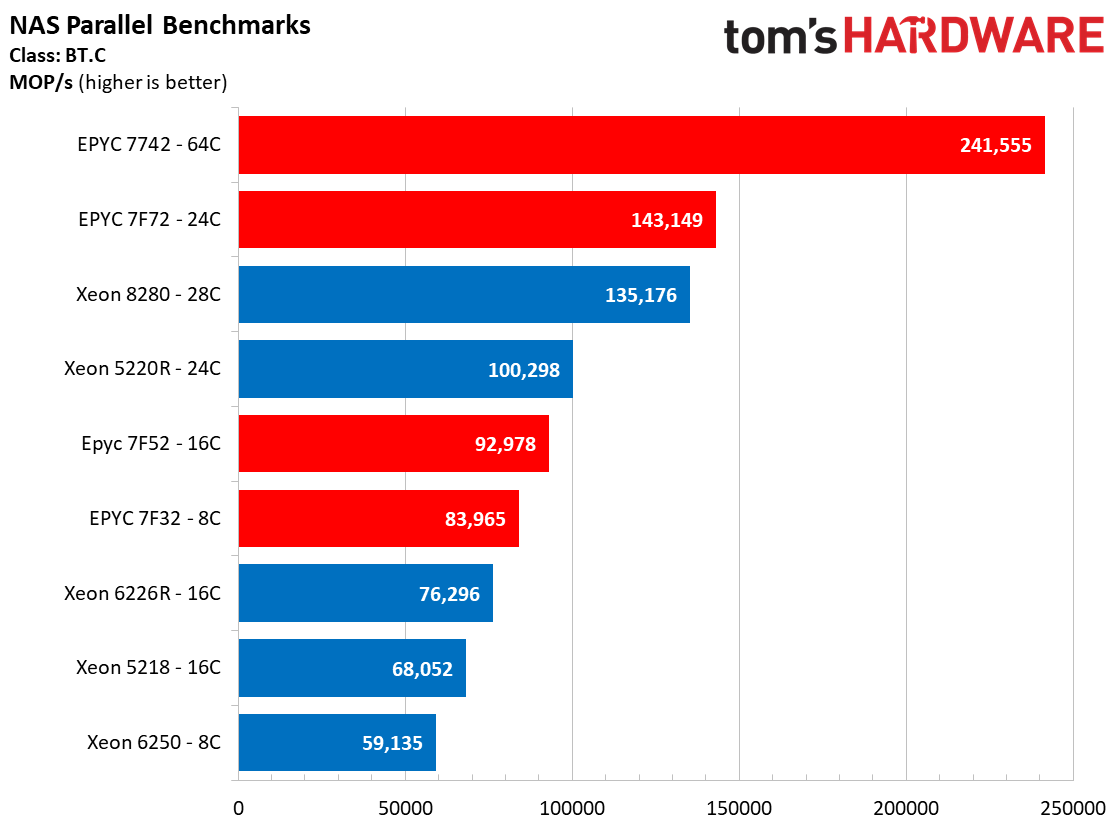

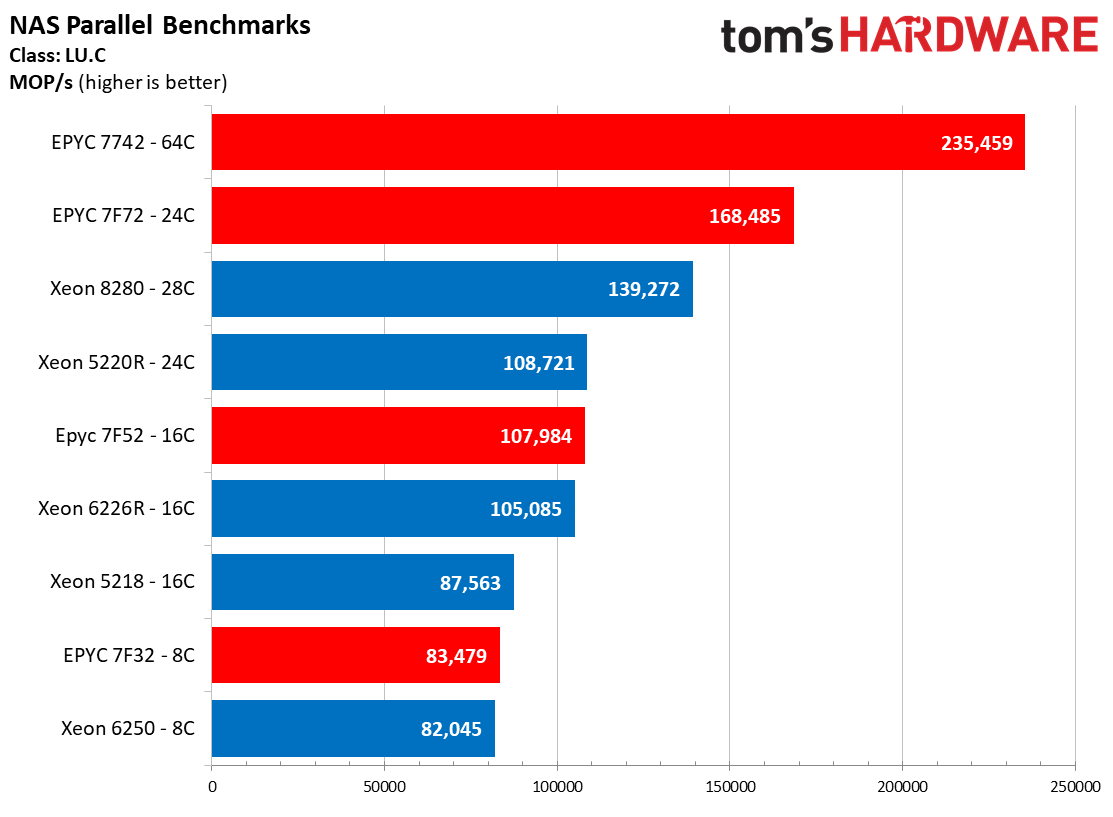

We see similarly impressive performance in other parallel workloads, like the Gromacs molecular dynamics water benchmark, which simulates Newtonian equations of motion with hundreds of millions of particles, and the NAS Parallel Benchmarks (NPB) suite. NPB characterizes Computational Fluid Dynamics (CFD) applications, and NASA designed it to measure performance from smaller CFD applications up to "embarrassingly parallel" operations. The BT.C test measures Block Tri-Diagonal solver performance, while the LU.C test measures performance with a lower-upper Gauss-Seidel solver.
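To give a feel for the solver families named above, here are much-simplified, scalar versions: the Thomas algorithm for a plain tridiagonal system (BT works on block-tridiagonal systems, where each entry is a small matrix) and a basic Gauss-Seidel iteration. NPB's real kernels operate on large 3D grids and are far more involved, so these are illustrations of the techniques only, with function names of our own choosing:

```python
from typing import List

def thomas_solve(lower: List[float], diag: List[float], upper: List[float], rhs: List[float]) -> List[float]:
    """Tridiagonal solve (Thomas algorithm). lower[0] and upper[-1] are ignored."""
    n = len(rhs)
    c = [0.0] * n  # modified super-diagonal
    d = [0.0] * n  # modified right-hand side
    c[0] = upper[0] / diag[0] if n > 1 else 0.0
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):  # forward elimination
        m = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / m if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / m
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def gauss_seidel(A: List[List[float]], b: List[float], iters: int = 200, tol: float = 1e-12) -> List[float]:
    """Gauss-Seidel iteration; converges for diagonally dominant A."""
    n = len(b)
    x = [0.0] * n
    for _ in range(iters):
        max_change = 0.0
        for i in range(n):
            s = sum(A[i][j] * x[j] for j in range(n) if j != i)
            new_xi = (b[i] - s) / A[i][i]
            max_change = max(max_change, abs(new_xi - x[i]))
            x[i] = new_xi  # later rows in this sweep use the updated value immediately
        if max_change < tol:
            break
    return x

A = [[4.0, 1.0, 0.0], [1.0, 4.0, 1.0], [0.0, 1.0, 4.0]]
b = [6.0, 12.0, 14.0]  # chosen so the exact solution is [1, 2, 3]
print(thomas_solve([0.0, 1.0, 1.0], [4.0, 4.0, 4.0], [1.0, 1.0, 0.0], b))
print(gauss_seidel(A, b))
```

The in-sweep reuse of fresh values is what makes Gauss-Seidel converge faster than simpler iterations, but it also creates the data dependencies that parallel implementations have to work around.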

Regardless of the workload, the EPYC processors deliver a brutal level of performance in highly-parallelized applications, and the Supermicro server handled the heat output without issue.

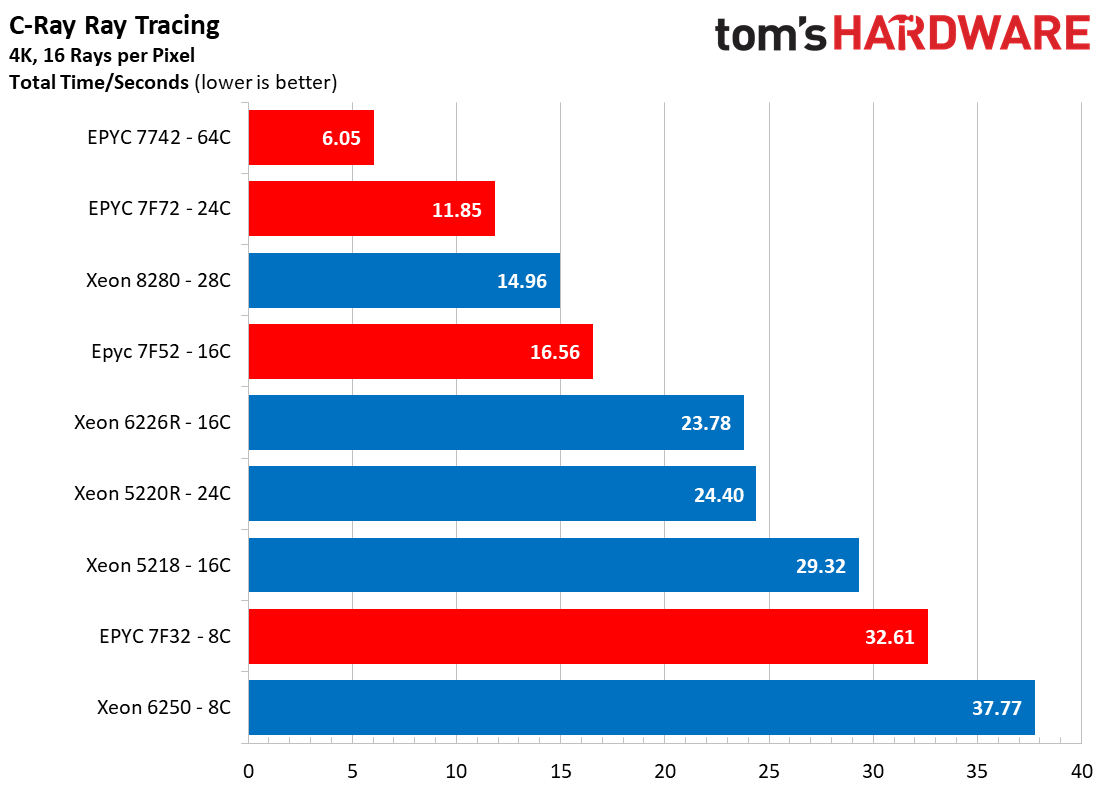

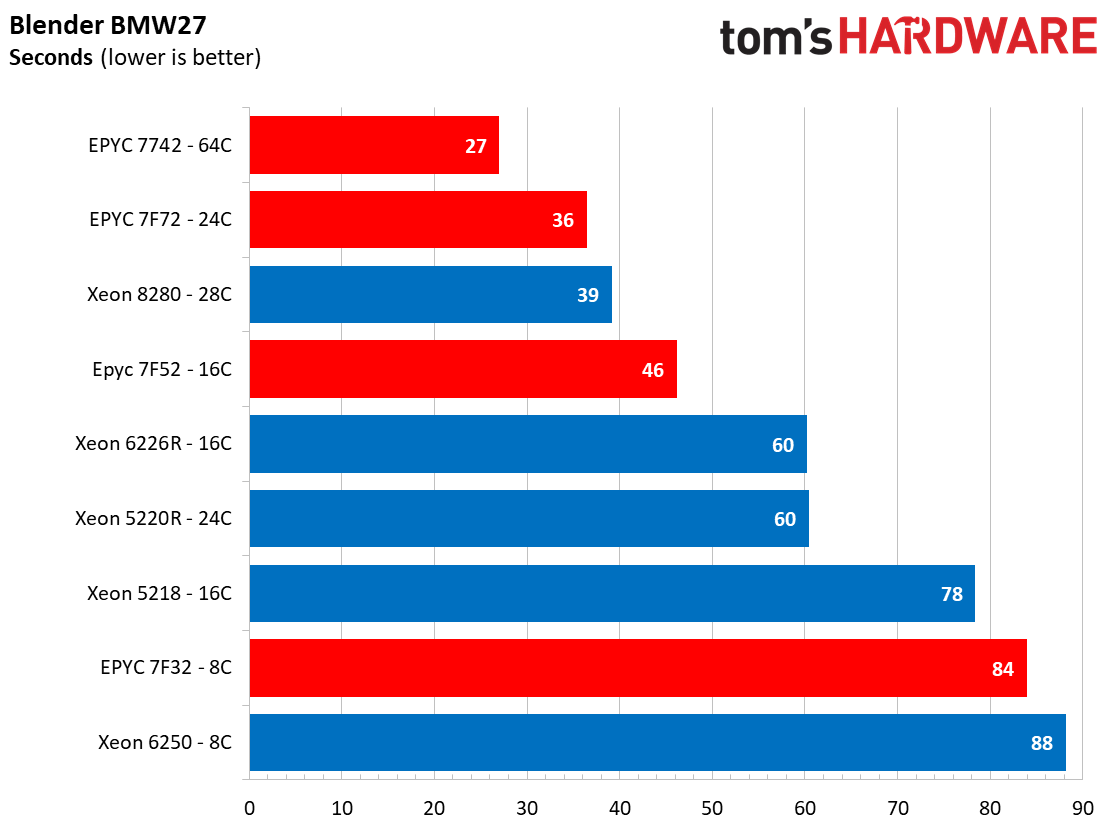

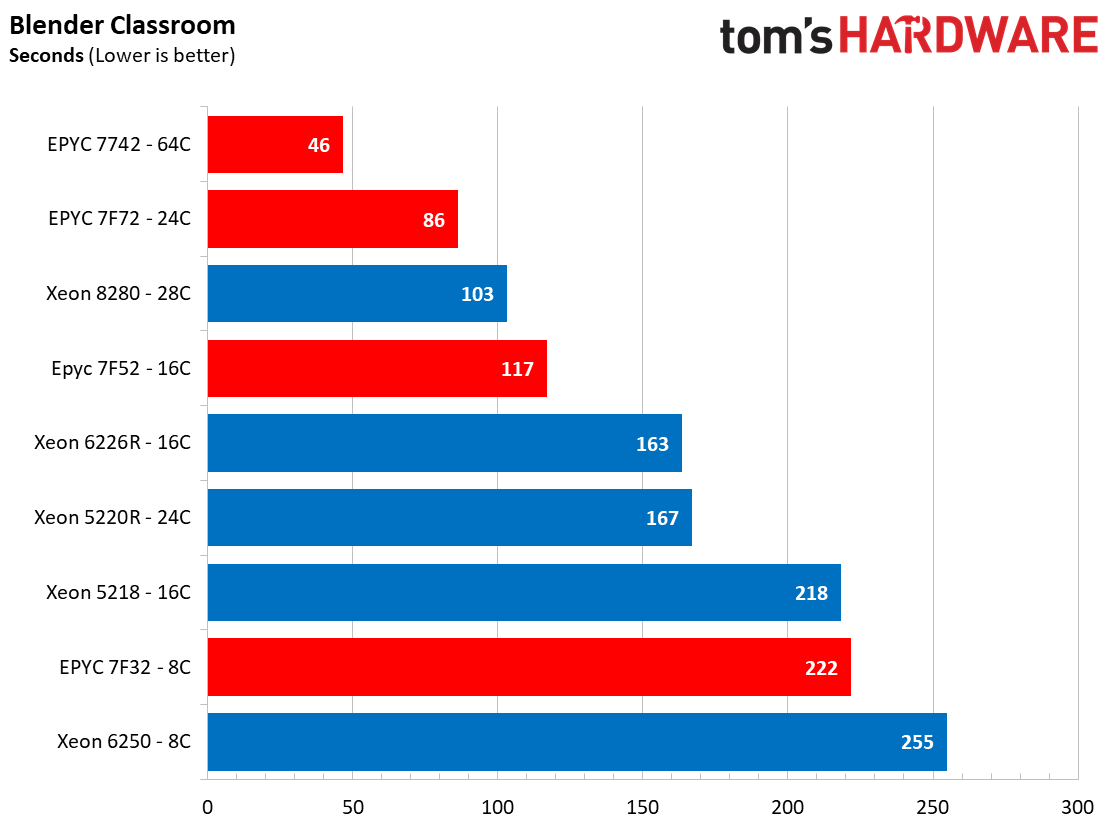

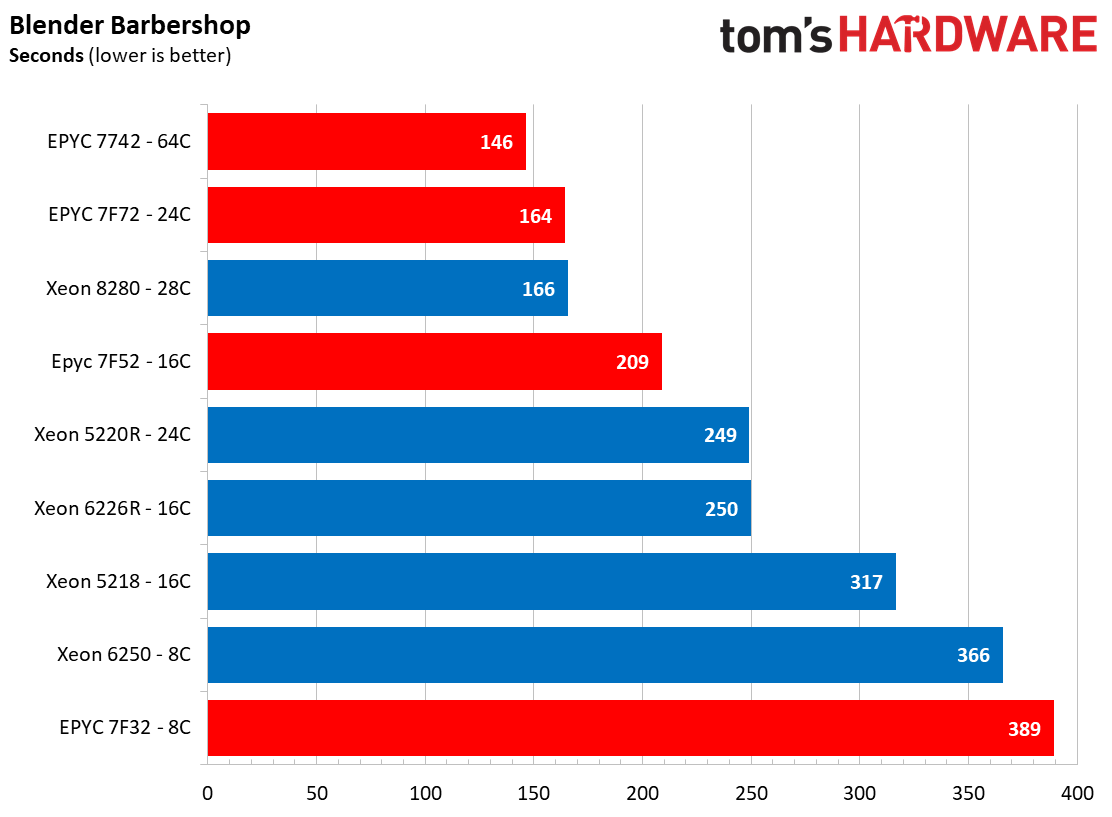

Rendering Benchmarks

Turning to more standard fare, provided you can keep the cores fed with data, most modern rendering applications also take full advantage of the compute resources. Given the well-known strengths of EPYC's core-heavy approach, it isn't surprising to see the 64-core EPYC 7742 processors carve out a commanding lead in the C-Ray and Blender benchmarks. Still, it is impressive to see the 7Fx2 models beat the competing Xeon processors with similar core counts nearly across the board.

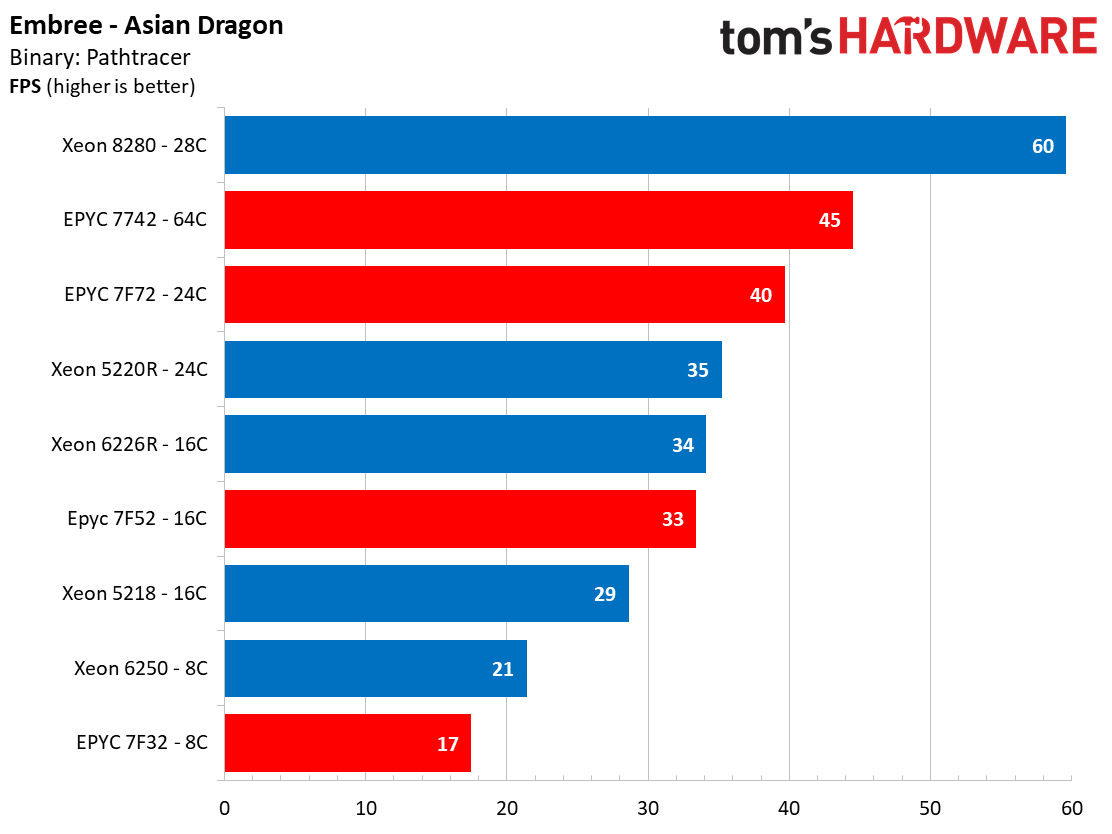

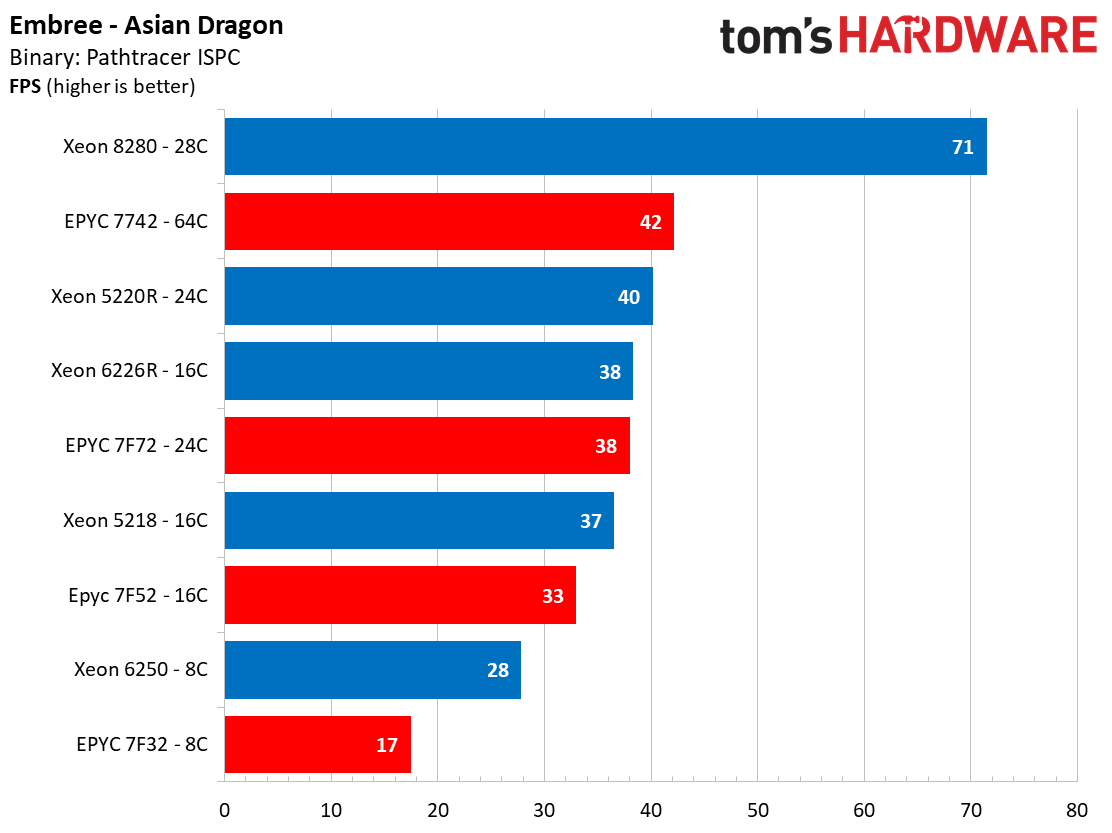

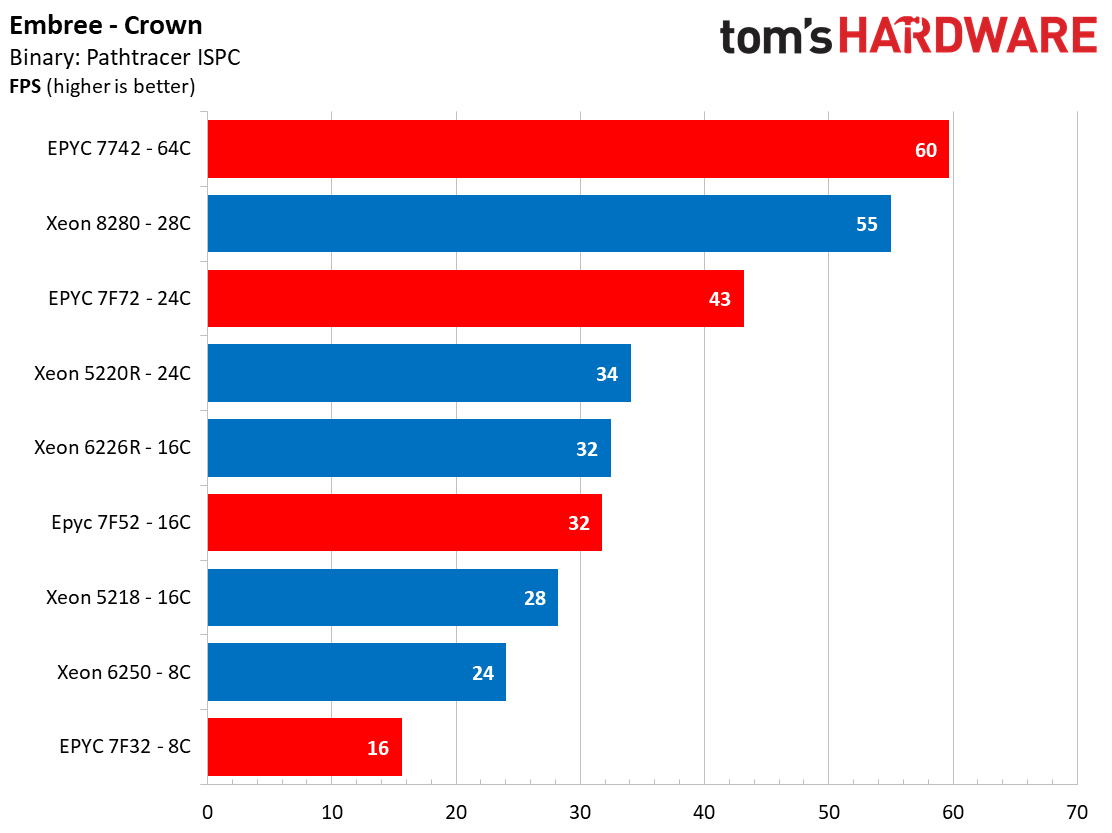

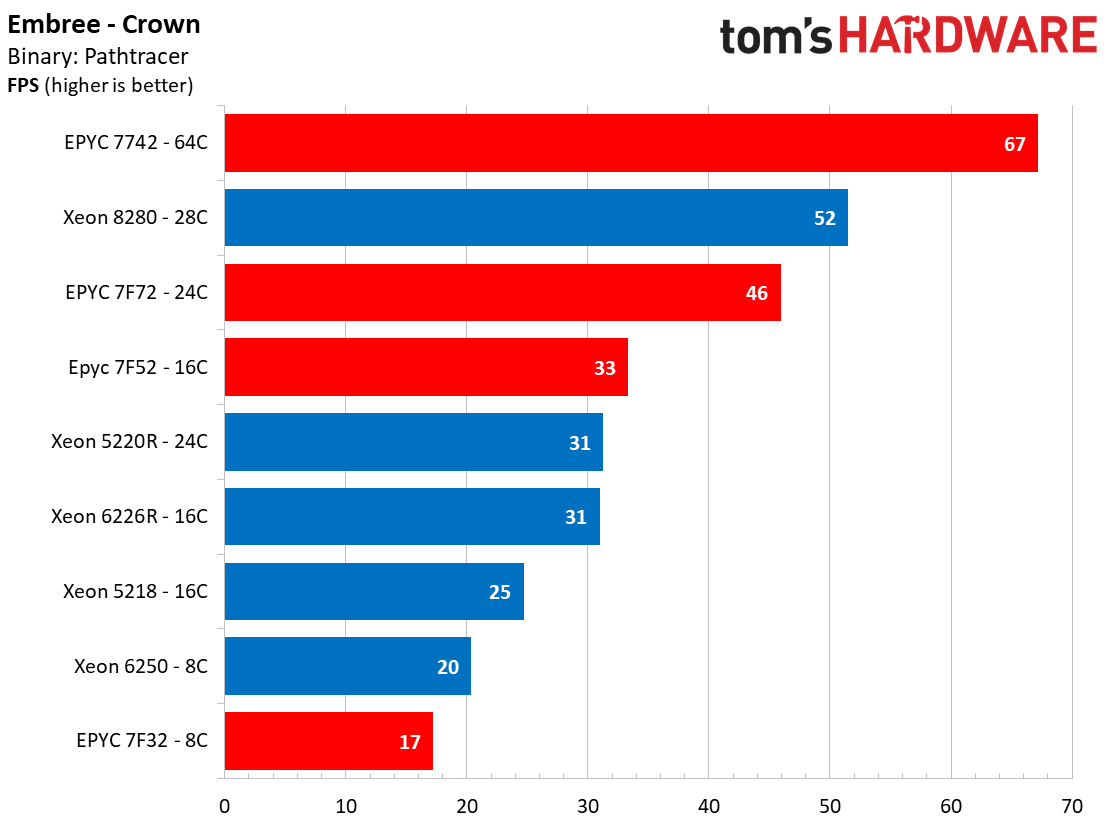

The performance picture changes somewhat with the Embree benchmarks, which test high-performance ray tracing libraries developed at Intel Labs. Naturally, the Xeon processors take the lead in the Asian Dragon renders, but the crown renders show that AMD's EPYC can offer leading performance even with code that is heavily optimized for Xeon processors.

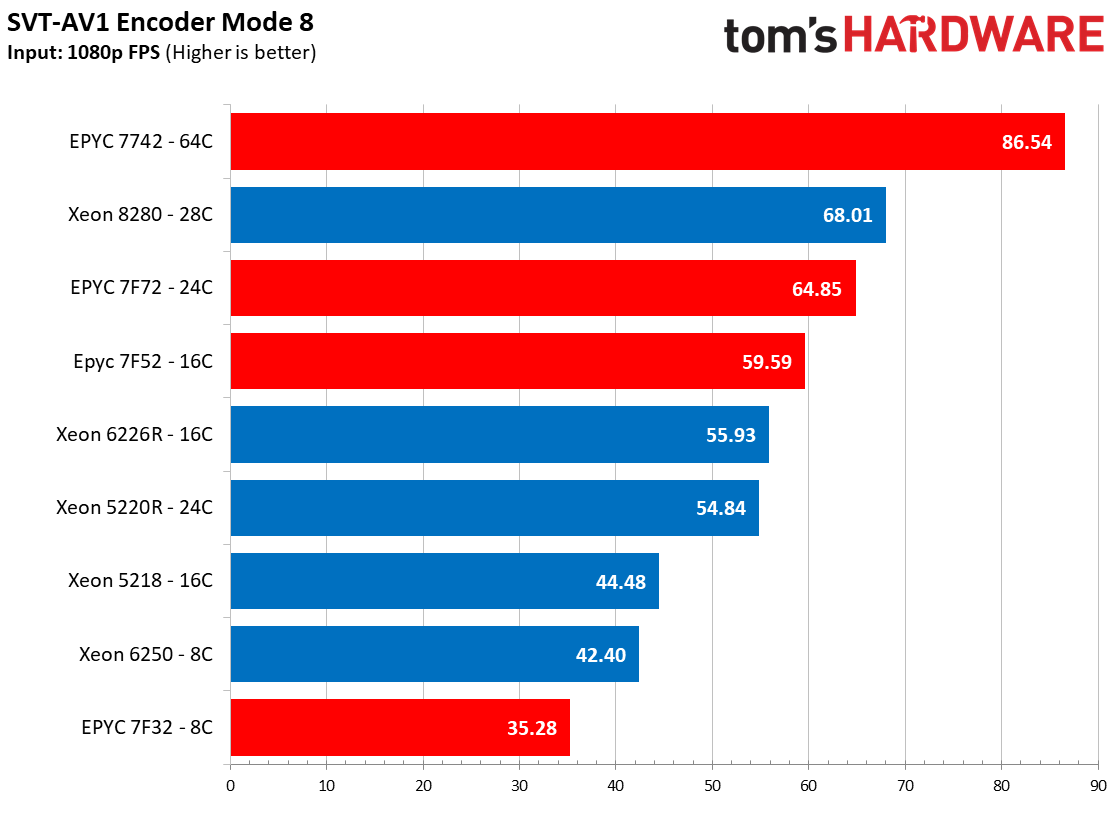

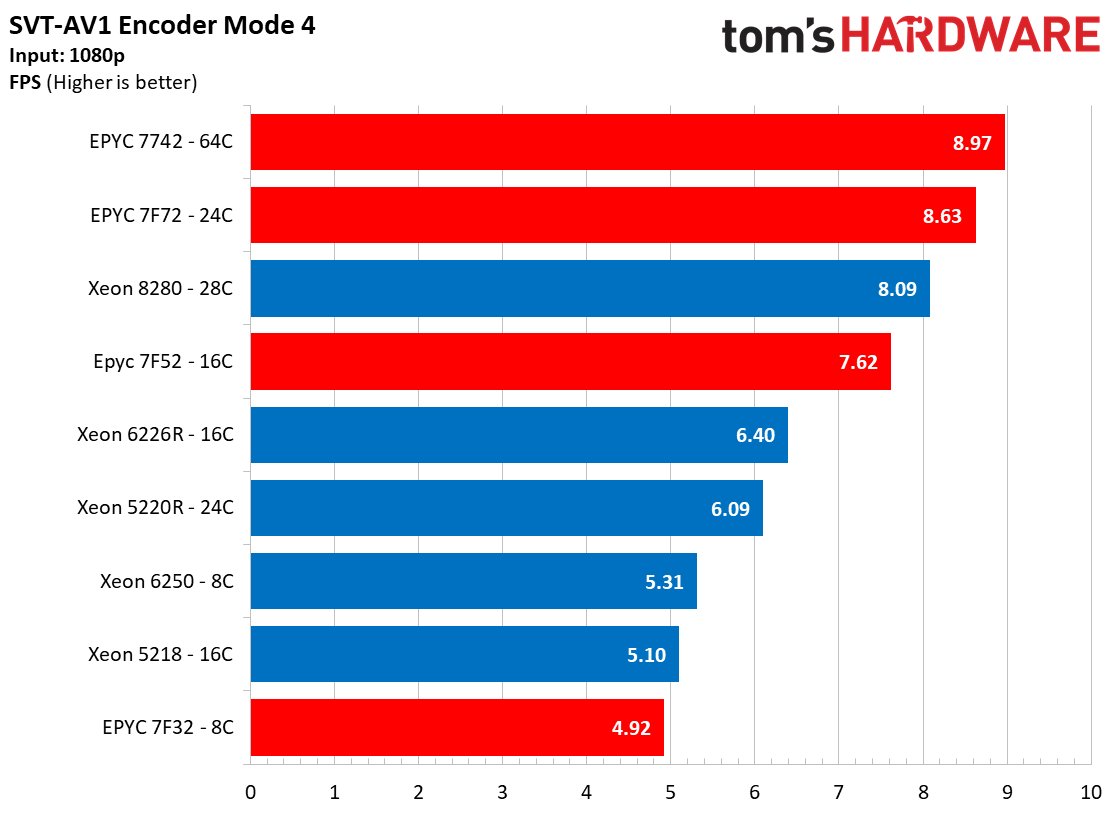

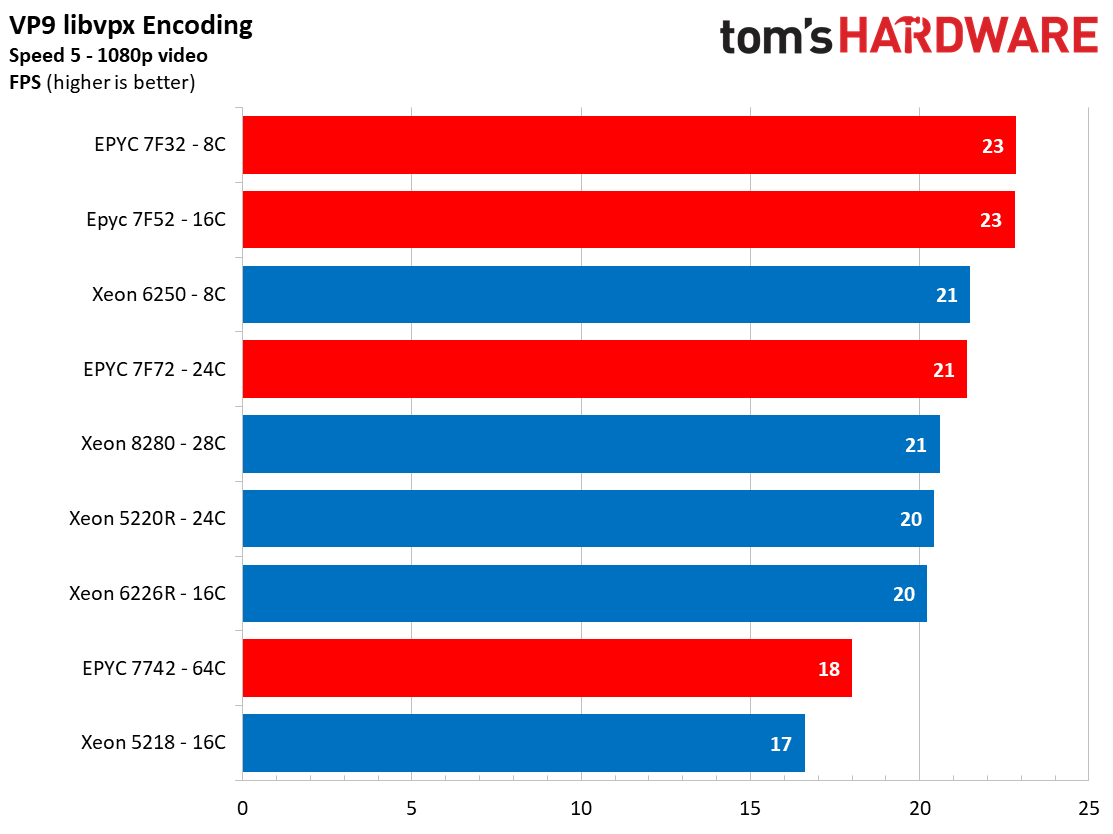

Encoding Benchmarks

Encoders tend to present a different type of challenge: As we can see with the VP9 libvpx benchmark, they often don't scale well with increased core counts. Instead, they often benefit from per-core performance and other factors, like cache capacity.
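Amdahl's law is the standard way to reason about this: if only a fraction of the work can run in parallel, the serial remainder caps the speedup no matter how many cores are added. The parallel fractions below are hypothetical and not measured for any encoder in our test pool:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over one core when only `parallel_fraction` of the work parallelizes."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)

for p in (0.50, 0.90, 0.99):  # hypothetical parallel fractions
    print(p, [round(amdahl_speedup(p, n), 1) for n in (8, 16, 24, 64, 128)])
```

Even at 90% parallel code, 128 cores deliver less than a 10x speedup, which is why per-core speed and cache can matter more than core count for encoders that don't thread well.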

However, newer encoders, like Intel's SVT-AV1, are designed to leverage multi-threading more fully to extract faster performance for live encoding/transcoding video applications. Again, we can see the impact of EPYC's increased core counts paired with its strong per-core performance as the EPYC 7742 and 7F72 post impressive wins.

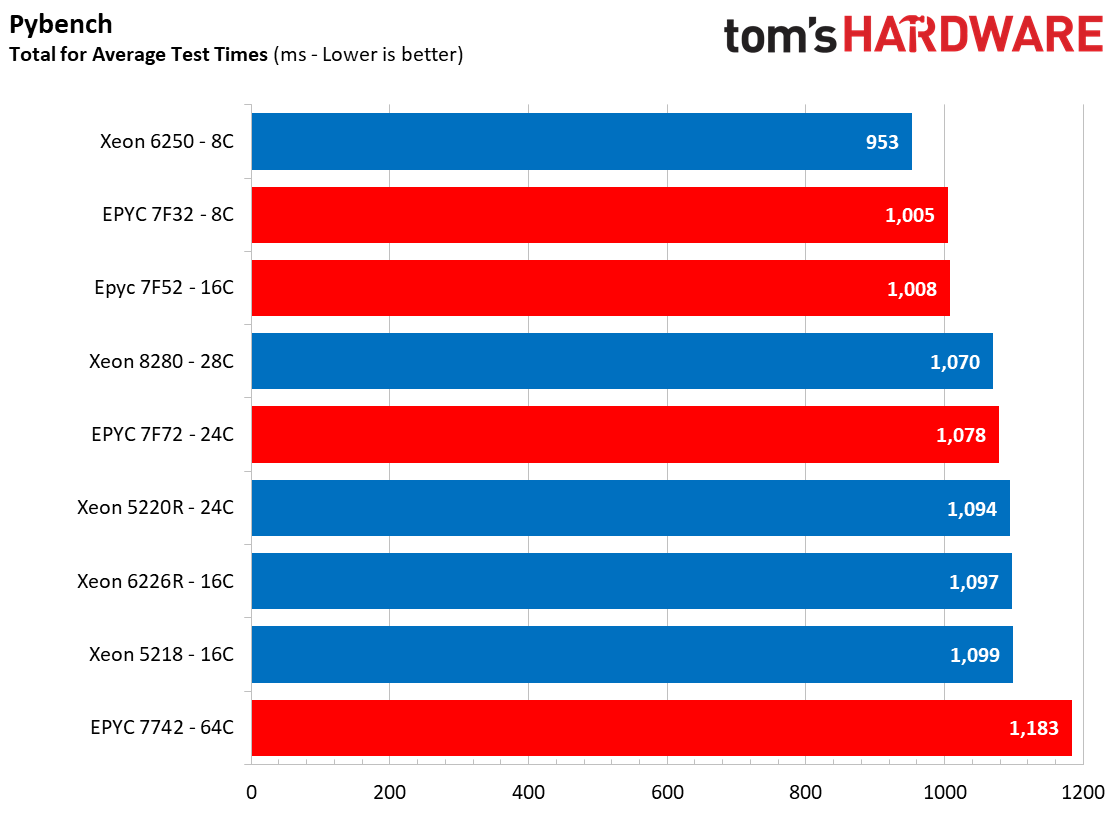

Python and Sysbench Benchmarks

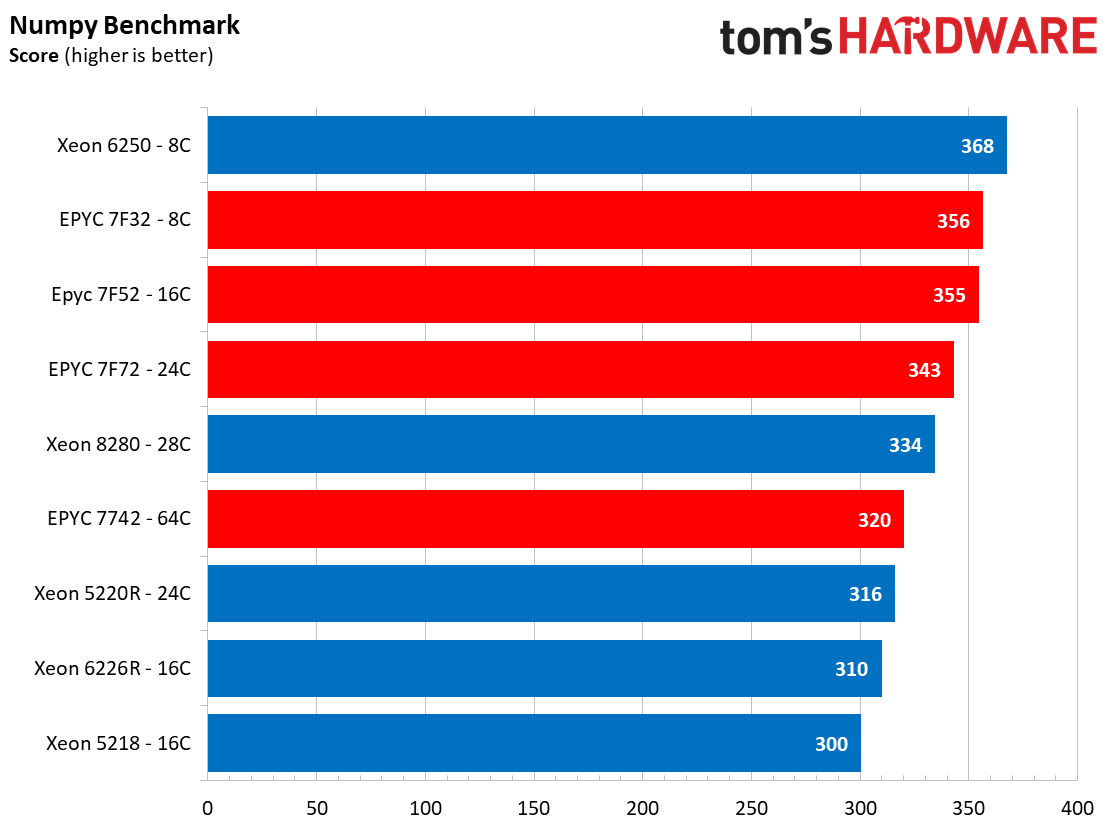

The Pybench and Numpy benchmarks are used as a general litmus test of Python performance, and as we can see, these tests don't scale well with increased core counts. That allows the Xeon 6250, which has the highest boost frequency of the test pool at 4.5 GHz, to take the lead.

Compression and Security

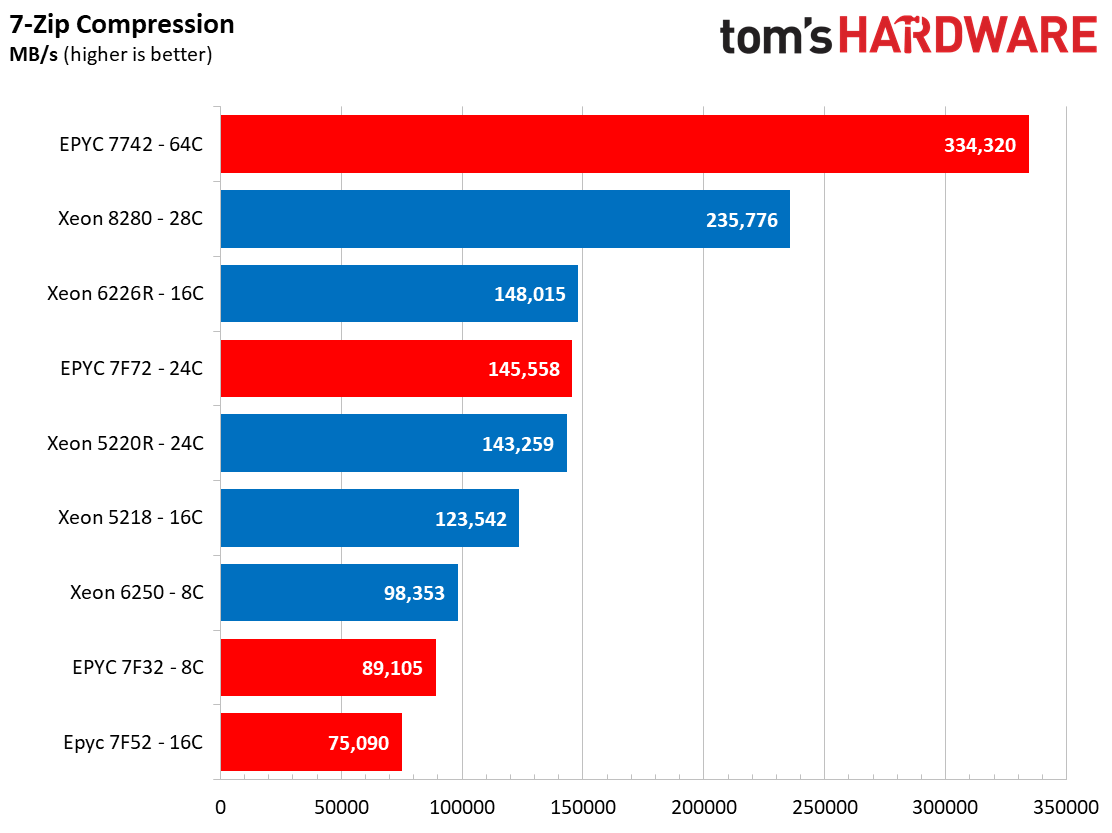

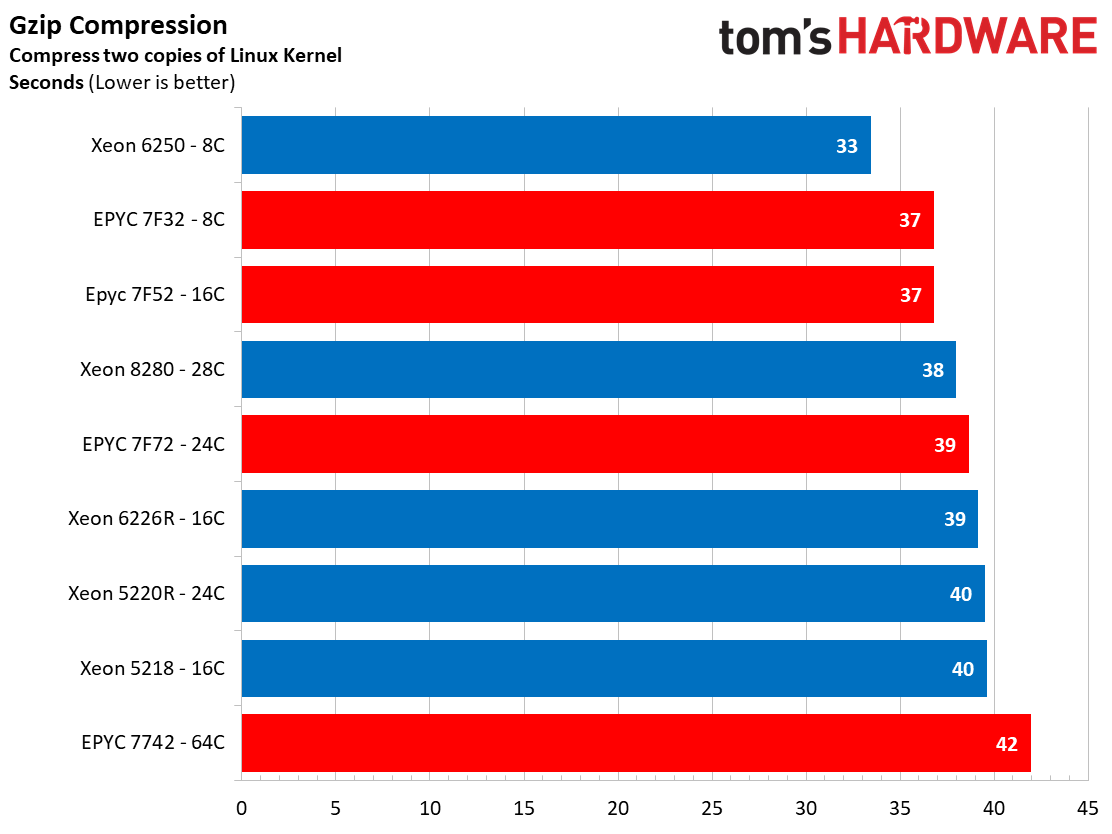

Compression workloads also come in many flavors. The 7-Zip (p7zip) benchmark exposes the heights of theoretical compression performance because it runs directly from main memory, allowing both memory throughput and core counts to impact performance heavily. As we can see, this benefits the EPYC 7742 tremendously, but it is noteworthy that the 28-core Xeon 8280 offers far more performance than the 24-core 7F72 if we normalize throughput based on core counts. In contrast, the gzip benchmark, which compresses two copies of the Linux 4.13 kernel source tree, responds well to speedy clock rates, giving the eight-core Xeon 6250 the lead due to its 4.5 GHz boost clock.
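Standard gzip compresses a single stream sequentially on one thread, which is why clock speed rather than core count dominates. A small stand-in using Python's `gzip` module; the input is hypothetical repetitive text rather than the actual kernel tree, and the exact command line the Phoronix test uses is not shown here:

```python
import gzip
import time
from typing import Tuple

def timed_gzip(data: bytes, level: int = 9) -> Tuple[bytes, float]:
    """Compress one buffer as a single gzip stream and return (output, seconds)."""
    start = time.perf_counter()
    packed = gzip.compress(data, compresslevel=level)  # one stream, processed sequentially
    return packed, time.perf_counter() - start

# Hypothetical input standing in for a kernel source tree: repetitive C-like text.
sample = b"static int example(void) { return 0; }\n" * 50_000
packed, seconds = timed_gzip(sample)
print(f"{len(sample)} -> {len(packed)} bytes in {seconds:.3f} s")
```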

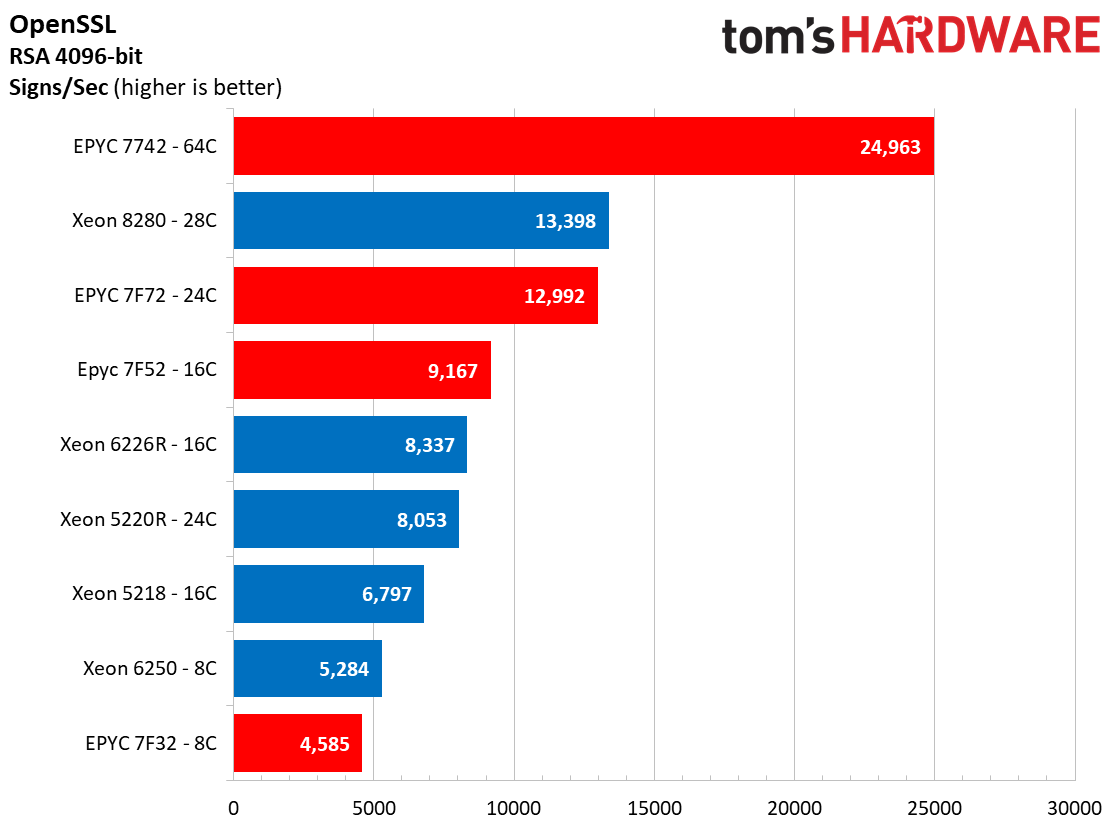

The OpenSSL benchmark uses the open-source toolkit, which implements the SSL and TLS protocols, to measure RSA 4096-bit performance. As we can see, this test favors the EPYC processors due to its parallelized nature, but offloading this type of workload to dedicated accelerators is becoming more common in environments with heavy requirements.
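The "parallelized nature" comes from the fact that each RSA private-key operation is an independent modular exponentiation, so a benchmark can run as many at once as there are cores (OpenSSL's own `openssl speed` tool has a `-multi` option that runs several worker processes for this purpose). The sketch uses the textbook toy key (p = 61, q = 53) with no padding, purely to show the operation; it is not secure and not how OpenSSL implements RSA-4096:

```python
# Textbook toy key from p = 61, q = 53; real RSA-4096 uses a 4096-bit modulus plus padding.
N, E, D = 3233, 17, 2753

def sign(m: int) -> int:
    """Private-key operation: modular exponentiation with the secret exponent."""
    return pow(m, D, N)

def verify(m: int, signature: int) -> bool:
    """Public-key check: raising the signature to E must recover the message."""
    return pow(signature, E, N) == m

# Each signature is independent of the others, so a benchmark can spread them
# across all available cores; no operation waits on a previous result.
messages = list(range(2, 100))
signatures = [sign(m) for m in messages]
print(all(verify(m, s) for m, s in zip(messages, signatures)))  # True
```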

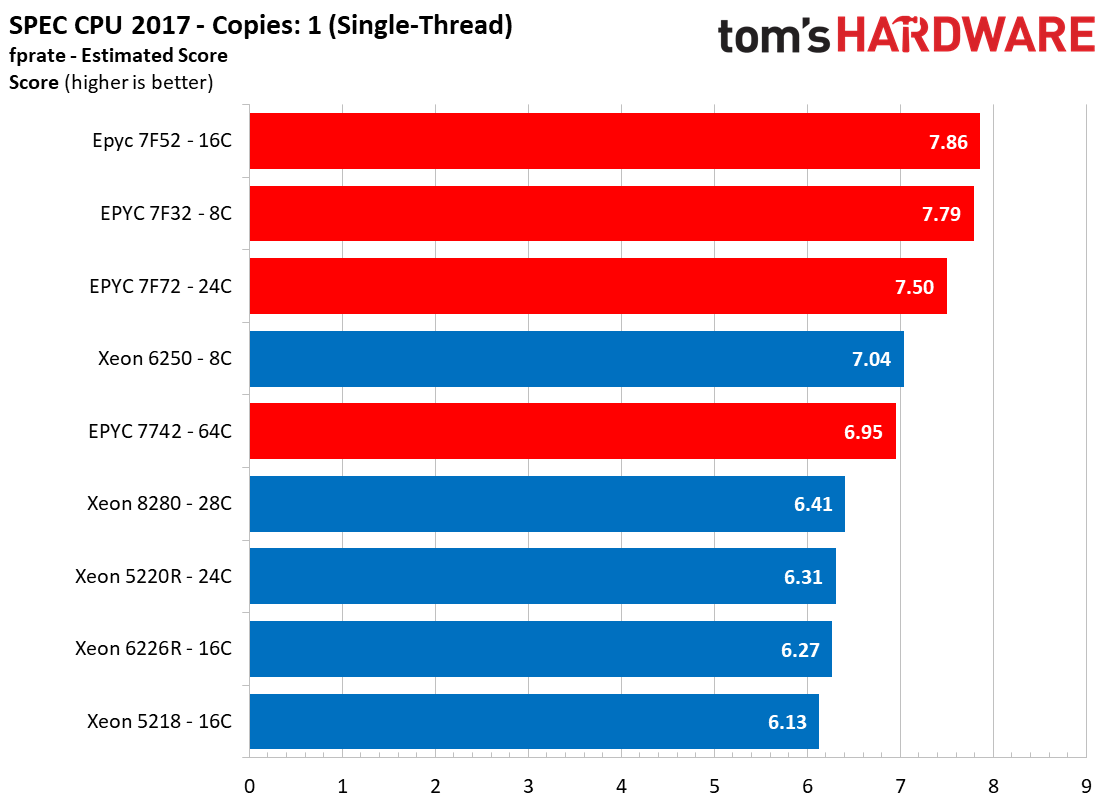

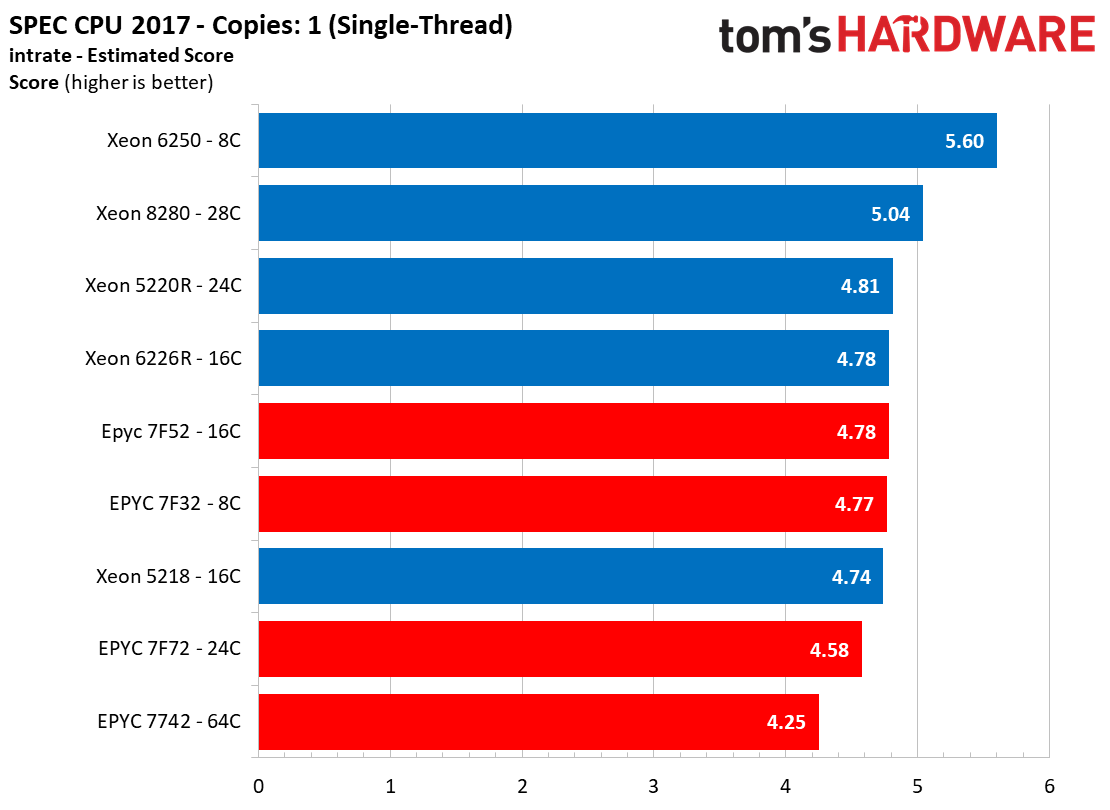

SPEC CPU 2017 Estimated Scores

We used the GCC compiler and the default Phoronix test settings for these SPEC CPU 2017 test results. SPEC results are highly contested and can be impacted heavily by various compilers and flags, so we're sticking with a bog-standard configuration to provide as level a playing field as possible. It's noteworthy that these results haven't been submitted to the SPEC committee for verification, so they aren't official. Instead, view them as estimates based on our testing.
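For context on how such scores are built: SPEC CPU 2017 compares each benchmark's run time against a reference machine's time to get a ratio (for rate runs, the ratio also scales with the number of copies run), and the overall score is the geometric mean of those ratios. A sketch with hypothetical times, not our results:

```python
import math
from typing import Dict

def spec_ratio(reference_s: float, measured_s: float, copies: int = 1) -> float:
    """SPECrate-style ratio: copies x reference time / measured elapsed time."""
    return copies * reference_s / measured_s

def overall_score(ratios: Dict[str, float]) -> float:
    """Geometric mean of per-benchmark ratios."""
    return math.exp(sum(math.log(r) for r in ratios.values()) / len(ratios))

# Hypothetical reference and measured times for two benchmarks.
ratios = {
    "bench_a": spec_ratio(reference_s=1000.0, measured_s=250.0, copies=2),
    "bench_b": spec_ratio(reference_s=800.0, measured_s=400.0, copies=1),
}
print(ratios, round(overall_score(ratios), 3))
```

The geometric mean keeps one unusually strong or weak subtest from dominating the composite, which is part of why compiler flags that boost a single benchmark still move the overall number only modestly.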

The Xeon 6250 and 8280 processors take the lead in the single-threaded intrate tests, while the AMD EPYC processors post impressively strong single-threaded measurements in the fprate tests.

Conclusion

AMD has enjoyed a slow but steadily increasing share of the data center market, and much of its continued growth hinges on increasing adoption beyond hyperscale cloud providers to more standard enterprise applications. That requires a dual-pronged approach: not only offering a tangible performance advantage, particularly in workloads that are sensitive to per-core performance, but also having an ecosystem of fully validated OEM platforms readily available on the market.

The Supermicro 1023US-TR4 server slots into AMD's expanding constellation of OEM EPYC systems and allows discerning customers to upgrade from the standard 7002 series processors to the high-frequency H- and F-series models. It also supports up to 8TB of ECC memory, an incredible amount of capacity for memory-intensive workloads. Notably, the system comes with the PCIe 3.0 interface even though second-gen EPYC processors support PCIe 4.0, but this arrangement allows customers that don't plan to use PCIe 4.0 devices to procure systems at a lower price point. As one would imagine, Supermicro has other offerings that support the faster interface.

Overall, we found the platform to be robust, and out-of-the-box installation was simple thanks to the tool-less rail kit and an easily accessible IPMI interface that offers a cornucopia of management and monitoring capabilities. Our complaints are minor: the front panel could use a few USB ports for easier physical connectivity, and a faster embedded networking interface would free up a PCIe slot. Naturally, higher-end Supermicro platforms come with these features.

As seen throughout our testing, the Supermicro 1023US-TR4 server performed admirably and didn't suffer from any thermal throttling issues regardless of the EPYC processors we used, which is an important consideration. Overall, the Supermicro 1023US-TR4 server packs quite the punch in a small form factor that enables incredibly powerful and dense compute deployments in cloud, virtualization, and enterprise applications.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.