China planning 1,600-core chips that use an entire wafer, similar to American company Cerebras' 'wafer-scale' designs

Bigger is better.

Scientists from the Institute of Computing Technology at the Chinese Academy of Sciences have introduced an advanced 256-core multi-chiplet design and plan to scale it up to 1,600-core chips that use an entire wafer as one compute device.

It is getting harder to increase transistor density with each new generation of chips, so chipmakers are looking for other ways to raise processor performance, including architectural innovations, larger die sizes, multi-chiplet designs, and even wafer-scale chips. Only Cerebras has managed the last of these so far, but Chinese developers appear to be pursuing it as well. Apparently, they have already built a 256-core multi-chiplet design and are exploring ways to go wafer-scale, using an entire wafer to build one large chip.

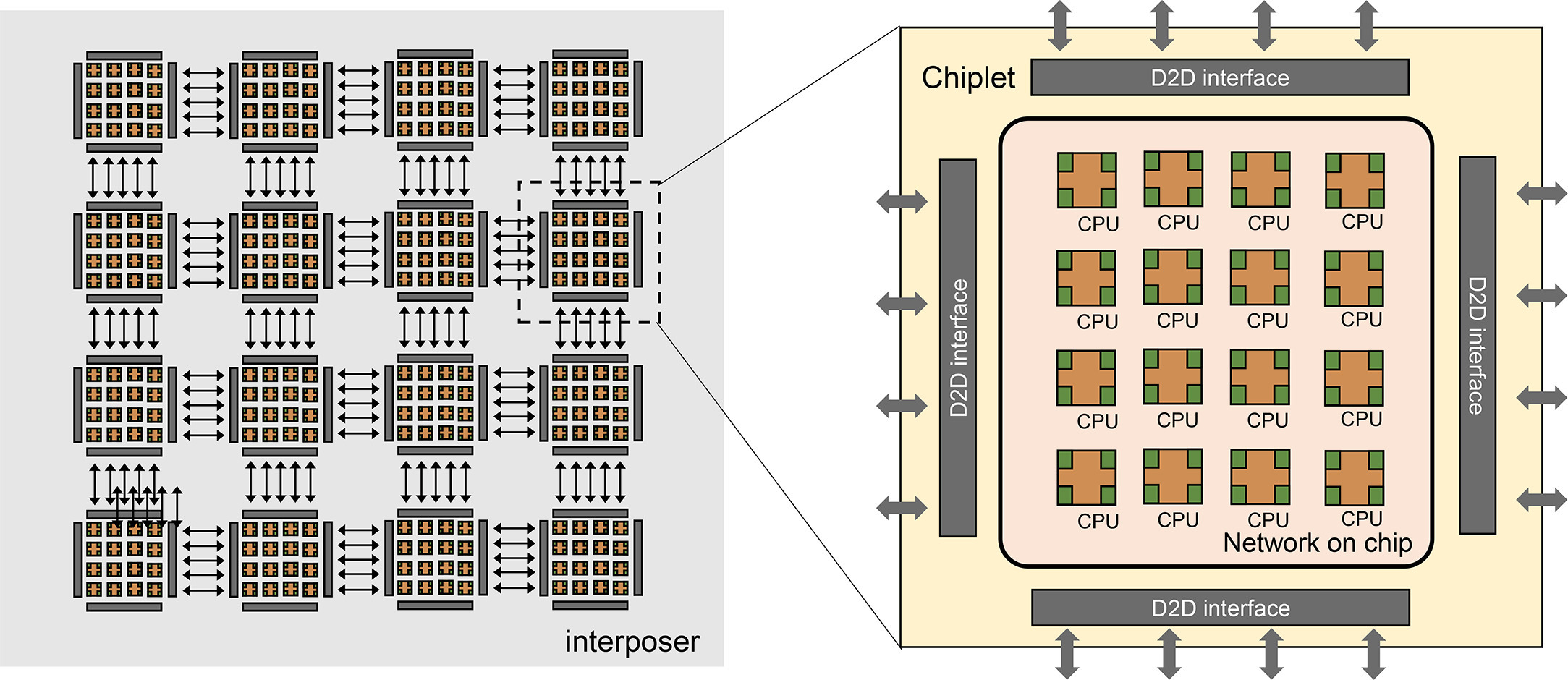

Scientists from the Institute of Computing Technology at the Chinese Academy of Sciences introduced an advanced 256-core multi-chiplet compute complex called Zhejiang Big Chip in a recent publication in the journal Fundamental Research, as reported by The Next Platform. The design consists of 16 chiplets, each containing 16 RISC-V cores, connected to one another in a conventional symmetric multiprocessor (SMP) manner using a network-on-chip so that the chiplets can share memory. Each chiplet has multiple die-to-die interfaces to connect to neighboring chiplets over a 2.5D interposer, and the CAS researchers say that the design is scalable to 100 chiplets, or 1,600 cores.
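The scaling figures above are simple arithmetic, which the following minimal Python sketch spells out. The 16-cores-per-chiplet figure comes from the article; the square grid layout used to count neighbor-to-neighbor links is purely an assumption for illustration, since the article does not describe how the chiplets are arranged on the interposer.

```python
CORES_PER_CHIPLET = 16  # from the article: 16 RISC-V cores per chiplet


def total_cores(chiplets: int) -> int:
    """Total core count for a given number of chiplets."""
    return chiplets * CORES_PER_CHIPLET


def mesh_links(side: int) -> int:
    """Neighbor-to-neighbor links in a side x side grid (assumed layout)."""
    # Each row has (side - 1) horizontal links, and there are `side` rows;
    # the same count applies to vertical links in the columns.
    return 2 * side * (side - 1)


if __name__ == "__main__":
    for side in (4, 10):  # 4x4 = 16 chiplets, 10x10 = 100 chiplets
        chiplets = side * side
        print(chiplets, "chiplets:", total_cores(chiplets), "cores,",
              mesh_links(side), "neighbor links (assumed grid)")
```

Under that assumed grid, going from 16 to 100 chiplets multiplies the core count by 6.25 but the number of die-to-die links by 7.5, which hints at why interconnect becomes a larger share of the design as it scales.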

Zhejiang chiplets are reportedly made on a 22nm-class process technology, presumably by Semiconductor Manufacturing International Corp. (SMIC). We are not sure how much power a 1,600-core assembly interconnected using an interposer and made on a 22nm production node would consume. As The Next Platform points out, nothing stops CAS from producing a 1,600-core wafer-scale chip, which would greatly optimize power consumption and performance due to reduced latencies.

The paper explores the limits of lithography and chiplet technology and discusses the potential of this new architecture for future computing needs. Multi-chiplet designs could be used to build processors for exascale supercomputers, the researchers note, something that AMD and Intel do today.

"For the current and future exascale computing, we predict a hierarchical chiplet architecture as a powerful and flexible solution," the researchers wrote. "The hierarchical-chiplet architecture is designed as many cores and many chiplets with hierarchical interconnect. Inside the chiplet, cores are communicated using ultra-low-latency interconnect while inter-chiplet are interconnected with low latency beneficial from the advanced packaging technology, such that the on-chiplet latency and the NUMA effect in such high-scalability system can be minimized."

Meanwhile, the CAS researchers propose using a multi-level memory hierarchy for such assemblies, which could make such devices harder to program.

"The memory hierarchy contains core memory [caches], on-chiplet memory and off-chiplet memory," the description reads. "The memory from these three levels vary in terms of memory bandwidth, latency, power consumption and cost. In the overview of hierarchical-chiplet architecture, multiple cores are connected through cross switch and they share a cache. This forms a pod structure and the pod is interconnected through the intra-chiplet network. Multiple pods form a chiplet and the chiplet is interconnect through the inter-chiplet network and then connects to the off-chip(let) memory. Careful design is needed to make full use of such hierarchy. Reasonably utilizing the memory bandwidth to balance the workload of different computing hierarchy can significantly improve the chiplet system efficiency. Properly designing the communication network resource can ensure the chiplet collaboratively performing the shared-memory task."
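To make the quoted hierarchy more concrete, here is a minimal Python sketch of the core, pod, and chiplet nesting and of which memory level two cores would have in common. The pod size and pods-per-chiplet values are assumptions chosen only so that a chiplet ends up with 16 cores; the article does not give them. Mapping "same pod" to the core-memory level is also one reading of the description, not something the researchers state.

```python
from dataclasses import dataclass

CORES_PER_POD = 4     # assumption: pod size is not given in the article
PODS_PER_CHIPLET = 4  # assumption: chosen so each chiplet has 16 cores


@dataclass(frozen=True)
class CoreId:
    chiplet: int
    pod: int
    core: int


def locate(flat_index: int) -> CoreId:
    """Map a flat core number onto (chiplet, pod, core) coordinates."""
    cores_per_chiplet = CORES_PER_POD * PODS_PER_CHIPLET
    chiplet, rest = divmod(flat_index, cores_per_chiplet)
    pod, core = divmod(rest, CORES_PER_POD)
    return CoreId(chiplet, pod, core)


def shared_level(a: CoreId, b: CoreId) -> str:
    """Closest memory level that two cores have in common (one interpretation)."""
    if (a.chiplet, a.pod) == (b.chiplet, b.pod):
        return "core memory"         # cache shared through the pod's cross switch
    if a.chiplet == b.chiplet:
        return "on-chiplet memory"   # reached over the intra-chiplet network
    return "off-chiplet memory"      # reached over the inter-chiplet network


if __name__ == "__main__":
    print(shared_level(locate(0), locate(3)))   # same pod
    print(shared_level(locate(0), locate(4)))   # same chiplet, different pod
    print(shared_level(locate(0), locate(16)))  # different chiplets
```

A scheduler or runtime for such a system would presumably want to place communicating threads so that they meet at the closest possible level, which is the "careful design" the researchers allude to.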

The Big Chip design could also take advantage of such things as optical-electronic computing, near-memory computing, and 3D stacked memory. However, the paper stops short of providing specific details on the implementation of these technologies or addressing the challenges they might pose in the design and construction of such complex systems.

Meanwhile, The Next Platform assumes that CAS has already built its 256-core Zhejiang Big Chip multi-chiplet compute complex. From here, the researchers can evaluate the performance of the chiplet design and then make decisions about system-in-packages with more cores, different classes of memory, and wafer-scale integration.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

bit_user

I think this doesn't have too much potential, as a general-purpose architecture. The main problem is how to connect up enough RAM to support all of those cores running general-purpose workloads. Even if you could connect the RAM and have enough bandwidth, maintaining cache coherency over 1600 cores would seem to be quite taxing.

Right now, the best way to use such dense compute is in dataflow computing, like what Cerebras does.

TCA_ChinChin

bit_user said: "I think this doesn't have too much potential, as a general-purpose architecture. The main problem is how to connect up enough RAM to support all of those cores running general-purpose workloads. Even if you could connect the RAM and have enough bandwidth, maintaining cache coherency over 1600 cores would seem to be quite taxing. Right now, the best way to use such dense compute is in dataflow computing, like what Cerebras does."

I think this is more of a proof of concept and research design rather than something pushed to premature product. I imagine this would lead to domestic capability in the same realm as what Cerebras does currently, when it actually matures.

toffty

Two main issues with this approach are:

1. Keeping lanes the same length from each core to memory

2. Cooling such a behemoth

Let alone imperfections. Depending on the transistor size, they'll never get a fully working chip.

bit_user

toffty said: "Two main issues with this approach are: 1. Keeping lanes the same length to each core to memory"

Why?

toffty said: "2. Cooling such a behemoth"

They can run it at a low enough clock speed to make the heat manageable. Here are some specs on Cerebras' CS-1:

https://www.eetimes.com/powering-and-cooling-a-wafer-scale-die/

This shows exploded views + info about the CS-2:

https://www.cerebras.net/cs2virtualtour

toffty said: "Let alone imperfections. Depending on the transistor size, they'll never get a fully working chip"

Cerebras reported full yields of their WSE-1. They built enough redundancy into each die that they didn't even have to disable any of them.

ThomasKinsley

Let me get this straight. China is preparing to produce a wafer chip, the likes of which only Cerebras has made. And this is happening amid a CIA investigation into a potential leak of Cerebras technology into China's hands from a United Arab Emirates company headed by an ethnic Chinese CEO who renounced his American citizenship for UAE citizenship?

George³

ThomasKinsley said: "Let me get this straight. China is preparing to produce a wafer chip, the likes of which only Cerebras has made. And this is happening amid a CIA investigation into a potential leak of Cerebras technology into China's hands from a United Arab Emirates company headed by an ethnic Chinese CEO who renounced his American citizenship for UAE citizenship?"

You apparently failed to understand that they already have a 256 core model that they hope they can increase further. If they copied, they would already be eating whole silicon wafers, with no intermediate stages.

ThomasKinsley

George³ said: "You apparently failed to understand that they already have a 256 core model that they hope they can increase further. If they copied, they would already be eating whole silicon wafers, with no intermediate stages."

The 256 core model is not at wafer scale as the new 1,600 core chip is. The article indicates Cerebras finally figured out how to do it after overcoming significant manufacturing complexity. The timing of this is peculiar given that there is an international investigation analyzing whether G42 gave China Cerebras IP. It's not proof, but it's indicative that there may have been a technology transfer.

eryenakgun

bit_user said: "I think this doesn't have too much potential, as a general-purpose architecture. The main problem is how to connect up enough RAM to support all of those cores running general-purpose workloads. Even if you could connect the RAM and have enough bandwidth, maintaining cache coherency over 1600 cores would seem to be quite taxing. Right now, the best way to use such dense compute is in dataflow computing, like what Cerebras does."

Current GPUs have 10k-20k cores in them, all connected to a common RAM bus. It is not rocket science. Just connect CPUs together. And link them to RAM.

Notton

To me, this looks like an experiment to test how good the domestic chip production is.

Even if it doesn't work well, they will gain experience from it.