Nvidia's generative AI tool delivers a radical 60X performance boost for chipmakers - TSMC and Synopsys are now using the cuLitho software in production

Nvidia adds generative AI for a 2X boost.

Nvidia announced here at GTC 2024 that TSMC and Synopsys are now using its cuLitho software in production to accelerate computational lithography, a key workload that helps chipmakers work around physical limitations as they move to 2nm and smaller transistors produced with cutting-edge chipmaking tools like High-NA EUV. The computational horsepower required to produce today's chips increases with each new node, and Nvidia touted an example of a cuLitho-powered system with 350 H100 GPUs delivering a 60X speedup on a workload that typically requires 40,000 CPU systems crunching away for 30 million or more hours of compute time.
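To put those figures in perspective, here is some back-of-envelope arithmetic. It is a sketch under assumptions Nvidia did not state: that the 30 million hours are CPU-system hours split evenly across the 40,000 systems, and that the 60X speedup applies directly to the resulting wall-clock time.

```python
# Back-of-envelope arithmetic for the figures quoted above.
# ASSUMPTIONS (not from Nvidia): the 30 million hours are CPU-system hours,
# split evenly across the 40,000 CPU systems, and the 60X speedup applies
# directly to the resulting wall-clock time.

CPU_SYSTEMS = 40_000
TOTAL_CPU_HOURS = 30_000_000  # "30 million or more hours"
SPEEDUP = 60


def wall_clock_hours(total_hours: float, systems: int) -> float:
    """Wall-clock time if the work were perfectly parallel across `systems`."""
    return total_hours / systems


def accelerated_hours(baseline_hours: float, speedup: float) -> float:
    """Runtime after applying a uniform speedup factor."""
    return baseline_hours / speedup


if __name__ == "__main__":
    baseline = wall_clock_hours(TOTAL_CPU_HOURS, CPU_SYSTEMS)
    gpu = accelerated_hours(baseline, SPEEDUP)
    print(f"CPU farm: {baseline:.0f} h (about {baseline / 24:.0f} days)")
    print(f"With a {SPEEDUP}X speedup: {gpu:.1f} h")
```

Under those assumptions, the CPU farm needs about 750 hours, or roughly a month, and a 60X speedup cuts that to about 12.5 hours. Real workloads never parallelize perfectly, so treat these as rough orders of magnitude.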

Nvidia announced cuLitho last year, and the company has now integrated generative AI into the workflow, providing an additional 2X speedup on top of the already impressive gains. Overall, Nvidia claims cuLitho drastically reduces the time required for heavy computational lithography workloads of all kinds, and with Synopsys integrating the tech into its software tools, it's likely to spread to other chipmakers, too.

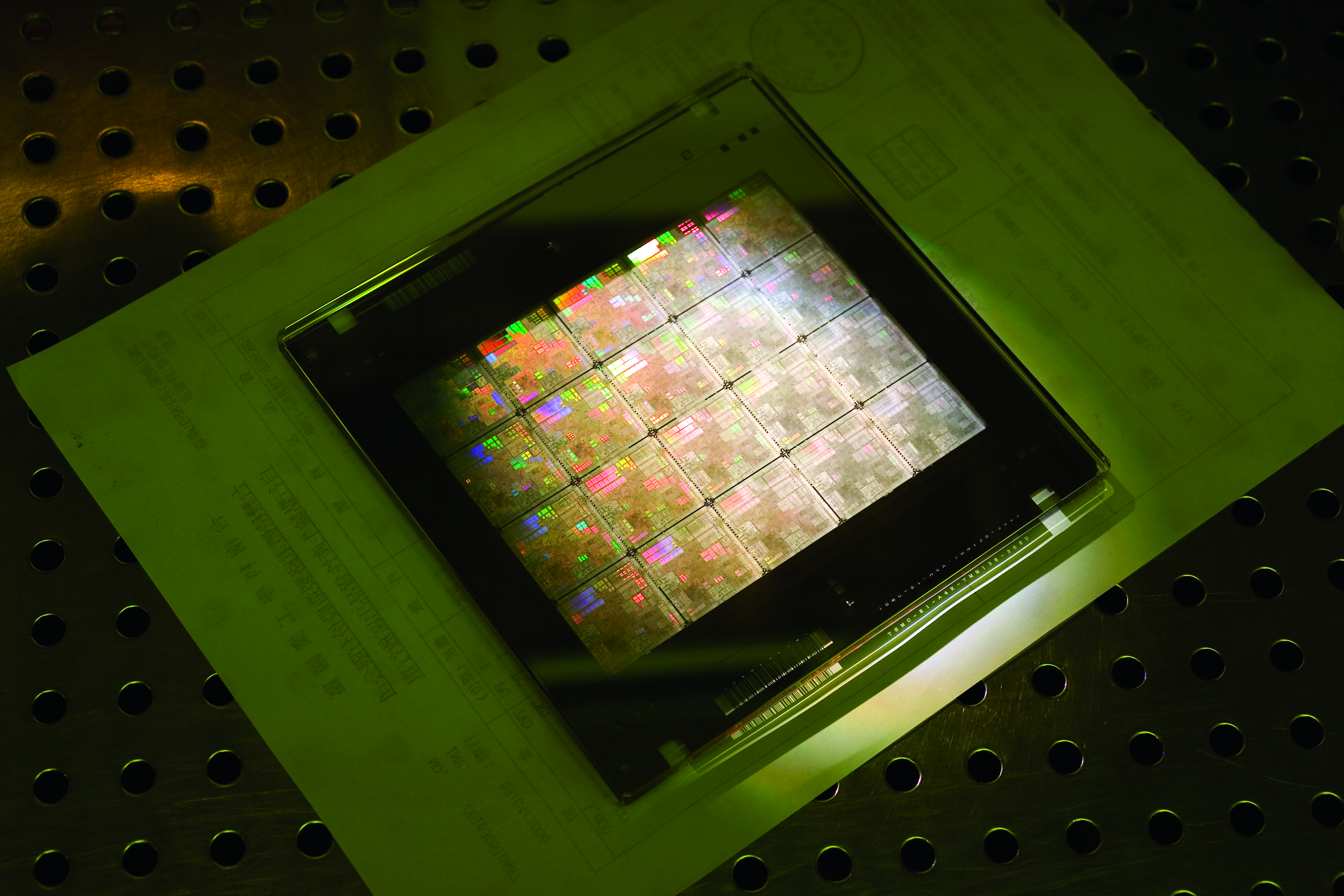

Printing nanometer-scale features on a chip requires a photomask, a chunk of transparent quartz imprinted with the pattern of a chip design that works much like a stencil. Shining ultraviolet light through the mask, a step called an exposure, transfers the design onto the wafer, where it is etched to create the billions of 3D transistors and wire structures that comprise a modern chip.

Early chipmaking tools used a single photomask to print an entire wafer, but modern chips require such high resolution that the mask, known as a reticle, is used to print each die on the wafer individually. Each chip requires multiple exposures to build up its design layer by layer, and the number of photomasks used varies by chip; it can even exceed 100. However, new problems have cropped up as the tools print features finer than the wavelength of the ultraviolet light used for exposures.

The continued shrinkage of features has led to issues with diffraction, which essentially 'blurs' the design being printed onto the silicon. These optical imperfections stem from a number of factors, like mirror curvature, chemical properties, and positioning offsets, among others, and require mitigations to ensure the design is printed without defects.
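The blurring can be illustrated with a deliberately simplified 1-D model. This is an assumption for illustration, not how production lithography simulators work: the mask is a row of open (1) and opaque (0) pixels, the optics are approximated by a Gaussian blur, and a hypothetical resist "prints" wherever the blurred intensity reaches a fixed threshold. All function names are hypothetical.

```python
import math


def gaussian_kernel(sigma: float, radius: int) -> list[float]:
    """Normalized 1-D Gaussian: a crude stand-in for the optics' blur."""
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(mask: list[int], kernel: list[float]) -> list[float]:
    """Convolve the mask with the kernel (symmetric, so no flip is needed).
    Light from outside the mask counts as zero."""
    r = len(kernel) // 2
    n = len(mask)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + k - r
            if 0 <= j < n:
                acc += w * mask[j]
        out.append(acc)
    return out


def printed(mask: list[int], sigma: float, threshold: float = 0.5) -> list[int]:
    """Pixels where the blurred intensity reaches the (hypothetical) resist threshold."""
    kernel = gaussian_kernel(sigma, radius=int(3 * sigma) + 1)
    return [1 if v >= threshold else 0 for v in blur(mask, kernel)]


# A wide line (10 px) and a narrow line (2 px) on the same hypothetical mask.
mask = [0] * 10 + [1] * 10 + [0] * 10 + [1] * 2 + [0] * 10
sharp = printed(mask, sigma=0.5)   # little blur
blurry = printed(mask, sigma=2.0)  # blur comparable to the narrow line's width
print("mask:  ", "".join(map(str, mask)))
print("sharp: ", "".join(map(str, sharp)))
print("blurry:", "".join(map(str, blurry)))
```

With little blur, the printed pattern matches the mask. With more blur, the wide line still prints at its drawn width, but the narrow line's peak intensity never reaches the threshold and it vanishes from the wafer, which is the kind of defect computational lithography has to correct.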

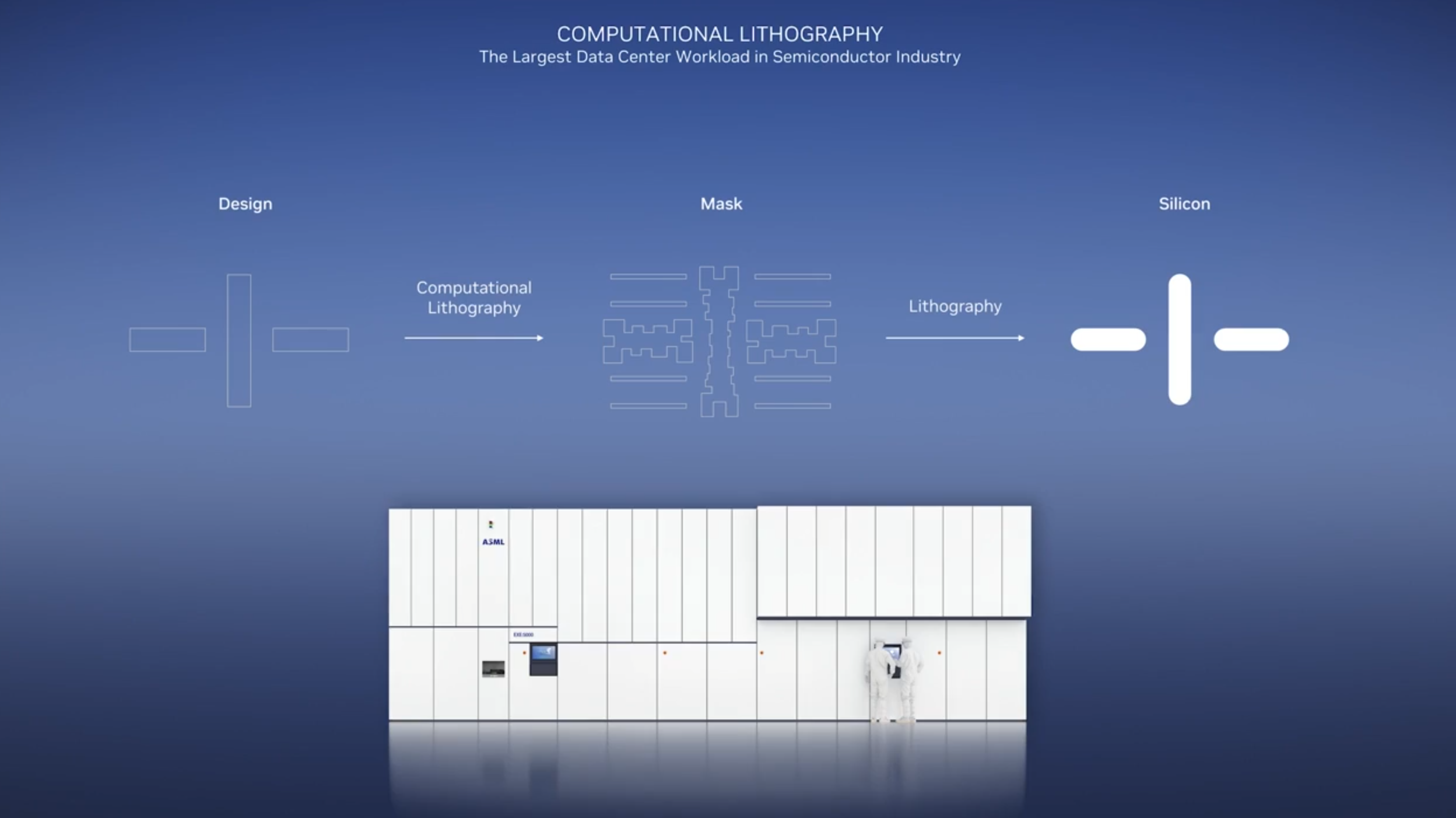

Resolution enhancement techniques (RET), paired with computational lithography, counteract the blurring by running complex mathematical operations that optimize the mask layout, pre-distorting the pattern so that the diffracted light prints the intended shapes and chipmakers can achieve higher resolutions than before. However, this task is becoming increasingly compute-intensive as features shrink further and billions more transistors are added to each design, so the computational workload grows with each new generation of chips.
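The mask-optimization idea can be illustrated with a toy 1-D optical proximity correction. This is a simplified sketch under stated assumptions, not how Synopsys, TSMC, or cuLitho implement it: a single isolated line, a Gaussian blur standing in for the optics, and a fixed resist threshold. What it does show is the model-based loop: simulate what prints, compare it with the intended shape, and adjust the drawn mask until the two match. All function names are hypothetical.

```python
import math


def intensity(x: float, width: float, sigma: float) -> float:
    """Blurred intensity at x for an isolated open line of the given width,
    centred at 0, under a 1-D Gaussian blur with standard deviation sigma."""
    s = sigma * math.sqrt(2.0)
    return 0.5 * (math.erf((x + width / 2) / s) - math.erf((x - width / 2) / s))


def printed_width(width: float, sigma: float, threshold: float = 0.5, tol: float = 1e-9) -> float:
    """Width of the region whose intensity reaches the threshold (0.0 if none).
    Intensity falls off monotonically away from the centre, so bisect on the edge."""
    if intensity(0.0, width, sigma) < threshold:
        return 0.0
    lo, hi = 0.0, width / 2 + 10 * sigma  # lo prints, hi does not
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if intensity(mid, width, sigma) >= threshold:
            lo = mid
        else:
            hi = mid
    return 2 * lo


def correct_mask_width(target: float, sigma: float, threshold: float = 0.5, tol: float = 1e-9) -> float:
    """Toy model-based correction: bisect on the drawn mask width until the
    simulated printed width matches the target (printed width grows with mask width)."""
    lo, hi = 0.0, target + 10 * sigma  # lo prints too narrow, hi prints wide enough
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if printed_width(mid, sigma, threshold) < target:
            lo = mid
        else:
            hi = mid
    return hi


sigma = 1.0   # hypothetical blur, in arbitrary length units
target = 1.5  # desired printed line width, same units
print("uncorrected print:", round(printed_width(target, sigma), 3))
fixed = correct_mask_width(target, sigma)
print("corrected mask width:", round(fixed, 3), "prints", round(printed_width(fixed, sigma), 3))
```

In this model, a 1.5-unit line drawn at its intended width prints narrower than intended, so the correction widens the drawn line until the printed result lands on target. Production OPC and ILT tackle the same kind of problem across entire chip layouts in two dimensions, with far more detailed optical and resist models, which is why the workload is so compute-hungry.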

The key to addressing the issue lies in creating ever-more sophisticated masks, but they're incredibly complex. For instance, Intel says each of its masks holds the equivalent of an astounding five petabytes of data, or ten times as much data as an IMAX movie. High-NA EUV and new techniques like Inverse Lithography Technology (ILT), which uses curvilinear masks, are expected to increase the amount of mask data processing by 10X in the coming years.
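One rough way to see why curvilinear masks swell the data volume: in a polygon-based representation, a Manhattan rectangle needs only four vertices, while a smooth curve must be approximated by many short edges. The sketch below assumes a circular contour stored as a regular polygon with a maximum allowed deviation from the true curve; real mask data formats and the fracturing done for mask writers are more involved, and the function names are hypothetical.

```python
import math


def manhattan_vertices(rects: int) -> int:
    """Axis-aligned rectangles need only four corners each."""
    return 4 * rects


def curvilinear_vertices(radius_nm: float, max_error_nm: float) -> int:
    """Vertices needed to approximate a circle of the given radius by a regular
    polygon whose edges stray no more than max_error_nm from the true curve.
    A chord spanning angle t deviates by radius * (1 - cos(t / 2)), so each
    chord's half-angle may be at most acos(1 - max_error / radius)."""
    if not 0 < max_error_nm < radius_nm:
        raise ValueError("max_error_nm must be positive and smaller than radius_nm")
    max_half_angle = math.acos(1 - max_error_nm / radius_nm)
    return max(3, math.ceil(math.pi / max_half_angle))


for tol in (1.0, 0.5, 0.1):
    n = curvilinear_vertices(50, tol)
    print(f"50 nm radius, {tol} nm tolerance: {n} vertices vs {manhattan_vertices(1)} for a rectangle")
```

For a 50 nm radius, tightening the tolerance from 1 nm to 0.1 nm raises the count from 16 to 50 vertices for a single shape, versus four for a rectangle; multiplied across a full mask, that is one way curvilinear designs inflate data volumes.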

The advent of multi-beam writers, a new class of mask creation tool, allows finer control of the mask-making process and enables much more complex designs, like curvilinear masks. However, these designs require far more computation. Nvidia's cuLitho is designed to shift the computational lithography workload to GPUs and, through the company's software libraries, reduce the time required to complete any given workload.

The cuLitho library can be integrated into computational lithography software that designs masks using ILT (curvilinear shapes), Optical Proximity Correction (OPC, which uses 'Manhattan' shapes), and Source Mask Optimization (SMO).

Now that cuLitho incorporates generative AI and has moved into production, Nvidia has shared the results of its tests: a Manhattan workload saw a 58X improvement, a curvilinear mask design received a 45X speedup, and an Nvopc workload got a 40X boost.
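Speedup figures translate directly into runtime: an N-times speedup leaves 1/N of the original time. The short calculation below applies the three reported factors to a hypothetical 24-hour CPU job; the baseline is an assumption for illustration, not a figure from Nvidia.

```python
# Reported speedups from the article; the baseline runtime is hypothetical.
REPORTED_SPEEDUPS = {"Manhattan": 58, "curvilinear": 45, "Nvopc": 40}
BASELINE_HOURS = 24.0  # assumed CPU runtime, for illustration only


def accelerated_runtime(baseline_hours: float, speedup: float) -> float:
    """A speedup of N means the job takes 1/N of the original time."""
    return baseline_hours / speedup


def time_saved_percent(speedup: float) -> float:
    """Share of the original runtime eliminated by the speedup."""
    return 100.0 * (1.0 - 1.0 / speedup)


for name, factor in REPORTED_SPEEDUPS.items():
    minutes = accelerated_runtime(BASELINE_HOURS, factor) * 60
    print(f"{name}: {factor}X -> {minutes:.0f} min instead of {BASELINE_HOURS:.0f} h "
          f"({time_saved_percent(factor):.1f}% less time)")
```

On the assumed 24-hour baseline, the three results work out to roughly 25, 32, and 36 minutes.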


TSMC is now using cuLitho in its mask-making operations, to great effect. “Our work with NVIDIA to integrate GPU-accelerated computing in the TSMC workflow has resulted in great leaps in performance, dramatic throughput improvement, shortened cycle time and reduced power requirements,” said Dr. C.C. Wei, CEO of TSMC. “We are moving NVIDIA cuLitho into production at TSMC, leveraging this computational lithography technology to drive a critical component of semiconductor scaling.”

“Synopsys has a proud history of empowering engineering teams to solve previously unsolvable challenges, and now we’re taking that to the next level by harnessing the power of AI and accelerated computing,” said Sassine Ghazi, president and CEO of Synopsys.

Synopsys and TSMC are the first to adopt cuLitho, but other EDA and chipmaking companies can also use the software in their mask-making operations, reducing the time required to fabricate new masks while saving power and cutting costs.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

Alvar "Miles" Udell: I remember reading an article on TH about...10 years ago or so which talked about how AMD used computers (now called "AI") to help design chips, and how it led to significant inefficiencies and waste. Now "AI" has advanced such that it's reducing waste and inefficiencies.